AI doesn't kill prod. You do.

I had a conversation with a customer yesterday about how we use AI coding tools.

We treat AI tools as if they're special, something to be scared of.

Guardrails! Enterprise teams won't even try the best coding tools because they're scared of what might happen.

AI coding agents won't ruin your product on their own. They're just tools. And like any tool, the person using them is responsible for what happens.

How We Use AI Today

Our whole team now uses AI coding tools. As I've written about before, Claude Code has changed how we do engineering at PromptLayer.

I'm half Claude Code, half OpenAI Codex these days. The improvement has been massive.

I love them so much that I wrote articles on how Codex works behind the scenes and how Claude Code works behind the scenes.

As a founder, I don't code every single day anymore. Context switching used to kill me. But now I can jump back into a codebase and actually get stuff done, because the coding agent picks up the context for me.

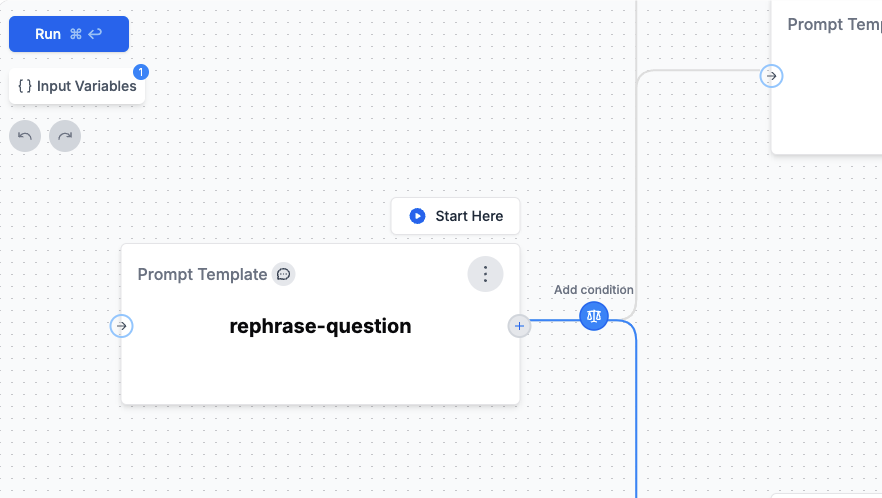

Yesterday I added a simple tooltip to our agent builder. It's the kind of small task that would have taken me at least an hour, most of it spent figuring out how our Canvas library works and how to test it. With Claude Code, I just pointed it at similar user-facing features in our code, and it figured out the rest.

We've built internal agents too. I made a GitHub Action that reads our commits and updates our docs automatically. Another engineer uses Claude Code to write our product update emails. Small fixes that used to sit in Slack for days (sorry, I mean months or years) now get done in under an hour.

The most common response in our #engineering Slack channel is now "Can you just Claude Code this?"

The Guardrails Question

When people hear this, they always ask about guardrails. What if the AI drops a production database? What if it writes malicious code? What about critical systems?

These are real concerns. One of our engineers actually had Claude Code drop their local DB tables. So sure, definitely don't connect it to prod.

But as these coding agents get better and more ubiquitous, we simply need to return to the fundamentals of engineering. Humans make mistakes too.

Interns Can Drop Databases Too

An intern can drop a database by accident. A senior engineer can push bad code to production. People make mistakes all the time.

When an intern joins, we don't say "humans are too dangerous for our codebase." We give them access, teach them best practices, and make them responsible for their work.

If their code breaks the system, that should sting. If we go down, they should be the one waking up at 2am to fix it.

Now apply that same standard to AI tools and the humans who wield them. If you use Claude Code to write something, you're responsible for that code. Just as if you'd written it yourself. Just as if an intern had written it for you.

No secret prompt in your CLAUDE.md or AGENTS.md, and no sandboxing environment, will solve this problem, just as there's no silver bullet that guarantees the intern's code is bug-free. There's just code review, unit tests, and staging environments.

Engineering Standards Are The Real Guardrails

Good engineering practices matter more than ever. We still do code reviews. We still write tests. We still check for side effects.

When I use Claude Code, my CLAUDE.md prompts are full of reminders: verify the changes, make sure it's DRY, check for side effects, don't reinvent the wheel. These aren't special AI instructions. They're just good engineering.

The difference is that bad practices become more obvious. If you're using AI as a black box to write code you don't understand, that's going to show up in review. Just like it would if you copy-pasted from Stack Overflow without understanding it.

If anything, good code structure helps AI tools work better. We built our prompt playground in a modular way, which makes it easier for Claude to understand and extend. Clear file names, small modules, obvious patterns: these help humans and AI equally.

Humans aren't good at reading a 10,000-line monolithic routes.py file, and neither is your AI.

Getting Your Team On Board

Cultural change is hard. Not everyone on our team was convinced at first.

We didn't mandate anything. Instead, we made it a matter of personal responsibility. Engineers can choose their own tools. If they want to use Claude Code, great. Zed? Great. Cursor? Great. If they want to write everything by hand, that's fine too.

But we do lead by example. When someone posts a small bug in Slack, we respond with "try Claude Code" instead of letting it sink into prioritization limbo. Our founders use it all the time and always share how well it worked (or didn't). People see the results and get curious.

The key is starting small. Don't try to rewrite your whole codebase with AI. Use it for that annoying tooltip. That test you've been putting off. That documentation update. Build confidence, and the culture will follow.

Tools, Not Magic

AI coding tools aren't magic. They're not going to replace engineers (...yet). They're not going to destroy your codebase (...unless you let them).

They're just tools. Powerful tools, but tools nonetheless.

Treat them that way. Use them responsibly. Maintain your standards. Review the output. Take responsibility for what you ship.

Because at the end of the day, you're not shipping AI-generated code. You're shipping your own code, which you chose to write with AI assistance.

This article was inspired by a conversation with NoRedInk about how different teams are adopting AI tools. Thanks to Faraaz, Mandla, and Charbel for the thoughtful discussion about guardrails and best practices.