GPT-5-Codex: First Reactions

"An AI that can code for 7 hours straight": VentureBeat's headline on GPT-5-Codex.

OpenAI released GPT-5-Codex in mid-September 2025. Early adopters report substantial productivity gains alongside some notable quirks. Here's what the community is discovering about OpenAI's latest agentic coding model, how it compares with competitors, and where you can start experimenting today.

The Leap That Surprised Developers

What sets GPT-5-Codex apart is not just incremental improvement but a change in how it approaches coding tasks. Given a high-level instruction, the model can carry out much of the development workflow on its own: planning, writing code, running tests, debugging, and even reviewing pull requests, with minimal human guidance.

The standout feature is what OpenAI calls "variable grit" — the model's ability to scale its reasoning time based on task complexity. Simple bug fixes get rapid responses, while complex refactoring jobs can see the AI working continuously for over 7 hours. VentureBeat and Latent Space documented cases where GPT-5-Codex autonomously refactored massive codebases, iterating through implementations until all tests passed.

Tool integration elevates this beyond a chatbot that writes code. GPT-5-Codex operates in a sandboxed execution environment where it can:

- Run and debug code in real-time

- Access debuggers and profilers

- Search documentation and web resources

- Perform GUI testing with screenshot analysis

- Generate transparent logs, diffs, and citations for every action
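To make the plan, code, test, debug cycle concrete, here is a minimal sketch of an "iterate until the tests pass" loop that keeps an action log. It is not OpenAI's implementation. `iterate_until_green`, `run_tests`, and `propose_patch` are hypothetical names, and the toy "model" in the usage fixes one failing test per attempt.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class AgentRun:
    """Outcome of the hypothetical loop: final status plus a transparent action log."""
    passed: bool
    attempts: int
    log: list[str] = field(default_factory=list)


def iterate_until_green(
    run_tests: Callable[[], list[str]],        # hypothetical: returns names of failing tests
    propose_patch: Callable[[list[str]], str],  # hypothetical: applies a fix, returns a summary
    max_attempts: int = 5,
) -> AgentRun:
    """Run tests, patch failures, and repeat until green or out of budget."""
    log: list[str] = []
    for attempt in range(1, max_attempts + 1):
        failures = run_tests()
        log.append(f"attempt {attempt}: {len(failures)} failing")
        if not failures:
            return AgentRun(passed=True, attempts=attempt, log=log)
        log.append(f"patch: {propose_patch(failures)}")
    return AgentRun(passed=False, attempts=max_attempts, log=log)


# Toy usage: two failing tests, each "patch" fixes the first remaining failure.
failing = {"test_parse", "test_render"}


def fake_run_tests() -> list[str]:
    return sorted(failing)


def fake_propose_patch(failures: list[str]) -> str:
    fixed = failures[0]
    failing.discard(fixed)  # pretend the patch fixed this test
    return f"fixed {fixed}"


result = iterate_until_green(fake_run_tests, fake_propose_patch)
print(result.passed, result.attempts)
for line in result.log:
    print(line)
```

The attempt budget is the important design choice. Without a cap, an agent that fixates on a tangent can churn indefinitely.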

The model was trained on real engineering workflows, and it shows. It follows project conventions, respects AGENTS.md files that document coding standards, and sticks closely to house style without verbose prompting. As a result, its output reads more like a colleague's code than a model's.
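For readers new to AGENTS.md, the idea is that plain-text instruction files live in the repository. The sketch below assumes a simple layering rule and may not match Codex's exact lookup behavior. Under that assumption, instructions are gathered from every AGENTS.md between the repository root and the working directory. Deeper files come last, so more specific guidance can override broader guidance. `collect_agents_md` and `build_instructions` are hypothetical helpers.

```python
import tempfile
from pathlib import Path


def collect_agents_md(repo_root: Path, workdir: Path) -> list[Path]:
    """Return AGENTS.md files from repo_root down to workdir, outermost first (assumed rule)."""
    repo_root = repo_root.resolve()
    workdir = workdir.resolve()
    # Walk upward from workdir to the filesystem root.
    chain = [workdir, *workdir.parents]
    if repo_root not in chain:
        raise ValueError("workdir must be inside repo_root")
    # Keep only directories up to and including repo_root, then flip to root-first order.
    dirs = chain[: chain.index(repo_root) + 1]
    return [d / "AGENTS.md" for d in reversed(dirs) if (d / "AGENTS.md").is_file()]


def build_instructions(repo_root: Path, workdir: Path) -> str:
    """Concatenate instruction files; later (more specific) files can override earlier ones."""
    parts = [p.read_text(encoding="utf-8") for p in collect_agents_md(repo_root, workdir)]
    return "\n\n".join(parts)


# Usage on a throwaway directory tree.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "services" / "api").mkdir(parents=True)
    (root / "AGENTS.md").write_text("Use 4-space indentation.", encoding="utf-8")
    (root / "services" / "api" / "AGENTS.md").write_text("Run pytest before committing.", encoding="utf-8")
    print(build_instructions(root, root / "services" / "api"))
```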

OpenAI's published benchmarks back this up. GPT-5-Codex scores about 74.5% on SWE-bench Verified, roughly in line with base GPT-5 but with specialized polish. On OpenAI's code-refactoring evaluation, it scores 51.3% versus GPT-5's 33.9%. BleepingComputer called that 17.4-point improvement "a clear sign of domain expertise."
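Benchmark gaps are easy to misstate, so here is the arithmetic behind the refactoring comparison as a small Python helper. It reports both the absolute difference in percentage points and the relative gain over GPT-5's baseline. The function name is ours; the two scores are the ones quoted above.

```python
def benchmark_gain(new_pct: float, old_pct: float) -> tuple[float, float]:
    """Return (absolute gain in percentage points, relative gain in percent)."""
    points = new_pct - old_pct
    relative = points / old_pct * 100
    return round(points, 1), round(relative, 1)


# Refactoring scores: GPT-5-Codex 51.3%, GPT-5 33.9%.
print(benchmark_gain(51.3, 33.9))  # (17.4, 51.3)
```

The gap works out to 17.4 points, which is roughly a 51% relative improvement over GPT-5 on that evaluation.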

Early Wins People Are Posting About

The most enthusiastic reactions center on code reviews. OpenAI teams now rely on GPT-5-Codex for the "vast majority" of their code reviews, with the AI catching critical issues human reviewers miss. Greg Brockman described it as a "safety net" — when the system went down temporarily, engineers felt exposed without their AI reviewer watching for bugs.

Real companies are seeing production saves:

- Duolingo uses it for backend code checks, catching compatibility issues other tools missed

- Ramp credits GPT-5-Codex with catching a bug mid-deployment that slipped past other automated systems

- Virgin Atlantic's data team leaves one-line PR comments and gets instant, accurate code diffs in response

- Cisco Meraki engineers delegate entire refactoring projects, freeing themselves for higher-priority work

The developer experience improvements are tangible. The model's diff-first explanations make changes easy to review. Cloud task completion times dropped 90% thanks to infrastructure improvements. The VS Code extension hit ~800,000 installs within weeks of launch, and iOS support brings coding assistance to mobile devices.
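"Diff-first" means each change is presented as a unified diff, the same format `git diff` prints. If you want to preview an AI-proposed edit yourself before accepting it, Python's standard-library `difflib.unified_diff` produces that format. The file name and the edit below are made up for illustration.

```python
import difflib

before = [
    "def total(items):\n",
    "    return sum(i.price for i in items)\n",
]
after = [
    "def total(items):\n",
    "    # Skip items without a price instead of crashing.\n",
    "    return sum(i.price for i in items if i.price is not None)\n",
]

# unified_diff yields header lines, hunk markers, and +/- lines, like `git diff`.
diff = list(difflib.unified_diff(before, after, fromfile="a/cart.py", tofile="b/cart.py"))
print("".join(diff), end="")
```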

Anecdotes are flooding Reddit, X, and developer forums, and they are sometimes the most useful signal of all.

Rough Edges and Gotchas

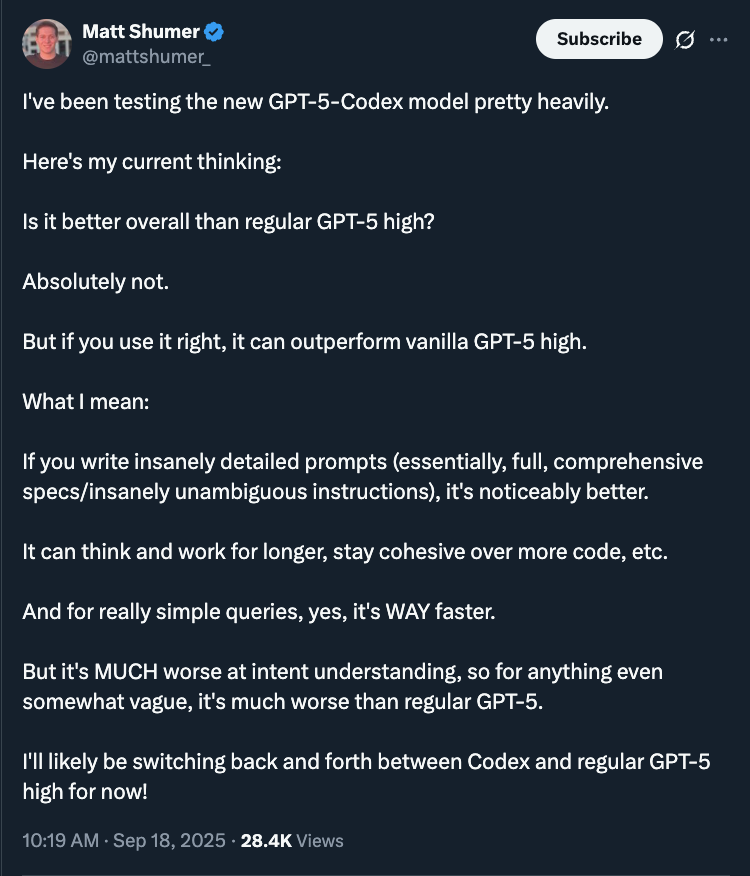

Reality check: GPT-5-Codex can be "brilliant one moment, mind-bogglingly stupid the next," as one Reddit user put it. The model sometimes fixates on edge cases or drifts into unnecessary complexity, churning away on tangents if not properly guided.

Experienced users have developed tactics to manage these quirks:

- Reset sessions when the conversation loses coherence

- Break complex work into smaller, focused tasks

- Guide it like a junior developer — clear objectives, regular check-ins

The throughput versus latency trade-off is real. While GPT-5-Codex excels at thorough analysis of complex problems, some developers find Claude faster for quick, straightforward prompts. It's the difference between a methodical engineer and a rapid prototyper.

The golden rule remains: keep humans in the loop. Always review diffs, gate production deployments, and use approval modes for sensitive operations. GPT-5-Codex amplifies developer capabilities but doesn't replace developer judgment.

How It Stacks Up (vs Claude Code and Copilot)

Community sentiment is shifting rapidly. "They better make a big move or this will kill Claude Code," one Hacker News commenter warned Anthropic. Many developers report GPT-5-Codex handles long, complex tasks with a thoroughness Claude can't match.

We've already written deep dives on the OpenAI Codex CLI and on how Claude Code works behind the scenes.

The trade-offs are nuanced:

- GPT-5-Codex: Superior tool integration, persistence on hard problems, better code review quality

- Claude Code: Perceived as snappier for quick tasks, large context window, simpler interface

Both tools are evolving rapidly. Still, GPT-5-Codex's agentic execution has impressed many early testers: it actually runs and tests the code it writes.

OpenAI positions Codex as complementary to GitHub Copilot rather than competitive. Copilot excels at line-by-line autocomplete during active coding, while Codex handles task-level delegation. Many developers now use both: Copilot for typing assistance, Codex for autonomous feature development.

Try It Yourself: Where First Testers Are Using It

Codex CLI offers the most control for local development. This open-source tool integrates directly with your terminal and workspace, featuring:

- Auto-generated to-do plans for complex tasks

- Three approval modes (read-only, auto with constraints, full access)

- Adjustable "reasoning effort" settings

- Support for attaching images and context files
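The three approval modes listed above are easiest to understand as a policy that answers "allow or ask?" for each proposed action. This toy model is not Codex CLI's code. It assumes each mode behaves as its name suggests:

- Read-only lets the agent read but asks before any write or command.
- Auto works freely inside the workspace but asks before touching anything outside it or using the network.
- Full access never asks.

`ApprovalMode` and `decide` are hypothetical names.

```python
import posixpath
from enum import Enum
from pathlib import PurePosixPath


class ApprovalMode(Enum):
    """Hypothetical model of the three approval modes."""
    READ_ONLY = "read-only"
    AUTO = "auto"
    FULL_ACCESS = "full-access"


def _inside(path: str, workspace: str) -> bool:
    # Normalize first so "project/../.bashrc" cannot slip past the workspace check.
    return PurePosixPath(posixpath.normpath(path)).is_relative_to(
        PurePosixPath(posixpath.normpath(workspace))
    )


def decide(mode: ApprovalMode, action: str, path: str, workspace: str,
           uses_network: bool = False) -> str:
    """Return "allow" or "ask" for a proposed action under this toy policy."""
    if mode is ApprovalMode.FULL_ACCESS:
        return "allow"
    if action == "read" and not uses_network:
        return "allow"  # local reads are always permitted in this sketch
    if mode is ApprovalMode.READ_ONLY:
        return "ask"  # any write or command needs a human
    # AUTO: edits and commands inside the workspace run freely; anything else asks.
    if _inside(path, workspace) and not uses_network:
        return "allow"
    return "ask"


ws = "/home/dev/project"
print(decide(ApprovalMode.AUTO, "write", "/home/dev/project/src/app.py", ws))       # allow
print(decide(ApprovalMode.AUTO, "write", "/home/dev/project/../.bashrc", ws))       # ask
print(decide(ApprovalMode.READ_ONLY, "write", "/home/dev/project/src/app.py", ws))  # ask
```

Normalizing paths before the containment check is the detail that matters most here, since `..` segments are a classic way to escape a sandbox.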

IDE extensions bring GPT-5-Codex into VS Code, Cursor, and other VS Code-based editors. The extension supports project-aware edits and smooth hand-offs between local and cloud execution. Its rapid uptake (roughly 800k installs within weeks) suggests developers prefer working with AI directly in their coding environment.

Codex Cloud (via ChatGPT) provides a web-based option with persistent workspaces and ephemeral environments. The cloud version can spin up containers, run headless browsers, and handle resource-intensive tasks. Mobile access through the iOS app lets you work with Codex on the go.

Access follows OpenAI's tiered approach:

- ChatGPT Plus ($20/month): Limited Codex credits for occasional use

- ChatGPT Pro ($200/month): Substantially higher limits for daily development

- Business/Enterprise: Scalable team access with shared usage pools

At the time of writing, the model isn't available through the standalone API, though OpenAI says API access is coming. Rollout has been staggered, so if you don't see it immediately, check back in a few days. Pro tip: select "high" reasoning effort for challenging tasks to get the model's full capabilities.
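One way to apply that tip consistently is to decide the reasoning effort from a few traits of the task before delegating it. The heuristic below is purely illustrative. `Task` and `pick_reasoning_effort` are hypothetical, the thresholds are arbitrary, and the "low"/"medium"/"high" labels are assumed to correspond to the effort levels your Codex client exposes.

```python
from dataclasses import dataclass


@dataclass
class Task:
    """Hypothetical description of a coding task you are about to delegate."""
    files_touched: int
    is_refactor: bool = False
    has_failing_tests: bool = False


def pick_reasoning_effort(task: Task) -> str:
    """Toy heuristic: spend more reasoning on broad or multi-file work."""
    score = task.files_touched
    if task.is_refactor:
        score += 5  # refactors tend to ripple across the codebase
    if task.has_failing_tests:
        score += 2  # debugging usually needs extra iterations
    if score >= 8:
        return "high"
    if score >= 3:
        return "medium"
    return "low"


print(pick_reasoning_effort(Task(files_touched=1)))                         # low
print(pick_reasoning_effort(Task(files_touched=2, has_failing_tests=True)))  # medium
print(pick_reasoning_effort(Task(files_touched=4, is_refactor=True)))        # high
```

The point is not these particular weights. It is to reserve the slower, more thorough mode for work that actually needs it, and to keep quick fixes fast.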

Enthusiasm and Caution

The developer community's pulse on GPT-5-Codex: strong enthusiasm tempered by pragmatic caution. The autonomous capabilities and code review quality represent genuine breakthroughs, while the occasional lapses remind us this is still AI requiring human oversight.

Next steps for curious developers:

- Start with code reviews and refactoring tasks to build confidence

- Iterate on prompts and constraints — treat it like onboarding a new team member

- Evaluate against your existing tools with real projects

- Watch for API availability announcements for deeper integration

The most successful early adopters share one trait: they stopped asking "can AI do this?" and started asking "how do I help AI do this better?" That mental shift, from tool user to AI coach, is where the biggest gains hide.