The Prompt Engineering Triangle – the Future of GenAI

In his landmark paper 'A Mathematical Theory of Communication,' Claude Shannon laid the foundation of information theory.

In this seminal work, Shannon introduced information entropy, a measure of how much information a signal carries. He then proved that there is a theoretical maximum rate at which information can be transmitted through a noisy channel with arbitrarily small error, known as channel capacity. In other words, there is a limit on how much information can be packed into a communication channel.
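To make these two quantities concrete, here is a minimal Python sketch. It computes entropy as H = -Σ p·log2(p) and, as one standard textbook case, the capacity of a binary symmetric channel, C = 1 - H(p), where p is the probability that a bit is flipped. The function names are our own and are only illustrative.

```python
import math

def shannon_entropy(probs):
    """Entropy in bits of a discrete distribution given as a list of probabilities."""
    # Terms with p == 0 contribute nothing, so they are skipped.
    return -sum(p * math.log2(p) for p in probs if p > 0)

def bsc_capacity(flip_prob):
    """Capacity, in bits per channel use, of a binary symmetric channel."""
    return 1.0 - shannon_entropy([flip_prob, 1.0 - flip_prob])

fair_coin_bits = shannon_entropy([0.5, 0.5])  # a fair coin carries 1 bit per toss
noiseless = bsc_capacity(0.0)                 # no flips: 1 bit per use
pure_noise = bsc_capacity(0.5)                # flips half the time: nothing gets through
```

A channel that flips bits half the time has zero capacity: no encoding, however clever, can push information through it.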

Shannon taught us that if I have an idea I want to communicate to you, there are limits to how quickly I can do so. The universe does not let me transfer that information to you instantaneously.

Why Prompt Engineering Isn't Going Away

A question we often hear at PromptLayer is whether prompt engineering is a legitimate field or whether it will become obsolete as models improve. Our answer is always the same: fundamentally, there will always be some business logic that product owners have to transfer to the LLM. Even if the LLM is a universal learner that knows everything, it cannot know your unique vision as a product owner. What one company defines as a good product design, another company may reject.

We believe that while the nature of prompt engineering may change, there will always be business logic that must be imparted to the LLM. Further, we believe that business logic should live outside of application code. Business requirements belong on a platform where key stakeholders can interact with them directly, rather than being buried in Python or YAML files.

The Future of Prompt Engineering

Prompt engineering will remain essential for translating business needs into LLM behaviors. The question now is: how will the field evolve?

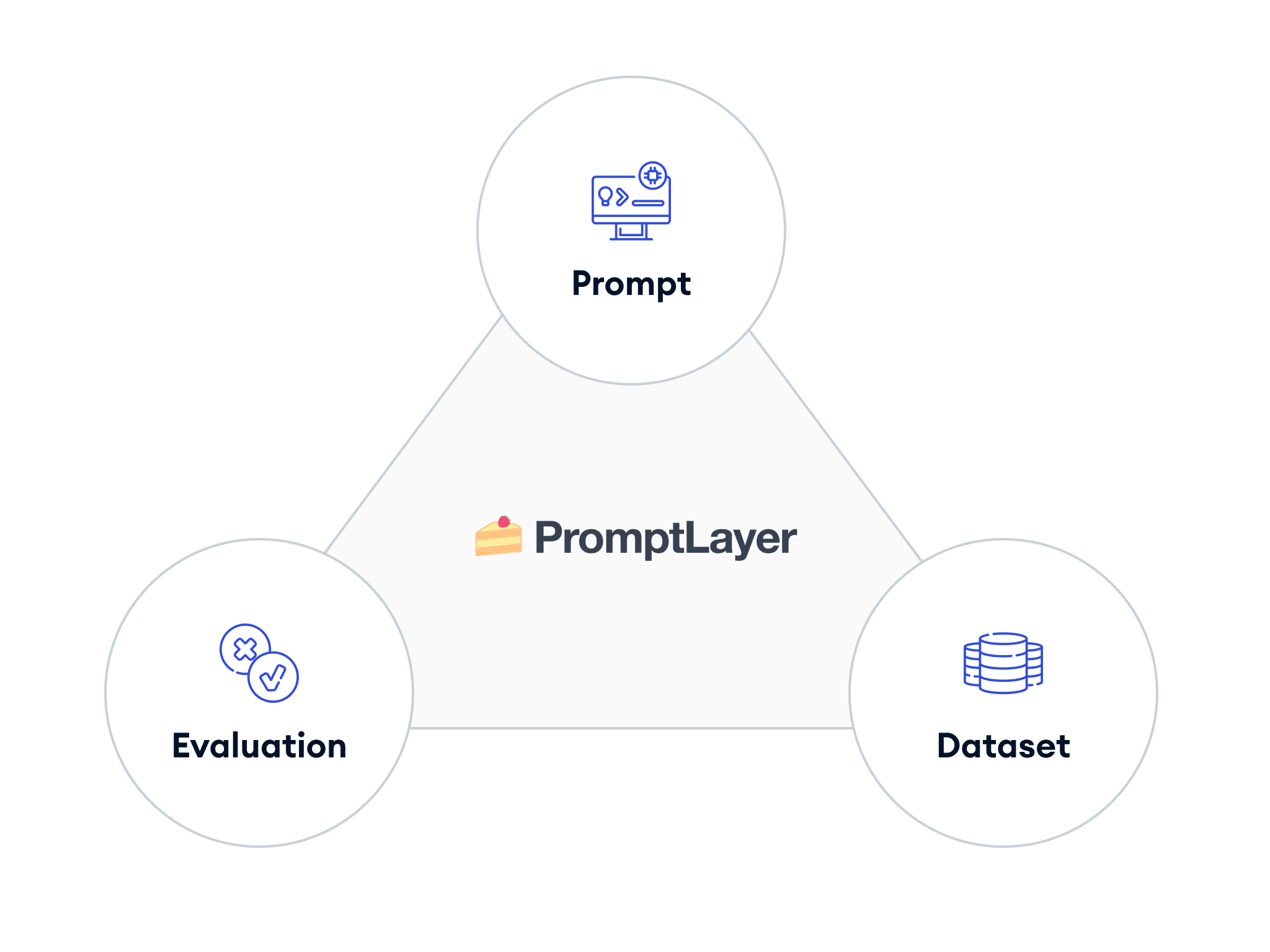

At PromptLayer, we believe the future of prompt engineering can be understood through what we call the Prompt Engineering Triangle. This framework consists of three core elements:

- Prompt Templates

- Datasets

- Evaluations

The core principle of this framework is that defining any two elements enables derivation of the third:

- Given a dataset and evaluation metrics, tools like DSPy can search for prompt templates that score well on those metrics

- Given prompt templates and evaluation metrics, synthetic data generation creates comprehensive test datasets

- Given prompt templates and datasets, appropriate evaluation metrics emerge to measure system performance
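As a rough illustration of the first path (dataset plus evaluation yields a prompt template), here is a minimal Python sketch that scores candidate templates against a dataset and keeps the best one. Everything in it is hypothetical: `toy_model` is a stand-in for a real LLM call, `exact_match` is a deliberately simple metric, and the selection loop is a toy version of the idea, not how DSPy or PromptLayer works.

```python
from typing import Callable

Dataset = list[dict[str, str]]  # each row: {"input": ..., "expected": ...}

def toy_model(prompt: str) -> str:
    """Hypothetical stand-in for an LLM: uppercases the text if the instruction asks for it."""
    instruction, _, text = prompt.partition("\n")
    return text.upper() if "uppercase" in instruction.lower() else text

def exact_match(output: str, expected: str) -> float:
    """Evaluation metric: 1.0 when the output equals the expected answer, else 0.0."""
    return 1.0 if output == expected else 0.0

def score_template(template: str, dataset: Dataset,
                   evaluate: Callable[[str, str], float],
                   model: Callable[[str], str]) -> float:
    """Average metric score of one template over the whole dataset."""
    scores = [evaluate(model(template.format(input=row["input"])), row["expected"])
              for row in dataset]
    return sum(scores) / len(scores)

def best_template(candidates: list[str], dataset: Dataset,
                  evaluate: Callable[[str, str], float],
                  model: Callable[[str], str]) -> str:
    """Pick the candidate template with the highest average score."""
    return max(candidates, key=lambda t: score_template(t, dataset, evaluate, model))

dataset = [{"input": "hello", "expected": "HELLO"},
           {"input": "ok", "expected": "OK"}]
candidates = ["Repeat the text.\n{input}",
              "Convert the text to uppercase.\n{input}"]

winner = best_template(candidates, dataset, exact_match, toy_model)
print(winner.splitlines()[0])
```

The dataset and the metric are fixed inputs here, and the template is the output. Real optimizers generate and refine candidates rather than choosing from a fixed list, but the dependency between the three corners is the same.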

These three elements work together, creating a foundation for building LLM applications. Today's LLM architectures typically flow from prompt templates to datasets to evaluations, leaving other paths in this triangle open for innovation.

Whether teams focus on prompts, datasets, or evaluations, the core need remains the same: a platform to manage business requirements for LLM applications. At PromptLayer, we're partnering with our customers to build the future of LLM engineering.

About PromptLayer

PromptLayer is a prompt management system that helps you iterate on prompts faster and shortens the development cycle. Use the prompt CMS to update a prompt, run evaluations, and deploy it to production in minutes. 🍰