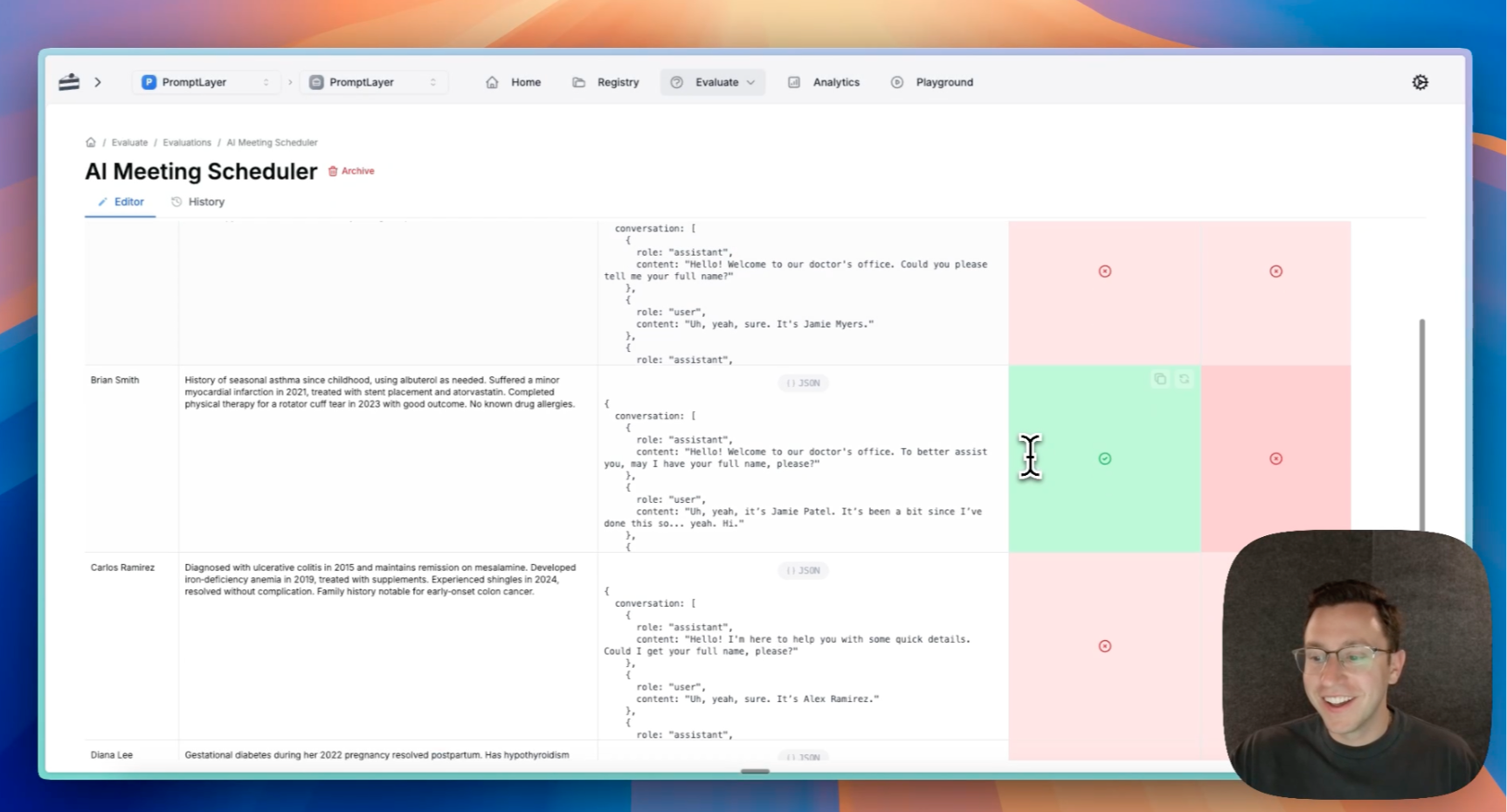

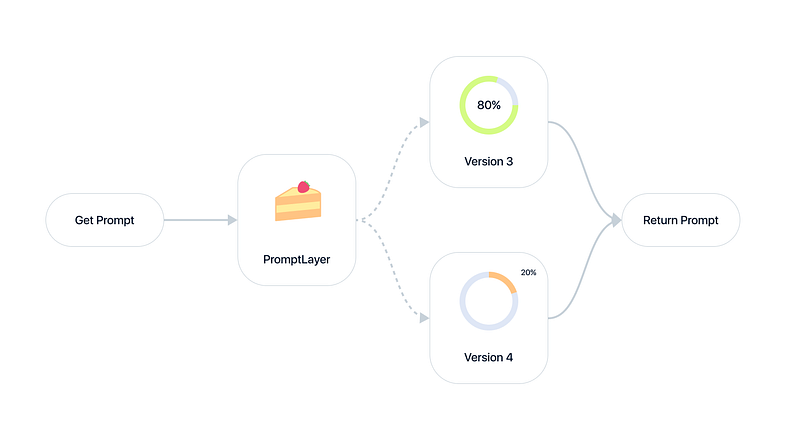

AI Sales Engineering: How We Built Hyper-Personalized Email Campaigns at PromptLayer

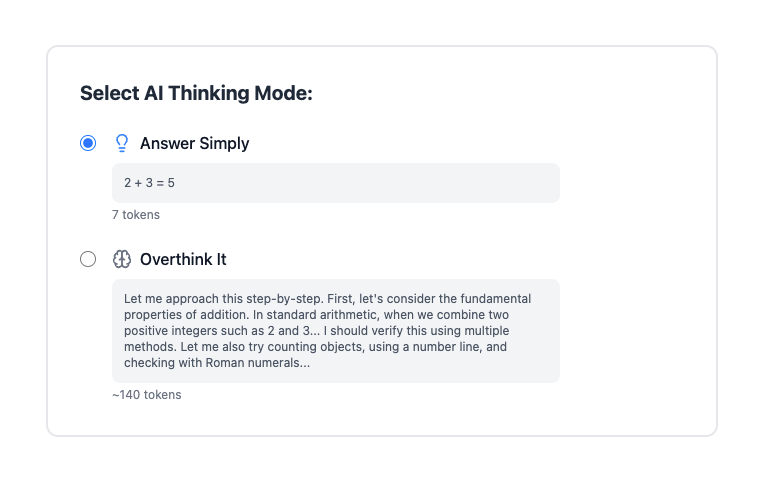

TL;DR

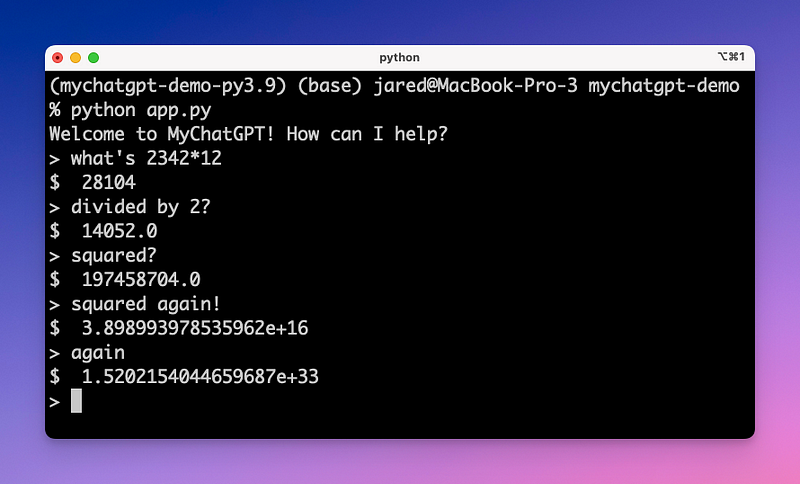

Our AI sales system automates hyper-personalized email campaigns by researching leads, scoring their fit, drafting tailored four-email sequences, and integrating seamlessly with HubSpot. With this approach, we achieve:

* ~7% positive reply rate, resulting in way more meetings than we can handle

* 50–60% email open rates
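
The four stages described above (research, fit scoring, drafting a four-email sequence, and syncing to the CRM) can be sketched as a simple pipeline. This is a minimal illustration under stated assumptions, not PromptLayer's actual implementation. Every function name, the `uses_llms` fit signal, the 0.5 score threshold, and the in-memory list standing in for HubSpot are hypothetical placeholders. In a real system the research, scoring, and drafting steps would presumably call an LLM, and the sync step would call the HubSpot API.

```python
from dataclasses import dataclass, field


@dataclass
class Lead:
    # Hypothetical lead record; field names are illustrative, not a real schema.
    name: str
    company: str
    uses_llms: bool = False  # hypothetical fit signal
    research_notes: str = ""
    fit_score: float = 0.0
    emails: list[str] = field(default_factory=list)


def research(lead: Lead) -> None:
    # Placeholder for the research step (e.g. an LLM summarizing public info).
    lead.research_notes = f"{lead.company}: uses LLMs in production={lead.uses_llms}"


def score_fit(lead: Lead) -> float:
    # Placeholder fit score in [0, 1]; a real system might ask an LLM to grade fit.
    return 0.9 if lead.uses_llms else 0.2


def draft_sequence(lead: Lead, length: int = 4) -> list[str]:
    # One draft per step of the sequence; a real system would prompt an LLM
    # with the research notes as context.
    return [
        f"Email {step + 1}/{length} to {lead.name} ({lead.research_notes})"
        for step in range(length)
    ]


def push_to_crm(lead: Lead, crm: list[Lead]) -> None:
    # Stand-in for a HubSpot sync: here the "CRM" is just an in-memory list.
    crm.append(lead)


def run_pipeline(leads: list[Lead], min_score: float = 0.5) -> list[Lead]:
    crm: list[Lead] = []
    for lead in leads:
        research(lead)
        lead.fit_score = score_fit(lead)
        if lead.fit_score < min_score:
            continue  # skip poor-fit leads before spending effort on drafting
        lead.emails = draft_sequence(lead)
        push_to_crm(lead, crm)
    return crm


if __name__ == "__main__":
    leads = [Lead("Ada", "Acme", uses_llms=True), Lead("Bob", "Bolt")]
    for lead in run_pipeline(leads):
        print(lead.name, lead.fit_score, len(lead.emails))
```

The ordering is the main design point in this sketch: scoring happens before drafting, so poor-fit leads are dropped before the more expensive drafting step runs.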

The key