Mastering AI Prompt Mini-Frameworks

Prompt engineering has evolved from a niche "hot job" into a core competency, as basic as knowing how to use a spreadsheet. What once required specialized knowledge has become as fundamental to modern work as email or document editing.

Mini-frameworks are structured logic blocks that break prompts into components like Role, Task, and Context. Think of them as recipes for AI interaction; instead of throwing random ingredients at your AI assistant and hoping for a decent meal, you follow a proven formula that consistently delivers the results you need.

These frameworks transform free-form requests into precise, repeatable instructions that eliminate ambiguity and align AI behavior with user intent. Rather than asking "Write something about marketing," a framework guides you to specify: "As a digital marketing expert (Role), create a social media strategy (Task) for a sustainable fashion startup targeting Gen Z consumers (Context)."
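As a minimal sketch of that idea, the three components can be treated as required arguments to a small helper. The function name `build_prompt` and the sentence template are assumptions made for illustration, not part of any standard framework definition.

```python
def build_prompt(role: str, task: str, context: str) -> str:
    """Assemble a Role/Task/Context prompt as one instruction sentence."""
    parts = {"role": role, "task": task, "context": context}
    # Refuse to build a prompt when any component is blank.
    missing = [name for name, value in parts.items() if not value.strip()]
    if missing:
        raise ValueError(f"missing components: {', '.join(missing)}")
    return f"As {role.strip()}, {task.strip()} for {context.strip()}."


prompt = build_prompt(
    role="a digital marketing expert",
    task="create a social media strategy",
    context="a sustainable fashion startup targeting Gen Z consumers",
)
print(prompt)
```

Because every component is a required argument, a vague request such as "Write something about marketing" cannot be expressed without first deciding on a role and a context.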

The Birth of Prompting

The release of OpenAI's GPT-3 marked a watershed moment in AI history. With its 175 billion parameters, GPT-3 demonstrated that carefully crafted language could "program" massive models without traditional coding. This realization sparked the birth of prompt engineering as a discipline.

Suddenly, developers and researchers discovered that the difference between a mediocre response and a brilliant one often came down to how you asked the question. The AI community began documenting patterns: certain phrasings consistently produced better results than others.

Rise of Reasoning (2022)

The next evolutionary leap came with the introduction of Chain-of-Thought and ReAct prompting. Researchers found that prompting a model to spell out its intermediate reasoning, in some cases by simply adding "Let's think step by step," markedly improved performance on complex tasks. This breakthrough allowed models to handle multi-step logic and tool usage, transforming them from simple question-answering machines into reasoning engines.

ReAct prompting took this further by combining reasoning with action: the AI could decide when to use external tools, fetch information, or perform calculations mid-response. This laid the groundwork for today's AI agents that can autonomously navigate complex workflows.
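The reason-then-act loop can be illustrated with a toy sketch. Everything here is an assumption made for the example: the `Action: tool[argument]` text format, the `calculator` tool, and `scripted_model`, which stands in for a real language model so the code runs offline. It is not the published ReAct implementation.

```python
import re

ACTION = re.compile(r"Action: (\w+)\[(.*)\]")


def calculator(expression: str) -> str:
    """Hypothetical tool: evaluate 'a op b' for non-negative integers."""
    match = re.fullmatch(r"\s*(\d+)\s*([+\-*])\s*(\d+)\s*", expression)
    if match is None:
        return "error: unsupported expression"
    left, op, right = int(match[1]), match[2], int(match[3])
    return str({"+": left + right, "-": left - right, "*": left * right}[op])


def react(model, tools, question, max_steps=5):
    """Alternate model steps with tool calls until a final answer appears."""
    transcript = f"Question: {question}"
    for _ in range(max_steps):
        step = model(transcript)
        transcript += "\n" + step
        if step.startswith("Final Answer:"):
            return step[len("Final Answer:"):].strip()
        action = ACTION.search(step)
        if action:
            name, argument = action.groups()
            tool = tools.get(name)
            observation = tool(argument) if tool else f"error: unknown tool {name}"
            # Feed the tool result back so the next step can reason over it.
            transcript += f"\nObservation: {observation}"
    raise RuntimeError("no final answer within max_steps")


def scripted_model(transcript: str) -> str:
    """Stand-in for an LLM: act first, then answer from the observation."""
    seen = re.search(r"Observation: (\S+)$", transcript)
    if seen:
        return f"Final Answer: {seen[1]}"
    return "Thought: I should compute this.\nAction: calculator[17 * 23]"


answer = react(scripted_model, {"calculator": calculator}, "What is 17 * 23?")
print(answer)
```

The key design point is that the transcript grows with each observation, so the model's next step is conditioned on what the tool returned.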

The Acronym Era (2023)

As prompt engineering matured, the community recognized a critical challenge: how could non-experts remember all the essential elements of an effective prompt? The answer came in the form of mnemonic frameworks: TAG, STAR, RISEN, and dozens more flooded the ecosystem.

These acronyms served as mental scaffolding, ensuring users didn't forget crucial components like context or format specifications. What started as informal community tips evolved into structured methodologies taught in workshops and documented in company playbooks.

Core General-Purpose Frameworks

RISEN (Role, Input, Steps, Expectation, Narrowing)

RISEN suits complex tasks that call for a methodical approach and strict constraints. Each component serves a specific purpose:

- Role: Define who the AI should embody

- Input: Provide the primary information or question

- Steps: Outline the process to follow

- Expectation: State the desired output

- Narrowing: Apply constraints or focus areas

For instance, a medical diagnosis query might look like: "You are an experienced diagnostician (Role). Given these symptoms: persistent cough, fatigue, and night sweats (Input). First list possible conditions, then evaluate likelihood based on symptom patterns (Steps). Provide your top three diagnoses with confidence levels (Expectation). Focus only on respiratory and infectious conditions (Narrowing)."
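One way to keep the five parts from drifting apart is to hold them in a small data structure and render the prompt from it. The sketch below is illustrative only: the class name `RisenPrompt`, the field name `input_text`, and the labelled-line output layout are assumptions, not a prescribed RISEN format.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class RisenPrompt:
    role: str
    input_text: str
    steps: Sequence[str]
    expectation: str
    narrowing: str

    def __post_init__(self) -> None:
        # A RISEN prompt without steps loses its methodical structure.
        if not self.steps:
            raise ValueError("steps must contain at least one entry")

    def render(self) -> str:
        """Render the five components as labelled lines with numbered steps."""
        numbered = "\n".join(f"{i}. {step}" for i, step in enumerate(self.steps, 1))
        return (
            f"Role: {self.role}\n"
            f"Input: {self.input_text}\n"
            f"Steps:\n{numbered}\n"
            f"Expectation: {self.expectation}\n"
            f"Narrowing: {self.narrowing}"
        )


risen = RisenPrompt(
    role="You are an experienced diagnostician.",
    input_text="Persistent cough, fatigue, and night sweats.",
    steps=(
        "List possible conditions.",
        "Evaluate likelihood based on symptom patterns.",
    ),
    expectation="Provide your top three diagnoses with confidence levels.",
    narrowing="Focus only on respiratory and infectious conditions.",
)
print(risen.render())
```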

RTF (Request, Task, Format)

RTF offers a streamlined template ideal for ensuring outputs meet specific stylistic needs. Its simplicity makes it perfect for quick, structured requests:

- Request: What you're asking for

- Task: The specific action to perform

- Format: How the output should be structured

Example: "I need a competitive analysis (Request) comparing our product to three main competitors (Task) presented as a comparison table with pros/cons columns (Format)."

PGTC (Persona, Goal, Task, Context)

This four-part frame excels in conversational agents and educational scenarios, allowing you to set the scene quickly:

- Persona: The character or expertise level

- Goal: The overarching objective

- Task: The immediate action

- Context: Relevant background information

A tutoring scenario might use: "You are a patient algebra teacher (Persona) helping a struggling student build confidence (Goal). Explain quadratic equations (Task) to a 9th grader who has difficulty with basic multiplication (Context)."
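Since RTF and PGTC differ only in which fields they require, both can be described by one table of field names. This is a rough sketch under that assumption; the `FRAMEWORKS` table and the `render` helper are hypothetical names introduced here.

```python
FRAMEWORKS = {
    "RTF": ("Request", "Task", "Format"),
    "PGTC": ("Persona", "Goal", "Task", "Context"),
}


def render(framework: str, **values: str) -> str:
    """Render a prompt for the named framework, one labelled line per field."""
    fields = FRAMEWORKS[framework]
    given = {key.lower(): value for key, value in values.items()}
    # Every field the framework names must be present and non-blank.
    missing = [f for f in fields if not given.get(f.lower(), "").strip()]
    if missing:
        raise ValueError(f"{framework} is missing: {', '.join(missing)}")
    return "\n".join(f"{f}: {given[f.lower()].strip()}" for f in fields)


tutoring = render(
    "PGTC",
    persona="You are a patient algebra teacher.",
    goal="Help a struggling student build confidence.",
    task="Explain quadratic equations.",
    context="The student is a 9th grader who has difficulty with basic multiplication.",
)
print(tutoring)
```

Adding another acronym then means adding one entry to the table rather than writing a new template.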

The Checklist Effect

These acronyms serve as mental guardrails to ensure context and format are never omitted. Just as pilots use pre-flight checklists to avoid catastrophic oversights, prompt frameworks prevent common errors like:

- Forgetting to specify output format, resulting in walls of unstructured text

- Omitting context, leading to generic or irrelevant responses

- Failing to set appropriate expertise levels, causing overly technical or simplistic answers
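The checklist idea can be approximated with a crude prompt linter. The keyword patterns below are assumptions chosen for illustration and will produce both false positives and false negatives; a real checklist would need patterns tuned to your own prompts.

```python
import re

# Hypothetical keyword heuristics, one per common omission.
CHECKS = {
    "output format": r"\b(table|list|bullet|json|format|paragraph|outline)\b",
    "context": r"\b(for|targeting|audience|because|given|background)\b",
    "expertise level": r"\b(you are|as an?|expert|beginner|grader|level)\b",
}


def lint_prompt(prompt: str) -> list:
    """Return the names of checklist items the prompt appears to omit."""
    text = prompt.lower()
    return [name for name, pattern in CHECKS.items() if not re.search(pattern, text)]


vague = lint_prompt("Write something about marketing")
structured = lint_prompt(
    "As a digital marketing expert, create a social media strategy "
    "for a sustainable fashion startup targeting Gen Z consumers."
)
print(vague)
print(structured)
```

In this sketch the vague request is flagged on all three items, while the structured one is flagged only for its missing output format.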

The 5C Framework

The 5C Framework acknowledges that prompting is a loop, not a one-off interaction:

1. Clarity: Ensure your initial request is unambiguous

2. Contextualization: Provide necessary background

3. Command: Give specific instructions

4. Chaining: Break complex tasks into sub-prompts

5. Continuous Refinement: Iterate based on responses

This framework recognizes that even experienced prompt engineers rarely nail the perfect prompt on the first try. It encourages viewing AI interaction as a collaborative dialogue rather than a command-and-response transaction.
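Steps 4 and 5 are the ones that turn prompting into a loop, and they can be sketched as two small functions. The names `run_chain` and `refine`, the `{previous}` placeholder, and the stub models are all assumptions for this example; a real setup would replace the stubs with calls to an actual model.

```python
from typing import Callable, List, Tuple

Model = Callable[[str], str]


def run_chain(model: Model, sub_prompts: List[str]) -> str:
    """Chaining: each sub-prompt may embed the previous output via {previous}."""
    previous = ""
    for template in sub_prompts:
        previous = model(template.format(previous=previous))
    return previous


def refine(
    model: Model,
    prompt: str,
    accept: Callable[[str], bool],
    revise: Callable[[str, str], str],
    max_rounds: int = 3,
) -> Tuple[str, str]:
    """Continuous refinement: revise the prompt until the response is accepted."""
    response = model(prompt)
    for _ in range(max_rounds):
        if accept(response):
            break
        prompt = revise(prompt, response)
        response = model(prompt)
    return prompt, response


def shouting_model(prompt: str) -> str:
    """Stub model that upper-cases its prompt."""
    return prompt.upper()


def format_model(prompt: str) -> str:
    """Stub model that returns a table only when the prompt asks for one."""
    return "table" if "as a table" in prompt.lower() else "prose"


chained = run_chain(shouting_model, ["summarize: notes", "translate: {previous}"])
final_prompt, final_response = refine(
    format_model,
    "Compare the three plans.",
    accept=lambda response: response == "table",
    revise=lambda prompt, response: prompt + " Present it as a table.",
)
print(chained)
print(final_prompt, "->", final_response)
```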

While frameworks provide valuable structure, over-reliance on templates can stifle AI creativity. Like training wheels on a bicycle, they're helpful for learning but can become limiting once you've developed intuition.

Some problems require non-linear thinking that frameworks inadvertently discourage. A creative writing prompt forced into RISEN format might produce technically correct but soulless prose. The BAB (Before-After-Bridge) framework assumes every problem follows a simple progression, but real-world challenges often spiral, branch, or require iterative exploration.

Role rigidity presents another challenge: locking the AI into a historian role might prevent it from offering valuable scientific context that could enrich the response.

Automation & Meta-Prompting

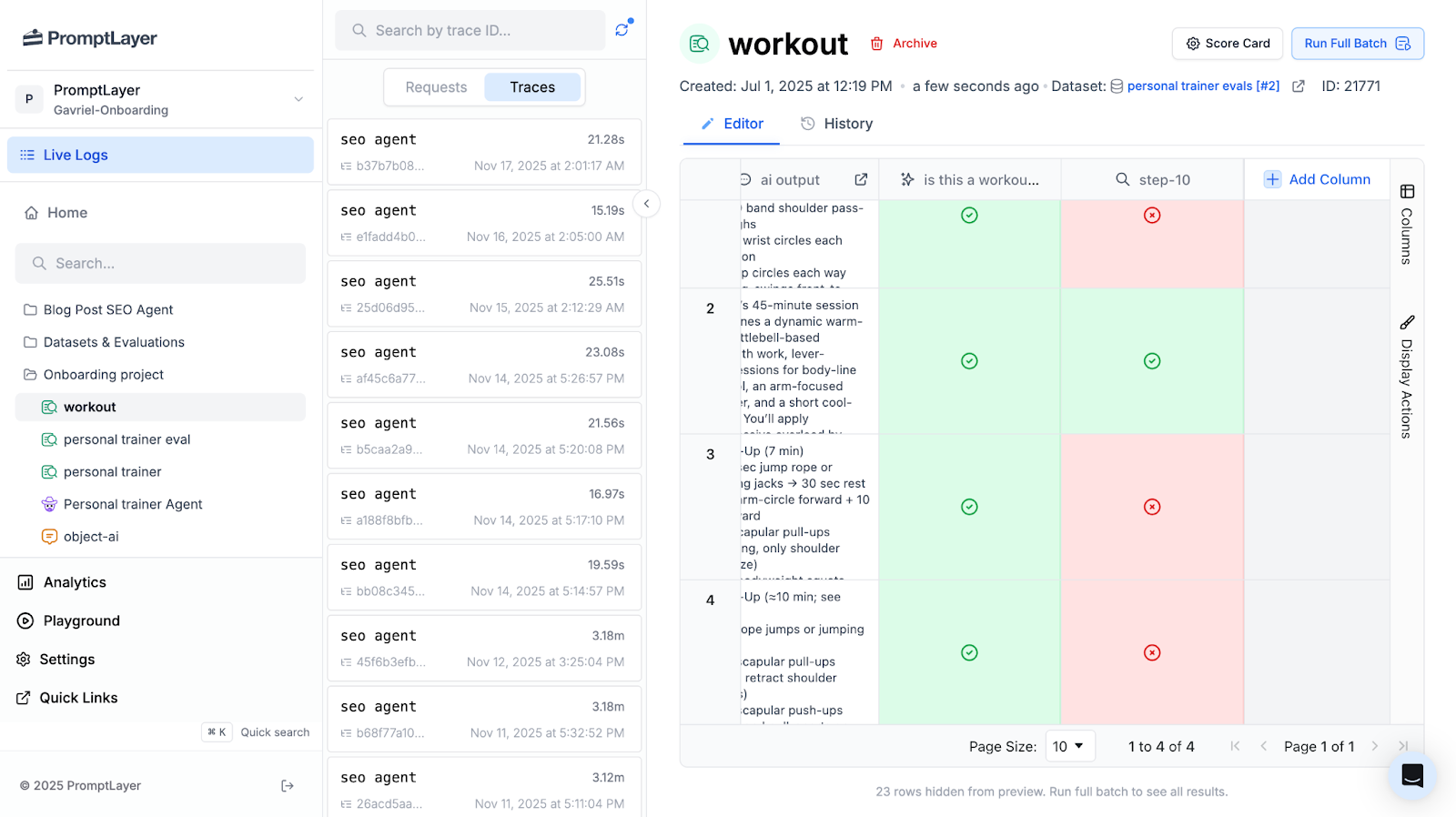

The landscape is shifting dramatically with the emergence of AI agents and automated prompt optimization. Tools like PromptLayer represent a fundamental change in how we think about prompt engineering.

Meta-prompting, the practice of using AI to generate and refine prompts for other AI systems, has shown remarkable results. Instead of manually tweaking prompts, systems can now run hundreds of variations, measure outputs, and converge on optimal formulations faster than any human could.
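The run-measure-converge loop can be reduced to a very small sketch. Two simplifications are assumed here: candidate prompts come from a fixed template rather than from a model, and `fake_model` and `score` are stubs standing in for a real model call and a real evaluation metric.

```python
from itertools import product


def generate_variants(task, roles, formats):
    """Build candidate prompts from every role/format combination."""
    return [
        f"You are {role}. {task} Respond as {fmt}."
        for role, fmt in product(roles, formats)
    ]


def pick_best(variants, model, score):
    """Run every variant through the model and keep the highest-scoring prompt."""
    return max(variants, key=lambda prompt: score(model(prompt)))


def fake_model(prompt):
    """Stub model: its output depends on what the prompt asked for."""
    features = []
    if "table" in prompt:
        features.append("table")
    if "analyst" in prompt:
        features.append("sources")
    return " ".join(features)


def score(response):
    """Stub metric: count the desired features present in the response."""
    return len(response.split())


variants = generate_variants(
    "Summarize competitor pricing.",
    roles=["a helpful assistant", "a market analyst"],
    formats=["a paragraph", "a table"],
)
best = pick_best(variants, fake_model, score)
print(best)
```

In a real meta-prompting system, the variant generator would itself be a model call, and the score would come from held-out test cases or human ratings.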

PromptLayer has professionalized prompt management, offering:

- Version control for prompts

- A/B testing capabilities

- Performance analytics

- Collaborative editing interfaces

These tools transform prompt engineering from an art into a data-driven discipline, complete with regression testing and continuous integration pipelines.
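To make the regression-testing idea concrete, here is a minimal in-memory sketch. It is not PromptLayer's API: `PromptRegistry`, `regression_check`, and the stub classifier are hypothetical names written for this example.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class PromptRegistry:
    """Keeps every committed version of each named prompt."""
    versions: Dict[str, List[str]] = field(default_factory=dict)

    def commit(self, name: str, text: str) -> int:
        """Store a new version if the text changed; return the latest version number."""
        history = self.versions.setdefault(name, [])
        if not history or history[-1] != text:
            history.append(text)
        return len(history)

    def get(self, name: str, version: int = 0) -> str:
        """Return a 1-based version, or the latest when version is 0."""
        history = self.versions[name]
        return history[-1] if version == 0 else history[version - 1]


def regression_check(prompt, model, cases):
    """Return the inputs whose output lacks the expected substring."""
    failures = []
    for user_input, expected in cases:
        output = model(prompt.format(input=user_input))
        if expected not in output:
            failures.append(user_input)
    return failures


def stub_classifier(prompt):
    """Stub model that keys on the word 'great' anywhere in the prompt."""
    return "POSITIVE" if "great" in prompt.lower() else "NEGATIVE"


registry = PromptRegistry()
registry.commit("sentiment", "Classify the sentiment of: {input}")
registry.commit("sentiment", "Classify the sentiment of this great product review: {input}")

cases = [("great battery", "POSITIVE"), ("terrible screen", "NEGATIVE")]
v1_failures = regression_check(registry.get("sentiment", 1), stub_classifier, cases)
v2_failures = regression_check(registry.get("sentiment", 2), stub_classifier, cases)
print(v1_failures, v2_failures)
```

The second version looks like a harmless wording change, yet it biases the stub toward a positive label, which is exactly the kind of silent breakage a regression suite is meant to catch.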

From Engineering to Orchestration

The emerging trend positions humans as conductors rather than composers. We define high-level goals while AI handles the "grunt work" of prompt construction and workflow management.

Consider a modern AI workflow for market research:

1. Human specifies: "Analyze competitor landscape for our SaaS product"

2. AI agent breaks this into sub-tasks

3. Each sub-task generates its own optimized prompts

4. Results are synthesized across multiple AI calls

5. Human reviews and refines the high-level approach
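The five steps above can be sketched as a single function that takes each stage as a pluggable callable. The stage names (`plan`, `build_prompt`, `synthesize`) and the stub implementations are assumptions for illustration; step 5, the human review, stays outside the code by design.

```python
from typing import Callable, Dict, List


def orchestrate(
    goal: str,
    plan: Callable[[str], List[str]],
    build_prompt: Callable[[str, str], str],
    model: Callable[[str], str],
    synthesize: Callable[[str, Dict[str, str]], str],
) -> str:
    """Run a high-level goal through planning, prompting, and synthesis."""
    subtasks = plan(goal)                                   # step 2
    prompts = [build_prompt(goal, s) for s in subtasks]     # step 3
    results = [model(p) for p in prompts]                   # step 4: one call each
    return synthesize(goal, dict(zip(subtasks, results)))   # step 4: combine


# Stub stages so the sketch runs without a real model.
def stub_plan(goal):
    return ["pricing", "features", "positioning"]


def stub_build_prompt(goal, subtask):
    return f"For the goal '{goal}', research competitor {subtask}."


def stub_model(prompt):
    return f"findings on {prompt.split()[-1].rstrip('.')}"


def stub_synthesize(goal, results):
    return "\n".join(f"{name}: {text}" for name, text in results.items())


report = orchestrate(
    "Analyze competitor landscape for our SaaS product",  # step 1
    stub_plan,
    stub_build_prompt,
    stub_model,
    stub_synthesize,
)
print(report)  # step 5: a human reviews this and adjusts the goal or stages
```

Keeping each stage as a separate callable means the human can swap out the planner or the prompt builder without touching the rest of the workflow.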

This shift from crafting individual prompts to orchestrating complex workflows represents the next frontier. Success lies in understanding when to apply frameworks, when to break them, and how to combine them into sophisticated AI symphonies.

The Orchestrator's Mindset

Mini-frameworks like RISEN and RTF are the training wheels of the AI revolution: essential for initial balance but limiting if you never take them off. As we settle into 2025, the era of the "prompt engineer" obsessing over magic words is fading. In its place rises the AI orchestrator: a professional who views prompts not as singular commands, but as programmable logic blocks within a larger system.

The real power now lies in meta-competency. It’s no longer enough to know how to write a prompt; you must know how to design a workflow where AI critiques its own output, chains reasoning steps, and optimizes its own instructions. Increasingly, the best prompts are drafted by the models themselves, guided by human intent.

Your next move is to experiment with "prompting 2.0." Don't just memorize the acronyms; use them to build a baseline, then break the structure to find what works for your specific domain. Treat the AI as a thinking partner: ask it to challenge your assumptions or refine your framework.