Bringing the Fundamentals to AI Engineering

AI engineering is a new discipline, but that doesn't mean we should throw out everything we know about engineering. The same fundamentals apply: de-scope ruthlessly, think in functions, and don't build what you don't need.

Too many teams are skipping them.

Marketing Outpaced Reality

Everyone "knows" they need agents now. Multi-agent systems. Complex orchestration layers. AI told them so!

I keep seeing companies come in with these elaborate architectures: five agents talking to each other, each one connecting to different data sources, APIs everywhere. They were sold on the idea that AI engineering is fundamentally different, that the old rules don't apply, that you need to build complex autonomous systems to get anything done.

They are wrong.

Do you need 5 agents or an HTML form?

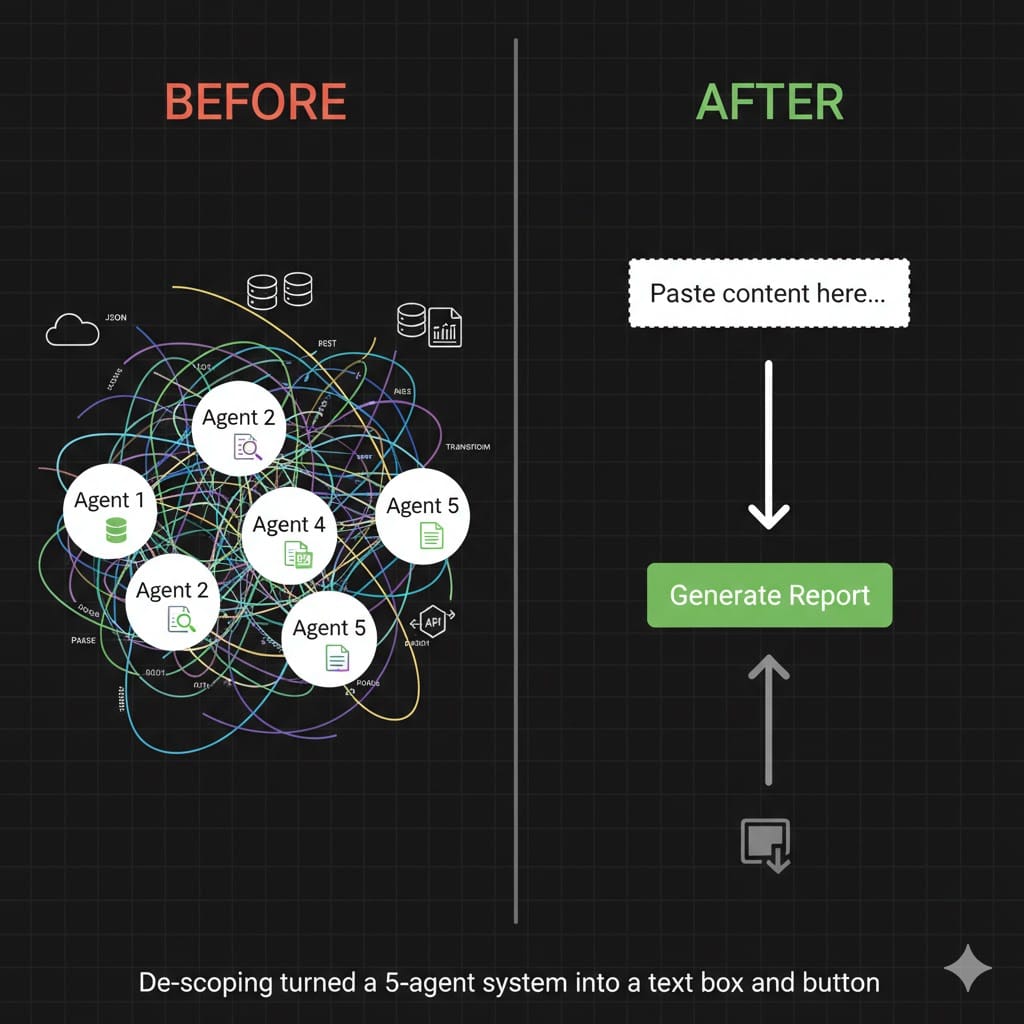

Let me illustrate this with a recent story. A customer approached us for help with implementing a five-agent system. Each agent was supposed to do something different.

- One connects to Google Drive

- Another processes the data

- Another brings in external web data

- Another generates outputs

- Another does QA

You get the idea. They had diagrams and agent names. They were ready to spend 3 months on this.

So I asked the most important question: Why?

Why five agents? What even is an agent? What problem are we actually solving here?

Turns out, they needed to process some documents and generate reports. That's it. After de-scoping, that beautiful process every engineer loves to hate, the solution became a text box and a button.

No Google Drive API. Just paste the content in.

No five agents. Just an LLM call.

No complex orchestration. Just input → output.

The system works. It solves the problem!
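To make "a text box and a button" concrete, here is a minimal sketch of what the backend of such a tool might look like. This is not the customer's actual code: `generate_report`, `REPORT_PROMPT`, and `fake_llm` are names I made up for illustration, and `call_llm` stands in for whichever provider call you would really use.

```python
from typing import Callable

# Hypothetical type: any function that takes a prompt and returns model text.
LLMCall = Callable[[str], str]

# Illustrative prompt, not the one from the story above.
REPORT_PROMPT = (
    "Write a report from the documents below.\n"
    "Summarize the key points and list any open questions.\n\n"
    "Documents:\n{documents}"
)


def generate_report(pasted_text: str, call_llm: LLMCall) -> str:
    """The whole 'system': pasted text in, report out."""
    if not pasted_text.strip():
        raise ValueError("Nothing to process: paste some document text first.")
    prompt = REPORT_PROMPT.format(documents=pasted_text.strip())
    return call_llm(prompt)


def fake_llm(prompt: str) -> str:
    # Stand-in for a real provider call so the sketch runs offline.
    return f"REPORT ({len(prompt)} prompt chars)"


if __name__ == "__main__":
    print(generate_report("Q3 revenue rose 12%.", fake_llm))
```

Passing the model call in as an argument keeps the function testable without network access, and swapping in a real provider later is a one-line change.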

Fundamental #1: De-Scope, Then De-Scope Again

This is basic engineering. Start with the simplest thing that could possibly work. Build that. Then, if you actually need more complexity, add it.

But AI marketing has convinced everyone they need to start with the complex version. Agents! Orchestration! RAG pipelines! Multi-step reasoning!

You don't.

Ask these questions before diving in:

- What problem are we solving? (Be specific)

- What's the simplest solution? (Usually simpler than you think)

- Can we ship that today? (Usually yes)

- Do we actually need more? (Usually no)

Most "AI engineering" problems are just engineering problems. The fact that you're using an LLM doesn't change the fundamentals. Start simple. Ship it. Iterate based on actual usage, not theoretical requirements.

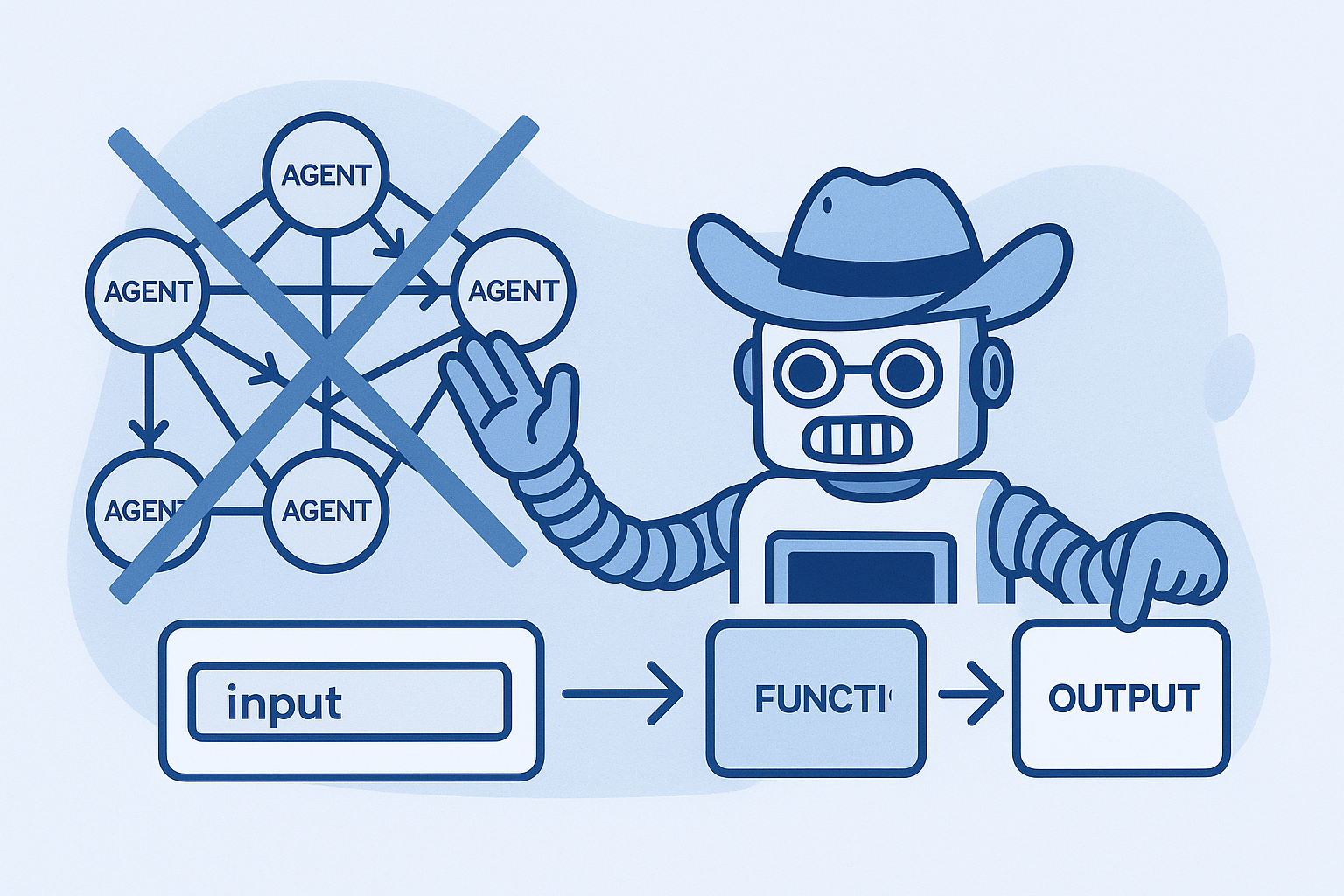

Fundamental #2: Everything Is Just a Function

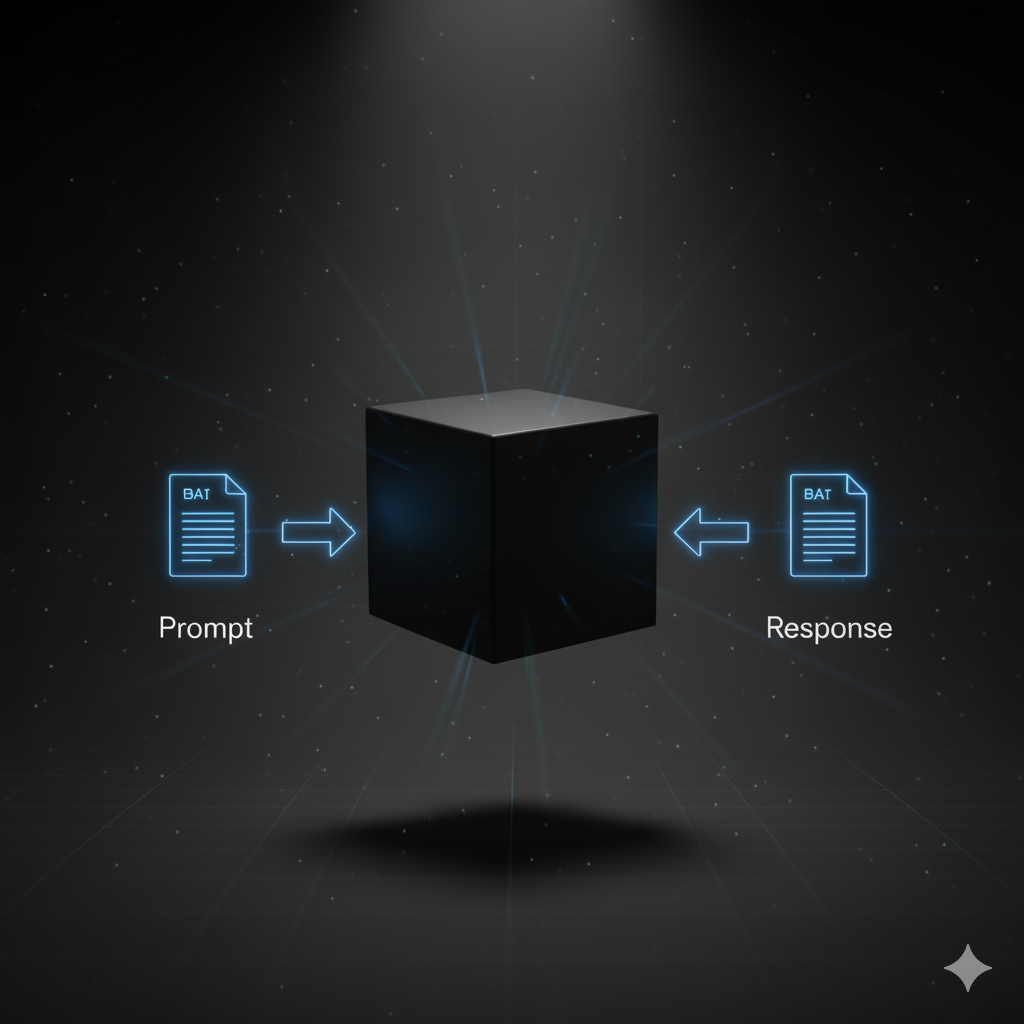

Treat everything as a black box function. Input goes in, output comes out. You don't need to understand what happens inside.

I've written about this before—the more you think of LLMs as black boxes, the easier it is to actually build with them. Don't try to reverse-engineer transformers. Don't worry about how the model "thinks."

This applies whether you're making a single API call or building what everyone calls a "complex agent."

Examples of Function-First Thinking

Claude Code SDK (headless mode): You give it an initial prompt and some files. It goes off and does work. When it's done, it returns the result. That's a function call. Sure, it's long-running and you can monitor it while it runs. But mentally, think of it as claudeCode(prompt, files) → result.

"Autonomous agents": Strip away the marketing and you know what an agent is? A while loop with an LLM call inside it. That's it. And you know what's a better way to think about "autonomous agents always running in the background"? AI cron jobs. Same concept, way more tractable, way more understandable.
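Here is a rough sketch of that "while loop with an LLM call inside it." Everything in it is an assumption for illustration: `run_agent` and `scripted_llm` are made-up names, and the `DONE:` prefix is just one hypothetical convention for letting the model signal that it has finished.

```python
from typing import Callable


def run_agent(task: str, call_llm: Callable[[str], str], max_steps: int = 5) -> str:
    """An 'agent' as a bounded loop around an LLM call."""
    history = [f"Task: {task}"]
    steps = 0
    while steps < max_steps:
        reply = call_llm("\n".join(history))
        # Hypothetical convention: the model prefixes its final answer with DONE:
        if reply.startswith("DONE:"):
            return reply[len("DONE:"):].strip()
        history.append(reply)  # feed intermediate work back into the next call
        steps += 1
    raise RuntimeError(f"No final answer after {max_steps} steps")


def scripted_llm(prompt: str) -> str:
    # Stand-in model: finishes once the prompt contains two earlier steps.
    prior_steps = prompt.count("\n")
    if prior_steps >= 2:
        return "DONE: report ready"
    return f"step {prior_steps + 1}: working"


if __name__ == "__main__":
    print(run_agent("write the weekly report", scripted_llm))
```

The `max_steps` cap is what keeps the loop tractable. Run a function like this from a scheduler and you have the "AI cron job" version of an always-on agent.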

Multi-agent systems: Just multiple function calls that you're orchestrating. Same as any other service architecture. You wouldn't build a five-microservice system when one API endpoint would work. Don't build a five-agent system when one LLM call would work.
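As a sketch of what "multiple function calls that you're orchestrating" can look like, the example below chains three prompt steps with ordinary function composition. The step names and prompts are hypothetical, and `call_llm` again stands in for a real provider call.

```python
from typing import Callable

# Hypothetical type: any function that takes a prompt and returns model text.
LLMCall = Callable[[str], str]


def extract_facts(document: str, call_llm: LLMCall) -> str:
    return call_llm(f"List the key facts in this document:\n{document}")


def draft_report(facts: str, call_llm: LLMCall) -> str:
    return call_llm(f"Write a short report from these facts:\n{facts}")


def review_report(report: str, call_llm: LLMCall) -> str:
    return call_llm(f"Fix errors and tighten this report:\n{report}")


def build_report(document: str, call_llm: LLMCall) -> str:
    """The 'multi-agent system': three calls, each feeding the next."""
    facts = extract_facts(document, call_llm)
    report = draft_report(facts, call_llm)
    return review_report(report, call_llm)
```

Each step has clear inputs and outputs, so you can test, log, or delete it on its own. If one prompt turns out to handle all three jobs well enough, the orchestration collapses back into a single call.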

Think in terms of: What are my inputs? What are my outputs? How do I monitor this function? How do I improve it? That's engineering.

Fundamental #3: Engineering Best Practices

Version control. Testing. Observability. These don't suddenly become optional because you're using AI.

If anything, they become more important.

Version control for prompts: Your prompts are your IP. Treat them like code. Track changes. Know what version is running in production. Be able to roll back when something breaks. Use a prompt management platform (like PromptLayer).
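To show the idea at its smallest, here is an in-memory sketch of tracking prompt versions, knowing which one is live, and rolling back. `PromptRegistry` is a made-up class for illustration, not any platform's API. In practice you would get the same behavior from git or a prompt management tool.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class PromptRegistry:
    """Hypothetical in-memory store: every published template gets a version number."""
    versions: list[str] = field(default_factory=list)
    live_index: Optional[int] = None  # which stored version production uses

    def publish(self, template: str) -> int:
        """Store a new template, make it live, and return its 1-based version."""
        self.versions.append(template)
        self.live_index = len(self.versions) - 1
        return len(self.versions)

    def rollback(self, version: int) -> None:
        """Point production back at an earlier version without deleting anything."""
        if not 1 <= version <= len(self.versions):
            raise ValueError(f"Unknown prompt version: {version}")
        self.live_index = version - 1

    def current(self) -> tuple[int, str]:
        """Return (version, template) for whatever is live right now."""
        if self.live_index is None:
            raise LookupError("No prompt has been published yet")
        return self.live_index + 1, self.versions[self.live_index]


if __name__ == "__main__":
    registry = PromptRegistry()
    registry.publish("Summarize: {text}")
    registry.publish("Summarize in 3 bullets: {text}")
    registry.rollback(1)  # the new version misbehaved, go back
    print(registry.current())
```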

Evals and testing: You wouldn't ship code without tests. Don't ship prompts without evals. The specifics are different (you're testing outputs that vary and dealing with probabilistic responses), but the fundamental practice is the same. Learn how to evaluate prompts for non-trivial use cases.
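A minimal eval harness can be as small as the sketch below. It assumes each case is an input plus a check on the output, and it runs every case several times because outputs vary between calls. `run_evals`, `EvalCase`, and the stand-in `summarize` function are illustrative names, not part of any library.

```python
from typing import Callable

# Hypothetical shape for a case: (input text, check that judges the output).
EvalCase = tuple[str, Callable[[str], bool]]


def run_evals(
    prompt_fn: Callable[[str], str],
    cases: list[EvalCase],
    runs_per_case: int = 3,
) -> float:
    """Return the fraction of runs whose output passed its check."""
    passed = 0
    total = 0
    for text, check in cases:
        # Repeat each case because model output is probabilistic.
        for _ in range(runs_per_case):
            total += 1
            if check(prompt_fn(text)):
                passed += 1
    return passed / total if total else 0.0


def summarize(text: str) -> str:
    # Stand-in for a real prompt plus model call.
    return f"Summary: {text.upper()}"


if __name__ == "__main__":
    cases: list[EvalCase] = [
        ("refund policy", lambda out: "REFUND" in out),
        ("shipping times", lambda out: out.startswith("Summary:")),
    ]
    print(f"pass rate: {run_evals(summarize, cases):.0%}")
```

A pass rate instead of a single pass/fail is the main adaptation for prompts: you set a threshold you can live with and watch whether a prompt change moves it.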

Observability and monitoring: You need to see what's happening in production. What prompts are being used? What's the latency? Where are the failures? This is basic ops. Most prompt management platforms started as observability products.
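One lightweight way to get those answers is to wrap every LLM-backed function in a decorator that logs which prompt ran, how long it took, and whether it failed. The sketch below uses only Python's standard `logging`, `time`, and `functools` modules. The `observed` decorator, the `llm_calls` logger name, and the log format are my own assumptions.

```python
import functools
import logging
import time

logger = logging.getLogger("llm_calls")  # hypothetical logger name


def observed(prompt_name: str):
    """Log prompt name, status, and latency for each call of the wrapped function."""
    def decorator(fn):
        @functools.wraps(fn)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            try:
                result = fn(*args, **kwargs)
            except Exception:
                elapsed_ms = (time.perf_counter() - start) * 1000
                # logger.exception also records the traceback.
                logger.exception(
                    "prompt=%s status=error latency_ms=%.1f", prompt_name, elapsed_ms
                )
                raise
            elapsed_ms = (time.perf_counter() - start) * 1000
            logger.info("prompt=%s status=ok latency_ms=%.1f", prompt_name, elapsed_ms)
            return result
        return wrapper
    return decorator


@observed("report-v1")
def generate(text: str) -> str:
    # Stand-in for a real model call.
    return text.upper()


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO)
    generate("quarterly numbers")
```

Tagging each log line with the prompt name (ideally including its version) is what lets you connect a production failure back to a specific prompt change.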

These practices exist for a reason. They help you ship faster, break less, and fix things when they do break. Using an LLM doesn't change that.

Domain Experts Need Engineering, Not Hype

Here's what makes this particularly important right now: AI engineering is increasingly being done by domain experts, not traditional software engineers. Product managers building features. Support teams automating responses. Legal experts building contract analysis tools.

The hype doesn't help them. Domain experts don't need to be told that everything is new and different and requires a completely fresh approach. They need engineering fundamentals adapted to the tools they're using.

That's the thesis behind what we're building at PromptLayer. Take the engineering practices that work—version control, testing, observability—and make them accessible for the people actually building with LLMs.

The same de-scoping principle applies: don't build what you don't need. The same function-first thinking applies: inputs, outputs, monitoring. The same engineering practices apply: version control, testing, observability.

AI engineering isn't special. It's just engineering.

Start simple. Use functions. Think iteratively.