Agent Client Protocol: The LSP for AI Coding Agents

What if switching between AI coding assistants was as easy as changing text editors?

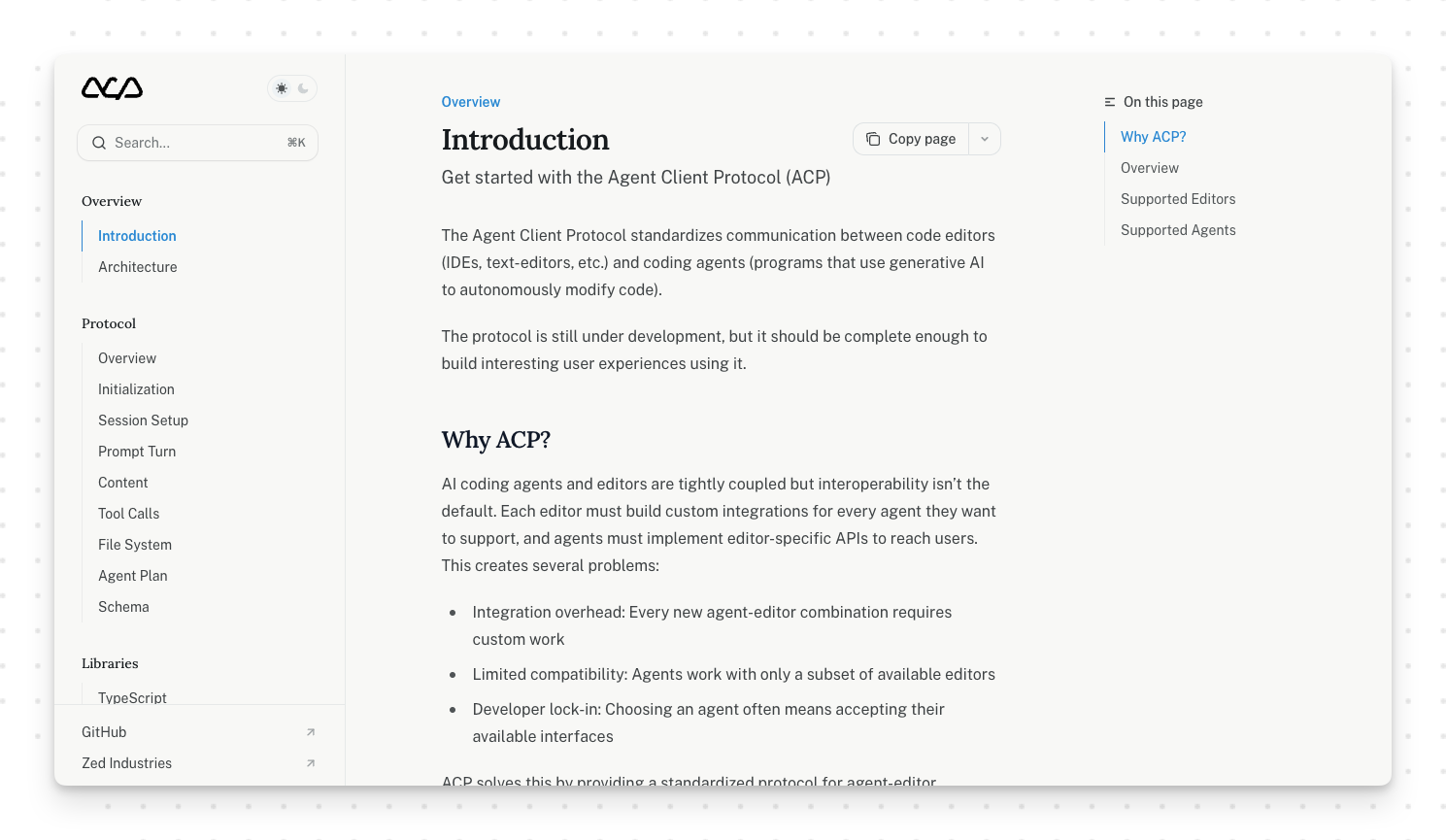

That's the promise of the Agent Client Protocol (ACP)—a new open standard that aims to do for AI agents what the Language Server Protocol did for programming languages. Just as LSP decoupled language smarts from specific editors, ACP unbundles AI assistance from the IDE, creating a universal interface between any editor and any AI coding agent.

This vision took concrete form in August 2025 when Zed Editor launched their "Bring Your Own Agent" feature, debuting with Google's Gemini CLI as the first reference implementation. As Nathan Sobo, co-founder of Zed, put it: the goal is to let developers "switch between multiple agents without switching your editor."

This post explores what ACP is, how it emerged from practical needs, how its architecture builds on JSON-RPC and the Model Context Protocol (MCP), the in-editor experience it enables, and why it matters for developers and prompt engineers who want interoperable, vendor-agnostic AI workflows. One clarification up front: this editor-focused ACP is distinct from the similarly named Agent Communication Protocol, a RESTful standard for agent-to-agent messaging. The Agent Client Protocol connects your editor to AI coding assistants over JSON-RPC.

What ACP Is: Decoupling AI from Editors

At its core, the Agent Client Protocol is a unified JSON-based interface between code editors (the client) and AI coding agents (the server). Think of it as a translator that lets any ACP-compatible editor work with any ACP-compliant AI agent, regardless of who built either component.

The LSP analogy is deliberate: before LSP, every editor needed custom plugins for each programming language's features. LSP changed that by creating one protocol that all editors and language servers could speak. Similarly, ACP creates a common language for AI assistance, eliminating the need for one-off integrations between specific editors and AI tools.
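The integration arithmetic behind the LSP analogy is easy to make concrete. Without a shared protocol, M editors and N agents need M × N point-to-point integrations. With one, each side implements the protocol once, for M + N. The counts below are illustrative, not real market figures.

```python
def point_to_point(editors: int, agents: int) -> int:
    # Without a shared protocol, every editor/agent pair needs its own integration.
    return editors * agents


def with_shared_protocol(editors: int, agents: int) -> int:
    # With a shared protocol, each editor implements the client side once
    # and each agent implements the agent side once.
    return editors + agents


# Illustrative numbers only: 5 editors and 8 agents.
print(point_to_point(5, 8))        # 40
print(with_shared_protocol(5, 8))  # 13
```

The gap widens as either side grows, which is why a common protocol pays off most in a crowded ecosystem.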

The protocol pursues four key goals:

- Interoperability: Any editor + any agent, no custom plugins required

- UX-first design: Rich interactions with live progress, multi-buffer diffs, and transparent agent actions

- Trusted local environment: Agents run in user-controlled spaces with editor-mediated permissions

- MCP-friendly data model: Reuses existing standards for tools and data exchange

-> Developers can choose their editor and AI assistant independently, mixing and matching based on preference or task requirements without vendor lock-in.

Origins: From Terminal Hacks to Open Standard

ACP emerged from a very practical problem. In early 2025, Zed's team was building experimental "agentic editing" features—AI assistants that could perform multi-step code modifications autonomously. When Google approached them with Gemini CLI, their command-line coding assistant, both teams wanted deeper integration than just running the tool in a terminal.

As Zed's developers explained: "We were already running Gemini CLI inside our embedded terminal... but we needed a more structured way of communicating than ANSI escape codes." Their solution was to define a minimal set of JSON-RPC endpoints to relay user requests and render agent responses. This pragmatic approach—born from immediate needs rather than committee design—became the foundation of ACP.

The collaboration bore fruit quickly. By August 2025, Zed launched ACP as an open standard under the Apache License, with Google's Gemini CLI serving as the flagship example. Importantly, Zed refactored their own built-in AI assistant to use ACP internally, ensuring any new features they developed would work equally well with external agents—a strong signal of commitment to the standard.

Early ecosystem growth has been promising. Beyond Zed's native support, the open-source community quickly added ACP to Neovim via the CodeCompanion plugin. Developers are also exploring integrations with other AI agents, such as Anthropic's Claude Code, which suggests genuine grassroots interest in bringing preferred AI tools to preferred editors.

Architecture: JSON-RPC Messages and Streaming

ACP's technical design prioritizes simplicity and proven patterns. The protocol uses JSON-RPC 2.0 over standard input/output streams: when you activate an AI agent, your editor spawns it as a subprocess and communicates with it through stdin/stdout pipes, much like an LSP server.
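Here is a minimal sketch of the subprocess-over-stdio pattern, assuming newline-delimited JSON-RPC framing (the convention MCP's stdio transport uses). The "agent" is a toy Python program that echoes the method name back. The `initialize` method with a `protocolVersion` field is modeled on ACP's handshake, but treat the exact parameters as assumptions and check the ACP specification before relying on them.

```python
import json
import subprocess
import sys

# A stand-in "agent": a tiny program that reads one JSON-RPC request per line
# from stdin and answers with a response on stdout. Real agents are launched
# with their own commands; this toy exists only so the sketch runs.
TOY_AGENT = r"""
import json, sys
for line in sys.stdin:
    req = json.loads(line)
    resp = {"jsonrpc": "2.0", "id": req["id"], "result": {"echo": req["method"]}}
    sys.stdout.write(json.dumps(resp) + "\n")
    sys.stdout.flush()
"""


def call_agent(method: str, params: dict) -> dict:
    # The editor spawns the agent as a subprocess and talks over its stdin/stdout pipes.
    proc = subprocess.Popen(
        [sys.executable, "-c", TOY_AGENT],
        stdin=subprocess.PIPE,
        stdout=subprocess.PIPE,
        text=True,
    )
    request = {"jsonrpc": "2.0", "id": 1, "method": method, "params": params}
    # Assumed framing: one JSON object per line, with no embedded newlines.
    proc.stdin.write(json.dumps(request) + "\n")
    proc.stdin.flush()
    response = json.loads(proc.stdout.readline())
    proc.stdin.close()  # closing stdin ends the toy agent's read loop
    proc.wait()
    proc.stdout.close()
    return response


print(call_agent("initialize", {"protocolVersion": 1}))
```

A real editor keeps the agent process alive for the whole session and reads its stdout continuously, since the agent may send messages at any time rather than only in reply.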

This bidirectional messaging enables rich interactions:

- Editors send requests ("refactor this function", "explain this code")

- Agents stream responses via JSON-RPC notifications for real-time progress

- Agents can query back to request permissions or additional context
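These three kinds of traffic map onto JSON-RPC 2.0's message types: a request carries an `id` and expects a response, a notification omits the `id`, and a response carries `result` or `error`. The sketch below classifies messages that way. The method names (`session/prompt`, `session/update`, `session/request_permission`) follow ACP's naming as we understand it, and the params are trimmed, so treat the payloads as illustrative.

```python
def classify(msg: dict) -> str:
    """Classify a JSON-RPC 2.0 message by the fields it carries."""
    if "method" in msg:
        # Requests carry an id and expect a reply; notifications omit the id.
        return "request" if "id" in msg else "notification"
    if "result" in msg or "error" in msg:
        return "response"
    raise ValueError("not a JSON-RPC 2.0 message")


# Illustrative traffic; method names follow ACP's naming, params are trimmed.
editor_prompt = {"jsonrpc": "2.0", "id": 2, "method": "session/prompt",
                 "params": {"sessionId": "s1",
                            "prompt": [{"type": "text", "text": "Refactor this function"}]}}
agent_progress = {"jsonrpc": "2.0", "method": "session/update",
                  "params": {"sessionId": "s1"}}
agent_asks = {"jsonrpc": "2.0", "id": 7, "method": "session/request_permission",
              "params": {"sessionId": "s1"}}

for m in (editor_prompt, agent_progress, agent_asks):
    print(m["method"], "->", classify(m))
```

Note that the agent's permission query is itself a request: the editor must answer it with a response carrying the same `id`.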

The streaming capability is particularly important. Rather than waiting for complete responses, agents can send partial output token by token, letting editors display progress as the AI works. This is crucial for keeping the editor responsive during complex operations.
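On the editor side, streaming can be sketched as appending each chunk to a buffer and re-rendering. The `session/update` payload shape used here (an `agent_message_chunk` update wrapping a text content block) is our assumption about ACP's format, not a verified schema.

```python
def apply_update(buffer: list[str], notification: dict) -> None:
    """Append streamed text from one session/update notification (assumed shape)."""
    update = notification["params"]["update"]
    # Assumed: text arrives as {"sessionUpdate": "agent_message_chunk", "content": {"type": "text", ...}}.
    if update.get("sessionUpdate") == "agent_message_chunk" and update["content"]["type"] == "text":
        buffer.append(update["content"]["text"])


def chunk(text: str) -> dict:
    # Build one illustrative streamed notification; it has no "id", so no reply is expected.
    return {"jsonrpc": "2.0", "method": "session/update",
            "params": {"sessionId": "s1",
                       "update": {"sessionUpdate": "agent_message_chunk",
                                  "content": {"type": "text", "text": text}}}}


buffer: list[str] = []
for piece in ["Renaming ", "`foo` ", "to `parse_config`..."]:
    apply_update(buffer, chunk(piece))
    print("".join(buffer))  # the editor can re-render after every chunk
```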

ACP consciously reuses data types from the Model Context Protocol (MCP) wherever possible. This "don't reinvent the wheel" approach means common structures, such as text and resource content, follow established MCP JSON schemas, while custom types handle coding-specific UI elements such as diffs and multi-file changes. All human-readable text defaults to Markdown, enabling rich formatting without complex rendering requirements.
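A diff is a good example of a coding-specific custom type. The sketch assumes a diff item carrying a file path plus old and new text (the field names are assumptions) and uses Python's standard `difflib` to turn it into a unified diff an editor could render with syntax highlighting.

```python
import difflib

# Assumed shape of an ACP-style diff item: the file path plus old and new text.
diff_item = {
    "type": "diff",
    "path": "src/config.py",
    "oldText": "def foo(path):\n    return open(path).read()\n",
    "newText": "def parse_config(path):\n    with open(path) as f:\n        return f.read()\n",
}


def render_diff(item: dict) -> str:
    """Turn an old/new text pair into a unified diff an editor could display."""
    lines = difflib.unified_diff(
        item["oldText"].splitlines(keepends=True),
        item["newText"].splitlines(keepends=True),
        fromfile="a/" + item["path"],
        tofile="b/" + item["path"],
    )
    return "".join(lines)


print(render_diff(diff_item))
```

Sending whole old and new text, rather than a pre-computed patch, lets each editor choose its own diff presentation (inline, side by side, or multi-buffer).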

Three design principles guide the architecture:

- MCP-Friendly: Shared vocabulary with tool protocols for seamless integration

- UX-First: Just enough structure for clear, intuitive agent interactions

- Trusted Environment: Agents operate with user privileges under editor oversight

This last principle is crucial. When you use third-party agents, Zed emphasizes, "Nothing touches our servers, and we don't have access to your code." The local-first model keeps the editor vendor out of the data path, so your code goes only where the agent you chose sends it.

MCP Integration: Tools and Capabilities

One of ACP's most powerful features is its seamless integration with the Model Context Protocol for tool access. When an ACP session starts, the editor passes available MCP server endpoints and credentials to the agent, essentially giving it a toolkit of capabilities.

This might include tools to:

- Run test suites and analyze results

- Query documentation or API references

- Access databases or external services

- Execute code in sandboxed environments
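Here is a sketch of how an editor might hand MCP servers to an agent when a session starts. The `session/new` method and the `mcpServers` entries (name, command, args, env) are modeled on ACP's session setup as we understand it. The test-runner server, its path, and its token are entirely hypothetical.

```python
import json


def new_session_request(request_id: int, cwd: str, mcp_servers: list[dict]) -> str:
    """Build a session/new request that hands MCP server launch details to the agent."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "session/new",
        "params": {"cwd": cwd, "mcpServers": mcp_servers},
    })


# Hypothetical test-runner MCP server; command, args, and env values are made up.
test_runner = {
    "name": "test-runner",
    "command": "/usr/local/bin/test-runner-mcp",
    "args": ["--project", "."],
    "env": [{"name": "TEST_RUNNER_TOKEN", "value": "example-token"}],
}

print(new_session_request(1, "/home/dev/project", [test_runner]))
```

Passing launch details instead of live connections means the agent starts and talks to the MCP servers itself, while the editor decides which servers, and which credentials, the agent gets.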

The agent can then invoke these tools via standardized MCP calls, all through JSON-RPC messages. Crucially, the editor maintains oversight—it can require user confirmation for sensitive operations, ensuring agents don't run wild with destructive actions.
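The editor's gatekeeper role can be sketched as a handler for an agent's permission request. The message shape (a `toolCall` with a `title` and `kind`, plus `options` with `allow_once` and `reject_once` kinds) is an assumption loosely modeled on ACP's `session/request_permission`. The policy itself, auto-approving reads and asking about everything else, is hypothetical.

```python
from typing import Callable


def handle_permission_request(request: dict, ask_user: Callable[[str], bool]) -> dict:
    """Answer an agent's permission request, consulting the user for anything but reads."""
    params = request["params"]
    title = params["toolCall"]["title"]
    # Map option kinds to the ids the agent offered (assumed field names).
    options = {opt["kind"]: opt["optionId"] for opt in params["options"]}
    # Hypothetical policy: reads are allowed automatically, everything else asks the user.
    if params["toolCall"].get("kind") == "read" or ask_user(title):
        outcome = {"outcome": "selected", "optionId": options["allow_once"]}
    else:
        outcome = {"outcome": "selected", "optionId": options["reject_once"]}
    # The reply reuses the request's id, as JSON-RPC requires.
    return {"jsonrpc": "2.0", "id": request["id"], "result": {"outcome": outcome}}


request = {
    "jsonrpc": "2.0", "id": 7, "method": "session/request_permission",
    "params": {
        "sessionId": "s1",
        "toolCall": {"toolCallId": "t1", "title": "Delete build/ directory", "kind": "delete"},
        "options": [
            {"optionId": "allow", "name": "Allow once", "kind": "allow_once"},
            {"optionId": "deny", "name": "Reject", "kind": "reject_once"},
        ],
    },
}

# Simulated user who declines destructive actions.
print(handle_permission_request(request, ask_user=lambda title: False))
```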

For extensibility, editors can even expose their own functions as MCP servers. This means an agent could use editor-specific features (like advanced search or refactoring tools) through the same standardized interface. The broader momentum behind MCP, including its adoption by GitHub Copilot in VS Code, suggests a growing universe of tools that ACP agents can tap into.
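Exposing an editor feature as an MCP tool comes down to answering MCP's `tools/list` and `tools/call` methods. This minimal dispatcher skips the MCP initialization handshake and the transport. The `search_workspace` tool and its fake file contents are hypothetical; they only illustrate the request and response shapes.

```python
import json


# A hypothetical editor feature; the fake file contents stand in for the workspace.
def search_workspace(query: str) -> list[str]:
    files = {"src/config.py": "def parse_config(path): ...", "README.md": "Config docs"}
    return [path for path, text in files.items() if query in text]


TOOLS = {
    "search_workspace": {
        "description": "Search project files for a substring.",
        "inputSchema": {"type": "object", "properties": {"query": {"type": "string"}},
                        "required": ["query"]},
        "handler": lambda args: search_workspace(args["query"]),
    }
}


def handle_mcp(request: dict) -> dict:
    """Dispatch the two MCP tool methods: tools/list and tools/call."""
    if request["method"] == "tools/list":
        result = {"tools": [{"name": name, "description": t["description"],
                             "inputSchema": t["inputSchema"]} for name, t in TOOLS.items()]}
    elif request["method"] == "tools/call":
        tool = TOOLS[request["params"]["name"]]
        matches = tool["handler"](request["params"]["arguments"])
        result = {"content": [{"type": "text", "text": json.dumps(matches)}], "isError": False}
    else:
        # Standard JSON-RPC error code for an unknown method.
        return {"jsonrpc": "2.0", "id": request["id"],
                "error": {"code": -32601, "message": "Method not found"}}
    return {"jsonrpc": "2.0", "id": request["id"], "result": result}


call = {"jsonrpc": "2.0", "id": 3, "method": "tools/call",
        "params": {"name": "search_workspace", "arguments": {"query": "parse_config"}}}
print(handle_mcp(call))
```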

This tool integration transforms AI agents from mere code generators into autonomous development partners that can execute, validate, and iterate on solutions under user control.

The In-Editor Experience: Transparency and Control

ACP enables a remarkably transparent coding experience. When an agent is active, developers can "follow the agent around the codebase as it works"—the editor highlights regions being read or modified, showing live progress similar to pair programming with a human colleague.

Key UX features include:

- Real-time visualization of insertions and deletions as the agent formulates changes

- Multi-buffer diff views for reviewing all proposed modifications with full syntax highlighting

- LSP integration for error checking and validation before accepting changes

- Markdown-formatted chat for agent explanations and clarifications

- Interrupt capability to stop or redirect the agent at any point
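Interrupting the agent can be as small as one message from editor to agent. Here is a sketch, assuming ACP's `session/cancel` notification takes just a `sessionId`. Because it is a JSON-RPC notification (no `id`), the agent sends no direct reply to this message.

```python
import json


def cancel_notification(session_id: str) -> str:
    """Build a session/cancel notification; it has no "id", so it gets no direct reply."""
    return json.dumps({"jsonrpc": "2.0", "method": "session/cancel",
                       "params": {"sessionId": session_id}})


print(cancel_notification("s1"))
# {"jsonrpc": "2.0", "method": "session/cancel", "params": {"sessionId": "s1"}}
```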

This transparency extends to the trust model. Since agents run locally or on servers you specify, you decide which agent, and therefore which model provider, sees your code; the editor does not route it through its own vendor's servers. The editor acts as a permission gatekeeper, prompting for approval when agents request sensitive operations.

The result feels like a natural extension of the coding process rather than a bolted-on tool—AI assistance that respects both developer workflow and security concerns.

Why This Matters: Prompt Engineering in Production

For developers and prompt engineers, ACP represents a fundamental shift in how we work with AI coding assistants. Instead of treating prompt design as an isolated activity in notebooks or API playgrounds, ACP brings the entire workflow into the IDE.

This is exactly the kind of workflow tools like PromptLayer were built to support: managing, versioning, and analyzing prompts in real-world development contexts. With ACP, those structured prompt/response traces happen natively inside your editor — and PromptLayer can capture, compare, and evaluate them at scale. Together, ACP + PromptLayer make prompt engineering feel less like experimentation and more like a production-grade developer workflow.

Consider the iteration cycle: you write a prompt (perhaps as a code comment or chat message), the agent acts on it immediately, and you see live results in your actual project. If the output isn't quite right, you refine the prompt and try again—all without leaving your development environment. This tight feedback loop accelerates prompt iteration dramatically.

From a prompt engineering perspective, ACP's structured JSON messages enable systematic logging and analysis. Every prompt turn and agent response can be recorded, creating a traceable history of what prompts produced which code changes. This data becomes invaluable for understanding model behavior and improving prompt efficacy.
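Here is a minimal sketch of that kind of trace. It appends every JSON-RPC message to a JSON Lines file, then pairs each prompt with the agent text that streamed back after it. The file layout, the `direction` labels, and the `session/prompt` and `session/update` payload shapes are our assumptions, not part of ACP or of PromptLayer's API.

```python
import json
import tempfile
import time
from pathlib import Path


def log_message(log_path: Path, direction: str, message: dict) -> None:
    """Append one JSON-RPC message to a JSON Lines trace file."""
    record = {"ts": time.time(), "direction": direction, "message": message}
    with log_path.open("a", encoding="utf-8") as f:
        f.write(json.dumps(record) + "\n")


def prompts_and_replies(log_path: Path) -> list[tuple[str, str]]:
    """Pair each prompt's text with the agent text that streamed back after it."""
    pairs: list[tuple[str, str]] = []
    for line in log_path.read_text(encoding="utf-8").splitlines():
        msg = json.loads(line)["message"]
        if msg.get("method") == "session/prompt":
            text = "".join(b["text"] for b in msg["params"]["prompt"] if b["type"] == "text")
            pairs.append((text, ""))
        elif msg.get("method") == "session/update" and pairs:
            update = msg["params"]["update"]
            if update.get("sessionUpdate") == "agent_message_chunk" and update["content"].get("type") == "text":
                prompt, reply = pairs[-1]
                pairs[-1] = (prompt, reply + update["content"]["text"])
    return pairs


log = Path(tempfile.mkdtemp()) / "trace.jsonl"
log_message(log, "editor->agent", {"jsonrpc": "2.0", "id": 2, "method": "session/prompt",
            "params": {"sessionId": "s1", "prompt": [{"type": "text", "text": "Add type hints"}]}})
log_message(log, "agent->editor", {"jsonrpc": "2.0", "method": "session/update",
            "params": {"sessionId": "s1", "update": {"sessionUpdate": "agent_message_chunk",
                                                     "content": {"type": "text", "text": "Done."}}}})
print(prompts_and_replies(log))  # [('Add type hints', 'Done.')]
```

Logging raw messages, rather than only final answers, keeps tool calls and permission decisions in the trace too, which helps when analyzing why a prompt produced a particular change.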

The protocol's agent-agnostic nature enables comparative testing at scale. Since ACP decouples the agent from the editor, you can swap Agent A for Agent B and rerun the same prompt-driven workflow to evaluate differences. Such experiments become much simpler when the interface is standardized.
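Because every agent sits behind the same interface, a comparison harness needs only one code path. In this sketch each agent is a hypothetical callable from prompt to final text, and the two stand-ins are toys. In practice each would wrap a different ACP agent subprocess.

```python
from typing import Callable

Agent = Callable[[str], str]  # hypothetical: takes a prompt, returns the agent's final text


def compare_agents(prompts: list[str], agents: dict[str, Agent]) -> dict[str, dict[str, str]]:
    """Run every prompt against every agent through the same interface."""
    return {prompt: {name: run(prompt) for name, run in agents.items()} for prompt in prompts}


# Toy stand-ins; each would really be a different ACP agent behind the same client code.
agents = {
    "agent_a": lambda p: p.upper(),
    "agent_b": lambda p: p[::-1],
}
results = compare_agents(["add tests"], agents)
print(results)  # {'add tests': {'agent_a': 'ADD TESTS', 'agent_b': 'stset dda'}}
```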

Looking ahead, if ACP follows LSP's adoption trajectory, we may soon see "supports ACP" become table stakes for both editors and AI coding tools. The early traction with Zed and Neovim, combined with Google's backing through Gemini CLI, suggests strong initial momentum toward this standardized future.

The Path Forward: Plug-and-Play AI

The Agent Client Protocol represents more than just another developer tool standard—it's a vision for how AI and human developers can collaborate seamlessly. By standardizing editor↔agent communication through JSON-RPC and leveraging MCP for tool access, ACP delivers on the promise of interoperable, UX-first, and secure AI coding assistance.

The implications are profound. Just as LSP enabled a flourishing ecosystem of language tools that work everywhere, ACP could spark an explosion of specialized AI agents—one for refactoring legacy code, another for writing tests, another for exploring unfamiliar codebases—all usable in your editor of choice without lock-in.

Watch the ACP ecosystem closely. The combination of Zed's implementation, Google's Gemini CLI, and growing community ports hints at a future where AI coding agents become as plug-and-play as language servers. For developers seeking to harness AI's potential while maintaining control over their tools and code, that future can't arrive soon enough.