You should be A/B testing your prompts.

Are you A/B testing your prompts? If not, you should be.

All of prompt engineering is built around answering the question: Do my prompts work well?

A/B tests against real user behavior are the most reliable way to answer it.

The Challenge of Evals

Creating good evals for AI outputs is challenging because they often require ground truth, which is frequently subjective or non-existent. For instance:

- What defines an optimal summary?

- What constitutes a friendly message?

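The key is to anchor your ground truth to user metrics: rather than defining a “good summary” in the abstract, measure what users actually do with it. This is how you build reliable evals for subjective tasks, much as social media companies use “Big Data” analytics of user behavior to learn what works.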

Real-World Metrics as Ground Truth

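Historical backtesting can be useful, but it’s limited: replaying past inputs shows what a new prompt would have produced, not how users would have responded to it. Focus instead on real ground truth metrics such as: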

- Did the user save the summary?

- Did the user close the tab?

- Did the user resolve the ticket?

- Did the user click regenerate?

These metrics give you direct evidence of how well your prompt works for real users.
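To turn those signals into numbers you can compare, log which prompt version produced each output alongside what the user did next. Here's a minimal sketch, assuming a hypothetical event log where each record carries a `version` plus boolean `saved` and `regenerated` flags; the field names and the `metric_rates` helper are illustrative, not any particular tool's schema.

```python
from collections import defaultdict

# Hypothetical event log: one record per generation, tagged with the prompt
# version that produced it and the user actions that followed.
events = [
    {"version": "v3", "saved": True,  "regenerated": False},
    {"version": "v3", "saved": False, "regenerated": True},
    {"version": "v4", "saved": True,  "regenerated": False},
    {"version": "v4", "saved": True,  "regenerated": False},
]

def metric_rates(events, metrics=("saved", "regenerated")):
    """Return {version: {metric: fraction of generations where it happened}}."""
    counts = defaultdict(lambda: defaultdict(int))
    totals = defaultdict(int)
    for e in events:
        totals[e["version"]] += 1
        for m in metrics:
            if e.get(m):
                counts[e["version"]][m] += 1
    return {v: {m: counts[v][m] / totals[v] for m in metrics} for v in totals}

print(metric_rates(events))
# {'v3': {'saved': 0.5, 'regenerated': 0.5}, 'v4': {'saved': 1.0, 'regenerated': 0.0}}
```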

Strategies for A/B Testing Prompts

So, how do you actually go about A/B testing your prompts?

- Start small: Begin by rolling out the new prompt version to a small percentage of users, like 5–10% or just your free-tier customers. This limits risk while still giving you valuable data.

- Gradually ramp up: Keep a close eye on user metrics as you slowly increase the rollout percentage. Aim for milestones like 10%, 20%, 40%, and eventually 100%. Watch for any red flags or negative impacts.

- Segment users: Not all users are the same. Consider segmenting based on factors like user type (free vs paid), user ID, or company. This lets you test new versions on specific groups before a full rollout.

- Combine methods: A/B testing is powerful, but don’t stop there. Combine it with synthetic online evals and rigorous offline testing for a comprehensive view of prompt performance.

- Iterate and refine: A/B testing is an ongoing process. Analyze your results, make tweaks to your prompts, and run the test again. Continuous iteration is 🗝️ to dialing in top-notch prompts.
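Starting small and ramping up work best when each user lands in a stable bucket, so the same person keeps seeing the same prompt version across requests. One common way to do that is to hash the user ID. The sketch below is illustrative only: the `bucket` and `in_rollout` helpers and the experiment name `"summary-v4"` are hypothetical, and this is not how any specific platform necessarily implements it.

```python
import hashlib

def bucket(user_id: str, experiment: str) -> int:
    """Map a user to a stable bucket in [0, 100) for a given experiment."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).hexdigest()
    return int(digest, 16) % 100

def in_rollout(user_id: str, experiment: str, percent: int) -> bool:
    """True if this user should see the new prompt at the current rollout percent."""
    return bucket(user_id, experiment) < percent

users = [f"user-{i}" for i in range(10_000)]
at_10 = {u for u in users if in_rollout(u, "summary-v4", 10)}
at_20 = {u for u in users if in_rollout(u, "summary-v4", 20)}

# Ramping up only adds users; nobody who saw the new prompt at 10% is switched back.
assert at_10 <= at_20
print(len(at_10), len(at_20))  # approximately 1,000 and 2,000
```

Because the bucket depends only on the user ID and the experiment name, raising the percentage adds new users without bouncing earlier ones back to the old prompt. Salting with the experiment name keeps a user's bucket in one test independent of their bucket in another.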

The key is to be methodical, data-driven, and unafraid to experiment. By starting small, segmenting users, and iterating based on real metrics, you can unlock the full potential of A/B testing for your prompts.
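The ramp-up schedule can also be written down as an explicit rule, so watching for red flags becomes a check you run at each milestone. This is a hypothetical sketch: `next_rollout`, the one-point tolerance, and using regenerate rate as the guardrail metric are all assumptions to adapt to your own product.

```python
MILESTONES = [10, 20, 40, 100]  # rollout percentages from the ramp-up step

def next_rollout(current: int, baseline_rate: float, candidate_rate: float,
                 tolerance: float = 0.01) -> int:
    """Decide the next rollout percentage for the new prompt version.

    Rates are for a "bad" metric such as regenerate rate, where lower is better.
    Advance to the next milestone if the candidate is no worse than the baseline
    by more than `tolerance`; otherwise roll back to 0%.
    """
    if candidate_rate > baseline_rate + tolerance:
        return 0  # red flag: stop exposing users to the new version
    for milestone in MILESTONES:
        if milestone > current:
            return milestone
    return current  # already at 100%

print(next_rollout(10, baseline_rate=0.12, candidate_rate=0.11))  # 20
print(next_rollout(20, baseline_rate=0.12, candidate_rate=0.15))  # 0
```

In practice you'd also wait for enough traffic at each step before deciding; a raw rate comparison on a handful of requests is mostly noise.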

Implementing A/B Testing with PromptLayer

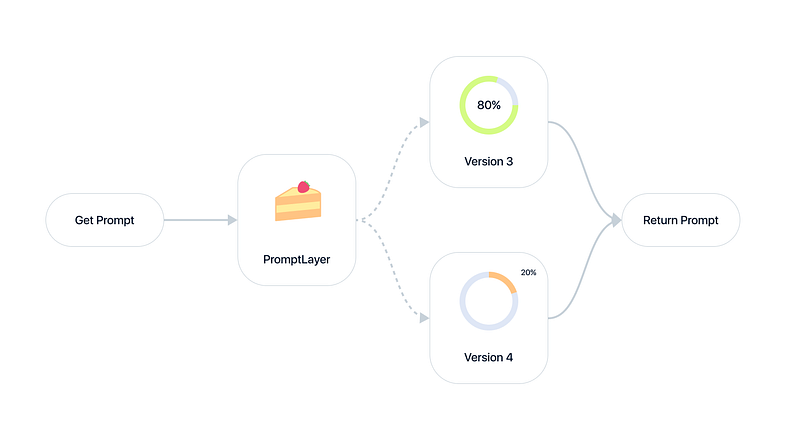

PromptLayer has full support for A/B testing through dynamic release labels. A dynamic release label overloads an existing release label (such as “prod”) and routes its traffic to different prompt versions based on percentages or user segments.

Create a new prompt version

Edit your prompt and try something new. Maybe try out a new open-source model.

Set up an A/B Release

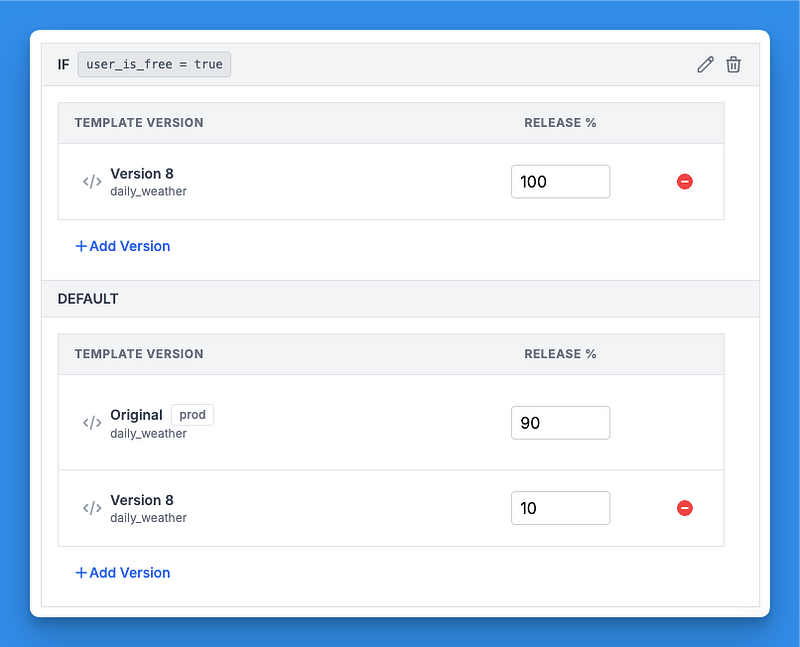

First, navigate to the A/B Releases Registry in PromptLayer. Create a new A/B Release and select the release label you want to overload (e.g., “prod”). Choose the base prompt version and the version(s) you want to test.

Set traffic percentages for each version, making sure they add up to 100%. For example, you might send 90% to v3 (stable) and 10% to v4 (new).
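Once the A/B Release is saved, PromptLayer does this routing for you. To make the 90/10 split concrete, here is a rough sketch of how percentage-based routing can work in general: hash each user ID to a point in [0, 100) and walk the cumulative percentages. `pick_version` is a hypothetical helper for illustration, not PromptLayer's implementation.

```python
import hashlib
from typing import Dict

def pick_version(user_id: str, split: Dict[str, int]) -> str:
    """Deterministically pick a prompt version for a user from a percentage split.

    `split` maps version names to traffic percentages, e.g. {"v3": 90, "v4": 10}.
    """
    if sum(split.values()) != 100:
        raise ValueError("traffic percentages must add up to 100")
    point = int(hashlib.sha256(user_id.encode()).hexdigest(), 16) % 100
    cumulative = 0
    for version, percent in split.items():
        cumulative += percent
        if point < cumulative:
            return version
    raise AssertionError("unreachable when percentages sum to 100")

split = {"v3": 90, "v4": 10}
counts = {"v3": 0, "v4": 0}
for i in range(10_000):
    counts[pick_version(f"user-{i}", split)] += 1
print(counts)  # close to 9,000 for v3 and 1,000 for v4
```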

If desired, you can also add user segments to define which version each segment receives. Segments use request metadata, like user ID or company. As an example, you could specify that internal employees get v4 (dev) 50% of the time.
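Segments layer on top of the default split: each request's metadata is checked against the segment rules, and a matching segment's split applies instead. The sketch below assumes hypothetical metadata keys (`user_id`, `company`), a made-up company value for internal employees, and a first-match-wins rule order; PromptLayer's actual matching behavior may differ.

```python
import hashlib
from typing import Callable, Dict, List, Tuple

Metadata = Dict[str, str]
Split = Dict[str, int]

# Hypothetical segment rules, checked in order: (name, predicate on metadata, split).
SEGMENTS: List[Tuple[str, Callable[[Metadata], bool], Split]] = [
    ("internal", lambda m: m.get("company") == "acme-internal", {"v3": 50, "v4": 50}),
]
DEFAULT_SPLIT: Split = {"v3": 90, "v4": 10}

def route(metadata: Metadata) -> str:
    """Pick a prompt version using the first matching segment's split, else the default."""
    split = next((s for _, matches, s in SEGMENTS if matches(metadata)), DEFAULT_SPLIT)
    if sum(split.values()) != 100:
        raise ValueError("split percentages must add up to 100")
    # Stable point in [0, 100) derived from the user ID.
    point = int(hashlib.sha256(metadata["user_id"].encode()).hexdigest(), 16) % 100
    cumulative = 0
    for version, percent in split.items():
        cumulative += percent
        if point < cumulative:
            return version
    raise AssertionError("unreachable when percentages sum to 100")

internal = [route({"user_id": f"emp-{i}", "company": "acme-internal"}) for i in range(10_000)]
external = [route({"user_id": f"user-{i}", "company": "example-co"}) for i in range(10_000)]
print(internal.count("v4"), external.count("v4"))  # roughly half vs. roughly a tenth
```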

Once you’ve configured everything, save the A/B Release. It will now dynamically route traffic for that label.

Launch and monitor

Let’s go. 🏁

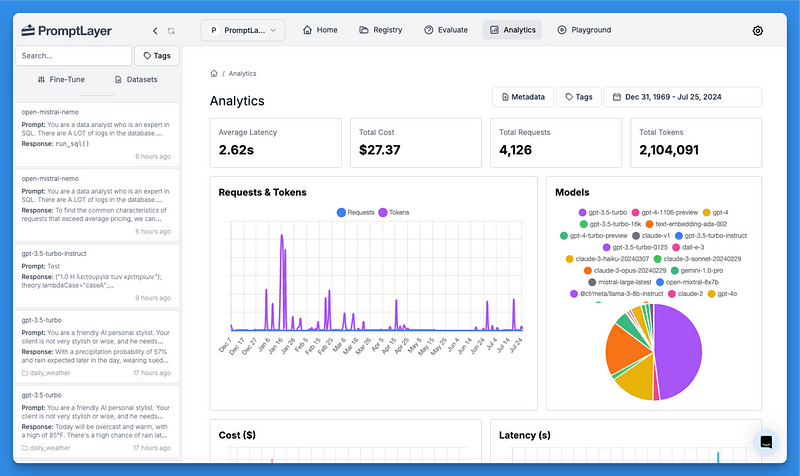

Start the test and keep a close eye on your key metrics. PromptLayer’s analytics dashboard makes it easy to track performance and compare versions side-by-side.
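When comparing versions side-by-side, check whether a difference is larger than chance before declaring a winner. A standard tool for rate metrics like regenerate rate is the two-proportion z-test; the sketch below uses only Python's standard library, and the counts are made-up numbers for illustration.

```python
import math
from typing import Tuple

def two_proportion_z_test(hits_a: int, n_a: int, hits_b: int, n_b: int) -> Tuple[float, float]:
    """Compare two rates, e.g. regenerate rate for version A vs. version B.

    Returns (z, two-sided p-value) using the pooled normal approximation,
    which is reasonable when each group has plenty of hits and misses.
    """
    p_a, p_b = hits_a / n_a, hits_b / n_b
    pooled = (hits_a + hits_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0  # both groups all-hit or all-miss: no evidence of a difference
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value

# Made-up counts: v3 (stable) regenerated 1,200 of 10,000 times; v4 (new) 100 of 1,000.
z, p = two_proportion_z_test(1200, 10_000, 100, 1_000)
print(f"z = {z:.2f}, p = {p:.3f}")
```

With these example counts, v4's lower regenerate rate (10% vs. 12%) gives a p-value a little above 0.05, so the result isn't conclusive yet; keeping v4 at its current share and collecting more data would be the cautious move.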

Remember, prompt iteration is all about feedback loops and minimizing risk.

The 🗝️ is to start small, monitor closely, and iterate based on real data. PromptLayer gives you fine-grained control over version routing, so you can safely test updates, roll out new versions, and segment users.

Happy prompting and A/B testing! 🍰

PromptLayer is the most popular platform for prompt engineering, management, and evaluation. Teams use PromptLayer to build AI applications with domain knowledge.

Made in NYC 🗽 Sign up for free at www.promptlayer.com 🍰