What is Context Engineering?

The term "prompt engineering" really exploded when ChatGPT launched in late 2022. It started as simple tricks to get better responses from AI. Add "please" and "thank you." Create elaborate role-playing scenarios. The typical optimization patterns we all tried.

As I've written about before, real prompt engineering isn't about these surface-level hacks. It's about communication. It's about taking human knowledge and goals and translating them into something AI can work with. We launched PromptLayer as the first ever prompt engineering platform, and our mission is to build the framework teams use to build (and talk to) AI.

But... is "prompt engineering" really the right word to use?

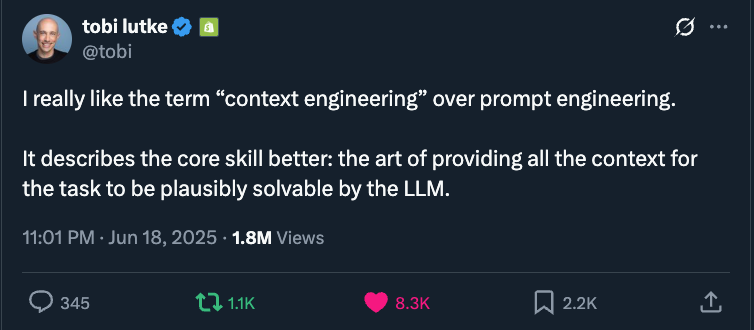

Shopify CEO Tobi Lütke doesn't think so.

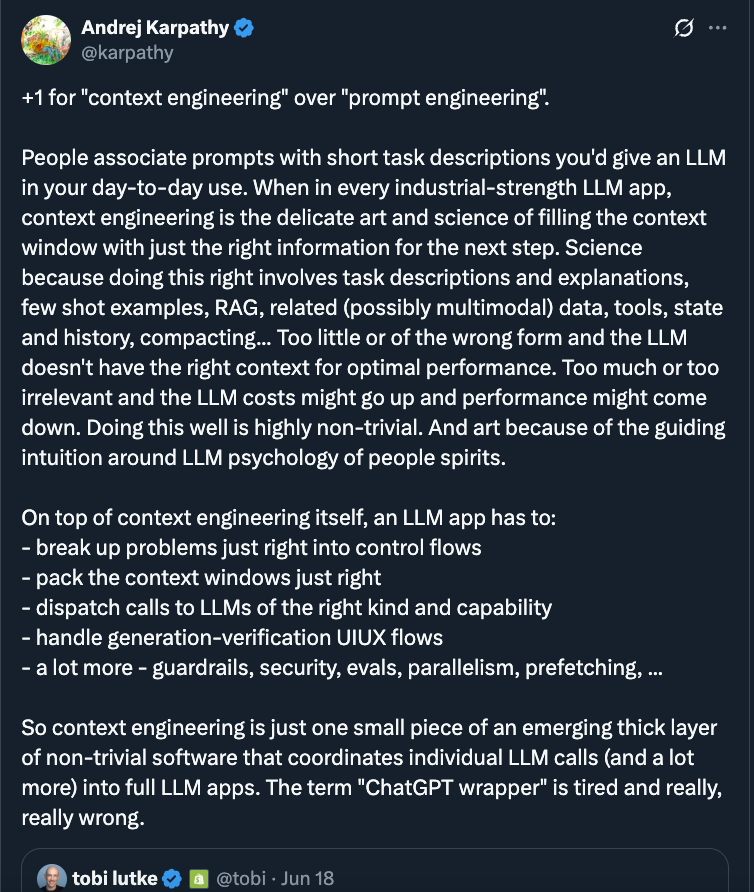

The legends of AI immediately agreed. Andrej Karpathy endorsed it, saying "+1 for 'context engineering' over 'prompt engineering'." Simon Willison highlighted it on his blog.

What's Wrong with Prompt Engineering?

Prompt engineering has a branding problem. Simon Willison nailed it: most people think it's "a laughably pretentious term for typing things into a chatbot."

First, it doesn't sound technical enough. When engineers hear "prompt engineering," many dismiss it as glorified copywriting. Is typing questions into ChatGPT really engineering? It reminds me of how hardware engineers sometimes question whether software engineering is real engineering. The cycle continues.

Second, the name doesn't capture what we actually do. Modern AI applications require far more than clever prompts. You need to fetch the right data. Manage conversation history. Integrate APIs. Format outputs. Maintain state. Calling all this "prompt engineering" is like calling software development "typing."

As Karpathy explained, "People associate prompts with short task descriptions, but apps build contexts — meticulously — for LLMs to solve their custom tasks."

Understanding "Context" in LLMs

So what is context? It's everything the model sees before it generates a response:

- System instructions that guide behavior

- The user's actual question

- Previous conversation history

- Data retrieved from databases or APIs

- Descriptions of tools the model can use

- Required output formats

- Examples of good responses

Context isn't static. It's assembled dynamically based on what the user needs right now. As one definition puts it: "Context Engineering is the discipline of designing and building dynamic systems that provide the right information and tools, in the right format, at the right time, to give an LLM everything it needs to accomplish a task."
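To make "assembled dynamically" a bit more tangible, here is a minimal Python sketch of that assembly step. Everything in it is an assumption made for illustration: the `ContextParts` class, its field names, the `build_messages` function, and the `role`/`content` message shape (a common chat convention, not any specific vendor's API). It shows one way to fold the pieces listed above into a single input, not the way any particular platform does it.

```python
from dataclasses import dataclass, field


@dataclass
class ContextParts:
    """The pieces of context listed above. All field names are illustrative."""
    system_instructions: str
    user_question: str
    history: list = field(default_factory=list)            # prior {"role", "content"} turns
    retrieved_data: list = field(default_factory=list)     # snippets from databases or APIs
    tool_descriptions: list = field(default_factory=list)
    output_format: str = ""
    examples: list = field(default_factory=list)


def build_messages(parts: ContextParts, max_history_turns: int = 4) -> list:
    """Assemble a chat-style message list from whichever parts are present."""
    sections = [parts.system_instructions]
    if parts.tool_descriptions:
        tools = "\n".join(f"- {t}" for t in parts.tool_descriptions)
        sections.append("Available tools:\n" + tools)
    if parts.output_format:
        sections.append("Output format:\n" + parts.output_format)
    if parts.examples:
        sections.append("Examples of good responses:\n" + "\n".join(parts.examples))
    if parts.retrieved_data:
        sections.append("Relevant data:\n" + "\n".join(parts.retrieved_data))

    messages = [{"role": "system", "content": "\n\n".join(sections)}]
    # Keep only the most recent turns so older history does not crowd out the rest.
    recent = parts.history[-max_history_turns:] if max_history_turns > 0 else []
    messages.extend(recent)
    messages.append({"role": "user", "content": parts.user_question})
    return messages
```

The point of the sketch is that the input changes per request: empty sections are skipped, and history is trimmed rather than passed through wholesale.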

Here's a concrete example. Say you're building an AI scheduling assistant. The basic approach feeds it only the request: "Are you free for a quick sync tomorrow?"

The context engineering approach? Load the user's calendar. Pull in their email history with the sender. Add their scheduling preferences. Include available meeting tools. The difference is dramatic. You go from generic bot responses to actually helpful, personalized assistance like: "Hey Jim! Tomorrow's packed for me, back-to-back all day. How about Thursday morning? I've sent an invite—let me know if that works."
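Here is a rough sketch of the two approaches side by side, in Python. The function names, parameters, and sample data are all hypothetical, and in a real assistant the calendar, emails, and preferences would come from actual integrations rather than hard-coded values.

```python
from datetime import date


def naive_prompt(request: str) -> str:
    """The basic approach: the model sees only the incoming message."""
    return request


def engineered_prompt(request, sender, calendar, past_emails, preferences, tools):
    """Surround the same request with what the assistant needs to answer well.

    `calendar` maps a date to a list of event descriptions (empty list = free).
    """
    lines = [f"Incoming message from {sender}: {request}", "", "Calendar:"]
    for day in sorted(calendar):
        events = calendar[day]
        busy = ", ".join(events) if events else "free"
        lines.append(f"- {day.isoformat()}: {busy}")
    lines += ["", f"Recent emails with {sender}:"] + [f"- {e}" for e in past_emails]
    lines += ["", "Scheduling preferences:"] + [f"- {p}" for p in preferences]
    lines += ["", "Tools you may call:"] + [f"- {t}" for t in tools]
    return "\n".join(lines)


if __name__ == "__main__":
    # Made-up sample data, for illustration only.
    prompt = engineered_prompt(
        request="Are you free for a quick sync tomorrow?",
        sender="Jim",
        calendar={
            date(2025, 7, 2): ["9-12 planning", "1-5 customer calls"],
            date(2025, 7, 3): [],
        },
        past_emails=["Jim asked about the Q3 roadmap last week"],
        preferences=["Prefers morning meetings", "Keeps syncs under 30 minutes"],
        tools=["send_invite(attendee, start, duration)"],
    )
    print(prompt)
```

With the naive version, the model has no way of knowing tomorrow is full. With the engineered version, a packed Wednesday and an open Thursday are sitting right there in the input, which is what makes a reply like the one above possible.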

The Future of This Career Path

Call it prompt engineering or context engineering. Either way, this skill is critical for the future of AI development.

Walden Yan from Cognition AI calls it "the #1 job of engineers building AI agents" in 2025. He's right. The bottleneck isn't model intelligence anymore. It's whether the model has the right context to solve the problem.

The field is maturing rapidly. We're moving from "vibe coding" (iterating until it feels right) to a systematic engineering discipline. Companies like ours are building entire platforms around context management.

Here's what's particularly exciting: context engineering connects domain experts with AI capabilities. Building agents is not just technical. It's about encoding how your organization works into AI inputs. Doctors are building medical chatbots. Lawyers are creating legal assistants. Teachers are developing personalized tutors. They don't need to be AI experts. They just need to engineer the right context.

The terminology might continue evolving. But the core challenge remains constant. How do we give AI everything it needs to solve real problems?

...

For what it's worth, I still like "PROMPT ENGINEERING". Plus, we already invested in the hats.