What Is a Good R-Squared Value?

The coefficient of determination (R²) is one of the most commonly reported statistics in regression analysis, yet its interpretation remains a source of confusion for many researchers and analysts. This guide provides a plain-English explanation of what R² actually measures, why "good" is entirely contextual, field-specific benchmarks, and practical advice for judging your own R² values. Understanding these nuances prevents misinterpretation, helps avoid overfitting traps, and ultimately supports better decision-making in your analysis.

What R² Actually Measures in Plain English

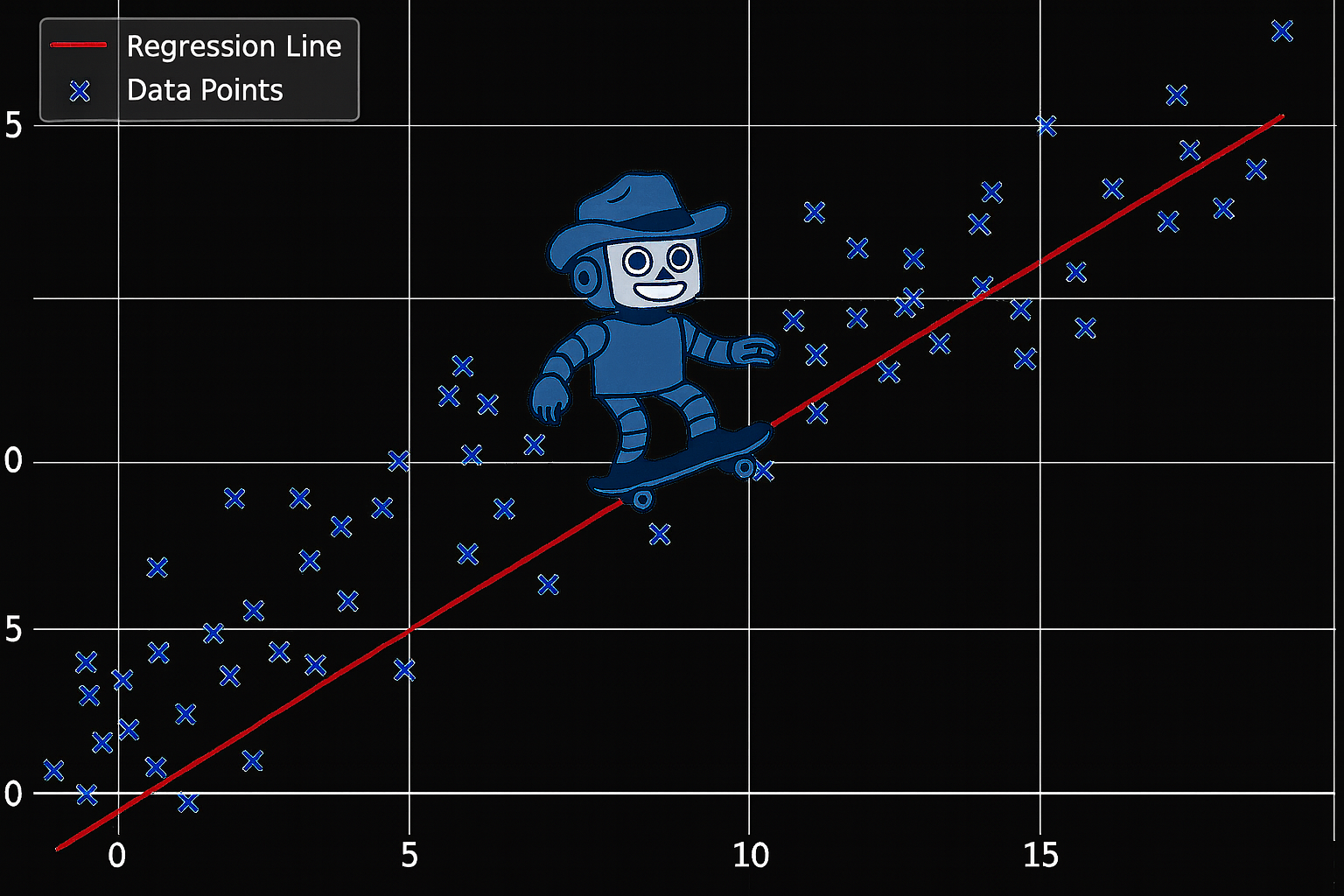

At its core, R² represents the proportion of variance in your outcome variable that's explained by your model. Think of it as answering the question: "How much of the variation in what I'm trying to predict can be accounted for by my predictors?"

The formula is straightforward: R² = 1 − (SS_res / SS_tot), where SS_res is the sum of squared residuals (the variation your model leaves unexplained) and SS_tot is the total sum of squared deviations of the outcome from its mean. When your model predicts every data point perfectly, SS_res is zero, giving you R² = 1. When your model does no better than simply predicting the mean value for everything, SS_res equals SS_tot and R² = 0.
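To make the formula concrete, here is a minimal sketch using only the Python standard library. The function name `r_squared` is illustrative rather than taken from any particular package; it computes 1 − SS_res / SS_tot with the mean of the observed values as the baseline, which is the same definition scikit-learn's `r2_score` uses.

```python
from statistics import fmean

def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_y = fmean(y_true)
    # Unexplained variation: squared errors of the model's predictions
    ss_res = sum((y - p) ** 2 for y, p in zip(y_true, y_pred))
    # Total variation: squared deviations from the mean (the baseline "model")
    ss_tot = sum((y - mean_y) ** 2 for y in y_true)
    return 1 - ss_res / ss_tot

y = [3.0, 5.0, 7.0, 9.0]
print(r_squared(y, y))                     # perfect predictions -> 1.0
print(r_squared(y, [6.0, 6.0, 6.0, 6.0]))  # always predict the mean (6.0) -> 0.0
print(r_squared(y, [9.0, 7.0, 5.0, 3.0]))  # worse than the mean -> -3.0
```

The last line previews a point made below: nothing in the formula stops R² from dropping below zero when predictions are worse than the mean.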

Key technical points to remember:

- R² ranges from 0 to 1 for an ordinary least squares fit with an intercept, evaluated on the same data it was fit to

- In simple linear regression with one predictor, R² equals the square of the Pearson correlation coefficient (r²)

- With multiple predictors, R² never decreases as you add variables, which is why adjusted R² exists to penalize unnecessary complexity

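- For classification models such as logistic regression, you'll encounter pseudo-R² values (McFadden's R², for example) that aren't directly comparable to linear regression R²

- Surprisingly, R² can be negative, for example when a model is fit without an intercept or evaluated on new data, because in those cases the model can perform worse than the mean baseline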

Why "Good" Depends on Context and Goal

The most critical insight about R² is that there's no universal threshold for "good". What constitutes an acceptable R² depends on three key factors:

Field noise and measurement precision drive what's achievable. In a physics lab measuring the relationship between force and acceleration, you might expect R² > 0.95 because measurements are precise and relationships are deterministic. In contrast, predicting human behavior involves countless unmeasured factors, making R² = 0.30 potentially impressive.

Your purpose matters enormously. If you're building a model for prediction (like forecasting sales), you typically want higher R² values because accuracy is paramount. But if you're conducting exploratory research to understand relationships between variables, even modest R² values can reveal meaningful insights when predictors are statistically significant.

Always compare to baselines in your specific domain. An R² of 0.60 might be disappointing in engineering but groundbreaking in sociology.

Benchmarks by Field (Rules of Thumb, Not Absolutes)

While every dataset is unique, certain patterns emerge across disciplines:

Physical Sciences and Engineering

In well-controlled experimental settings, R² values often exceed 0.90. Linear relationships in physics frequently produce R² approaching 1.0. When systems follow known physical laws with precise measurements, anything below 0.80 might signal measurement error or model misspecification.

Finance

Investment analysis uses specific thresholds: R² of 85-100% indicates very high alignment between a fund and its benchmark index. Values below 70% suggest low correlation. For general financial modeling, R² > 0.70 is considered strong, while R² < 0.40 is typically viewed as low.

Social and Behavioral Sciences

Human behavior introduces tremendous variability. Here, R² between 0.10 and 0.30 is often acceptable, especially when predictors show statistical significance. Values from 0.30 to 0.50 are considered very good. Even R² = 0.10 can be meaningful if it helps identify important relationships between variables.

Medical and Clinical Research

A comprehensive review of over 43,000 medical papers found an average R² of approximately 0.50, though many studies reported values near zero. Recent guidelines suggest R² > 0.15 is generally meaningful in clinical research. The high variability reflects the complexity of biological systems and measurement challenges.

Machine Learning

ML practitioners focus on out-of-sample performance. In noisy real-world datasets, R² of 0.30-0.50 on test data can be competitive. Synthetic or well-engineered datasets might approach R² = 1.0. Remember: only compare R² values across models using the same dataset and target variable.

Critical warning: These are rough guidelines, not rigid rules. Your specific context always takes precedence.

How to Judge Your R² in Practice

Beyond comparing to field benchmarks, follow these practical steps:

Validate Properly

Always check cross-validated or hold-out R² rather than training R². A negative test R² indicates your model performs worse than simply predicting the mean, a clear sign of overfitting or model misspecification.

Control for Complexity

When comparing models with different numbers of predictors, use adjusted R², which penalizes additional variables. Watch for signs of overfitting: if training R² is much higher than test R², you've likely fit noise rather than signal.
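Adjusted R² is computed as 1 − (1 − R²)(n − 1) / (n − p − 1), where n is the number of observations and p the number of predictors (not counting the intercept). The sketch below implements that formula and a simple train/test gap check; the function names and the 0.1 tolerance are arbitrary illustrative choices, not a standard threshold.

```python
def adjusted_r2(r2, n, p):
    """Adjusted R² for n observations and p predictors (excluding the intercept)."""
    if n - p - 1 <= 0:
        raise ValueError("need more observations than predictors + 1")
    return 1 - (1 - r2) * (n - 1) / (n - p - 1)

def overfitting_gap(train_r2, test_r2, tolerance=0.1):
    """Flag a training-to-test R² drop larger than an (arbitrary) tolerance."""
    return train_r2 - test_r2 > tolerance

# Same raw R², more predictors -> lower adjusted R²
print(round(adjusted_r2(0.50, n=50, p=2), 4))   # -> 0.4787
print(round(adjusted_r2(0.50, n=50, p=10), 4))  # -> 0.3718

print(overfitting_gap(0.92, 0.41))  # -> True: large drop on held-out data
print(overfitting_gap(0.70, 0.66))  # -> False
```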

Use Complementary Metrics

R² alone never tells the full story. Also report:

- RMSE (Root Mean Square Error) or MAE (Mean Absolute Error) for prediction accuracy

- Residual plots to check assumptions and identify patterns

- Confidence intervals and p-values for inference
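RMSE and MAE are simple to compute alongside R², and the residuals they are built from are exactly what a residual plot displays. A minimal standard-library sketch with made-up numbers (the helper names are illustrative):

```python
from math import sqrt
from statistics import fmean

def residuals(y_true, y_pred):
    """Observed minus predicted; plot these against y_pred to look for patterns."""
    return [y - p for y, p in zip(y_true, y_pred)]

def mae(y_true, y_pred):
    """Mean absolute error, in the same units as y."""
    return fmean([abs(e) for e in residuals(y_true, y_pred)])

def rmse(y_true, y_pred):
    """Root mean squared error; penalizes large errors more heavily than MAE."""
    return sqrt(fmean([e ** 2 for e in residuals(y_true, y_pred)]))

y_true = [3.0, 5.0, 7.0]
y_pred = [2.0, 5.0, 9.0]
print(residuals(y_true, y_pred))       # -> [1.0, 0.0, -2.0]
print(mae(y_true, y_pred))             # -> 1.0
print(round(rmse(y_true, y_pred), 3))  # -> 1.291
```

Unlike R², both error metrics are expressed in the outcome's own units, which makes them easier to judge against practical tolerances.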

Remember What R² Doesn't Tell You

R² measures association, not causation. Two variables can show a high R² without any causal relationship. Additionally, R² indicates effect size but not statistical significance: with a small sample, you can have a high R² alongside non-significant predictors.
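The small-sample point can be checked with the slope t statistic for a one-predictor regression, t = √(R²(n − 2) / (1 − R²)). This sketch assumes simple linear regression and hard-codes two-sided 5% critical values from standard t tables rather than computing them (`scipy.stats.t.ppf` could do that if SciPy is available).

```python
from math import sqrt

def slope_t_stat(r2, n):
    """|t| for the slope in simple linear regression, from R² and sample size n."""
    return sqrt(r2 * (n - 2) / (1 - r2))

# Two-sided 5% critical values of Student's t, keyed by degrees of freedom (n - 2)
T_CRIT_05 = {2: 4.303, 28: 2.048}

for n in (4, 30):
    t = slope_t_stat(0.80, n)
    significant = t > T_CRIT_05[n - 2]
    print(f"n={n}: R²=0.80, t={t:.2f}, significant at 5%: {significant}")
```

The same R² = 0.80 is not significant with 4 observations (t ≈ 2.83 < 4.303) but is clearly significant with 30 (t ≈ 10.58 > 2.048).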

Watch for Data Issues

Outliers and high-leverage points can dramatically inflate or deflate R². Consider:

- Checking for influential observations

- Testing for nonlinear relationships that linear models miss

- Applying transformations when appropriate

Check Calibration

A high R² doesn't guarantee unbiased predictions. Your model might consistently over- or under-predict while still explaining variance. Always examine whether predictions are systematically biased.
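A minimal calibration check is the mean prediction error (sometimes called calibration-in-the-large). In this made-up example every prediction is 3 units too high, yet R² is still 0.955 because the predictions track the spread of the data closely.

```python
from statistics import fmean

def r_squared(y_true, y_pred):
    """1 - SS_res / SS_tot, using the mean of y_true as the baseline."""
    mean_y = fmean(y_true)
    ss_res = sum((y - p) ** 2 for y, p in zip(y_true, y_pred))
    ss_tot = sum((y - mean_y) ** 2 for y in y_true)
    return 1 - ss_res / ss_tot

y_true = [10.0, 20.0, 30.0, 40.0, 50.0]
y_pred = [y + 3.0 for y in y_true]  # systematic over-prediction by 3 units

r2 = r_squared(y_true, y_pred)
mean_bias = fmean([p - y for y, p in zip(y_true, y_pred)])  # > 0 means over-prediction
print(round(r2, 3))  # -> 0.955
print(mean_bias)     # -> 3.0
```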

Beyond the Numbers: Context-Driven R² Evaluation

The question "What is a good R² value?" has no simple answer, because it depends entirely on the domain and on the purpose of the model. An R² of 0.30 might be excellent in social science research but inadequate for engineering applications. Rather than seeking a universal threshold:

- Benchmark against your specific field and similar studies

- Validate on new data to ensure the model generalizes

- Pair R² with adjusted R² and other diagnostic metrics

- Consider whether your goal is prediction or explanation

- Check all model assumptions, not just variance explained

R² is just one piece of the model evaluation puzzle. A thoughtful analysis considers multiple metrics, validates properly, and interprets results within the appropriate context. By understanding these nuances, you'll avoid common pitfalls and make better-informed decisions about your regression models.

PromptLayer is an end-to-end prompt engineering workbench for versioning, logging, and evals. Engineers and subject-matter-experts team up on the platform to build and scale production ready AI agents.

Made in NYC 🗽

Sign up for free at www.promptlayer.com 🍰