Unstable Diffusion: The Uncensored AI Silicon Valley Won't Touch

In August 2022, a Discord community launched what would become one of AI's most controversial projects: an "uncensored" image generator that grew to 350,000+ users generating half a million images daily.

Yet it struggled to keep raising money on mainstream platforms.

This is the story of Unstable Diffusion, an AI platform that fine-tunes Stable Diffusion models specifically for adult content generation. What began as a subreddit and soon moved to Discord has become a lightning rod for debates about AI innovation, creative freedom, and society's struggles with content moderation, consent, and artistic rights.

From Discord Experiment to Contested Platform

The rapid ascent of Unstable Diffusion reads like a Silicon Valley startup story, except traditional Silicon Valley won't touch it.

Within weeks of Stable Diffusion's open-source release in August 2022, a community of enthusiasts had coalesced around a singular goal: creating AI-generated adult content without restrictions.

The Funding Rollercoaster

The funding journey reveals the platform's precarious position in the tech ecosystem:

- Patreon (Nov 2022): ~$2,500 monthly from crowdfunding

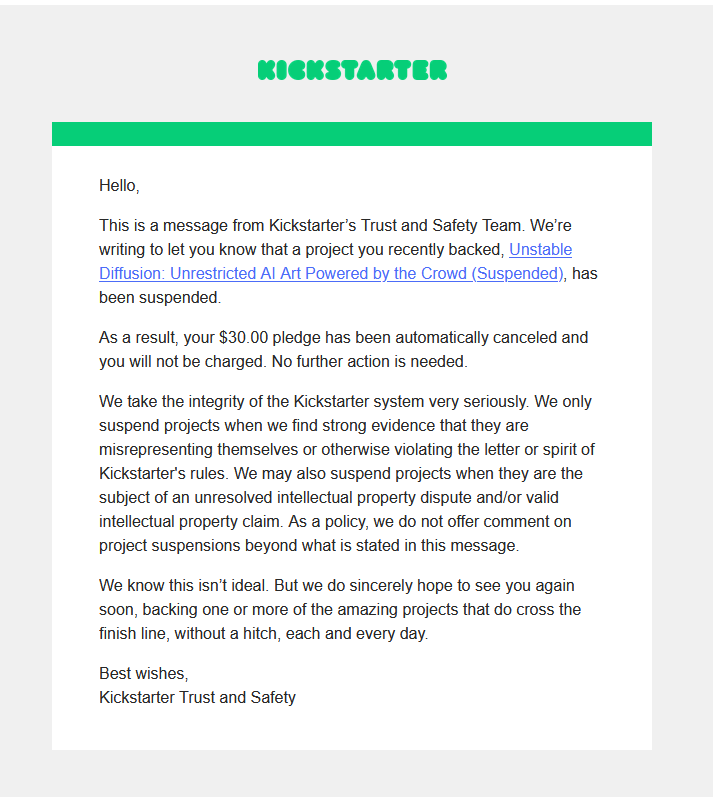

- Kickstarter (Dec 2022): 867 backers pledge $56,000 before suspension

- Direct fundraising (Mid-2023): ~$26,000 raised through Stripe

Kickstarter abruptly suspended the campaign, citing new AI content guidelines.

This forced a dramatic pivot. Unable to use mainstream crowdfunding platforms, the team turned to direct fundraising through its own website. The $26,000 raised was a fraction of what a traditional AI startup might secure in seed funding.

Current Scale

Despite financial constraints, the platform's growth has been extraordinary:

- 350,000+ users

- 500,000+ images generated per day

- 4 to 4.3 million images produced by late 2022 alone

The service operates through both a web application with custom-trained models and the original Discord bot, maintaining separate channels for "safe for work" and "not safe for work" content.

The Technology Behind the Controversy

Understanding Unstable Diffusion requires grasping both its technical sophistication and its fundamental departure from mainstream AI image generators. At its core, the platform uses the same latent diffusion architecture that powers Stable Diffusion: a U-Net neural network that denoises latent image representations, guided by a CLIP text encoder and a variational autoencoder (VAE) that maps between images and compressed latents.
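To make that pipeline concrete, here is a toy, standard-library-only sketch of how sampling works in latent space. It assumes Stable Diffusion v1-style settings (a VAE that downsamples each side by 8 with 4 latent channels, and a "scaled linear" noise schedule from 0.00085 to 0.012 over 1,000 steps) and uses deterministic DDIM updates. The U-Net is replaced by a hypothetical `oracle_noise_predictor` that already knows the target latent, so the loop can be checked end to end. A real U-Net would instead predict the noise from the latent, the timestep, and CLIP text embeddings, and the VAE decoder would turn the final latent into pixels. None of this is Unstable Diffusion's own code.

```python
import math
import random

# Assumption: Stable Diffusion v1-style VAE, which shrinks each image side by 8x
# and stores 4 channels per latent "pixel".
VAE_DOWNSAMPLE = 8
LATENT_CHANNELS = 4


def latent_shape(height, width):
    """(channels, h, w) of the latent the U-Net denoises for an RGB image."""
    if height % VAE_DOWNSAMPLE or width % VAE_DOWNSAMPLE:
        raise ValueError("image sides must be multiples of 8")
    return (LATENT_CHANNELS, height // VAE_DOWNSAMPLE, width // VAE_DOWNSAMPLE)


def make_alpha_bars(num_train_steps=1000, beta_start=0.00085, beta_end=0.012):
    """Cumulative signal fractions alpha_bar_t for an assumed 'scaled linear' beta schedule."""
    lo, hi = math.sqrt(beta_start), math.sqrt(beta_end)
    alpha_bars, prod = [], 1.0
    for i in range(num_train_steps):
        beta = (lo + (hi - lo) * i / (num_train_steps - 1)) ** 2
        prod *= 1.0 - beta
        alpha_bars.append(prod)
    return alpha_bars


def ddim_sample(predict_noise, x, alpha_bars, num_inference_steps=50):
    """Deterministic DDIM (eta = 0): estimate the clean latent, then step toward it."""
    step = len(alpha_bars) // num_inference_steps
    for t in range(len(alpha_bars) - 1, -1, -step):
        a_t = alpha_bars[t]
        a_prev = alpha_bars[t - step] if t - step >= 0 else 1.0  # final step: fully clean
        eps = predict_noise(x, t)
        s_t, n_t = math.sqrt(a_t), math.sqrt(1.0 - a_t)
        s_prev, n_prev = math.sqrt(a_prev), math.sqrt(1.0 - a_prev)
        x0 = [(xi - n_t * ei) / s_t for xi, ei in zip(x, eps)]      # predicted clean latent
        x = [s_prev * x0i + n_prev * ei for x0i, ei in zip(x0, eps)]  # re-noise to level t-step
    return x


def oracle_noise_predictor(target, alpha_bars):
    """Hypothetical stand-in for the U-Net: returns the exact noise separating x from a
    known target latent. A real U-Net would also receive CLIP text embeddings."""
    def predict(x, t):
        s_t, n_t = math.sqrt(alpha_bars[t]), math.sqrt(1.0 - alpha_bars[t])
        return [(xi - s_t * yi) / n_t for xi, yi in zip(x, target)]
    return predict


if __name__ == "__main__":
    c, h, w = latent_shape(512, 512)
    print((c, h, w), c * h * w, 512 * 512 * 3)  # (4, 64, 64) 16384 786432
    rng = random.Random(0)
    target = [rng.gauss(0.0, 1.0) for _ in range(c * h * w)]  # pretend "clean" latent
    start = [rng.gauss(0.0, 1.0) for _ in range(c * h * w)]   # pure Gaussian noise
    alpha_bars = make_alpha_bars()
    out = ddim_sample(oracle_noise_predictor(target, alpha_bars), start, alpha_bars)
    print(max(abs(a - b) for a, b in zip(out, target)) < 1e-9)  # True
```

The shape arithmetic shows why the VAE matters: a 512×512 RGB image holds 786,432 values, while its latent holds 16,384, a 48x reduction that makes every U-Net pass in the sampling loop far cheaper.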

The critical innovation, and the source of the controversy, lies in the training data. Only about 2.9% of Stable Diffusion's original dataset was NSFW content, so the base model produced poor-quality explicit images with distorted anatomy and odd proportions. Unstable Diffusion took a radically different approach: the team assembled a dataset of over 30 million adult images, carefully curated and labeled by community volunteers.
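In mechanical terms, fine-tuning usually keeps the base model's architecture and training objective and changes only the data. The generic latent diffusion objective is shown below, with $\mathcal{E}$ the VAE encoder, $\tau$ the CLIP text encoder, $\epsilon_\theta$ the U-Net, $(x, c)$ an image-caption pair drawn from a dataset $\mathcal{D}$, and $\bar\alpha_t$ the noise schedule. This is the standard formulation, assumed here rather than taken from any published description of Unstable Diffusion's training:

$$
z_t = \sqrt{\bar\alpha_t}\,\mathcal{E}(x) + \sqrt{1 - \bar\alpha_t}\,\epsilon,
\qquad
\mathcal{L}(\theta) = \mathbb{E}_{(x, c) \sim \mathcal{D},\ \epsilon \sim \mathcal{N}(0, I),\ t}\left[\big\lVert \epsilon - \epsilon_\theta\big(z_t, t, \tau(c)\big) \big\rVert_2^2\right]
$$

Fine-tuning initializes $\theta$ from the released Stable Diffusion weights and minimizes the same loss with $\mathcal{D}$ replaced by the curated dataset, so the model's sense of what a plausible image looks like shifts toward that data.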

This specialized training has produced four distinct models, each fine-tuned for different aesthetic preferences:

- Merlin: A general-purpose model for varied adult content

- Echo: Optimized for photorealistic outputs

- Izanagi: Specialized in anime and manga styles

- Pan: Focused on anthropomorphic and furry content

The technical achievements are significant. Because each model is trained on a specific type of adult content, the models produce notably better anatomical accuracy and stylistic consistency than general-purpose models prompted for explicit imagery. The platform's Hugging Face profile confirms that data collection and model training were community-driven efforts, making this one of the largest coordinated attempts to build specialized AI models outside of major tech companies.

Yet technical limitations persist, revealing the inherent challenges of the task. Simple prompts produce passable results, but complex scenes involving multiple people, intricate poses, or detailed interactions often fail spectacularly. Common artifacts include:

- Misaligned limbs

- Abnormal proportions

- Extra appendages

- Bizarre facial expressions

These issues stem from the fundamental complexity of human anatomy and the limitations of current diffusion architectures when pushed beyond their training distribution.

The platform also faces significant computational constraints. High-resolution image generation requires expensive GPU resources, leading to a credit-based system where free users receive 12 fast generations daily before needing to subscribe. Under heavy load, image generation can take up to a minute, illustrating the tension between accessibility and sustainability.
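Mechanically, a free tier like this amounts to a per-user counter. A minimal sketch, assuming the allowance resets at each calendar day and that users who exhaust it are simply prompted to subscribe (both assumptions; `DailyQuota` and `request` are hypothetical names, not the platform's code):

```python
from dataclasses import dataclass, field
from datetime import date

# Free-tier allowance reported for the platform; the reset rule below is an assumption.
FREE_FAST_GENERATIONS_PER_DAY = 12


@dataclass
class DailyQuota:
    """Hypothetical per-user counter of free 'fast' generations."""
    limit: int = FREE_FAST_GENERATIONS_PER_DAY
    used: int = 0
    day: date = field(default_factory=date.today)

    def request(self, today: date) -> str:
        """Return 'fast' while the daily allowance lasts, then 'subscribe'."""
        if today != self.day:  # assumed: allowance resets on each new calendar day
            self.day, self.used = today, 0
        if self.used < self.limit:
            self.used += 1
            return "fast"
        return "subscribe"


if __name__ == "__main__":
    quota = DailyQuota(day=date(2023, 1, 1))
    answers = [quota.request(date(2023, 1, 1)) for _ in range(13)]
    print(answers.count("fast"), answers[-1])  # 12 subscribe
    print(quota.request(date(2023, 1, 2)))     # fast (new day, counter reset)
```

Keeping the counter separate from the generation queue is what lets a service cap how much GPU time free users consume; a production version would also need persistent storage and a defined reset timezone.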

The Ethics Dilemma

Unstable Diffusion exists at the intersection of numerous ethical fault lines in AI development. The most pressing concern is the platform's potential for creating nonconsensual intimate images, a danger the wider AI ecosystem made vivid in early 2023, when popular Twitch streamer "Atrioc" was caught viewing AI-generated deepfake pornography of female streamers. The incident highlighted how easily the technology can be weaponized for harassment.

The platform's initial promise of being completely "uncensored" quickly collided with reality. After reports emerged of celebrity deepfakes slipping through filters, Unstable Diffusion implemented AI-powered moderation systems and explicit policies banning:

- Content featuring real people

- Content featuring minors

- Extreme violence

This evolution from libertarian ideals to practical restrictions mirrors broader challenges in content moderation across tech platforms.

Copyright and artistic consent present another thorny issue. Like other diffusion models, Unstable Diffusion trained on vast amounts of web-scraped artwork without individual permission. Many adult artists worry their distinctive styles and original works were incorporated without consent or compensation. While the team has expressed vague intentions to "give back" to artists, concrete plans remain elusive. Some artistic communities have responded with outright bans:

- FurAffinity: Banned AI-generated art entirely

- Newgrounds: Banned AI-generated art entirely

The platform's outputs also amplify concerning biases present in its training data. Reported testing suggests that without explicit prompting for diversity, the AI defaults to generating conventionally attractive, young, thin women, predominantly white or Asian. When prompted for professional scenarios without specific demographic indicators, the system often produces stereotypical imagery, such as Asian women in submissive poses for "secretary" prompts. These biases reflect and potentially reinforce narrow beauty standards and problematic stereotypes prevalent in mainstream pornography.

Gender and social implications extend beyond mere representation. Experts like Ravit Dotan of Mission Control warn that AI-generated pornography could establish "unreasonable expectations on women's bodies" while economically threatening sex workers and adult content creators. The perfect, endlessly customizable nature of AI-generated content may reshape consumer expectations in harmful ways.

Questions Without Answers

Unstable Diffusion exemplifies AI's double-edged nature: powerful tools for creative expression that simultaneously enable harm. What began as a technical experiment in removing content filters has evolved into a complex platform navigating between user demands, legal requirements, and ethical considerations.

The platform currently exists in a legal gray area. While most jurisdictions lack comprehensive regulation of AI-generated adult content, the landscape is shifting rapidly. Most U.S. states prohibit sharing nonconsensual intimate images, but only a handful have statutes that explicitly cover AI-generated or deepfake imagery, and broader legislation seems inevitable as deepfake technology becomes more sophisticated and accessible.

The future of Unstable Diffusion remains deeply uncertain. It may continue operating as a niche platform serving users who feel constrained by mainstream alternatives, gradually implementing more restrictions as legal and social pressures mount. Alternatively, it could face existential challenges as governments worldwide grapple with regulating AI-generated content, particularly involving consent and identity rights.

The broader implications extend far beyond one controversial platform. Unstable Diffusion forces us to confront fundamental questions about the AI age: How do we balance innovation with protection from harm? Where do artistic freedom and personal rights intersect? Who decides what content AI should or shouldn't create?

As AI capabilities advance rapidly, these questions become increasingly urgent. Unstable Diffusion may be operating in the shadows of mainstream tech, but the dilemmas it raises will soon emerge into the full light of public policy and social discourse. The platform's trajectory, whether toward greater legitimacy or further marginalization, may preview how society chooses to handle the awesome and troubling power of artificial intelligence to create any image we can imagine, and many we perhaps shouldn't.