Top 5 Prompt Engineering Tools for Evaluating Prompts

Enjoy our list of top prompt engineering tools. Each allows you to test, analyze, and improve your prompt engineering workflow.

Equipping your team with the right tools can save you time and keep your organization on the bleeding edge of generative AI. We've highlighted the services, pros, and cons of the leading prompt engineering tools on the market today.

1) PromptLayer

Designed for prompt management, collaboration, and evaluation.

Services:

- Visual Prompt Management: User-friendly interface to write, organize, and improve prompts.

- Version Control: Edit and deploy prompt versions visually. No coding required.

- Testing and Evaluation: Run A/B tests to compare models, evaluate performance, and compare results.

- Usage Monitoring: Monitor usage statistics, understand latency trends, and manage execution logs.

- Team Collaboration: Allows non-technical team members to easily work with engineering.
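To make the A/B testing idea in the list above concrete, here is a minimal sketch in plain Python. It is not PromptLayer's API: `ab_test`, `fake_model`, and `one_word_score` are hypothetical names, and the model is a stub so the example runs without an API key. In practice you would swap in a real model call and a scoring function that fits your task.

```python
from statistics import mean
from typing import Callable, Dict, List


def ab_test(
    prompts: Dict[str, str],
    inputs: List[str],
    run_model: Callable[[str], str],
    score: Callable[[str, str], float],
) -> Dict[str, float]:
    """Return the mean score of each prompt variant over the same inputs."""
    results = {}
    for name, template in prompts.items():
        scores = []
        for text in inputs:
            output = run_model(template.format(input=text))
            scores.append(score(text, output))
        results[name] = mean(scores)
    return results


# Hypothetical stand-in for a model call, so the sketch runs offline.
def fake_model(prompt: str) -> str:
    # Pretends the model only follows an explicit "one word" instruction.
    return "positive" if "one word" in prompt else "I think it is positive."


def one_word_score(_input: str, output: str) -> float:
    # 1.0 when the output is a single word, otherwise 0.0.
    return 1.0 if len(output.split()) == 1 else 0.0


variants = {
    "A": "Classify the sentiment: {input}",
    "B": "Classify the sentiment in one word: {input}",
}
report = ab_test(variants, ["Great product", "Loved it"], fake_model, one_word_score)
print(report)
```

Running both variants over the same inputs is what makes the comparison fair: any difference in the mean score comes from the prompt wording, not from the test data.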

Pros:

- Optimized Experience: Facilitates prompt workflows with management tools and robust interfaces.

- Collaboration-First: Allows shared access and feedback across teams.

- Versatile Integrations: Supports integrations with most popular LLM frameworks and abstractions.

Cons:

- Niche Specialization: May be less useful for generalists outside the prompt engineering niche.

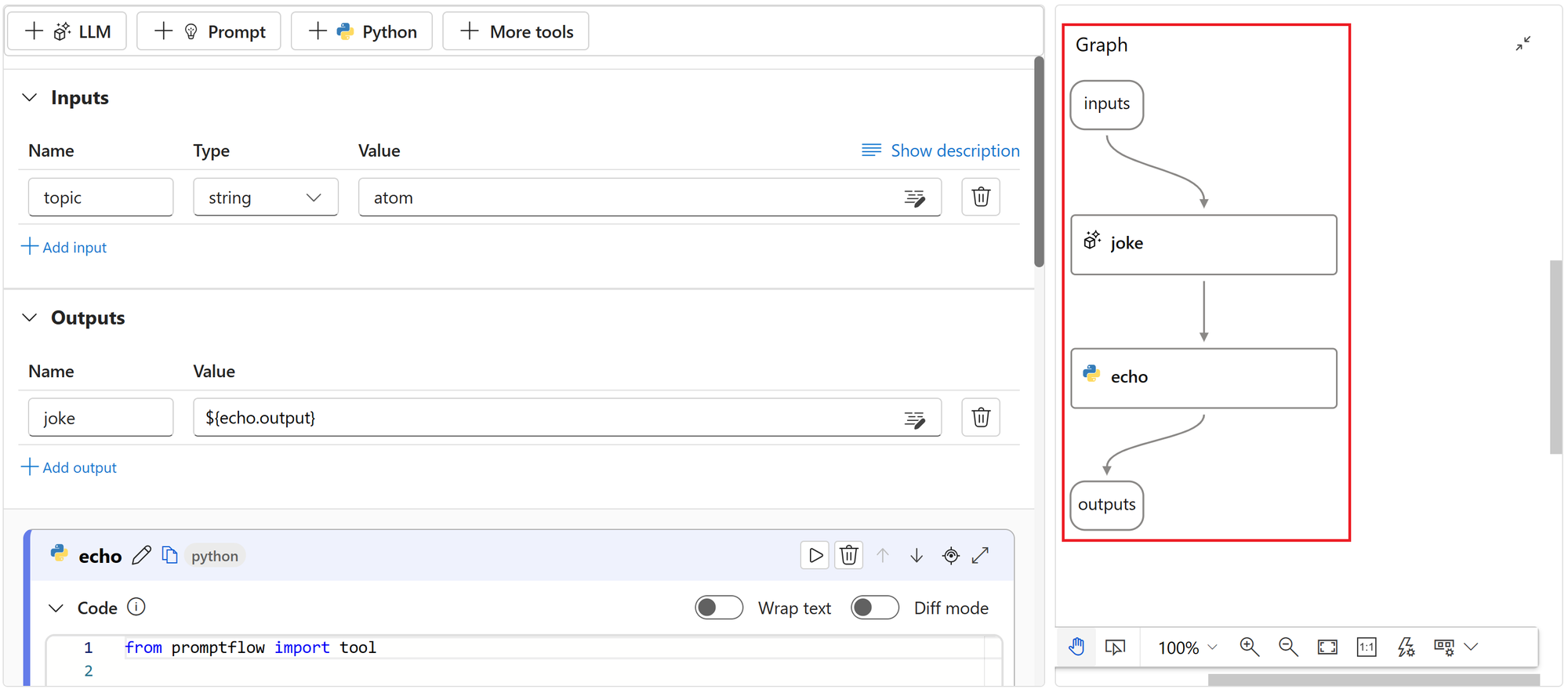

2) PromptFlow (Azure)

Designed to test, analyze, and update prompts in the Azure ecosystem.

Services:

- Prompt Management: Organize and manage prompts.

- Analytics: Offers metrics and visualization of prompt performance.

- Collaboration: Multi-user collaboration for prompt management.

- Version Control: Tracks and manages different prompt versions.

- Deployment Support: Allows deployment into production environments.

Pros:

- Comprehensive: Offers a range of services to manage, test, and optimize prompts.

- Azure Integrated: Tight integration with Azure's ecosystem.

- Collaboration: Supports multiple users working together.

Cons:

- Azure-Dependent: Requires users to be within Microsoft’s Azure ecosystem.

- Complexity: Has a steep learning curve for people unfamiliar with Azure.

- Cost: Pricing can be high compared to other tools.

3) LangSmith

Designed to build, test, and monitor LLM applications.

Services:

- LLM Monitoring: Track the performance of LLMs across various applications.

- Debugging: Inspect the chain of calls to identify errors.

- Testing and Evaluation: Run tests on LLMs to assess performance.

- Cost Tracking: Monitor and manage costs.

- Integration with LangChain: Integrates directly with LangChain.
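The debugging service above boils down to recording each step in a chain of calls so that a failure can be traced to the step that caused it. The sketch below illustrates that idea with a plain Python decorator. It is not LangSmith's SDK: `traced`, `TRACE`, and the three pipeline functions are hypothetical names, and the failure is simulated.

```python
import functools
import time
from typing import Any, Callable, Dict, List

TRACE: List[Dict[str, Any]] = []  # hypothetical in-memory trace store
_depth = 0  # current nesting level of traced calls


def traced(fn: Callable) -> Callable:
    """Record name, nesting depth, duration, and any error for each call."""

    @functools.wraps(fn)
    def wrapper(*args, **kwargs):
        global _depth
        record = {"name": fn.__name__, "depth": _depth, "error": None}
        TRACE.append(record)  # appended before the call, so parents precede children
        _depth += 1
        start = time.perf_counter()
        try:
            return fn(*args, **kwargs)
        except Exception as exc:
            record["error"] = repr(exc)
            raise
        finally:
            record["seconds"] = time.perf_counter() - start
            _depth -= 1

    return wrapper


@traced
def retrieve(question: str) -> str:
    return "context for: " + question


@traced
def generate(context: str) -> str:
    raise ValueError("model returned empty output")  # simulated failure


@traced
def pipeline(question: str) -> str:
    return generate(retrieve(question))


try:
    pipeline("What is a prompt?")
except ValueError:
    pass

# Print the call tree with the status of each step.
for r in TRACE:
    print("  " * r["depth"] + r["name"], r["error"] or "ok")
```

The printed tree shows `retrieve` succeeding and `generate` failing inside `pipeline`, which is the kind of view a tracing tool gives you for a real chain.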

Pros:

- End-to-End Solution: Go from prototype to production for your applications.

- Evaluation Capabilities: Extensive testing and evaluation for a variety of datasets.

- Debugging: Easily traces the flow of information to identify errors.

Cons:

- LangChain-Centric: The tightest integration is with LangChain, so teams using other frameworks may get less out of it.

- Pricing: Higher costs compared to other prompt engineering tools.

- Enterprise Scalability: May suit smaller teams better than larger organizations.

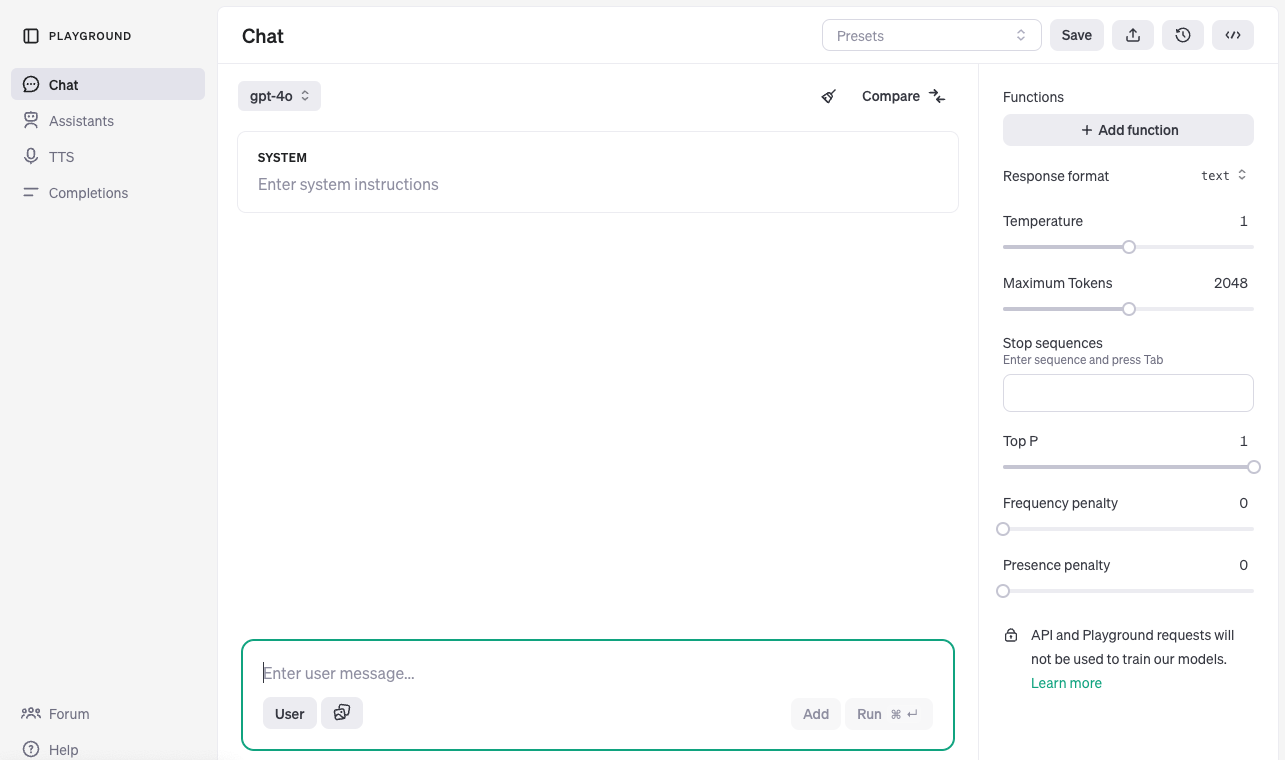

4) OpenAI Playground

Designed to test and customize prompts with OpenAI’s models.

Services:

- Build Prompts: Create and modify prompts with real-time responses using different models.

- Parameter Adjustment: Customize settings like temperature, maximum tokens, and model selection.

- Prompt Templates: Offers pre-built templates.

- API Integration: Integrates with OpenAI’s API to test prompts before deploying to applications.

- Compare Prompts: Test prompts side-by-side to analyze differences.
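The parameter settings in the list above map onto fields in an API request. The sketch below builds two request bodies for the same prompt with different settings, without calling any API. The field names (`model`, `messages`, `temperature`, `max_tokens`) follow the commonly used Chat Completions request shape, but treat them as an assumption and check the current API reference. `Settings`, `build_request`, and the model name `example-model` are hypothetical.

```python
from dataclasses import asdict, dataclass
from typing import Any, Dict


@dataclass(frozen=True)
class Settings:
    model: str
    temperature: float = 1.0
    max_tokens: int = 256

    def __post_init__(self) -> None:
        # Assumed valid range for temperature: 0 to 2.
        if not 0.0 <= self.temperature <= 2.0:
            raise ValueError("temperature must be between 0 and 2")
        if self.max_tokens < 1:
            raise ValueError("max_tokens must be positive")


def build_request(prompt: str, settings: Settings) -> Dict[str, Any]:
    """Combine a prompt and its settings into one request body."""
    body = asdict(settings)
    body["messages"] = [{"role": "user", "content": prompt}]
    return body


prompt = "Summarize this article in two sentences."
# Low temperature for more repeatable output, higher for more varied output.
precise = build_request(prompt, Settings(model="example-model", temperature=0.0, max_tokens=120))
creative = build_request(prompt, Settings(model="example-model", temperature=1.2, max_tokens=120))

print(precise)
print(creative)
```

Keeping the prompt fixed and changing one setting at a time makes it easier to see which parameter is responsible for a change in the output.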

Pros:

- User-Friendly: Accessible to both beginners and experts.

- Customization: Parameter settings allow detailed control.

- Model Choice: Access to OpenAI’s latest models.

Cons:

- Limited Features: Lacks comprehensive management and analytics.

- Dependency: Works only with OpenAI’s infrastructure and services.

- Learning Curve: Complex parameter options can require time and effort to understand.

5) Langfuse

Built to monitor, analyze, and optimize LLM applications.

Services:

- LLM Monitoring: Track the performance of LLMs across various applications.

- Prompt Analytics: Detailed metrics and visualizations of prompt performance.

- Error Logging: Log errors or unexpected outputs.

- Cost Tracking: Monitor and manage costs.

- Custom Dashboards: Personalized dashboards with KPIs and project-specific metrics.

- Security and Compliance: Secure environment that adheres to safety standards.
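Cost tracking, listed above, usually comes down to multiplying logged token counts by a per-token price and grouping the totals. Here is a minimal sketch of that arithmetic. It does not use Langfuse's SDK: the `Call` record, the model names, and every price in `PRICES` are hypothetical placeholders, since real prices vary by provider and model.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List

# Hypothetical prices in USD per 1,000 tokens.
PRICES = {
    "small-model": {"input": 0.001, "output": 0.002},
    "large-model": {"input": 0.01, "output": 0.03},
}


@dataclass
class Call:
    model: str
    input_tokens: int
    output_tokens: int


def call_cost(call: Call) -> float:
    """Cost of one logged call in USD."""
    price = PRICES[call.model]
    total = call.input_tokens * price["input"] + call.output_tokens * price["output"]
    return total / 1000


def cost_by_model(calls: List[Call]) -> Dict[str, float]:
    """Sum the cost of all logged calls, grouped by model."""
    totals: Dict[str, float] = defaultdict(float)
    for call in calls:
        totals[call.model] += call_cost(call)
    return dict(totals)


log = [
    Call("small-model", 1000, 500),
    Call("large-model", 2000, 1000),
    Call("small-model", 3000, 0),
]
totals = cost_by_model(log)
for model, usd in totals.items():
    print(f"{model}: ${usd:.4f}")
```

Grouping by model is only one option. The same loop could group by prompt version or by user if the log records those fields.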

Pros:

- Comprehensive: Compatible with a wide range of platforms and tools.

- Detailed Analytics: Robust analytics and visualization options.

- Customizable Dashboards: Create dashboards for your specific needs.

Cons:

- Learning Curve: Extensive features may be overwhelming for new users.

- Pricing: Higher costs compared to other prompt engineering tools.

- Complexity for Small-Scale: May add unnecessary complexity for smaller AI projects.

Select the right prompt engineering tool

You can improve your prompt engineering workflow with the right tool. Each tool listed above offers features and benefits specific to teams using generative AI. Whether you prioritize collaboration, analytics, or specific platform integrations, there's a tool on this list that will improve your process.

About PromptLayer

PromptLayer is a prompt management system that helps you iterate on prompts faster, further speeding up the development cycle! Use their prompt CMS to update a prompt, run evaluations, and deploy it to production in minutes. Check them out here. 🍰