Tool Calling with LLMs: How and when to use it?

It’s been about a year since OpenAI first released function calling capability for their language models. This update allowed LLMs to not only return text, but also call predefined functions with structured JSON arguments.

The primary motivation behind this feature seemed to be plugin support for ChatGPT, which required a structured method for invoking third-party plugins and handling their outputs. Function calling likely emerged as an internal solution that was subsequently released to the public, building upon the concept of a plugin “app store.”

Modern LLMs can respond to input with a combination of text, images, audio, or structured data. This article will discuss tool calling, which is a specific type of structured data response.

Tool calling (recently renamed from “function calling”) has become one of the most powerful tools in prompt engineering. It leverages an idiom that the language model inherently understands for invoking external actions, allowing you to communicate with the AI in concepts that it gets. This means you don’t have to explain functions and JSON structures in the prompt itself.

How Tool Calling Works

At its core, an LLM response can be text, an image, audio, or, with the introduction of function calling, a function invocation. A function call consists of two primary components:

- The function name

- A structured set of arguments, typically defined using JSONSchema

These arguments are then used to execute the specified function. The key benefit of this approach is simplicity: you offload the responsibility of producing and parsing structured output to model providers like OpenAI. Otherwise, you would need to tweak your prompt again and again until it reliably returns structured output.
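To make the two components concrete, here is a minimal sketch of the receiving side: the model hands back a function name plus a JSON string of arguments, and your code looks up the matching function and calls it. The `get_weather` tool, the `TOOLS` registry and `execute_tool_call` are hypothetical names for illustration, not part of any provider's SDK.

```python
import json
from typing import Any, Callable, Dict

# Hypothetical tool implementation; any plain Python function works.
def get_weather(city: str, unit: str = "celsius") -> Dict[str, Any]:
    # A real tool would call a weather API here; this returns canned data.
    return {"city": city, "temperature": 21, "unit": unit}

# Registry mapping the tool name the model sees to the Python callable.
TOOLS: Dict[str, Callable[..., Any]] = {"get_weather": get_weather}

def execute_tool_call(name: str, arguments_json: str) -> str:
    """Run one tool call and return its result serialized as JSON text."""
    if name not in TOOLS:
        return json.dumps({"error": f"unknown tool: {name}"})
    try:
        args = json.loads(arguments_json)  # arguments arrive as a JSON string
        result = TOOLS[name](**args)
    except (json.JSONDecodeError, TypeError) as exc:
        # Report bad arguments back to the model instead of crashing.
        return json.dumps({"error": str(exc)})
    return json.dumps(result)

# A tool call as a model might emit it: a name plus JSON-encoded arguments.
print(execute_tool_call("get_weather", '{"city": "New York"}'))
```

Returning errors as data rather than raising is what lets the model read the result and try again.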

It also makes “self-healing” simple: if implemented properly, the LLM can make a function call, read the results, and decide to retry if needed.

Why Tool Calling Matters

Tool calling has several key advantages:

- Structured Outputs: Tool arguments are always in JSON format, and you can enforce the parameter structure with JSONSchema at the API level. This ensures your LLM outputs are consistently structured.

- Tokens and Understandability: Tool calling is a concept baked right into the model. There’s no need to waste tokens and energy trying to explain to the model that it should return a specific format.

- Model Routing: Tool calling provides an easy way to set up model routing architectures. This is arguably the most scalable approach to building complex LLM systems using modular prompts with singular responsibilities.

- Prompt Injection Protection: It’s harder for users to “jailbreak” a model when you have strict schema definitions that are adhered to at the model level.
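The model-routing idea can be sketched as a small router prompt whose only tools are the downstream prompts it can hand off to. In this sketch the route names, the lambda handlers and `dispatch_route` are hypothetical, and the router's tool call is hard-coded; in practice it would come back from an LLM API that was given `ROUTER_TOOLS`.

```python
import json
from typing import Callable, Dict

def route_tool(name: str, description: str) -> dict:
    """Build one OpenAI-style tool definition that takes the user's question."""
    return {
        "type": "function",
        "function": {
            "name": name,
            "description": description,
            "parameters": {
                "type": "object",
                "properties": {"question": {"type": "string"}},
                "required": ["question"],
            },
        },
    }

# Tools exposed to the router prompt; each one stands for a single-purpose prompt.
ROUTER_TOOLS = [
    route_tool("answer_billing_question", "Questions about invoices, refunds or pricing."),
    route_tool("answer_technical_question", "Questions about bugs, errors or the API."),
]

# Placeholder handlers standing in for the specialized prompts.
HANDLERS: Dict[str, Callable[[str], str]] = {
    "answer_billing_question": lambda q: f"[billing prompt] {q}",
    "answer_technical_question": lambda q: f"[technical prompt] {q}",
}

def dispatch_route(tool_name: str, arguments_json: str) -> str:
    """Forward the router's chosen tool call to the matching specialized prompt."""
    handler = HANDLERS.get(tool_name)
    if handler is None:
        raise ValueError(f"router picked an unknown route: {tool_name}")
    question = json.loads(arguments_json)["question"]
    return handler(question)

# Simulated router output: in a real system this comes from the LLM.
print(dispatch_route("answer_billing_question", '{"question": "Why was I charged twice?"}'))
```

Each specialized prompt stays small and testable on its own, and adding a capability means adding one tool and one handler.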

Why Not Just Use JSON Mode?

OpenAI’s JSON mode is an alternative approach where you set response_format to {"type": "json_object"} and include the word “JSON” in the system prompt to force a JSON-formatted response.

JSON mode is more flexible than tool calling, but it is just a forcing function for the response format. Tool calling, by contrast, provides a “language” for communicating with the AI in a structured way.
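The difference shows up directly in the request body. Below is a sketch of two Chat Completions request payloads built as plain dictionaries (nothing is sent over the network): one uses JSON mode, the other declares a tool and forces the model to call it. The model name `gpt-4o` and the `extract_contact` tool are assumptions chosen for illustration.

```python
import json

messages = [
    {"role": "system", "content": "Extract the contact details. Reply in JSON."},
    {"role": "user", "content": "Reach me at ada@example.com, Ada Lovelace."},
]

# JSON mode: the reply must be *some* JSON object, but its keys and types
# are only described in the prompt, not declared as a schema.
json_mode_request = {
    "model": "gpt-4o",
    "messages": messages,
    "response_format": {"type": "json_object"},
}

# Tool calling: the expected structure is declared as a JSONSchema.
tool_request = {
    "model": "gpt-4o",
    "messages": messages,
    "tools": [{
        "type": "function",
        "function": {
            "name": "extract_contact",  # hypothetical tool name
            "description": "Record a person's name and email address.",
            "parameters": {
                "type": "object",
                "properties": {
                    "name": {"type": "string"},
                    "email": {"type": "string"},
                },
                "required": ["name", "email"],
            },
        },
    }],
    # Force this specific tool instead of letting the model choose.
    "tool_choice": {"type": "function", "function": {"name": "extract_contact"}},
}

print(json.dumps(json_mode_request["response_format"]))
```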

Real-World Examples

To illustrate the power of tool calling, let’s walk through a few practical examples:

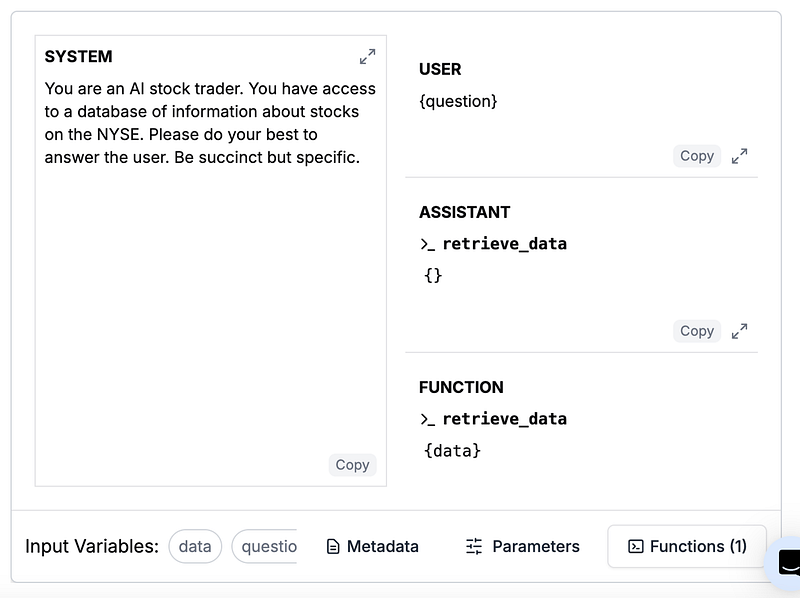

Using RAG Data

Suppose we want to build a financial advisor using a vector database of NASDAQ stock data. With function calling, we can define a retrieve_data function that allows the model to access the vector store. The model can choose to call this function as needed to retrieve relevant data.

A naive approach would be to use a simple system prompt:

You are an AI stock trader.

The user just asked the following question: {question}

You retrieved this data from your internal stock dataset:

{data}

Please answer the user's question

While this approach works decently well, it lacks organization and clarity. The sections are not clearly divided, and the prompt appears as a blob of text. Markdown helps, but tool calling provides a better solution.

With tool calling, we can leverage the “idioms” that the model understands:

- Messages: GPT and Claude are specifically trained as chatbots. They implicitly understand what System and User messages are. We can use this to convey information and the task more effectively by moving the question into the user message.

- Function/Tool Calling: This idiom is also trained into the model. We can use it to allow the model to access our RAG database. The model can choose whether to call the tools, or we can write the function calls ourselves to request and supply data. To save tokens, we can skip passing the question to the retrieve_data function and inject the data into the "function response" manually.
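Put together, the conversation for the stock advisor might be assembled like the sketch below, using OpenAI's Chat Completions message format. Instead of waiting for the model to request data, we write the assistant's `retrieve_data` call and its tool response ourselves, so the model receives the data through an idiom it was trained on. The call ID, the question and the rows are made-up placeholders; in a real system the rows would come from the vector store, and the `retrieve_data` tool definition would be sent alongside these messages.

```python
import json

def build_messages(question: str, retrieved_rows: list) -> list:
    """Assemble a chat where the retrieve_data call and its result are injected manually."""
    call_id = "call_retrieve_1"  # any unique string; it links the call to its response
    return [
        {"role": "system", "content": "You are an AI stock trader."},
        {"role": "user", "content": question},
        {   # Pretend the model already asked for data (retrieve_data takes no arguments).
            "role": "assistant",
            "content": None,
            "tool_calls": [{
                "id": call_id,
                "type": "function",
                "function": {"name": "retrieve_data", "arguments": "{}"},
            }],
        },
        {   # The "function response": our retrieved data, matched by tool_call_id.
            "role": "tool",
            "tool_call_id": call_id,
            "content": json.dumps(retrieved_rows),
        },
    ]

# Placeholder rows standing in for vector-store search results (not real prices).
rows = [{"ticker": "AAPL", "close": 123.45}, {"ticker": "MSFT", "close": 678.9}]
messages = build_messages("How did AAPL and MSFT close today?", rows)
```

The question now lives in the user message, and the data sits in a clearly delimited tool message rather than in one blob of system-prompt text.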

Here’s a simple function schema:

{
  "description": "Get data about NASDAQ stocks",
  "name": "retrieve_data",
  "parameters": {"properties": {}, "required": [], "type": "object"}
}

Building a SQL Chatbot

When building a natural-language-to-SQL chatbot, we want to leverage the LLM’s ability to detect and correct mistakes. Without tool calling, we would be forced to manually parse the string responses.

For example, the LLM might respond with something like:

Sure! Happy to help. Please try the SQL statement below

```sql

SELECT COUNT(*) AS user_count FROM users;

```

This will return the amount of users you have in the users table.

This approach wastes tokens (cost and latency) when all we really want back is the SQL. We could write a system prompt that asks for bare SQL, but edge cases and prompt injections can still slip through.
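For contrast, this is the kind of fragile parsing a prose-wrapped reply forces on us. The sketch pulls the first fenced sql block out of the reply with a regular expression; `extract_sql` is a hypothetical helper, and it breaks as soon as the model changes its formatting (no fence, a different language tag, or several queries).

```python
import re
from typing import Optional

FENCE = "`" * 3  # built this way so the example can sit inside a Markdown page

# Matches a fenced block tagged "sql" and captures its body.
SQL_BLOCK = re.compile(FENCE + r"sql\s*(.*?)" + FENCE, re.DOTALL | re.IGNORECASE)

def extract_sql(reply: str) -> Optional[str]:
    """Return the body of the first sql-tagged fenced block, or None."""
    match = SQL_BLOCK.search(reply)
    return match.group(1).strip() if match else None

reply = (
    "Sure! Happy to help. Please try the SQL statement below\n"
    f"{FENCE}sql\nSELECT COUNT(*) AS user_count FROM users;\n{FENCE}\n"
    "This will return the amount of users you have in the users table."
)
print(extract_sql(reply))  # SELECT COUNT(*) AS user_count FROM users;
```

A bare `SELECT 1;` reply with no fence returns None here, which is exactly the kind of edge case tool calling removes.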

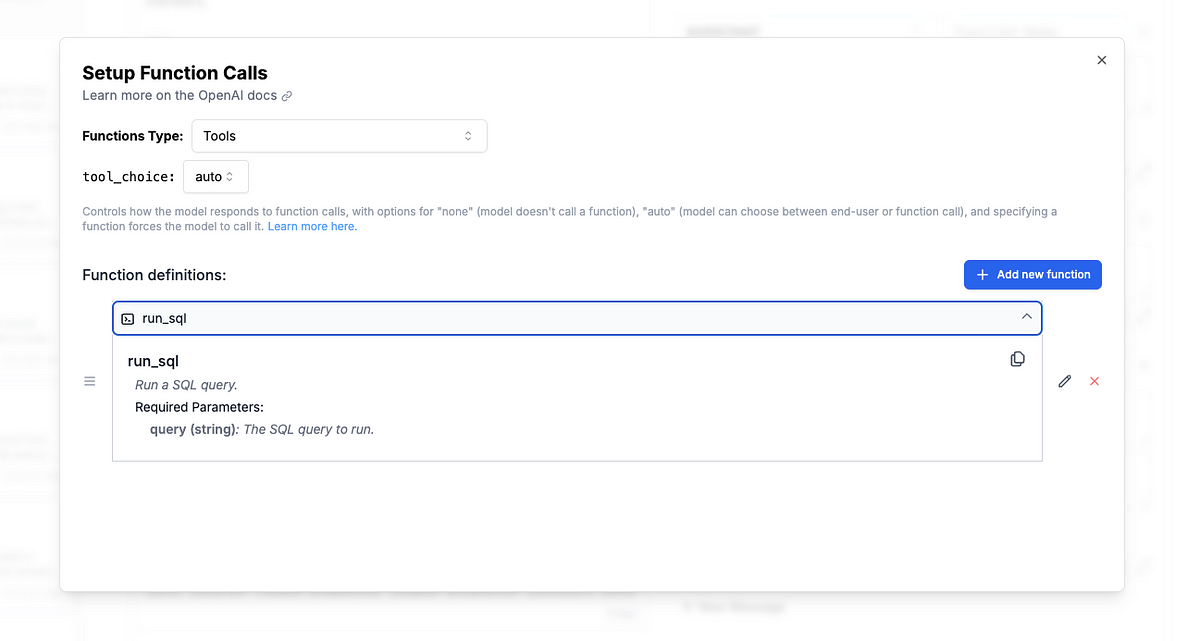

Alternatively, we can define a run_sql() function that takes in the query as a string.

Above we used PromptLayer to interactively create the tool call schema. Using PromptLayer for prompt management makes this easy to iterate on and update.

The JSONSchema generated is:

{
  "description": "Run a SQL query.",
  "name": "run_sql",
  "parameters": {
    "properties": {
      "query": {"description": "The SQL query to run.", "type": "string"}
    },
    "required": ["query"],
    "type": "object"
  }
}

We can set tool_choice to "auto" so the model decides whether or not to run a query. If it generates an invalid query, it will get an error response and can then try again with a correction, all without any additional parsing code on our end.
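A minimal sketch of that retry loop, using an in-memory SQLite database: the run_sql handler returns either rows or the database error as the tool response, and the model gets another turn. `fake_model` is a hypothetical stand-in that first misspells the table name and then corrects itself; with a real API it would be a Chat Completions call carrying the run_sql schema above and the tool results so far.

```python
import json
import sqlite3
from typing import Callable, List, Optional

def run_sql(conn: sqlite3.Connection, query: str) -> str:
    """Tool handler: return rows as JSON, or the database error so the model can fix it."""
    try:
        rows = conn.execute(query).fetchall()
        return json.dumps({"rows": rows})
    except sqlite3.Error as exc:
        return json.dumps({"error": str(exc)})

def self_healing_query(
    conn: sqlite3.Connection,
    model: Callable[[List[dict]], Optional[str]],
    max_attempts: int = 3,
) -> Optional[dict]:
    """Let the model call run_sql repeatedly until a query succeeds or attempts run out."""
    history: List[dict] = []  # tool results fed back to the model each turn
    for _ in range(max_attempts):
        query = model(history)  # the model's next run_sql "query" argument
        if query is None:       # the model chose not to call the tool
            return None
        result = json.loads(run_sql(conn, query))
        history.append({"query": query, "result": result})
        if "error" not in result:
            return result
    return None

# Hypothetical model: misspells the table, then corrects it after seeing the error.
def fake_model(history: List[dict]) -> Optional[str]:
    if not history:
        return "SELECT COUNT(*) AS user_count FROM user;"
    return "SELECT COUNT(*) AS user_count FROM users;"

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO users (name) VALUES (?)", [("ada",), ("grace",)])
print(self_healing_query(conn, fake_model))  # {'rows': [[2]]}
```

The `max_attempts` cap is a design choice worth keeping in any real loop so a confused model cannot retry forever.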

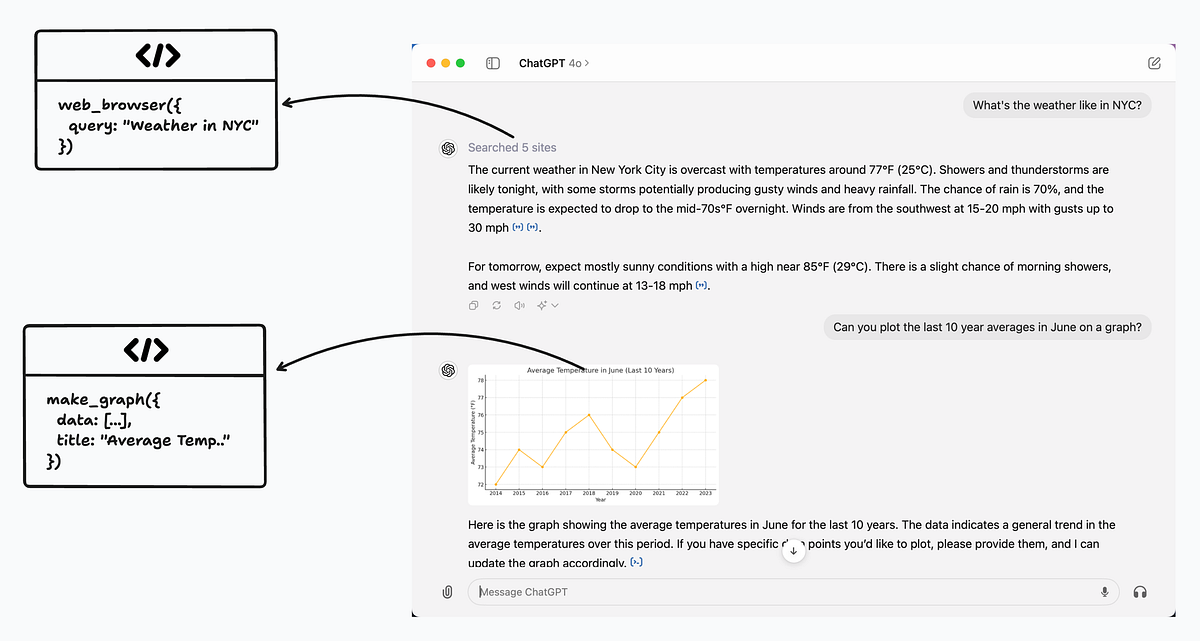

Web Browsing with ChatGPT

ChatGPT’s web browsing capability is enabled by a simple web_browser style function. The model can make one or more calls to this function, passing in URLs, and receive back DOM results to inform its final response. All of this is powered by the tool calling paradigm under the hood.
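ChatGPT's internal browsing tool is not public, so the following is only a sketch of what a web_browser-style tool could look like: a hypothetical `web_browser` schema plus a handler that fetches a page and returns its visible text for the model to read. Reducing the DOM to plain text with the standard library's `html.parser` and truncating it to `max_chars` are assumptions made to keep tool responses small.

```python
from html.parser import HTMLParser
from urllib.request import urlopen

# Hypothetical schema for a browsing tool the model can call one or more times.
WEB_BROWSER_TOOL = {
    "type": "function",
    "function": {
        "name": "web_browser",
        "description": "Fetch a web page and return its visible text.",
        "parameters": {
            "type": "object",
            "properties": {"url": {"type": "string", "description": "Absolute URL to open."}},
            "required": ["url"],
        },
    },
}

class _TextExtractor(HTMLParser):
    """Collects text nodes, skipping <script> and <style> contents."""

    def __init__(self) -> None:
        super().__init__()
        self.parts: list = []
        self._skip = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip:
            self._skip -= 1

    def handle_data(self, data):
        if not self._skip and data.strip():
            self.parts.append(data.strip())

def html_to_text(html: str) -> str:
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.parts)

def web_browser(url: str, max_chars: int = 4000) -> str:
    """Tool handler: fetch the URL and return truncated page text as the tool response."""
    with urlopen(url, timeout=10) as resp:
        html = resp.read().decode("utf-8", errors="replace")
    return html_to_text(html)[:max_chars]

print(html_to_text("<html><head><style>p{}</style></head><body><h1>Hi</h1><p>there</p></body></html>"))
```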

LLM-Specific Tool Calling Syntax

The exact function calling syntax and capabilities vary between LLM providers. Here’s a quick overview of the current state of function calling for OpenAI and Anthropic models:

OpenAI (“Tools”)

- The latest and best-supported version is now called “Tools”

- More restrictive than the original “Functions”: each tool call must have a matching tool response. Tool calls and responses are matched by ID, and the request will error if the IDs do not match.

- Better for sending multiple calls in one request

- GPT-4 has a strong preference for Tools over Functions

- Use JSONSchema to specify function signatures
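Because Tools match calls to responses by ID, it can help to check a message list before sending it. This is a minimal sketch over OpenAI-format messages; `unanswered_tool_calls` is a hypothetical helper that only verifies every `tool_calls[].id` in an assistant message is answered by a `tool` message with the same `tool_call_id`. The example also shows two calls emitted in a single assistant turn.

```python
from typing import List, Set

def unanswered_tool_calls(messages: List[dict]) -> Set[str]:
    """Return IDs of tool calls that have no matching tool response message."""
    pending: Set[str] = set()
    for msg in messages:
        if msg.get("role") == "assistant":
            for call in msg.get("tool_calls") or []:
                pending.add(call["id"])
        elif msg.get("role") == "tool":
            pending.discard(msg.get("tool_call_id"))
    return pending

messages = [
    {"role": "user", "content": "Weather in Paris and Rome?"},
    {"role": "assistant", "content": None, "tool_calls": [
        {"id": "call_a", "type": "function",
         "function": {"name": "get_weather", "arguments": '{"city": "Paris"}'}},
        {"id": "call_b", "type": "function",
         "function": {"name": "get_weather", "arguments": '{"city": "Rome"}'}},
    ]},
    # Only the first call has been answered so far.
    {"role": "tool", "tool_call_id": "call_a", "content": '{"temperature": 18}'},
]
print(unanswered_tool_calls(messages))  # {'call_b'}
```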

OpenAI (“Functions”)

- The original function calling is still supported but deprecated

- More flexible, won’t error on mismatched calls

- Matches functions by name only

- Use JSONSchema to specify function signatures

Anthropic

- Similar JSONSchema-based function calling as OpenAI

- Some differences in keyword arguments (for example, Anthropic names the schema field input_schema where OpenAI uses parameters)

- See Anthropic’s function calling docs for latest details
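As an example of those differences, an OpenAI tool definition nests the schema under `function.parameters`, while Anthropic's Messages API expects a flat object with the schema under `input_schema`. The converter below is a sketch covering only the definition shape; it does not translate call and result messages (Anthropic returns `tool_use` content blocks and expects `tool_result` blocks in reply), and `openai_tool_to_anthropic` is a hypothetical name.

```python
import copy

def openai_tool_to_anthropic(tool: dict) -> dict:
    """Convert an OpenAI Chat Completions tool definition to Anthropic's tool format."""
    if tool.get("type") != "function":
        raise ValueError("only function tools can be converted")
    fn = tool["function"]
    converted = {
        "name": fn["name"],
        # Anthropic calls the JSONSchema "input_schema" instead of "parameters".
        "input_schema": copy.deepcopy(
            fn.get("parameters", {"type": "object", "properties": {}})
        ),
    }
    if "description" in fn:
        converted["description"] = fn["description"]
    return converted

run_sql_openai = {
    "type": "function",
    "function": {
        "name": "run_sql",
        "description": "Run a SQL query.",
        "parameters": {
            "type": "object",
            "properties": {"query": {"type": "string", "description": "The SQL query to run."}},
            "required": ["query"],
        },
    },
}
print(openai_tool_to_anthropic(run_sql_openai))
```

Keeping one canonical definition and converting at the edge is one way to stay model-agnostic.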

PromptLayer’s prompt CMS stores function definitions in a model-agnostic format that can easily be run with both OpenAI models and Anthropic models.

Pydantic Structured Output

For those interested in implementing function calling themselves, check out the open-source Instructor library. It handles function calling through prompting and Pydantic validation, making it easy to get started without being locked into a particular LLM provider.
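The core idea is to describe the output you want as a typed model and validate the model's JSON against it, feeding any validation error back for a retry. Instructor does this with Pydantic; to keep the sketch dependency-free, the version below imitates only the validation step with a standard-library dataclass. `StockQuery` and `parse_tool_arguments` are hypothetical names, and only simple field types (str, int, float, bool) are checked.

```python
import json
from dataclasses import MISSING, dataclass, fields
from typing import get_type_hints

@dataclass
class StockQuery:
    ticker: str
    days: int = 30

def parse_tool_arguments(cls, arguments_json: str):
    """Validate a JSON argument string against a dataclass and build an instance."""
    data = json.loads(arguments_json)
    if not isinstance(data, dict):
        raise ValueError("arguments must be a JSON object")
    hints = get_type_hints(cls)
    kwargs = {}
    for f in fields(cls):
        if f.name not in data:
            if f.default is MISSING and f.default_factory is MISSING:
                raise ValueError(f"missing required field: {f.name}")
            continue  # fall back to the dataclass default
        value, expected = data[f.name], hints[f.name]
        # bool is a subclass of int in Python, so reject it explicitly for int fields.
        if not isinstance(value, expected) or (expected is int and isinstance(value, bool)):
            raise ValueError(f"{f.name} should be {expected.__name__}, got {type(value).__name__}")
        kwargs[f.name] = value
    unknown = set(data) - {f.name for f in fields(cls)}
    if unknown:
        raise ValueError(f"unexpected fields: {sorted(unknown)}")
    return cls(**kwargs)

print(parse_tool_arguments(StockQuery, '{"ticker": "AAPL"}'))  # StockQuery(ticker='AAPL', days=30)
```

In a retry loop, the `ValueError` message is what you would send back to the model so it can correct its arguments.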

I hope this post helps explain what LLM function calling is, why it’s so powerful, and how you can start leveraging it in your own projects. It’s definitely one of my favorite prompt engineering shortcuts.

PromptLayer is the most popular platform for prompt engineering, management, and evaluation. Teams use PromptLayer to build AI applications with domain knowledge.
