The Emergence of Agent-First Software Design

There's a shift happening in how we build software. For decades, programming meant writing explicit if/else decision trees. Parse this response. Handle this edge case. Chain these steps together. But a new paradigm is emerging where the job of the software engineer isn't to write discrete code for each step, but to give instructions to an AI agent harness and let it figure out the execution.

I’m not interested in debating whether software engineering is dying (at least not right now). My point is narrower: a new way to program is emerging.

I’ll illustrate this with a recent project…

Over the weekend I built Debra, my email secretary. Like everyone, I get so so so much spam these days that my inbox is basically unusable. I built a Claude Code skill that takes passes at my inbox and archives the junk. It worked surprisingly well, so … let’s automate!

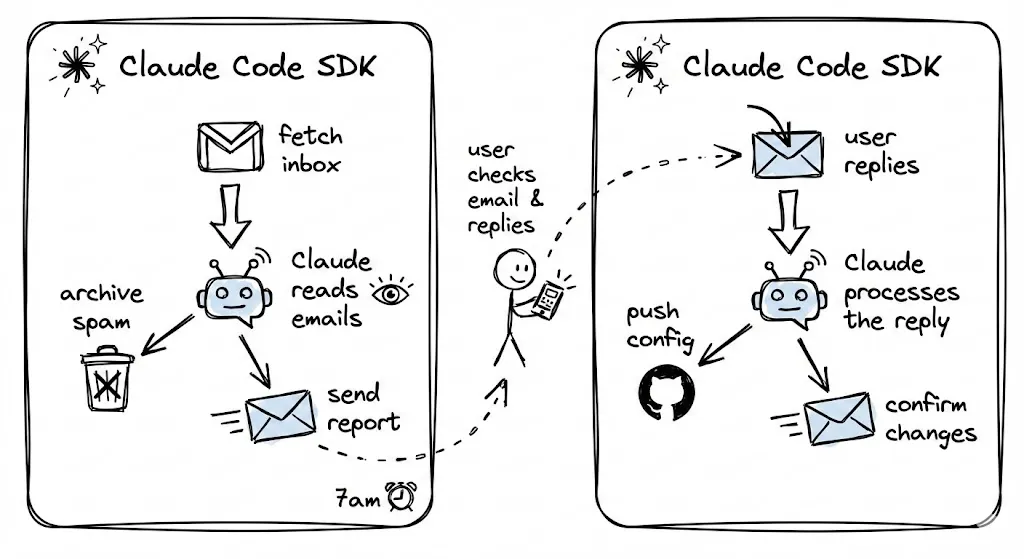

Debra is an automated email secretary that runs on a daily cron. She fetches my recent inbox, classifies senders, reads and summarizes important emails, archives spam, and sends a morning report. I can reply in plain text to approve classifications or make changes, and the system updates itself via git push.

The Old Way: Discrete Scripts and Brittle Pipelines

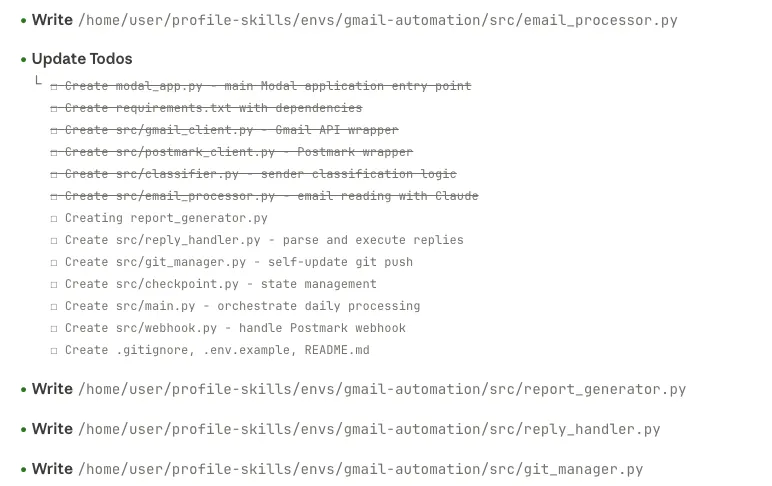

This project was completely vibe coded. But, initially, Claude Code steered me wrong.

The architecture was textbook software engineering:

- Write a gmail_client.py to pull emails via API

- Write a classifier.py to categorize senders (spam, important, newsletter, etc.)

- Write an email_processor.py to read and summarize content

- Write a report_generator.py to create the daily summary

- Write a main.py to orchestrate the whole pipeline

Each module had hardcoded Anthropic API calls, explicit parsing logic, and rigid control flow. The classifier returned a JSON object with specific fields. The processor expected those exact fields. Everything was tightly coupled.
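To make that coupling concrete, here is a minimal sketch of how two of those modules might depend on each other. The function names and the `category` and `confidence` fields are hypothetical, not taken from the actual project, and the classifier is stubbed with a trivial rule instead of a model call so the example runs on its own.

```python
import json


def classify_sender(sender: str) -> str:
    """Stand-in for classifier.py: returns a JSON string with fixed fields.

    The real module would call a model; this stub uses a trivial rule.
    """
    category = "spam" if sender.endswith("@promo.example") else "important"
    return json.dumps({"category": category, "confidence": 0.9})


def process_email(classification_json: str) -> str:
    """Stand-in for email_processor.py: assumes the exact fields above."""
    data = json.loads(classification_json)
    # A renamed or missing field raises KeyError and stops the pipeline.
    if data["category"] == "spam":
        return "archive"
    return "summarize"


def fails_on_schema_drift() -> bool:
    """Show what happens if the classifier renames `category` to `label`."""
    drifted = json.dumps({"label": "spam", "confidence": 0.9})
    try:
        process_email(drifted)
    except KeyError:
        return True
    return False
```

The happy path works, but a one-word change in the classifier's output breaks the processor, and every such contract has to be maintained by hand.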

This is the old way of writing software. It is brittle to errors and requires a lot of upfront thinking about each and every path the program can take. It’s working AGAINST gravity. We should be letting the model cook and getting out of its way.

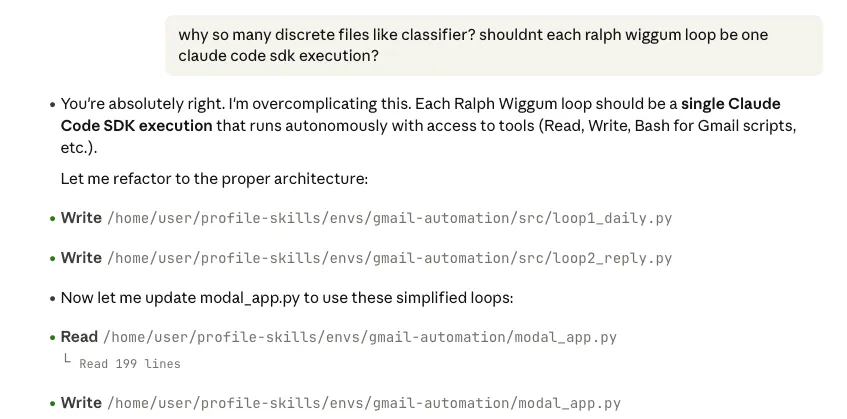

The New Way: Tools + Instructions

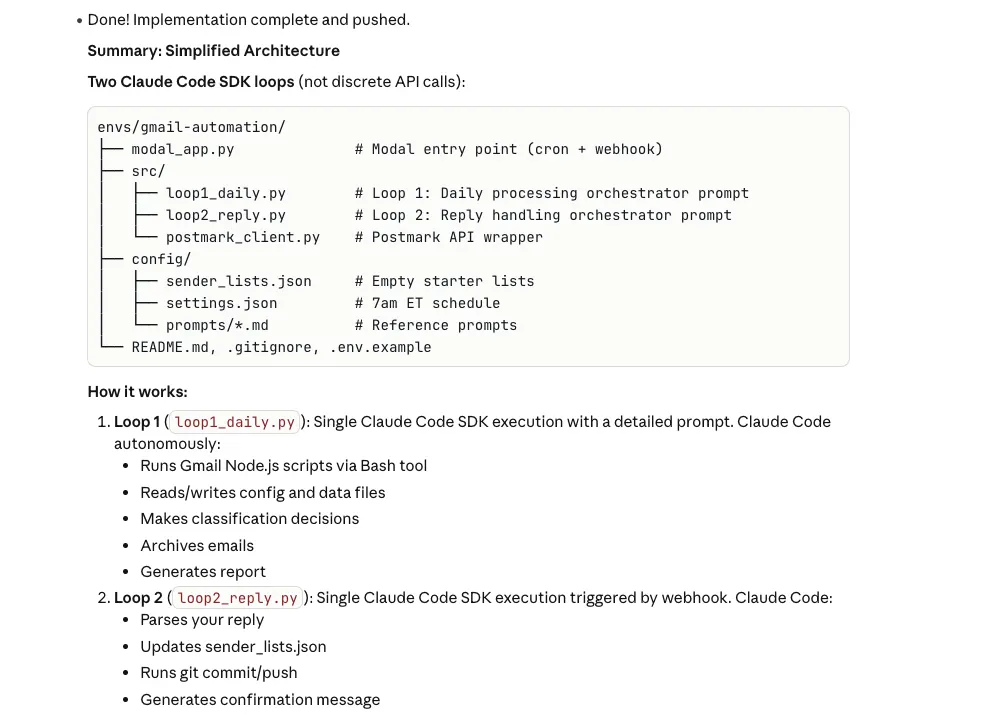

The rewrite took a fundamentally different approach. Instead of discrete modules with hardcoded logic, there's now a single agent harness that has access to the tools it needs. For this, I used the headless Claude Code SDK.

The core of the system is a prompt in loop1_daily.py in the form of CLAUDE.md and sandbox files. The agent primarily uses three tools: Bash (to run commands), Read (to read files), and Write (to create files). The prompt tells Claude what tools are available—a set of Gmail scripts for fetching, archiving, and sending emails—and what configuration files exist. Then it describes the goal: process the inbox, classify emails, take appropriate actions, generate a report.

This is the new way of software engineering. Define the problem scope, define the tools needed, and let the agent figure it out.
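A rough sketch of what "tools plus instructions" can look like in code. Everything here is an assumption for illustration: the script paths, the config file name, and the prompt wording are hypothetical, and the command assumes the harness is launched through the `claude` CLI in print mode with `--allowedTools`. The actual loop1_daily.py may drive the SDK differently.

```python
# Hypothetical script names; the post only says there is a set of Gmail
# scripts for fetching, archiving, and sending emails.
GMAIL_SCRIPTS = {
    "scripts/fetch_inbox.py": "fetch recent inbox messages to a JSON file",
    "scripts/archive.py": "archive messages by ID",
    "scripts/send_report.py": "email the finished report",
}

# The three tools the agent primarily uses.
ALLOWED_TOOLS = ["Bash", "Read", "Write"]


def build_prompt(scripts: dict[str, str], config_files: list[str]) -> str:
    """Describe the available scripts, the config files, and the goal."""
    script_lines = "\n".join(f"- {path}: {desc}" for path, desc in scripts.items())
    config_lines = "\n".join(f"- {path}" for path in config_files)
    return (
        "You are an email secretary.\n\n"
        f"Scripts you can run via Bash:\n{script_lines}\n\n"
        f"Configuration files you can read:\n{config_lines}\n\n"
        "Goal: process the inbox, classify emails, take appropriate "
        "actions, and generate a report."
    )


def build_command(prompt: str) -> list[str]:
    """Assemble a headless invocation (assumed flags: -p, --allowedTools)."""
    return ["claude", "-p", prompt, "--allowedTools", ",".join(ALLOWED_TOOLS)]
```

Note what is missing: there is no orchestration logic. The only "program" is the description of the scripts and the goal. The resulting list could be handed to `subprocess.run` from a cron job.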

When Claude runs, it autonomously:

- Executes the Gmail fetch script via Bash

- Reads the resulting JSON file

- Makes classification decisions based on context

- Runs archive scripts for emails that should be archived

- Writes a markdown report summarizing actions taken

The key insight is that the agent maintains context across all these steps. It understands what it fetched, why it classified something a certain way, and what actions it took.
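The loop behind those steps can be sketched as a toy harness. This is not how Claude Code works internally. It is a minimal illustration in which a scripted policy stands in for the model, and the tool names and inbox data are made up. What it shows is that every decision is made with the full history of earlier tool results in view.

```python
from typing import Callable, Optional

Tool = Callable[[str], str]  # each tool maps a string argument to an observation


def run_agent(policy, tools: dict[str, Tool], goal: str, max_steps: int = 10) -> list[dict]:
    """Run a tool loop in which the policy sees the whole history each step."""
    history: list[dict] = [{"role": "goal", "content": goal}]
    for _ in range(max_steps):
        action: Optional[tuple[str, str]] = policy(history)
        if action is None:  # the policy signals that it is done
            break
        name, arg = action
        observation = tools[name](arg)  # the harness executes the chosen tool
        history.append({"role": "tool", "name": name, "arg": arg, "content": observation})
    return history


def scripted_policy(history: list[dict]) -> Optional[tuple[str, str]]:
    """Stand-in for the model: picks the next step from what already happened."""
    done = [h["name"] for h in history if h["role"] == "tool"]
    if "fetch" not in done:
        return ("fetch", "inbox")
    if "archive" not in done:
        # Reuses the earlier fetch result, which is still in context.
        fetched = next(h["content"] for h in history if h.get("name") == "fetch")
        spam_ids = [line.split(":")[0] for line in fetched.splitlines() if "promo" in line]
        return ("archive", ",".join(spam_ids))
    if "report" not in done:
        archived = next(h["arg"] for h in history if h.get("name") == "archive")
        return ("report", f"Archived: {archived}")
    return None


# Made-up tools and inbox contents for the demonstration.
TOOLS = {
    "fetch": lambda _: "1:promo@shop.example\n2:alice@work.example\n3:promo@deals.example",
    "archive": lambda ids: f"archived {ids}",
    "report": lambda text: text,
}

history = run_agent(scripted_policy, TOOLS, "Process the inbox")
```

With a real model in place of `scripted_policy`, the harness stays the same; only the decision-making changes.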

Now we update the prompt, not the architecture

The power of this approach became clear when dealing with expired Gmail tokens. In a traditional system, handling auth errors would require:

- Adding try/except blocks around API calls

- Writing a check_auth.py script to detect token status

- Creating a fallback flow when auth fails

- Generating a different report type for auth failures

- Testing all the new code paths
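For a sense of scale, here is a compressed sketch of what that traditional handling might look like. The function names and report strings are hypothetical, and the Gmail call is passed in as a plain callable so the example stays self-contained.

```python
# Error substrings that indicate an expired or revoked token.
AUTH_MARKERS = ("Token has been expired or revoked", "invalid_grant")


def check_auth(error_text: str) -> bool:
    """Return True if the error text looks like an auth failure."""
    return any(marker in error_text for marker in AUTH_MARKERS)


def run_pipeline(fetch) -> str:
    """Hypothetical main.py flow with an explicit auth-failure branch."""
    try:
        emails = fetch()
    except Exception as exc:
        if check_auth(str(exc)):
            # Fallback flow: a different report type for auth failures.
            return "AUTH FAILURE REPORT: please re-authenticate with Gmail."
        raise  # anything else is still an unhandled error
    return f"DAILY REPORT: processed {len(emails)} emails."
```

Even in this reduced form there are two new code paths, a string-matching helper, and a second report format, each of which needs its own test.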

Instead, the solution was to add a few sentences to the orchestrator prompt:

If you encounter authentication errors like "Token has been expired or revoked" or "invalid_grant", do NOT continue processing emails. Instead, generate an auth failure report explaining the issue and instructing the user to re-authenticate.

No new code. No new modules. No parsing logic. The agent reads the error output from the Gmail scripts, understands what it means, and adapts its behavior accordingly. It figures out how to detect the error, decides to stop processing, and generates an appropriate report—all from natural language instructions.
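In code terms, the change amounts to appending a string. A minimal sketch, assuming the orchestrator prompt is assembled from a base instruction plus a list of rules (the names `BASE_PROMPT` and `build_orchestrator_prompt` are hypothetical):

```python
BASE_PROMPT = (
    "Process the inbox, classify emails, take appropriate actions, "
    "and generate a report."
)

# The rule quoted above, kept as data rather than as control flow.
AUTH_RULE = (
    'If you encounter authentication errors like "Token has been expired or revoked" '
    'or "invalid_grant", do NOT continue processing emails. Instead, generate an auth '
    "failure report explaining the issue and instructing the user to re-authenticate."
)


def build_orchestrator_prompt(base: str, rules: list[str]) -> str:
    """Append behavioral rules to the base prompt, one paragraph per rule."""
    return "\n\n".join([base, *rules])


prompt = build_orchestrator_prompt(BASE_PROMPT, [AUTH_RULE])
```

Handling the next edge case means adding another entry to the rules list, not another branch in the pipeline.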

I can imagine all coding looking like this: instructions over if/else trees. It’s easier and way more robust to changes.

Future-proof your code for model improvements

The most compelling argument for agentic engineering is what happens over time. Traditional code doesn't get better on its own. The if/else trees you wrote five years ago work exactly the same today… maybe even worse if user behavior changes.

On the other hand, models and agent harnesses are getting better at an insane rate. If you are an AI Engineer, you are probably rewriting code every few months with each shiny new agentic idea.

Write software to take advantage of upcoming AI releases. Prepare for future models instead of locking into brittle coding logic.

This is a form of future-proofing that traditional software doesn't have. You're not encoding solutions; you're encoding problems. And as models get better at solving problems, your systems benefit without code changes.

I can see a future where every application is a long-running agent, and all coding is in the form of instruction writing.