The Agentic System Design Interview: How to evaluate AI Engineers

So you need a team to build an LLM multi-agent system... how do you interview candidates? I'll try to provide some ideas and strategies in this article.

Firstly... What is an AI Engineer?

AI engineers build the future. They create scalable AI systems and agents. They test, evaluate, and debug complex issues. They collaborate with domain experts. They stay current on RAG, models, LLM best practices, prompt engineering, and context design. Most importantly, they understand how to build AI systems that actually work.

There are different types of AI engineering roles. This article focuses on software engineering positions that build LLM applications – not prompt engineering specialists or domain experts, but the engineers who architect and implement AI agents.

Gauging Passion for LLMs

What we're testing: In this fast-moving space, we need engineers who demonstrate genuine enthusiasm and stay up to date. The top models change constantly, and keeping current is part of the job: it is the only way to know which model or strategy to use.

Why it matters: An engineer who stopped learning six months ago is already obsolete. The best candidates live and breathe this stuff.

Candidates of course should be familiar with the latest models, the meta of LLM application building, and the hip terminology to use. But even more importantly, you should look for engineers with opinions.

I've never met a great AI Engineer who wasn't highly opinionated on LLM strategies, OpenAI vs. Anthropic, or the future of AGI.

Sample questions to gauge interest and current knowledge (these will go out of date; this was written in July 2025):

- "Which model provider do you lean on for creative writing? Claude, OpenAI, Gemini?"

- "What AI coding assistants do you use? Can you compare Cursor, Windsurf, Zed, and Claude Code?"

- "How do open source LLMs stack up? What trends have you seen?"

- "Will AGI kill us all?"

- "Tell me about a recent paper or development in AI that caught your attention"

- "What side projects have you built with AI recently?"

Look for candidates who speak intelligently and passionately about trade-offs between models. They should have opinions on open source versus proprietary models. They should mention specific use cases where they'd choose one model over another.

You are looking for candidates who 💡 light up 💡. Their answers should be interesting. It's fine if they ramble a little, as long as they get really excited.

Red flags: Boring textbook answers.

Writing Code Still Matters

What we're testing: Core engineering competence. AI engineers still need to write clean, efficient code. They must handle data processing, API integration, and system architecture. They are hackers who need to build real production systems.

Why it matters: Brilliant AI ideas mean nothing if the engineer can't implement them reliably at scale.

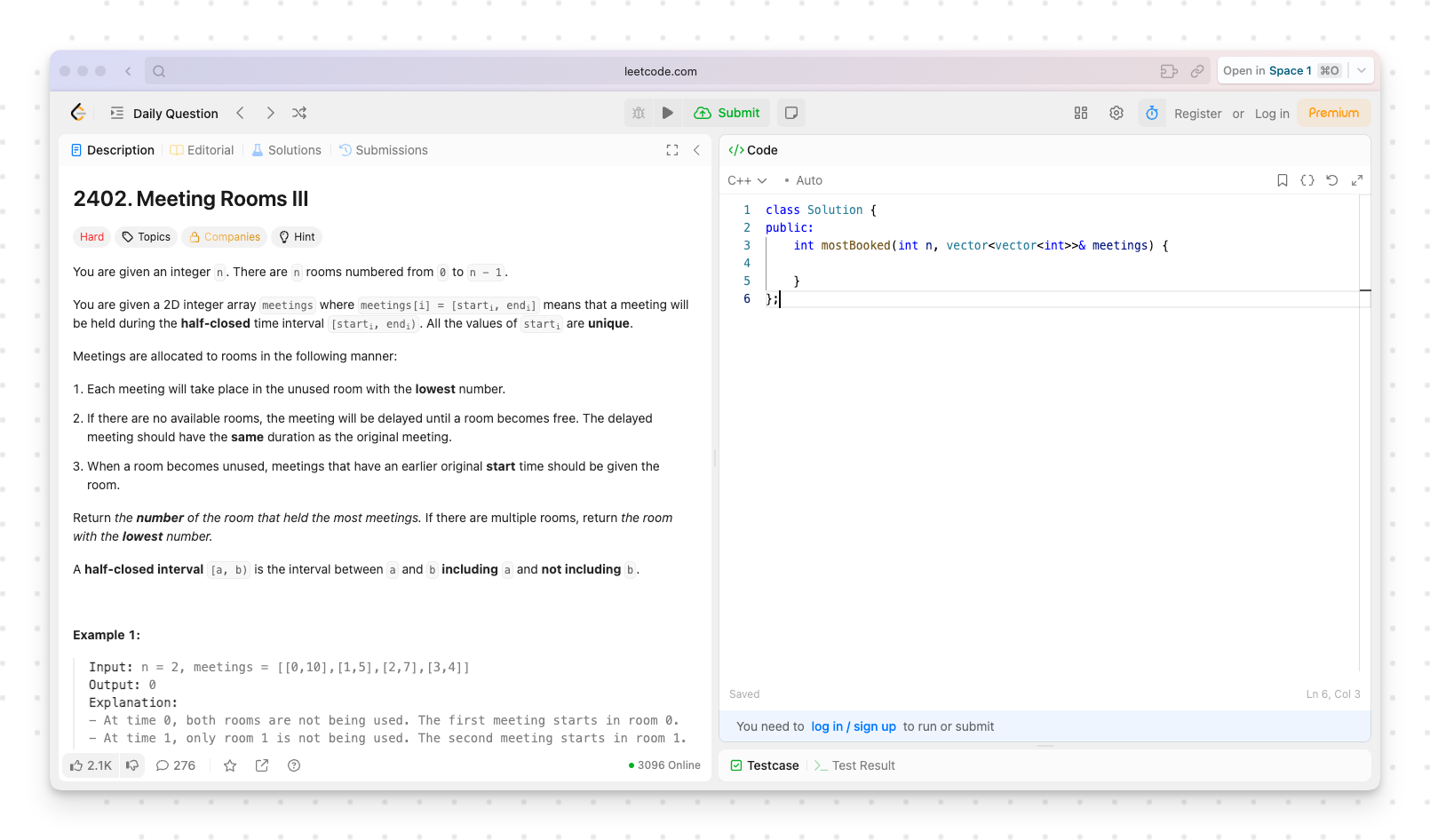

Scripting is a must. LeetCode-style problems should be enough here to assess algorithmic thinking. Many companies rightfully still require baseline coding proficiency, even for research-oriented roles. Consider allowing ChatGPT during live coding, perhaps ChatGPT only and not Cursor, so that you can see exactly how they write prompts.

Run standard coding exercises but add an AI twist:

- "Implement a function to batch API calls to an LLM efficiently"

- "Debug this code that processes embeddings"

- "Write a script to clean and prepare text data for fine-tuning"

They don't need deep ML implementation knowledge, though it helps. Some great solutions combine traditional ML with LLMs. Focus on clean code, error handling, and understanding trade-offs.
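To make the first exercise concrete, here is a rough sketch of what a reasonable answer to "batch API calls to an LLM" might look like. It is only one possible shape, not a reference solution. The names `batch_complete` and `fake_llm` are made up for illustration, and `fake_llm` is a stand-in for whatever provider client the candidate would actually use.

```python
import asyncio
from typing import Awaitable, Callable, List


async def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real provider call (assumption, not a real API)."""
    await asyncio.sleep(0.01)  # simulate network latency
    return prompt.upper()


async def batch_complete(
    prompts: List[str],
    call: Callable[[str], Awaitable[str]] = fake_llm,
    max_concurrency: int = 4,
    max_retries: int = 2,
) -> List[str]:
    """Run many prompts concurrently, capped at `max_concurrency` in flight.

    Results come back in the same order as `prompts`.
    """
    semaphore = asyncio.Semaphore(max_concurrency)

    async def one(prompt: str) -> str:
        for attempt in range(max_retries + 1):
            try:
                async with semaphore:
                    return await call(prompt)
            except Exception:
                # Simplification: retries on any error. A real client would
                # retry only on rate limits and transient network failures.
                if attempt == max_retries:
                    raise
                await asyncio.sleep(0.01 * 2 ** attempt)  # exponential backoff
        raise RuntimeError("unreachable")

    return await asyncio.gather(*(one(p) for p in prompts))
```

Calling `asyncio.run(batch_complete(["a", "b", "c"]))` runs the three prompts through the stub. In an interview, the interesting follow-ups are about the trade-offs: how the candidate picks the concurrency cap, which errors deserve a retry, and what happens to the rest of the batch when one call fails for good.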

Advanced LLM Concepts

What we're testing: Technical depth in LLM-specific knowledge. We need engineers who understand the tools they're using, not engineers who can only wrap an API.

Why it matters: When systems break or need optimization, surface-level knowledge isn't enough. Engineers must understand what's happening under the hood.

Key concepts to probe:

- Fine-tuning: "When does fine-tuning actually help? What are the trade-offs?"

- RAG basics: "Explain how embeddings enable semantic search. How would you handle documents that exceed context limits?"

- Vector stores: "Compare different vector database options. When would you use each?"

- RLHF: "What is RLHF?"

- Context Engineering: "What different strategies could you use for long-term memory?"

Candidates should be ready to discuss practical applications and trade-offs, not just memorized definitions.
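For the RAG question, a strong candidate can usually sketch the retrieval loop on the spot: split long documents into overlapping chunks, turn each chunk into a vector, and rank chunks by cosine similarity to the query. The snippet below is a toy version of that loop. One big assumption: `embed` here is just a word-count vector, a placeholder for a real embedding model, so it only matches on shared words and not on meaning. All function names are illustrative.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def chunk_words(text: str, size: int = 50, overlap: int = 10) -> List[str]:
    """Split text into windows of `size` words; neighbours share `overlap` words."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be >= 0 and smaller than size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # the last window already reached the end of the text
    return chunks


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a sparse word-count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse vectors; 0.0 if either is empty."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_k(query: str, chunks: List[str], k: int = 3) -> List[Tuple[float, str]]:
    """Return the k chunks most similar to the query, best first."""
    q = embed(query)
    scored = [(cosine(q, embed(c)), c) for c in chunks]
    return sorted(scored, key=lambda pair: pair[0], reverse=True)[:k]
```

A good discussion goes beyond the code: why overlap matters when an answer straddles a chunk boundary, how chunk size trades recall against context budget, and where a vector store would replace the linear scan in `top_k`.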

Look for Tinkerer Personalities

What we're testing: The best AI engineers are hands-on experimentalists who thrive in uncertainty. They embrace a "try and check" philosophy over rigid academic approaches.

Why it matters: The field changes too fast for formal methodologies. We need hackers who can operate when best practices don't exist yet.

Listen to how they describe their recent projects. True tinkerers talk about failed experiments with enthusiasm. They describe debugging sessions that went deep into the night. They mention unofficial workarounds they discovered through trial and error.

When discussing their background, watch for signs of the experimental mindset. Did they build side projects just to understand how something works? Have they contributed to open source AI tools? Do they maintain personal repositories of prompt patterns or agent architectures they've tested?

Project stories reveal everything. A tinkerer will explain not just what worked, but what didn't. They'll mention trying five different approaches before finding the right one. They get excited describing edge cases they discovered or unexpected model behaviors they had to work around.

Strong candidates show comfort with ambiguity. They learn from real-world data, not academic studies.

Warning signs: Over-reliance on documentation, waiting for perfect information before acting, or frustration with the experimental nature of AI work. Academic perfectionism can be a handicap in this rapidly evolving field.

Communication & Problem-Solving

What we're testing: Prompt engineering is fundamentally about communication. Can they break complex problems into clear, logical steps that an LLM can follow?

Why it matters: The best technical solution fails if the engineer can't structure prompts effectively or explain their system to others. As noted in PromptLayer's guide to prompt engineering, effective prompting requires both technical knowledge and clear communication skills.

Whiteboard exercise: Give a complex problem and have them design a multi-step agent system. This is becoming the norm for AI Engineering interviews.

Example problems:

- "Design an agent that analyzes customer support tickets and automatically drafts responses, escalating complex issues"

- "Create a system where agents collaborate to produce a research report with citations"

- "Build an agent that can read code, understand its purpose, and suggest improvements"

Don't go too far into the weeds. Ask candidates to draw a flow chart of steps, choose a model for each, and maybe write a draft prompt. This is an exercise in context design and problem-solving.

Watch for:

- Clear problem decomposition into sub-tasks

- Logical flow between agent steps

- Consideration of error handling and edge cases

- How they'd structure prompts for each component

Have them explain their reasoning at each step. Can they articulate why they chose certain agent boundaries?
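If it helps to picture what a good whiteboard answer turns into, here is a minimal sketch of the support-ticket example as code: one classification step, one drafting step, and an escalation path. Everything here is hypothetical. `handle_ticket`, `TicketResult`, and the prompts are invented for illustration, and `keyword_llm` is a rule-based fake so the sketch runs without a model.

```python
from dataclasses import dataclass
from typing import Callable

LLM = Callable[[str], str]  # any function that maps a prompt to a completion


@dataclass
class TicketResult:
    category: str
    escalated: bool
    draft: str


def handle_ticket(ticket: str, llm: LLM) -> TicketResult:
    """Two-step flow: classify the ticket, then draft a reply or escalate."""
    # Step 1: a narrow classification prompt with a constrained output.
    category = llm(
        "Classify this support ticket as 'simple' or 'complex'. "
        f"Reply with one word.\n\nTicket: {ticket}"
    ).strip().lower()

    # Edge case: anything other than a clean 'simple' goes to a human,
    # so a malformed model reply never produces an automatic response.
    if category != "simple":
        return TicketResult(category="complex", escalated=True, draft="")

    # Step 2: a separate drafting prompt that only runs for simple tickets.
    draft = llm(f"Draft a short, polite reply to this support ticket.\n\nTicket: {ticket}")
    return TicketResult(category="simple", escalated=False, draft=draft)


def keyword_llm(prompt: str) -> str:
    """Rule-based fake model (assumption) so the flow can be run offline."""
    if prompt.startswith("Classify"):
        return "complex" if "refund" in prompt.lower() else "simple"
    return "Thanks for reaching out. Here is how to reset your password."
```

The code itself is trivial on purpose. What you are grading on the whiteboard is the boundary between the two steps, the decision to fail toward escalation, and whether the candidate can say why each prompt is scoped the way it is.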

Take-Home

What we're testing: Can they build real systems that work? Theory is nice, but we need builders.

Why it matters: Many engineers can talk about agents but struggle to implement them. A working prototype reveals true capability.

Give them a practical agent system to build with evaluation requirements. The system should have multiple steps requiring different prompts feeding into each other. Look for "smart orchestration and practical agent design".

Example assignments:

- "Build an agent that reads a CSV of customer data and generates personalized email campaigns. Include evaluation metrics for quality."

- "Create a document Q&A system with citation tracking. The agent should handle multi-hop questions."

- "Implement a code review agent that can analyze Python files and provide actionable feedback. Include accuracy evaluation."

Grading criteria:

- Does it actually work?

- Did they implement meaningful evaluation metrics?

- Can they explain their design choices?

If they build anything functional that demonstrates multi-step reasoning, that's a strong signal. Perfect accuracy matters less than a thoughtful architecture and evaluation approach.
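On the evaluation side, even a very small harness is a good sign. Below is a sketch of the kind of thing a take-home submission might include: a list of test cases, a pass/fail check per case, and a summary with the failures kept for inspection. The `Case` and `evaluate` names are made up, and the substring check is a deliberately crude assumption. Real submissions might use exact-match labels, rubric scoring, or an LLM judge instead.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Case:
    question: str
    must_contain: str  # crude pass criterion: expected substring in the answer


def evaluate(agent: Callable[[str], str], cases: List[Case]) -> Dict[str, object]:
    """Run every case through the agent and report the pass rate and failures."""
    failures = []
    for case in cases:
        try:
            answer = agent(case.question)
        except Exception as exc:
            # A crash counts as a failure, but the run keeps going.
            failures.append((case.question, f"error: {exc}"))
            continue
        if case.must_contain.lower() not in answer.lower():
            failures.append((case.question, answer))

    total = len(cases)
    passed = total - len(failures)
    return {
        "total": total,
        "passed": passed,
        "pass_rate": passed / total if total else 0.0,
        "failures": failures,
    }
```

When you review the submission, ask how the candidate chose the cases and what the failures told them. That conversation usually reveals more than the pass rate does.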

Overall

Interviewing AI engineers requires a different approach than traditional software interviews. We're looking for a unique combination:

Tinkerer mindset – They experiment, iterate, and thrive in uncertainty.

Strong communication – They can decompose problems and structure clear prompts.

Genuine passion for AI – They stay current because they're genuinely interested.

Execution ability – They can build working systems despite incomplete information.

The field moves too fast for candidates to know everything. Focus on how they think, how they learn, and how they build.

Look for builders who can navigate ambiguity, communicate clearly, and ship working AI systems.

PromptLayer is an end-to-end prompt engineering workbench for versioning, logging, and evals. Engineers and subject-matter experts team up on the platform to build and scale production-ready AI agents.
