text-embedding-3-small: High-Quality Embeddings at Scale

OpenAI pulled off an impressive feat with text-embedding-3-small: it made embeddings both better and 5× cheaper. The model beats its predecessor, text-embedding-ada-002, by roughly 13 percentage points on multilingual retrieval while costing just $0.02 per million tokens. It turns text into 1536-dimensional vectors (by default) for semantic search, clustering, and RAG applications. That fivefold gain in cost-efficiency makes high-quality embeddings practical for production AI systems at scale.

What Are Text Embeddings and Why They Matter

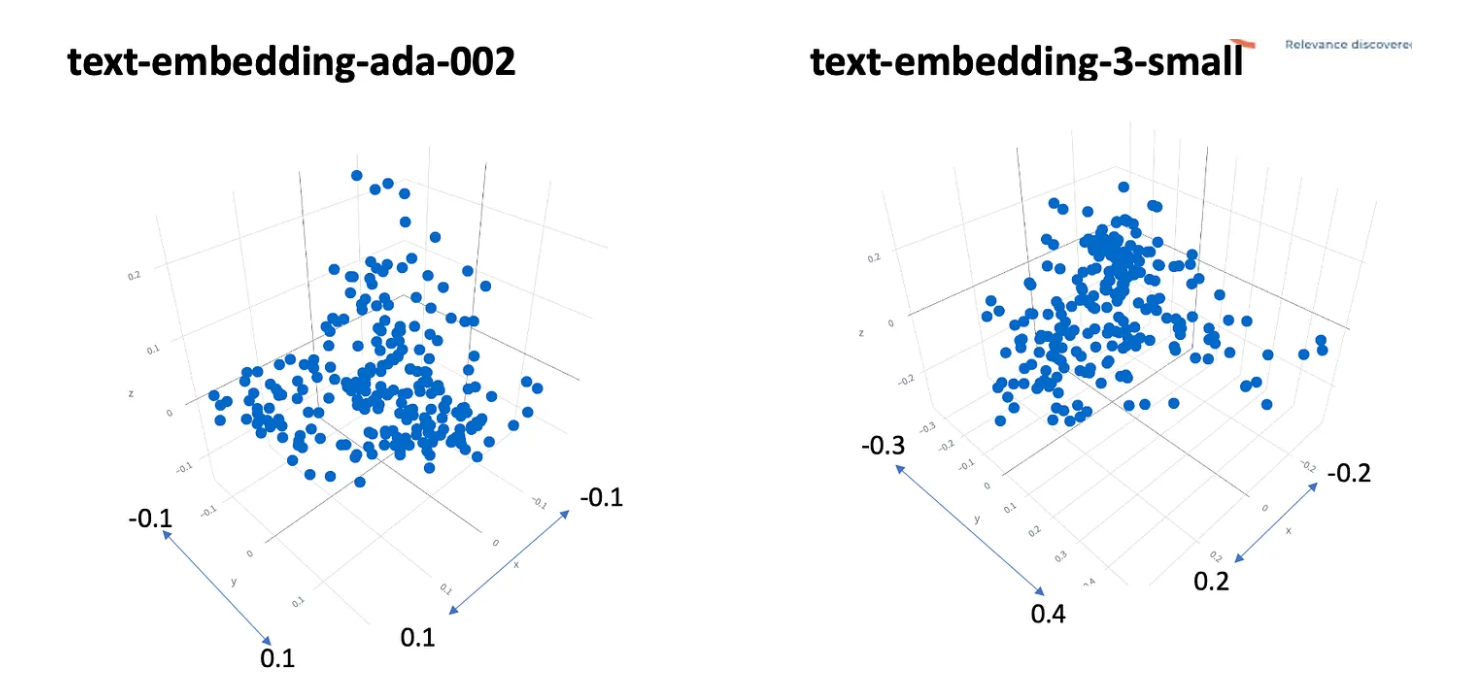

Text embeddings are numerical vectors that capture the semantic meaning of words, phrases, and longer passages. Think of them as coordinates in a vast multidimensional space where similar concepts cluster together: "king" and "queen" map to nearby points, while "apple" and "democracy" land far apart. These vectors are a foundational building block for how modern AI systems represent language.

The power of embeddings lies in their versatility. They let a computer recognize that "car" and "automobile" mean essentially the same thing, even though the two strings look nothing alike. This kind of semantic matching powers retrieval in chat assistants such as ChatGPT, helps search engines return relevant results without exact keyword matches, and lets recommendation systems suggest content based on meaning rather than surface features. Without embeddings, many of these systems would fall back on rigid keyword or pattern matching.
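To make "nearby points" concrete: closeness between embeddings is usually measured with cosine similarity, which is the cosine of the angle between two vectors. The sketch below is plain Python. The 4-dimensional vectors are made-up toy values standing in for real embeddings, which have 1536 dimensions by default. OpenAI returns its embeddings normalized to unit length, so in practice a plain dot product gives the same score.

```python
import math

def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 = same direction, 0.0 = unrelated (orthogonal)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimensionality")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical toy vectors; the values are invented for illustration only.
toy = {
    "car":        [0.90, 0.10, 0.05, 0.00],
    "automobile": [0.85, 0.15, 0.10, 0.05],
    "democracy":  [0.05, 0.10, 0.20, 0.95],
}

print(round(cosine_similarity(toy["car"], toy["automobile"]), 3))
print(round(cosine_similarity(toy["car"], toy["democracy"]), 3))
```

The first score comes out close to 1 and the second close to 0. That is the numerical version of "similar concepts cluster together."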

The Leap from ada-002 to 3-small

Moving from ada-002 to text-embedding-3-small brought measurable quality gains on both multilingual and English benchmarks:

- On multilingual retrieval tasks (MIRACL benchmark), the average score jumped from 31.4% to 44.0%, a 12.6-point gain (roughly 40% in relative terms) that makes the model significantly more effective for global applications

- English task performance (MTEB benchmark) also improved, from 61.0% to 62.3%: a smaller gain, but it shows the multilingual improvement did not come at the expense of English
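It is easy to confuse percentage points with percent, so here is the arithmetic for the scores quoted above. This small Python sketch computes both the absolute and the relative gain.

```python
def gains(old: float, new: float) -> tuple[float, float]:
    """Return (absolute gain in percentage points, relative gain as a fraction of the old score)."""
    return new - old, (new - old) / old

# Benchmark scores quoted in this article: ada-002 -> text-embedding-3-small.
benchmarks = {"MIRACL": (31.4, 44.0), "MTEB": (61.0, 62.3)}

for name, (old, new) in benchmarks.items():
    points, relative = gains(old, new)
    print(f"{name}: +{points:.1f} points ({relative:.1%} relative)")
```

So the "13" is percentage points on MIRACL, which is about a 40% relative improvement. The English MTEB gain works out to roughly 2% relative.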

The bigger shift is in pricing. ada-002 cost $0.10 per million tokens, while text-embedding-3-small costs $0.02 per million tokens. That 80% reduction changes the calculus for embedding-heavy applications. A startup processing 100 million tokens daily would see its embedding bill drop from $10 to $2 per day, freeing budget for other work. Better results at one-fifth the cost put capable embeddings within reach of far more teams.
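The cost example above reduces to tokens ÷ 1,000,000 × price. This minimal sketch hard-codes the prices quoted in this article. Treat them as assumptions and check OpenAI's current pricing page before budgeting.

```python
# USD per million input tokens, as quoted in this article (may change over time).
PRICE_PER_MILLION = {
    "text-embedding-ada-002": 0.10,
    "text-embedding-3-small": 0.02,
}

def embedding_cost(tokens: int, model: str) -> float:
    """Cost in USD to embed `tokens` tokens with `model`."""
    return tokens / 1_000_000 * PRICE_PER_MILLION[model]

daily_tokens = 100_000_000  # the startup example from the text
for model in PRICE_PER_MILLION:
    print(f"{model}: ${embedding_cost(daily_tokens, model):.2f}/day")
```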

The Flexible Dimension Feature

One of text-embedding-3-small's most useful features comes from Matryoshka Representation Learning. This training technique lets developers request shorter embeddings on the fly (through the API's dimensions parameter) without retraining the model. Like Russian nesting dolls, the most important information is packed into the earliest dimensions, with progressively less critical detail following.

This flexibility has practical benefits. Need faster similarity searches? Request 512-dimensional embeddings instead of the full 1536. Running low on vector database storage? Trim to 1024 dimensions and keep accuracy competitive. OpenAI reports that its larger sibling, text-embedding-3-large, still outperforms ada-002's full 1536-dimensional output on MTEB even when shortened to just 256 dimensions. That result shows how much information the new training approach packs into the leading dimensions. Developers can use this to balance accuracy, speed, and storage cost for each use case.
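Normally you would let the API do the shortening by passing dimensions (for example, `client.embeddings.create(model="text-embedding-3-small", input=texts, dimensions=512)` in the official Python SDK). If you have already stored full-length vectors, a common approach is to truncate them yourself and re-normalize to unit length so similarity scores stay comparable. The following sketch assumes plain Python lists and uses a toy vector. Storage figures count raw float32 only and ignore index overhead.

```python
import math

def shorten(embedding: list[float], dims: int) -> list[float]:
    """Keep the first `dims` coordinates and rescale them to unit length.

    This relies on Matryoshka-style training putting the most important
    information in the leading dimensions. Re-normalizing keeps cosine and
    dot-product scores on the same scale after truncation.
    """
    if not 0 < dims <= len(embedding):
        raise ValueError(f"dims must be in 1..{len(embedding)}")
    head = embedding[:dims]
    norm = math.sqrt(sum(x * x for x in head))
    if norm == 0:
        raise ValueError("cannot normalize an all-zero prefix")
    return [x / norm for x in head]

def float32_bytes(dims: int) -> int:
    """Raw storage for one float32 vector (4 bytes per dimension)."""
    return dims * 4

full = [0.6, 0.0, 0.8, 0.0, 0.0, 0.0]  # hypothetical toy vector, already unit length
print(shorten(full, 2))
for d in (1536, 1024, 512):
    print(d, "dims ->", float32_bytes(d), "bytes per vector")
```

Dropping from 1536 to 512 dimensions cuts raw vector storage by two thirds. It also reduces the work per similarity comparison.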

Real-World Applications

The impact of text-embedding-3-small extends across numerous domains where semantic understanding drives value. In semantic search and RAG systems, the model powers sophisticated customer support knowledge bases that understand intent rather than just keywords. When a user asks "how do I cancel my subscription," the system can retrieve relevant documents about account management, billing cessation, and membership termination, even if none use the exact phrase "cancel subscription."
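Under the hood, the retrieval step in that support scenario is nearest-neighbor search. You embed the query, score it against every stored document embedding, and keep the best matches. The brute-force sketch below shows the idea in plain Python. The document IDs and 3-dimensional vectors are hypothetical stand-ins for real embeddings. Production systems typically use a vector database with an approximate-nearest-neighbor index instead of scanning everything.

```python
import math

def normalize(v: list[float]) -> list[float]:
    """Scale a vector to unit length (effectively a no-op for already-normalized embeddings)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def top_k(query_vec: list[float], doc_vecs: dict[str, list[float]], k: int = 3) -> list[tuple[str, float]]:
    """Brute-force retrieval: score every document by cosine similarity and return the best k."""
    q = normalize(query_vec)
    scored = [
        (doc_id, sum(a * b for a, b in zip(q, normalize(vec))))
        for doc_id, vec in doc_vecs.items()
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]

# Hypothetical embeddings of help-center articles (invented values).
docs = {
    "account-management": [0.9, 0.3, 0.1],
    "billing-cessation":  [0.8, 0.5, 0.0],
    "shipping-times":     [0.1, 0.2, 0.95],
}
query = [0.85, 0.4, 0.05]  # stand-in for the embedding of "how do I cancel my subscription"
print(top_k(query, docs, k=2))
```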

For multilingual retrieval, the roughly 13-point MIRACL gain translates into substantially better search over non-English content. Organizations with documents in many languages can expect more accurate results. Cross-language setups, such as Spanish documents searched with English queries, may also benefit. MIRACL mainly measures same-language retrieval, though, so validate such setups on your own data.

In classification, clustering, and recommendation systems, the embeddings serve as rich features for machine learning models. E-commerce platforms use them to group similar products, detect anomalous reviews, and recommend items based on semantic similarity rather than simplistic keyword matching. More accurate embeddings can translate into better user experiences and, potentially, higher conversion rates.
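Using embeddings as features for classification can be quite simple. One illustrative technique, chosen here rather than taken from the text, is nearest-centroid classification. You average the embeddings of labeled examples per class, then assign a new item to the class whose centroid is most similar. The labels and 3-dimensional vectors below are hypothetical. Logistic regression or k-means clustering are common alternatives once you have real data.

```python
import math

def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def centroid(vectors: list[list[float]]) -> list[float]:
    """Element-wise mean of a non-empty list of vectors."""
    dims = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dims)]

def nearest_centroid(vec: list[float], labeled: dict[str, list[list[float]]]) -> str:
    """Return the label whose example centroid is most cosine-similar to `vec`."""
    centroids = {label: centroid(examples) for label, examples in labeled.items()}
    return max(centroids, key=lambda label: cosine(vec, centroids[label]))

# Hypothetical embeddings of product reviews, grouped by category.
labeled = {
    "electronics": [[0.9, 0.1, 0.0], [0.8, 0.2, 0.1]],
    "kitchen":     [[0.1, 0.9, 0.1], [0.0, 0.8, 0.3]],
}
print(nearest_centroid([0.85, 0.15, 0.05], labeled))
```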

Monitoring Embeddings in Production

While text-embedding-3-small makes embeddings economically viable at scale, production deployment introduces new challenges around observability and cost management. Teams processing millions of embeddings daily need visibility into token consumption, API latency, and performance patterns across different use cases. Platforms like PromptLayer can help here by tracking embedding costs, monitoring API reliability, and surfacing usage patterns that help teams optimize their semantic search systems.
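Whatever platform you use, the raw signals are the same: tokens billed and latency per call. The sketch below is a homegrown, hypothetical wrapper, not PromptLayer's API. It assumes an `embed_fn` that takes a list of texts and returns `(vectors, tokens_billed)`. With the official OpenAI Python SDK, such a function could call `client.embeddings.create(...)` and return `[d.embedding for d in resp.data]` and `resp.usage.total_tokens`. Here a fake function stands in so the example runs offline.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, Sequence

PRICE_PER_MILLION_TOKENS = 0.02  # text-embedding-3-small price as quoted in this article

@dataclass
class EmbeddingUsage:
    """Running totals for one embedding workload."""
    calls: int = 0
    tokens: int = 0
    latencies_s: list[float] = field(default_factory=list)

    @property
    def cost_usd(self) -> float:
        return self.tokens / 1_000_000 * PRICE_PER_MILLION_TOKENS

    @property
    def mean_latency_s(self) -> float:
        return sum(self.latencies_s) / len(self.latencies_s) if self.latencies_s else 0.0

# Hypothetical signature: texts in, (vectors, tokens_billed) out.
EmbedFn = Callable[[Sequence[str]], tuple[list[list[float]], int]]

def tracked(embed_fn: EmbedFn, usage: EmbeddingUsage) -> EmbedFn:
    """Wrap embed_fn so every call records its token count and wall-clock latency."""
    def wrapper(texts: Sequence[str]) -> tuple[list[list[float]], int]:
        start = time.perf_counter()
        vectors, tokens = embed_fn(texts)
        usage.latencies_s.append(time.perf_counter() - start)
        usage.calls += 1
        usage.tokens += tokens
        return vectors, tokens
    return wrapper

def fake_embed(texts: Sequence[str]) -> tuple[list[list[float]], int]:
    """Offline stand-in for an API call: dummy vectors, one 'token' per word."""
    return [[0.0, 0.0, 1.0] for _ in texts], sum(len(t.split()) for t in texts)

usage = EmbeddingUsage()
embed = tracked(fake_embed, usage)
embed(["how do I cancel my subscription", "billing help"])
print(usage.calls, usage.tokens, f"${usage.cost_usd:.8f}", f"{usage.mean_latency_s:.6f}s")
```

Keeping one `EmbeddingUsage` per use case (search, clustering, and so on) is one simple way to see which workload drives the bill.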

When to Choose 3-small vs Alternatives

Text-embedding-3-small hits the sweet spot for general-purpose, cost-sensitive, high-volume applications. It's the ideal choice when you need solid performance without breaking the budget: startup MVPs, proofs of concept, and production systems processing millions of daily requests. The model excels at standard semantic search, content recommendation, and document clustering tasks, where its balanced performance meets most requirements.

However, trade-offs exist. For mission-critical applications that demand the best accuracy, text-embedding-3-large (3072 dimensions) performs noticeably better. It costs $0.13 per million tokens, slightly more than ada-002's old $0.10 price. The large model scores 54.9% on MIRACL versus 3-small's 44.0%, which can justify the 6.5× price increase for applications where every percentage point matters.
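Price is only half the comparison. At default settings, 3-large's 3072-dimensional vectors also take twice the storage of 3-small's 1536. The sketch below estimates both for a hypothetical corpus of 10 million documents averaging 500 tokens each. Prices are those quoted in this article, and storage counts raw float32 vectors only.

```python
# Article-quoted prices (USD per million tokens) and default output dimensions.
MODELS = {
    "text-embedding-3-small": {"price_per_million": 0.02, "dims": 1536},
    "text-embedding-3-large": {"price_per_million": 0.13, "dims": 3072},
}

def corpus_estimate(model: str, n_docs: int, avg_tokens_per_doc: int) -> dict[str, float]:
    """One-time embedding cost and raw float32 vector storage for a corpus (no index overhead)."""
    spec = MODELS[model]
    tokens = n_docs * avg_tokens_per_doc
    return {
        "embed_cost_usd": tokens / 1_000_000 * spec["price_per_million"],
        "storage_gb": n_docs * spec["dims"] * 4 / 1e9,
    }

# Hypothetical corpus size, chosen only for illustration.
for name in MODELS:
    est = corpus_estimate(name, n_docs=10_000_000, avg_tokens_per_doc=500)
    print(f"{name}: ${est['embed_cost_usd']:,.0f} to embed, {est['storage_gb']:.1f} GB of vectors")
```

Because 3-large also accepts the dimensions parameter, requesting shorter vectors from it is one way to narrow the storage gap while keeping some of its accuracy advantage.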

Important considerations include the proprietary nature of OpenAI's API, potential rate limits for high-volume applications, and lack of customization for highly specialized domains. Some teams might need open-source alternatives like BGE or Nomic embeddings for on-premise deployment or fine-tuning capabilities. Evaluate your specific requirements around latency, privacy, customization needs, and budget constraints before committing.

Efficiency Meets Performance

Text-embedding-3-small shows that AI progress doesn't always require choosing between quality and cost. With a roughly 13-point gain on multilingual retrieval and 80% lower pricing, it delivers what was once a pipe dream: genuinely better results for dramatically less money. Its flexible dimensions and wide support across embedding tooling make it straightforward to put into production.

For most teams building semantic search or RAG systems, the decision is straightforward: text-embedding-3-small is the new baseline. Unless you're operating in highly specialized domains or need maximum accuracy regardless of cost, this model offers a rare combination of performance, flexibility, and economics that makes it the obvious starting point. The next time someone claims AI advancement means escalating costs, point them here: this is what democratization looks like.