spec-workflow-mcp: A Structured Approach to AI Software Development

For developers looking for ways to manage the complexity of autonomous agents, spec-workflow-mcp offers a structured option. It is a Model Context Protocol (MCP) server purpose-built for the disciplined creation of software specifications.

As organizations move from simple chatbots to sophisticated agents that manage files and implement features, they face challenges like context drift and architectural incoherence. spec-workflow-mcp addresses this by mirroring best practices from the traditional SDLC - think requirements docs, design phases, and approval gates - but tailored specifically for AI-driven projects.

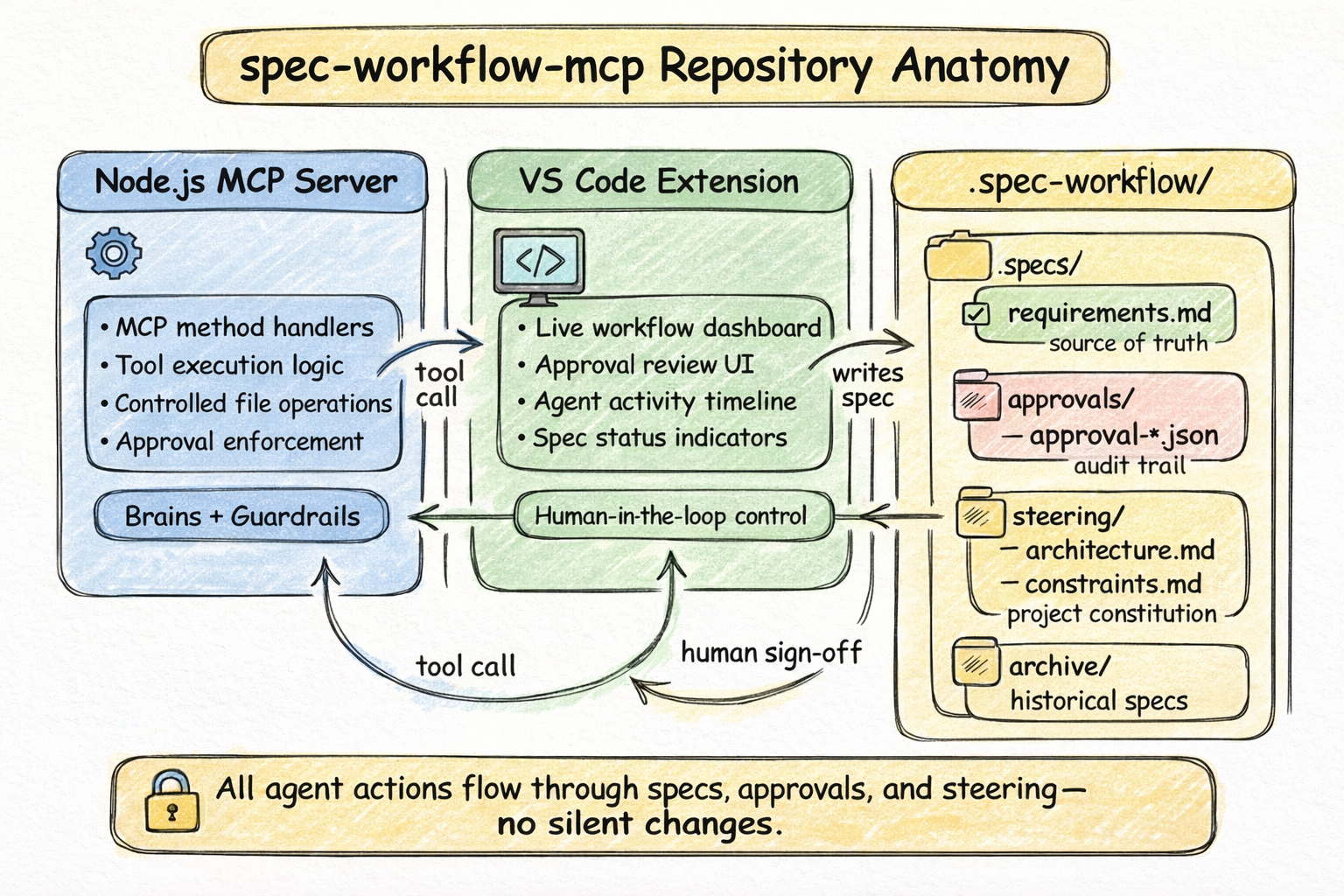

Inside the spec-workflow-mcp Architecture

The core of this approach lies in its structure. It is designed to bring order to agentic development through a specific project anatomy:

The Repository Structure

- Node.js Logic: Handles core server logic for MCP methods, tool execution, and file operations.

- VS Code Extension: Offers a rich, live dashboard, allowing developers to oversee AI-driven workflows directly within their IDE.

- .spec-workflow Folder: The backbone for orchestrating state, typically organized into:

  - specs/ (Markdown specs for requirements and design)

  - approvals/ (JSON records of human sign-offs)

  - steering/ (Project-wide technical directives)

  - archive/ (Historical specs for traceability)
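To make the folder anatomy concrete, here is a minimal sketch of helpers that build paths inside .spec-workflow. The sub-folder names come from the list above; the per-spec sub-directory, the approval file naming, and the function names `specDocPath` and `approvalPath` are assumptions for illustration, not the project's actual layout or API.

```typescript
// Hypothetical path helpers for the .spec-workflow folder.
// Assumption: each spec gets its own sub-directory under specs/ and approvals/.
type SpecDocument = "requirements.md" | "design.md" | "tasks.md";

const WORKFLOW_DIR = ".spec-workflow";

// Remove trailing slashes so joined paths never contain "//".
function trimTrailingSlashes(path: string): string {
  return path.replace(/\/+$/, "");
}

// Path of one spec document, e.g. <root>/.spec-workflow/specs/<spec>/design.md
function specDocPath(projectRoot: string, specName: string, doc: SpecDocument): string {
  return `${trimTrailingSlashes(projectRoot)}/${WORKFLOW_DIR}/specs/${specName}/${doc}`;
}

// Path of one JSON approval record (file naming is assumed).
function approvalPath(projectRoot: string, specName: string, approvalId: string): string {
  return `${trimTrailingSlashes(projectRoot)}/${WORKFLOW_DIR}/approvals/${specName}/${approvalId}.json`;
}
```

Keeping path construction in one place means a change to the folder layout touches a single module rather than every tool handler.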

Key Functionalities

- Semantic Tooling: Instead of generic file-writing, the server exposes intent-rich tools like create-spec-doc, request-approval, and manage-tasks.

- Automated Approval Queue: Drafts submitted by the AI are queued automatically for human review. The workflow proceeds only once a document has been approved.

- Steering Documents: These act as a project constitution, ensuring design choices remain coherent even as features evolve.
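As a rough illustration of what "intent-rich" tooling means, the sketch below declares two of the tools named above in the shape MCP uses for tool listings (a name, a description, and a JSON Schema under `inputSchema`). The tool names come from the text; the descriptions, parameter names, and the `getTool` and `missingArguments` helpers are hypothetical and may not match the real server.

```typescript
// Shape of an MCP tool declaration: name, description, JSON Schema for inputs.
interface ToolDefinition {
  name: string;
  description: string;
  inputSchema: {
    type: "object";
    properties: Record<string, { type: string; description?: string; enum?: string[] }>;
    required?: string[];
  };
}

// Assumption: parameter names below are invented for illustration.
const tools: ToolDefinition[] = [
  {
    name: "create-spec-doc",
    description: "Create or update one spec document for a feature.",
    inputSchema: {
      type: "object",
      properties: {
        specName: { type: "string", description: "Feature identifier" },
        document: { type: "string", enum: ["requirements", "design", "tasks"] },
        content: { type: "string", description: "Markdown body" },
      },
      required: ["specName", "document", "content"],
    },
  },
  {
    name: "request-approval",
    description: "Queue a drafted document for human review.",
    inputSchema: {
      type: "object",
      properties: {
        specName: { type: "string" },
        document: { type: "string", enum: ["requirements", "design", "tasks"] },
      },
      required: ["specName", "document"],
    },
  },
];

// Look up a tool by name, failing loudly if it is not declared.
function getTool(name: string): ToolDefinition {
  for (const tool of tools) {
    if (tool.name === name) return tool;
  }
  throw new Error(`Unknown tool: ${name}`);
}

// List required arguments that the caller did not supply.
function missingArguments(tool: ToolDefinition, args: Record<string, unknown>): string[] {
  return (tool.inputSchema.required ?? []).filter((key) => !(key in args));
}
```

Because each tool carries its own schema, a malformed call can be rejected before anything touches the filesystem, which a generic "write file" tool cannot offer.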

The Implementation Framework: Four Phases

At the heart of spec-workflow-mcp is a structured process best described as "Micro-Waterfall." While the term "Waterfall" might seem counterintuitive in an Agile world, this framework applies that discipline strictly to atomic units of work, rather than the entire project.

- Requirements: Define "what" needs to be built. No code is allowed at this stage; the only output is requirements.md.

- Design: Once requirements are approved, specify the "how" - structures and stack choices in design.md.

- Tasks: Decompose the design into atomic work units in tasks.md.

- Implementation: Agents execute only the approved, pre-defined tasks.

Every phase requires explicit human approval before the AI can progress. This tight feedback loop reduces the risk of runaway generations, helping ensure outputs match the original vision.
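The gated progression can be pictured as a small state machine. The following is a minimal sketch under stated assumptions: the phase names come from the list above, while `SpecState`, `recordApproval`, and `advance` are hypothetical names, not the server's real data model.

```typescript
// The four phases, in the order they must be completed.
const PHASES = ["requirements", "design", "tasks", "implementation"] as const;
type Phase = (typeof PHASES)[number];
type ApprovalStatus = "pending" | "approved" | "rejected";

// Hypothetical state for one spec: current phase plus recorded sign-offs.
interface SpecState {
  phase: Phase;
  approvals: Partial<Record<Phase, ApprovalStatus>>;
}

// Record a human decision for a phase without mutating the input state.
function recordApproval(state: SpecState, phase: Phase, status: ApprovalStatus): SpecState {
  const approvals: Partial<Record<Phase, ApprovalStatus>> = { ...state.approvals };
  approvals[phase] = status;
  return { phase: state.phase, approvals };
}

// Move to the next phase only if the current one has been approved.
function advance(state: SpecState): SpecState {
  if (state.approvals[state.phase] !== "approved") {
    throw new Error(`Phase "${state.phase}" has not been approved`);
  }
  const next = PHASES[PHASES.indexOf(state.phase) + 1];
  if (next === undefined) {
    throw new Error("Implementation is the final phase");
  }
  return { phase: next, approvals: state.approvals };
}
```

The point of the design is that `advance` has no override path: the only way forward is an approval record for the current phase.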

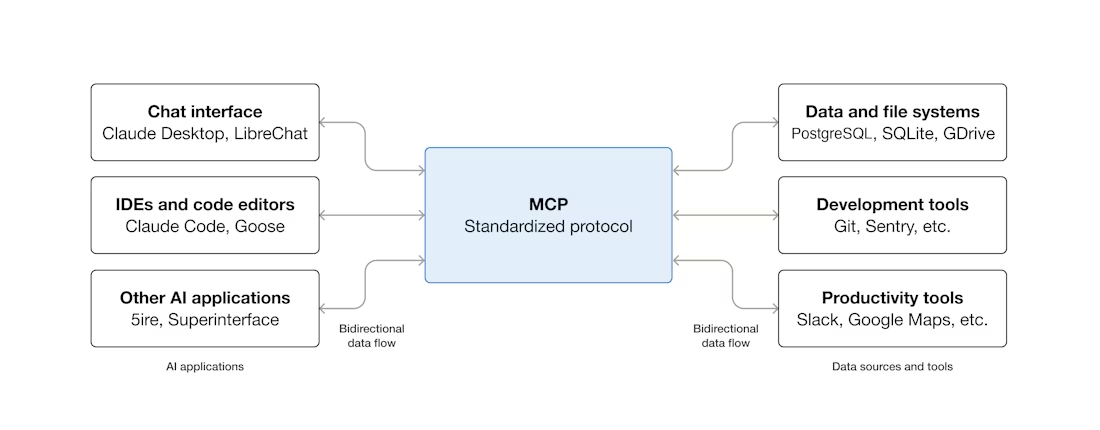

The Foundation: Understanding the Model Context Protocol (MCP)

To understand why spec-workflow-mcp works effectively across different environments, it helps to understand the underlying protocol.

The Model Context Protocol acts like a "USB-C for AI Agents." It solves the "N × M" integration problem by standardizing how AI models interact with data sources and tools. Rather than hard-coding integrations for every model, MCP allows any compatible agent to access external resources through a universal interface.

MCP messages are exchanged as JSON-RPC 2.0, and a server's capabilities are organized around three primitives:

- Resources: "Read" operations (documents, data).

- Tools: "Write" or "execute" actions (creating specs, changing state).

- Prompts: Reusable templates or instructions.

This separation allows teams to control exactly what models can see and do, minimizing confusion and security risks.
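To show how the three primitives look on the wire, here is a minimal sketch that builds one JSON-RPC 2.0 request per primitive. The method names `resources/read`, `tools/call`, and `prompts/get` are defined by MCP; the URI, the prompt name, and the argument values are made up for illustration, and whether spec-workflow-mcp exposes specs as resources is an assumption.

```typescript
// Minimal JSON-RPC 2.0 request envelope.
interface JsonRpcRequest {
  jsonrpc: "2.0";
  id: number;
  method: string;
  params: Record<string, unknown>;
}

type Primitive = "resource" | "tool" | "prompt";

let nextId = 1;

// Build a request with a fresh, incrementing id.
function request(method: string, params: Record<string, unknown>): JsonRpcRequest {
  return { jsonrpc: "2.0", id: nextId++, method, params };
}

// Classify a method by its namespace prefix.
function primitiveOf(method: string): Primitive | undefined {
  const prefix = method.split("/")[0];
  if (prefix === "resources") return "resource";
  if (prefix === "tools") return "tool";
  if (prefix === "prompts") return "prompt";
  return undefined;
}

// Resource: read-only access to a document (hypothetical URI).
const readSpec = request("resources/read", {
  uri: "file:///project/.spec-workflow/specs/user-auth/requirements.md",
});

// Tool: an action that changes state (hypothetical arguments).
const callTool = request("tools/call", {
  name: "request-approval",
  arguments: { specName: "user-auth", document: "requirements" },
});

// Prompt: a reusable template (hypothetical prompt name).
const getPrompt = request("prompts/get", {
  name: "draft-requirements",
  arguments: { feature: "user-auth" },
});
```

Because reads and writes travel under different method namespaces, a host can allow `resources/read` freely while requiring confirmation for every `tools/call`.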

Observability in LLM Applications

If spec-workflow-mcp is the backbone that keeps your agent on track, deep observability is the nervous system that tells you what's actually happening. As developers move from simple linear scripts to dynamic "agent graphs", the complexity explodes. Suddenly, knowing why an agent made a specific decision - or why it requested an unexpected approval - is just as important as the decision itself. By natively supporting MCP, a platform like PromptLayer can act as a control plane for these workflows. Every action - prompt, tool call, document generation, or approval - creates a "span" in the trace.

This combination of structured specifications and deep observability provides significant benefits for multi-model environments, promoting AI accountability:

- Debugging: Developers can drill down to the exact tool call or parameter when an output looks off.

- Cost & Performance: Teams can analyze which phases dominate token usage or latency.

- Compliance: All approval actions and document changes are audit-logged in structured formats.
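The cost and performance point can be sketched with a generic span record. This is not PromptLayer's API or data format; the `Span` fields, the sample values, and `summarizeByPhase` are assumptions chosen only to show how per-phase token usage and latency could be rolled up from a trace.

```typescript
type Phase = "requirements" | "design" | "tasks" | "implementation";

// Hypothetical span record: one entry per action in the trace.
interface Span {
  name: string;
  phase: Phase;
  kind: "prompt" | "tool_call" | "document" | "approval";
  startMs: number;
  endMs: number;
  tokens: number;
}

interface PhaseSummary {
  tokens: number;
  latencyMs: number;
}

// Sum token usage and elapsed time for each phase.
function summarizeByPhase(spans: Span[]): Record<string, PhaseSummary> {
  const summary: Record<string, PhaseSummary> = {};
  for (const span of spans) {
    const current = summary[span.phase] ?? { tokens: 0, latencyMs: 0 };
    summary[span.phase] = {
      tokens: current.tokens + span.tokens,
      latencyMs: current.latencyMs + (span.endMs - span.startMs),
    };
  }
  return summary;
}

// Illustrative data only; the numbers are invented.
const exampleSpans: Span[] = [
  { name: "draft-requirements", phase: "requirements", kind: "prompt", startMs: 0, endMs: 1200, tokens: 900 },
  { name: "create-spec-doc", phase: "requirements", kind: "tool_call", startMs: 1200, endMs: 1350, tokens: 0 },
  { name: "draft-design", phase: "design", kind: "prompt", startMs: 5000, endMs: 7400, tokens: 1300 },
];

const phaseSummary = summarizeByPhase(exampleSpans);
```

Tagging each span with its workflow phase is what makes a question like "which phase dominates cost?" a simple aggregation instead of a log-reading exercise.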

Security by Design

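This workflow prioritizes safety alongside automation. MCP defines "roots" - directory-level boundaries that a client declares to tell a server which parts of the filesystem it may operate in. The protocol does not sandbox anything by itself; servers are expected to respect those boundaries, so the confinement is only as strong as the server and host implementation. Furthermore, the human-in-the-loop design routes workflow transitions through approval gates, so an agent should not be able to move from one phase to the next without explicit permission.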
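A server that wants to honor a directory-level boundary has to check every path it is asked to touch. Below is a minimal sketch of such a check, assuming absolute POSIX-style paths rather than the file:// URIs MCP uses for roots, and ignoring symlinks; `normalizeSegments` and `isWithinRoots` are hypothetical helper names.

```typescript
// Split a path into segments, resolving "." and ".." lexically.
// Assumption: absolute POSIX-style paths; symlinks are not resolved.
function normalizeSegments(path: string): string[] {
  const segments: string[] = [];
  for (const segment of path.split("/")) {
    if (segment === "" || segment === ".") continue;
    if (segment === "..") {
      segments.pop();
      continue;
    }
    segments.push(segment);
  }
  return segments;
}

// True if the target lies inside (or equals) at least one allowed root.
function isWithinRoots(target: string, roots: string[]): boolean {
  const targetSegments = normalizeSegments(target);
  return roots.some((root) => {
    const rootSegments = normalizeSegments(root);
    return (
      rootSegments.length <= targetSegments.length &&
      rootSegments.every((segment, index) => segment === targetSegments[index])
    );
  });
}
```

Comparing whole segments rather than raw string prefixes avoids the classic mistake of treating /repository as if it were inside /repo. A production check would also resolve symlinks before comparing.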

Your Next Move: Make Your Agents Boring (In the Best Way)

If you want agents you can actually trust, don’t chase “smarter” - chase tighter. Lock down context with steering docs, force clarity with requirements and design, and make every leap forward earn an approval. That’s how you turn probabilistic outputs into deterministic progress.

Start small this week: stand up spec-workflow-mcp locally, draft one feature’s requirements/design/tasks, and wire the whole loop into PromptLayer so every tool call is traceable. Then ship exactly what got approved - nothing more, nothing less.

The teams that win with agentic AI won’t be the ones with the flashiest demos. They’ll be the ones who can point to a trace, an approval record, and a spec - and say, “Here’s why this behavior happened, and here’s how we change it.”