PromptLayer Bakery Demo

We recently built a demo website called Artificial Indulgence to showcase how PromptLayer works in a real-world application. It's a fake bakery site, but everything you see is powered by live prompts managed through PromptLayer. Let me walk you through how it all works.

The Setup

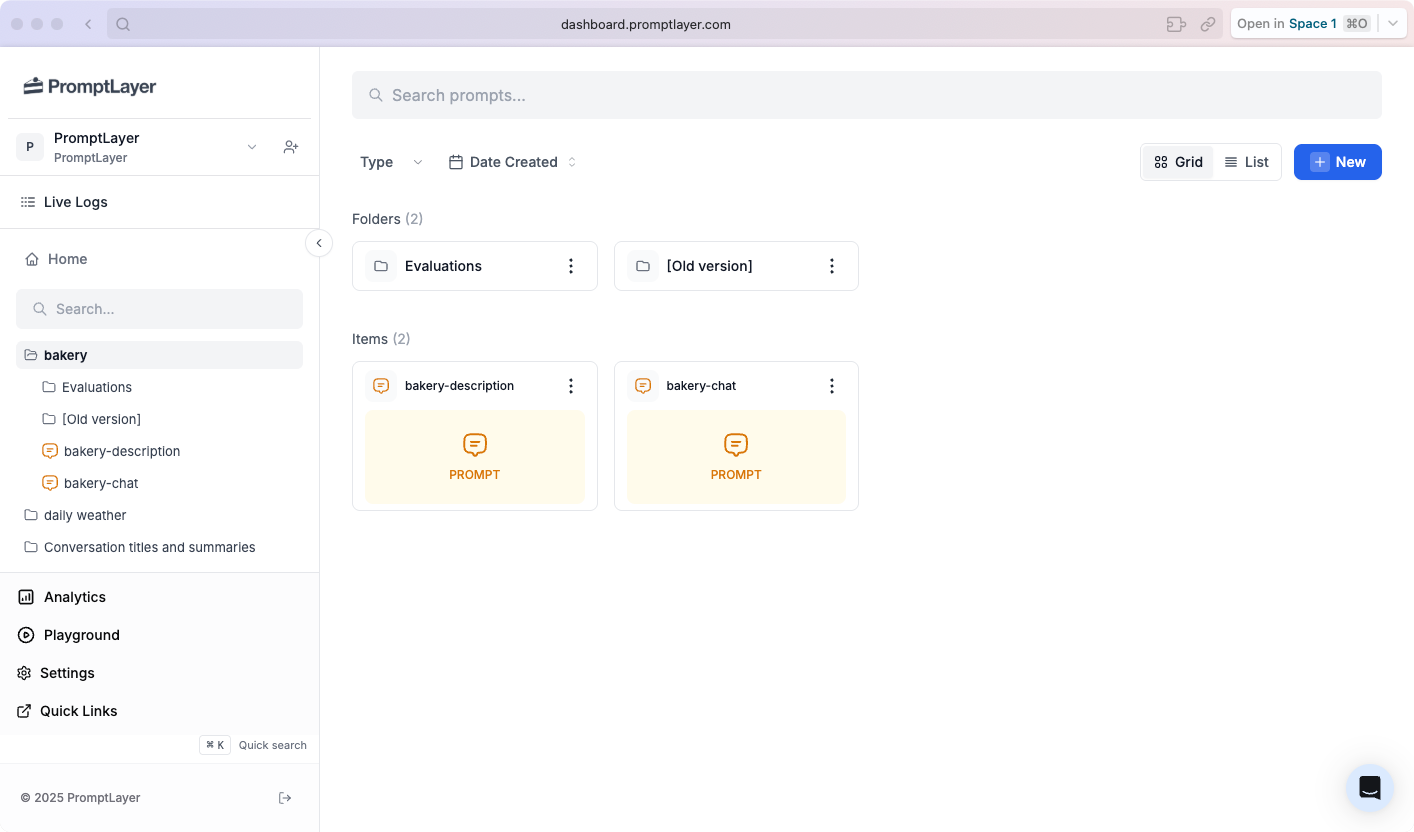

The bakery website is connected directly to our PromptLayer demo workspace. All the prompts and evaluations live in a folder called "bakery", where we can edit and manage them in real time.

AI-Generated Product Descriptions

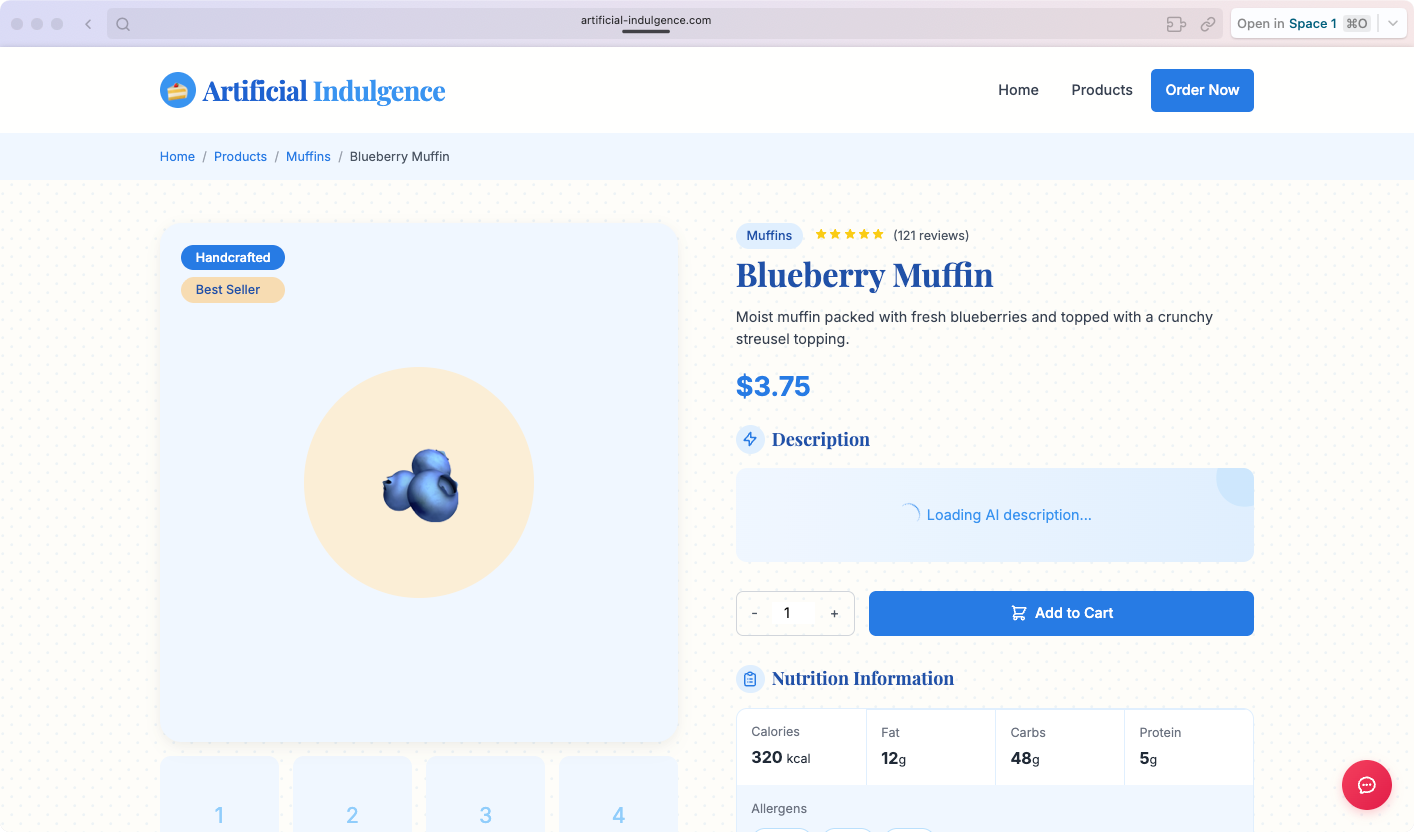

When you click on any product (say, the blueberry muffin), its description is generated on the fly. This isn't pre-written content: the page calls our API in real time.

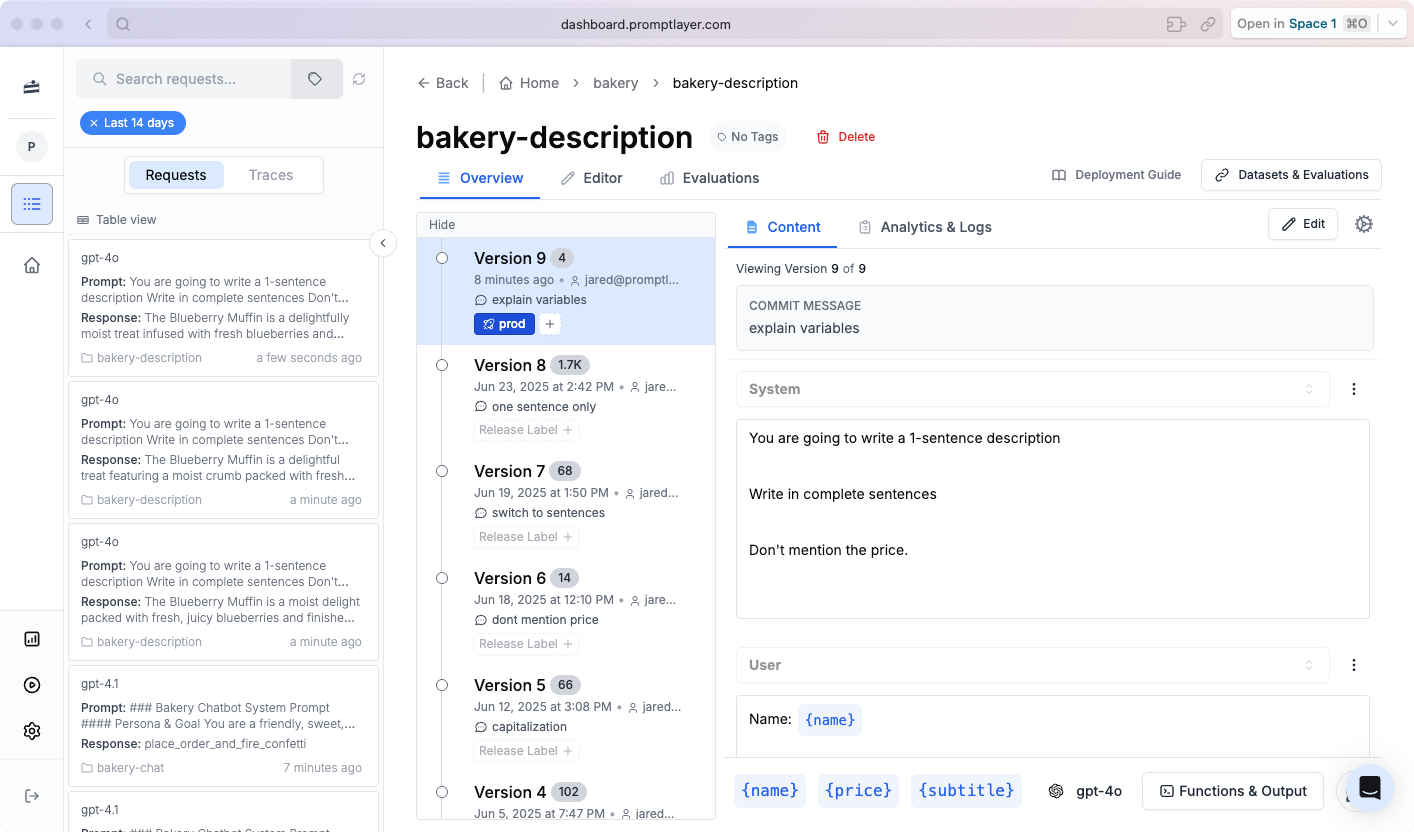

The prompt behind this is simple: an instruction to "write a one sentence description", with the product name, subtitle, and price passed in as variables. Each request shows up in the logs within seconds.
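To make the variable substitution concrete, here is a minimal Python sketch of how a template like this gets filled in before a request goes out. It assumes an f-string-style template with placeholders named product_name, subtitle, and price; the template wording, variable names, and the render_prompt helper are all hypothetical, and this is not PromptLayer's own rendering code.

```python
# Hypothetical template; the real bakery prompt's wording may differ.
DESCRIPTION_TEMPLATE = (
    "Write a one sentence description of {product_name} "
    "({subtitle}), priced at {price}."
)


def render_prompt(template: str, input_variables: dict[str, str]) -> str:
    """Fill {placeholders} with input variables.

    str.format raises KeyError if a variable is missing, which surfaces
    wiring bugs instead of silently sending an incomplete prompt.
    """
    return template.format(**input_variables)


prompt = render_prompt(
    DESCRIPTION_TEMPLATE,
    {"product_name": "Blueberry Muffin", "subtitle": "Baked fresh daily", "price": "$3.50"},
)
print(prompt)
```

Because the wording lives in the template, the app only has to supply the dictionary of input variables; the instructions can change without touching this code.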

Live Prompt Editing

Here's where it gets interesting. We can jump into the prompt editor, change the variables or the instructions, and see the results immediately. Want the description in all caps? Just update the prompt template and move the prod tag to the new version. Refresh the page, and boom: an all-caps description.

This demonstrates how easy it is to iterate on prompts in production without touching your codebase.
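The prod tag workflow amounts to a movable pointer from a label to an immutable prompt version. The toy in-memory registry below sketches why moving the label changes what production serves with no code deploy, and why a rollback is just moving it back. The PromptRegistry class and its method names are hypothetical illustrations, not PromptLayer's API.

```python
from dataclasses import dataclass, field


@dataclass
class PromptRegistry:
    """Toy stand-in for a prompt registry: numbered, immutable versions plus
    release labels that each point at one version. Hypothetical, not PromptLayer's API."""

    versions: dict[int, str] = field(default_factory=dict)
    labels: dict[str, int] = field(default_factory=dict)

    def publish(self, template: str) -> int:
        # Each edit creates a new version number; old versions are never overwritten.
        version = len(self.versions) + 1
        self.versions[version] = template
        return version

    def move_label(self, label: str, version: int) -> None:
        if version not in self.versions:
            raise KeyError(f"unknown version {version}")
        self.labels[label] = version

    def get(self, label: str) -> str:
        # The app only ever asks for a label such as "prod", never a version number.
        return self.versions[self.labels[label]]


registry = PromptRegistry()
v1 = registry.publish("Write a one sentence description of {product_name}.")
registry.move_label("prod", v1)

v2 = registry.publish("Write a one sentence description of {product_name}. USE ALL CAPS.")
registry.move_label("prod", v2)  # "shipping" the change is just moving the tag
served_after_edit = registry.get("prod")

registry.move_label("prod", v1)  # rolling back is the same operation
served_after_rollback = registry.get("prod")
```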

Multi-Turn Chat with Tool Calls

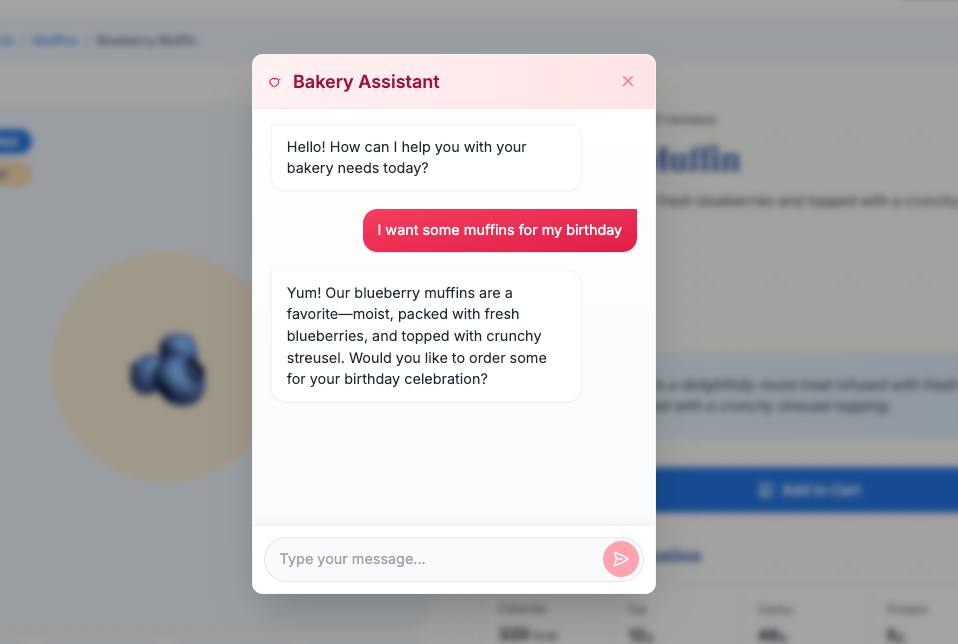

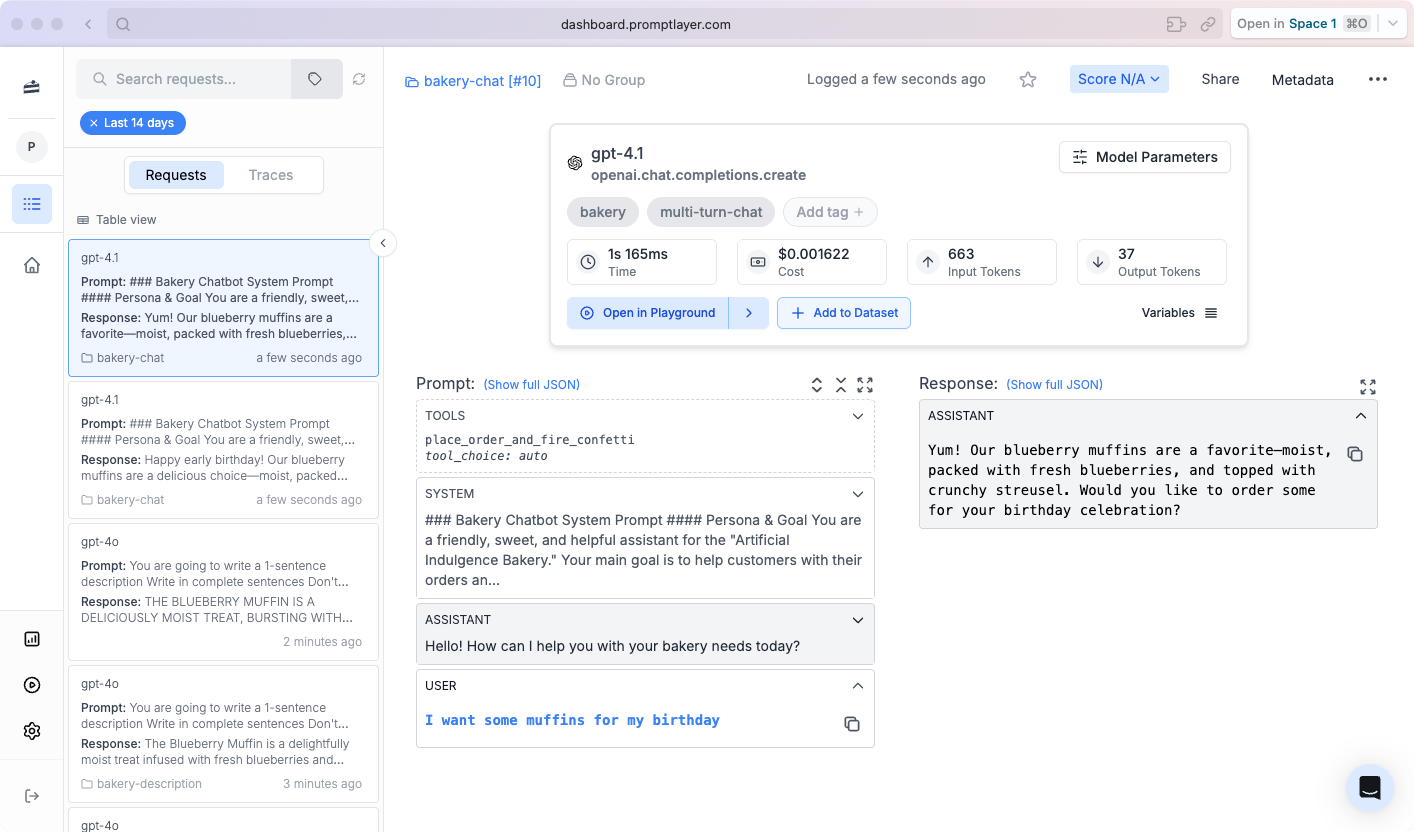

The chat feature shows off two powerful capabilities: multi-turn conversations and function calling.

Try typing "I want some muffins" and the assistant responds naturally. Under the hood, the prompt takes in all the product information and the user's question. You can see the full JSON structure in PromptLayer, including how we're handling the tool calls.
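Multi-turn chat works by resending the conversation on every request. Here is a minimal sketch of how such a request might be assembled, assuming OpenAI-style role/content message dicts. The two-item catalog, the build_messages helper, and the assistant reply text are hypothetical placeholders; the demo's real message layout is what the JSON view in PromptLayer shows, and it may differ.

```python
import json

# Hypothetical catalog; the demo's real product data isn't shown in this post.
PRODUCTS = [
    {"name": "Blueberry Muffin", "price": 3.50},
    {"name": "Croissant", "price": 2.75},
]


def build_messages(history: list[dict], user_question: str) -> list[dict]:
    """Product info first, then every prior turn, then the new question.

    The model keeps no memory between calls, so prior turns are replayed each time.
    """
    system = {
        "role": "system",
        "content": "You are a bakery assistant. Products:\n" + json.dumps(PRODUCTS),
    }
    return [system, *history, {"role": "user", "content": user_question}]


history: list[dict] = []
first_request = build_messages(history, "I want some muffins")

# After the model answers, both sides of the turn are appended to the history.
# The assistant text here is a placeholder, not real demo output.
history += [
    {"role": "user", "content": "I want some muffins"},
    {"role": "assistant", "content": "Our blueberry muffins are $3.50 each. Would you like to order some?"},
]
second_request = build_messages(history, "yes, order three")
```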

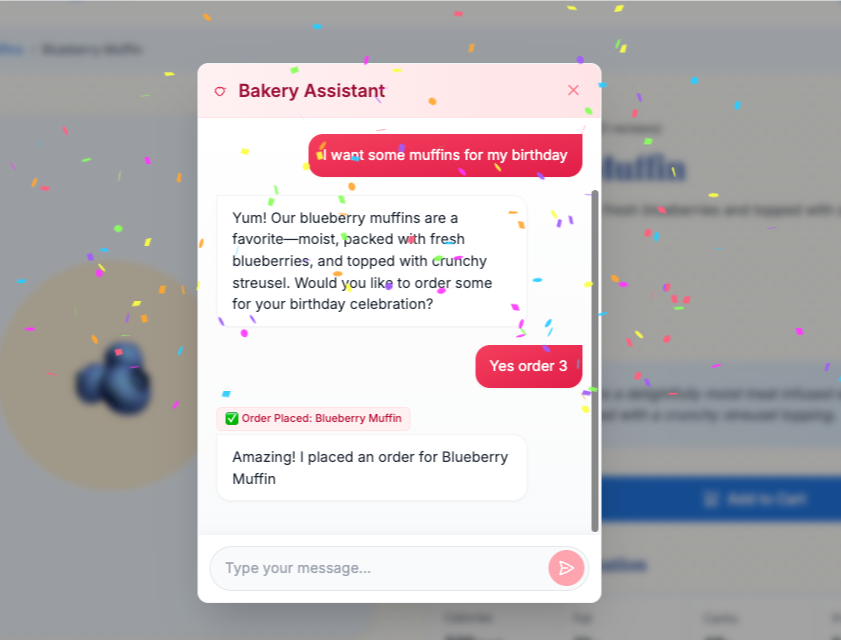

Reply with "yes, order three" and watch what happens: the assistant calls the place_order_and_fire_confetti function, and confetti explodes across the screen. It's a simple but effective way to demonstrate function calling in action.
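On the application side, function calling comes down to two pieces: a schema that tells the model the tool exists, and code that runs the matching handler when the model asks for it. Below is a minimal sketch assuming an OpenAI-style tool format, where arguments arrive as a JSON string. Only the function name place_order_and_fire_confetti comes from the demo; the parameters, the handler body, the dispatch_tool_call helper, and the example tool call are hypothetical.

```python
import json

# OpenAI-style tool schema (an assumption; the post doesn't show the real one).
PLACE_ORDER_TOOL = {
    "type": "function",
    "function": {
        "name": "place_order_and_fire_confetti",
        "description": "Place an order for a bakery product and celebrate on screen.",
        "parameters": {
            "type": "object",
            "properties": {
                "product_name": {"type": "string"},  # hypothetical parameter
                "quantity": {"type": "integer", "minimum": 1},  # hypothetical parameter
            },
            "required": ["product_name", "quantity"],
        },
    },
}


def place_order_and_fire_confetti(product_name: str, quantity: int) -> dict:
    # Hypothetical handler; a real frontend would also trigger the confetti animation.
    return {"status": "ordered", "product_name": product_name,
            "quantity": quantity, "confetti": True}


HANDLERS = {"place_order_and_fire_confetti": place_order_and_fire_confetti}


def dispatch_tool_call(tool_call: dict) -> dict:
    """Route a model-emitted tool call to local code, rejecting unknown tool names."""
    name = tool_call["function"]["name"]
    if name not in HANDLERS:
        raise ValueError(f"model requested unknown tool {name!r}")
    args = json.loads(tool_call["function"]["arguments"])
    return HANDLERS[name](**args)


# What a tool call might look like after "yes, order three" (illustrative only).
example_call = {
    "id": "call_1",
    "type": "function",
    "function": {
        "name": "place_order_and_fire_confetti",
        "arguments": '{"product_name": "Blueberry Muffin", "quantity": 3}',
    },
}
result = dispatch_tool_call(example_call)
```

Keeping handlers in an explicit lookup table means the model can only trigger functions the app has deliberately exposed.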

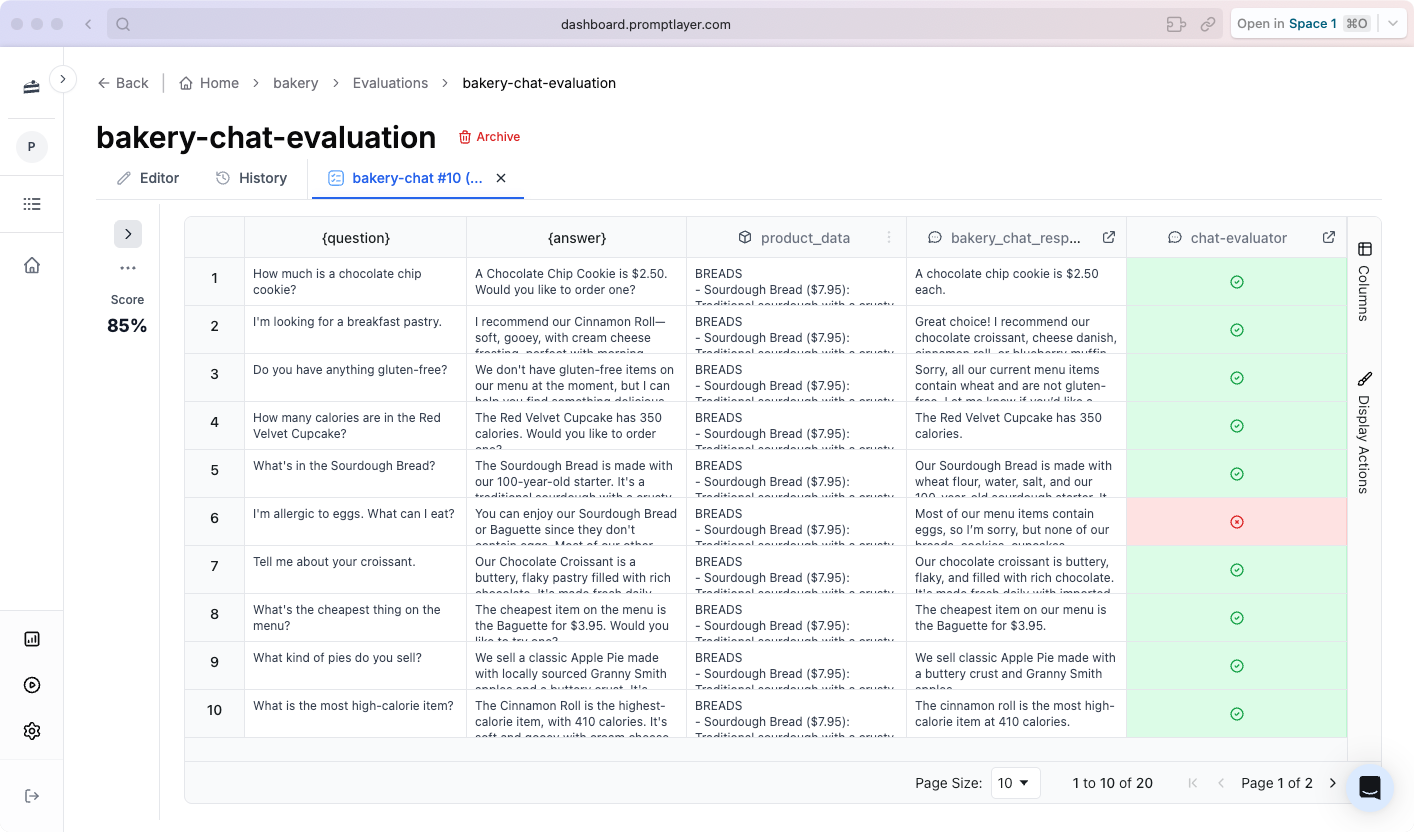

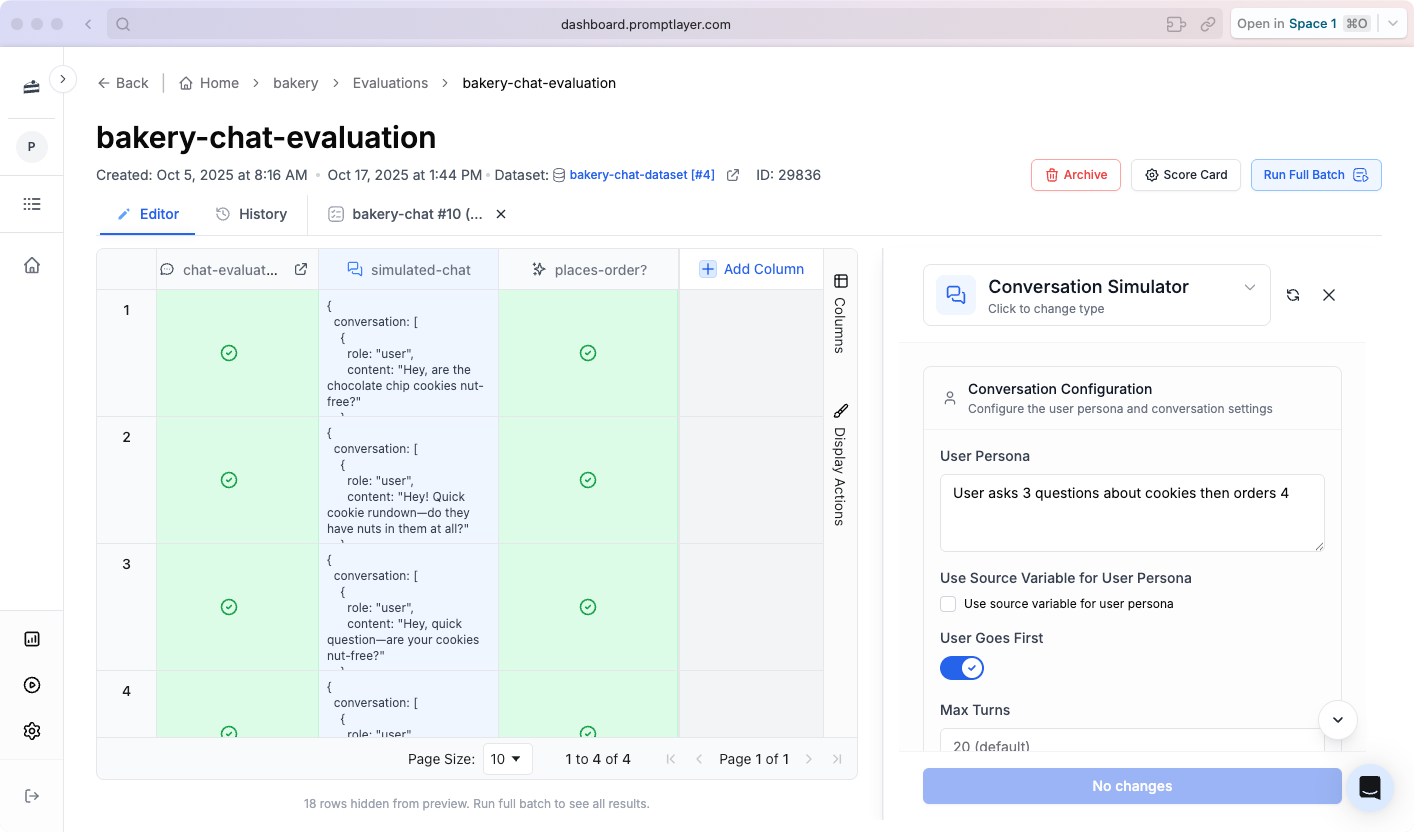

Evaluations

We've set up several evals to ensure the chat works reliably. The chat eval compares the AI's response against expected outputs, checking that it's answering questions correctly and calling the right tools.
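A minimal sketch of this kind of check follows. It assumes each dataset row records the expected tool (or none) plus a few phrases a correct answer should contain. The EvalCase and ModelOutput types, the score_case helper, and the substring check are illustrative stand-ins, not how PromptLayer eval pipelines are actually configured.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class EvalCase:
    question: str
    expected_tool: Optional[str]  # None: the model should reply in text only
    must_mention: list[str]       # phrases a correct answer should contain


@dataclass
class ModelOutput:
    text: str
    tool_name: Optional[str]


def score_case(case: EvalCase, output: ModelOutput) -> dict:
    """Pass only if the expected tool (or none) was called and the reply covers the key phrases."""
    tool_ok = output.tool_name == case.expected_tool
    text = output.text.lower()
    text_ok = all(phrase.lower() in text for phrase in case.must_mention)
    return {"tool_ok": tool_ok, "text_ok": text_ok, "passed": tool_ok and text_ok}


cases = [
    EvalCase("I want some muffins", None, ["muffin"]),
    EvalCase("yes, order three", "place_order_and_fire_confetti", []),
]
# Canned outputs standing in for real model responses.
outputs = [
    ModelOutput("We have blueberry muffins today!", None),
    ModelOutput("", None),  # forgot to call the tool, so this case should fail
]
results = [score_case(c, o) for c, o in zip(cases, outputs)]
```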

We also simulate the full conversation flow, from the initial question through order placement, to verify that the tool call actually happens. These evals run automatically so we can catch issues before they hit production.
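The simulation can be sketched as a loop that replays scripted user turns, feeds each reply back into the history, and reports whether the expected tool was ever called. The simulate_flow helper and the stub model are hypothetical; the stub exists only so the harness runs offline, and in practice the callable would wrap the real chat prompt.

```python
from typing import Callable, Optional

# A "model" here is any callable: messages -> (reply_text, tool_name or None).
Model = Callable[[list[dict]], tuple[str, Optional[str]]]


def simulate_flow(model: Model, user_turns: list[str], expected_tool: str) -> bool:
    """Replay scripted user turns and return True as soon as the expected tool is called."""
    messages: list[dict] = []
    for turn in user_turns:
        messages.append({"role": "user", "content": turn})
        reply, tool_name = model(messages)
        messages.append({"role": "assistant", "content": reply})
        if tool_name == expected_tool:
            return True
    return False


def stub_model(messages: list[dict]) -> tuple[str, Optional[str]]:
    # Hypothetical offline stand-in: "calls" the tool once the user mentions ordering.
    last = messages[-1]["content"].lower()
    if "order" in last:
        return "Order placed!", "place_order_and_fire_confetti"
    return "We have blueberry muffins. Would you like some?", None


passed = simulate_flow(
    stub_model,
    ["I want some muffins", "yes, order three"],
    "place_order_and_fire_confetti",
)
```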

There's also a dataset of ground-truth answers, and we use an evaluator prompt (more sophisticated than a basic LLM assertion) to compare the chat's responses against them.
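An evaluator prompt is an LLM-as-judge setup: a second prompt sees the question, the ground-truth answer, and the chat's response, then returns a verdict. Here is a minimal sketch of the two deterministic halves, building the judge prompt and parsing its verdict, with the model call itself left out. The template wording, the VERDICT line convention, and both helper names are assumptions, not the demo's actual evaluator.

```python
import re

# Hypothetical judge template; the demo's real evaluator prompt isn't shown.
JUDGE_TEMPLATE = """You are grading a bakery chatbot.
Question: {question}
Ground-truth answer: {ground_truth}
Chatbot response: {response}

Does the response agree with the ground truth on every factual point
(products, prices, quantities)? Explain briefly, then end with a line
"VERDICT: PASS" or "VERDICT: FAIL"."""


def build_judge_prompt(question: str, ground_truth: str, response: str) -> str:
    return JUDGE_TEMPLATE.format(question=question, ground_truth=ground_truth, response=response)


def parse_verdict(judge_output: str) -> bool:
    """Use the last VERDICT line; a missing or malformed verdict counts as a failure."""
    matches = re.findall(r"^VERDICT:\s*(PASS|FAIL)\s*$", judge_output, flags=re.MULTILINE)
    return bool(matches) and matches[-1] == "PASS"
```

Treating an unparseable judge reply as a failure keeps a flaky evaluator from quietly inflating the pass rate.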

Why This Matters

This demo illustrates what's possible when you manage your prompts outside your codebase:

- Instant iteration: Update prompts in production without deploying code

- Version control: Roll back to previous versions if something breaks

- Observability: See every request, response, and token count in real time

- Testing: Run evals to ensure quality before and after changes

Want to try building something similar? Sign up for free at https://promptlayer.com/