Orchestrating Agents at Scale (OpenAI DevDay Talk)

Building dynamic UIs for complex agentic workflows used to take months of custom engineering; with the right tooling it can now take minutes. At OpenAI's DevDay 2025, the company unveiled AgentKit, a toolkit for building, deploying, and optimizing AI agents. It is more than an incremental update: companies like Canva and HubSpot are reportedly already seeing significant efficiency gains, which points to a real shift in how AI agents are developed.

Orchestrating Agents at Scale was one of my favorite talks. Below are my main takeaways.

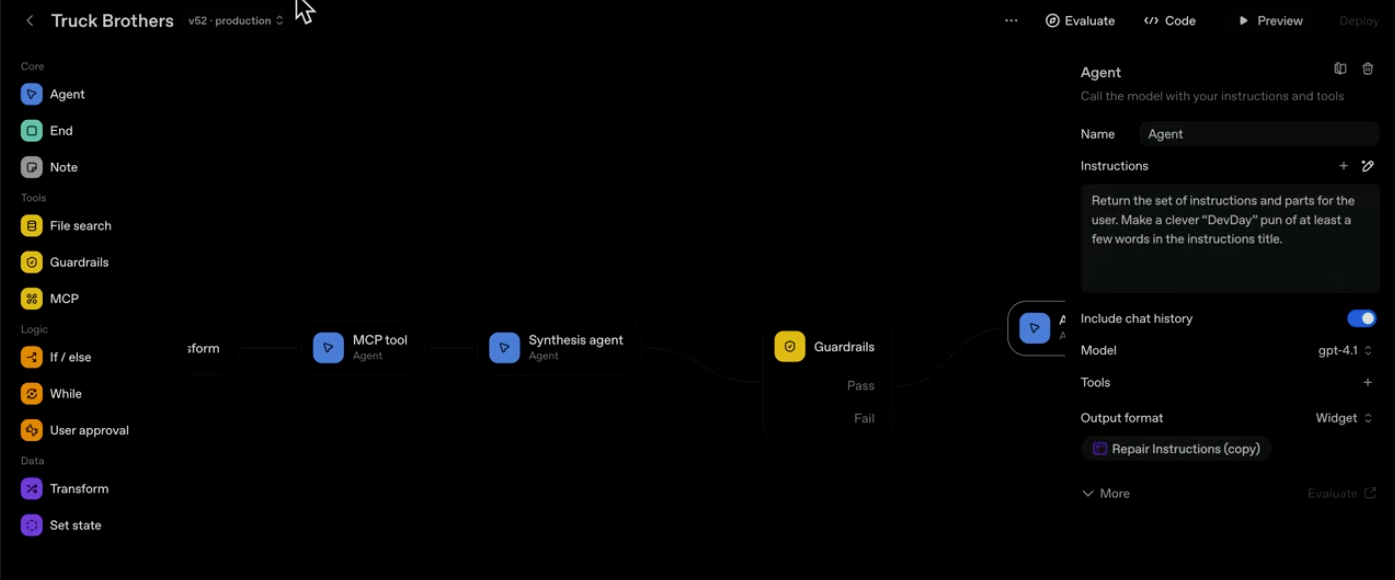

AgentKit: The Complete Stack for AI Agents

AgentKit represents OpenAI's answer to the fragmented landscape of AI agent development. At its core is the visual Agent Builder with a drag-and-drop interface that makes constructing complex workflows as intuitive as drawing a flowchart.

The system revolves around four node types:

- Core nodes handle fundamental agent operations

- Tools nodes integrate external services and APIs

- Logic nodes enable branching and conditional flows

- Data nodes manage information processing and transformation

During the DevDay demonstration, OpenAI showcased a real-world example: a semi-truck maintenance workflow handling thousands of daily inquiries. The demo started with a simple query about low fuel economy and orchestrated a complete diagnostic workflow: searching maintenance manuals, identifying relevant procedures, finding required parts, and returning formatted instructions. What's remarkable isn't just the functionality but the speed: from concept to production deployment in minutes rather than months.

The Agent Builder allows developers to visually connect nodes, test workflows in real time, and deploy directly to production with a single click. Instead of hand-writing orchestration code and debugging distributed systems, developers let the visual interface handle most of the underlying complexity.
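To make the node model concrete, here is a minimal sketch in plain Python of a workflow graph that uses the four node categories. It is not the AgentKit API: the `Node` class, `run_workflow`, and the canned maintenance steps are hypothetical names and data invented for illustration, loosely following the fuel-economy query from the demo.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

# Hypothetical categories mirroring the four node groups named in the talk.
NODE_KINDS = {"core", "tool", "logic", "data"}


@dataclass
class Node:
    name: str
    kind: str
    run: Callable[[dict], dict]                    # transforms the workflow state
    next_node: Optional[str] = None                # fixed successor, if any
    route: Optional[Callable[[dict], str]] = None  # logic nodes choose a successor

    def __post_init__(self) -> None:
        if self.kind not in NODE_KINDS:
            raise ValueError(f"unknown node kind: {self.kind}")


def run_workflow(nodes: Dict[str, Node], start: str, state: dict,
                 max_steps: int = 50) -> dict:
    """Walk the graph from `start`, recording the order in which nodes ran."""
    path: List[str] = []
    current: Optional[str] = start
    while current is not None:
        if len(path) >= max_steps:
            raise RuntimeError("workflow exceeded max_steps")
        node = nodes[current]
        state = node.run(state)
        path.append(node.name)
        current = node.route(state) if node.route else node.next_node
    return {**state, "path": path}


# Illustrative wiring; the manual lookup returns canned data, not a real search.
nodes = {
    "classify": Node(
        "classify", "core",
        lambda s: {**s, "topic": "fuel" if "fuel" in s["query"].lower() else "other"},
        next_node="branch"),
    "branch": Node(
        "branch", "logic", lambda s: s,
        route=lambda s: "search_manual" if s["topic"] == "fuel" else "fallback"),
    "search_manual": Node(
        "search_manual", "tool",
        lambda s: {**s, "steps": ["Check tire pressure", "Inspect air filter"]},
        next_node="format"),
    "fallback": Node(
        "fallback", "core", lambda s: {**s, "steps": []}, next_node="format"),
    "format": Node(
        "format", "data",
        lambda s: {**s, "answer": "\n".join(
            f"{i}. {step}" for i, step in enumerate(s["steps"], 1))}),
}

result = run_workflow(nodes, "classify", {"query": "Why is my fuel economy low?"})
print(result["path"])
print(result["answer"])
```

The logic node is the only one that inspects state to pick its successor; every other node has a fixed next step, which is roughly what drawing an arrow between two boxes in a visual builder expresses.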

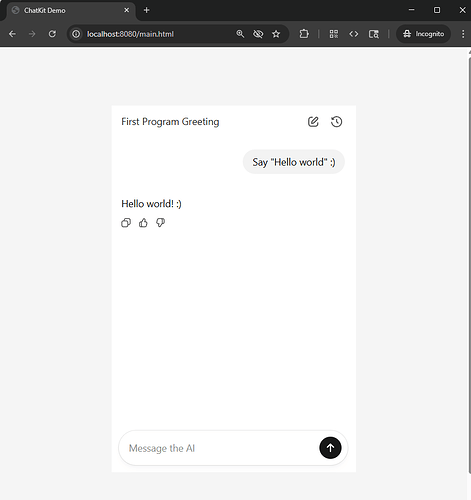

ChatKit: Solving the UI Problem

One of the most underappreciated challenges in building AI agents is creating the user interface. ChatKit emerges as OpenAI's solution to this universal pain point, providing pre-built, embeddable chat components that "just work."

OpenAI isn't just talking the talk—their own help center already runs on ChatKit, proving its production readiness. The framework includes:

- Ready-to-use chat components that handle streaming, reasoning displays, and dynamic content

- Widget Studio for creating custom visual outputs without frontend expertise

- Seamless integration with existing web and mobile applications

- Full customization while maintaining consistency with OpenAI's design patterns

The DevDay demo illustrated this powerfully. When the presenter wanted to enhance the maintenance workflow's output format, they simply created a custom widget displaying instructions and parts lists in a more digestible format. Upload the widget, click publish, refresh the page—and the new UI is live. This eliminates what traditionally requires months of UI development work.

Self-Hosting with the OpenAI Agents SDK

While cloud deployment offers convenience, many enterprises require self-hosted solutions for compliance or data sovereignty reasons. The OpenAI Agents SDK bridges this gap elegantly.

With a single button click in the Agent Builder, developers can export workflows directly to JavaScript or Python code. This isn't just a basic code export—it's production-ready code using the open-source OpenAI Agents SDK that maintains feature parity with the hosted version.

The SDK includes:

- Full streaming support for real-time interactions

- Reasoning trace visibility for debugging

- Widget rendering capabilities for custom UIs

- MCP (Model Context Protocol) integration for accessing private data sources

During the demo, the presenter seamlessly switched from OpenAI's hosted infrastructure to a local deployment. They connected to a local MCP server accessing private data, changed a few configuration lines, and the entire workflow ran identically on their own infrastructure. This flexibility means teams can prototype in the cloud and deploy on-premise without rewriting their entire system.

Production-Ready Monitoring and Optimization

Deploying agents is only the beginning. The real challenge comes when thousands or millions of users start interacting with your system. AgentKit includes sophisticated monitoring and optimization tools that turn what was once a debugging nightmare into a manageable process.

Automatic tracing captures every workflow execution, providing detailed insights into:

- Which agents ran and in what order

- Token usage and latency metrics

- Success and failure patterns

- Complete input/output logs
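As a rough illustration of what such a trace might contain, the sketch below models one workflow execution as a list of spans and computes the summary fields listed above. The `Span` fields and the `summarize` helper are assumptions made for this example, not AgentKit's actual trace schema, and the numbers are invented.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List


@dataclass
class Span:
    agent: str         # which agent or node ran
    tokens: int        # tokens consumed by this step
    latency_ms: float  # wall-clock time for this step
    ok: bool           # whether the step succeeded


def summarize(trace: List[Span]) -> dict:
    """Collapse one workflow execution into a few headline numbers."""
    if not trace:
        return {"order": [], "total_tokens": 0, "mean_latency_ms": 0.0, "failed": []}
    return {
        "order": [s.agent for s in trace],
        "total_tokens": sum(s.tokens for s in trace),
        "mean_latency_ms": mean(s.latency_ms for s in trace),
        "failed": [s.agent for s in trace if not s.ok],
    }


# Invented sample trace for a three-step workflow.
trace = [
    Span("classifier", tokens=120, latency_ms=300.0, ok=True),
    Span("manual_search", tokens=450, latency_ms=900.0, ok=True),
    Span("formatter", tokens=200, latency_ms=600.0, ok=False),
]
summary = summarize(trace)
print(summary)
```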

The platform's LLM-as-judge evaluation system can analyze thousands of traces automatically. Instead of manually reviewing logs, developers can set up graders that evaluate whether outputs are correct, properly formatted, or meet specific business criteria.

The Visual Evals interface takes this further by allowing optimization of individual agents within a workflow. During the demo, a formatting grader showed only a 40% success rate. After the presenter added clearer instructions to the prompt and clicked the "rerun" button, the success rate jumped to 80%, validated against real data rather than guesswork.
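The before-and-after comparison boils down to running the same grader over two batches of outputs and comparing pass rates. The sketch below uses a deterministic format check as a stand-in for an LLM-as-judge grader; the `format_grader` rule, the expected JSON shape, and the sample outputs are all invented for illustration and were chosen only so the rates echo the figures quoted from the demo.

```python
import json
from typing import Callable, Iterable


def format_grader(output: str) -> bool:
    """Pass if the output is a JSON object with non-empty 'steps' and a 'parts' list."""
    try:
        data = json.loads(output)
    except json.JSONDecodeError:
        return False
    return (
        isinstance(data, dict)
        and isinstance(data.get("steps"), list)
        and bool(data["steps"])
        and isinstance(data.get("parts"), list)
    )


def pass_rate(outputs: Iterable[str], grader: Callable[[str], bool]) -> float:
    """Fraction of outputs the grader accepts (0.0 for an empty batch)."""
    outputs = list(outputs)
    if not outputs:
        return 0.0
    return sum(grader(o) for o in outputs) / len(outputs)


# Invented outputs from a prompt with vague formatting instructions.
before = [
    '{"steps": ["Check tire pressure"], "parts": []}',
    "Check the tire pressure first.",
    '{"steps": []}',
    '{"steps": ["Replace air filter"], "parts": ["air filter"]}',
    '["Check tire pressure"]',
]
# Invented outputs after the prompt spells out the expected JSON shape.
after = [
    '{"steps": ["Check tire pressure"], "parts": []}',
    '{"steps": ["Inspect injectors"], "parts": ["injector seal"]}',
    '{"steps": ["Replace air filter"], "parts": ["air filter"]}',
    '{"steps": ["Check wheel alignment"], "parts": []}',
    "Sorry, I could not find that procedure.",
]

print(f"before: {pass_rate(before, format_grader):.0%}")
print(f"after:  {pass_rate(after, format_grader):.0%}")
```

A model-based grader would replace `format_grader` with a call that asks a model to judge each output, but the surrounding bookkeeping stays the same.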

Perhaps most impressively, the one-click optimization button uses all available context—prompts, ground truth data, grader results, and failure reasoning—to automatically improve agent performance. This transforms optimization from an art to a science, with measurable improvements backed by data.

The New Development Paradigm

AgentKit introduces several paradigm shifts in how we build AI applications:

Guardrails for safety are now first-class citizens, not afterthoughts. Developers can add hallucination prevention, input validation, and output screening as simple nodes in their workflow. The demo showed a guardrail verifying that maintenance instructions were grounded in actual manual data—critical for high-stakes applications.
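One way to picture a grounding guardrail is as a filter that only lets an instruction through when it is supported by retrieved manual text. The sketch below uses simple word overlap as the support test, which is a deliberately crude stand-in: the talk did not describe how the real guardrail decides, and the function names, threshold, and manual passages here are hypothetical.

```python
import re
from typing import List, Set, Tuple


def _tokens(text: str) -> Set[str]:
    """Lowercased alphanumeric word set."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def is_grounded(instruction: str, passages: List[str], threshold: float = 0.6) -> bool:
    """True if enough of the instruction's words appear in a single passage."""
    words = _tokens(instruction)
    if not words:
        return False
    best = max((len(words & _tokens(p)) / len(words) for p in passages), default=0.0)
    return best >= threshold


def apply_guardrail(instructions: List[str],
                    passages: List[str]) -> Tuple[List[str], List[str]]:
    """Split instructions into (kept, blocked) based on grounding."""
    kept, blocked = [], []
    for item in instructions:
        (kept if is_grounded(item, passages) else blocked).append(item)
    return kept, blocked


# Invented manual excerpts and candidate instructions.
manual = [
    "Check tire pressure weekly and inflate to the rated PSI.",
    "Replace the air filter every 30,000 miles.",
]
candidates = [
    "Check tire pressure and inflate to rated PSI",
    "Add fuel additive to the coolant tank",
]
kept, blocked = apply_guardrail(candidates, manual)
print("kept:", kept)
print("blocked:", blocked)
```

Word overlap will miss paraphrases and can be fooled by shared filler words, so a production guardrail would more plausibly rely on a model-based check; the point here is only the shape of the node: input in, pass or block out.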

The Connector Registry centralizes integration management, making it simple to connect agents to existing systems. Whether it's GraphQL APIs, databases, or custom tools, integrations become reusable components rather than one-off implementations.
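The idea of integrations as reusable, centrally registered components can be sketched with a small name-to-callable registry. This is an illustration of the pattern only; the `ConnectorRegistry` class below and its methods are hypothetical and do not reflect how OpenAI's Connector Registry is implemented or configured.

```python
from typing import Any, Callable, Dict, List


class ConnectorRegistry:
    """Hypothetical in-process registry mapping connector names to callables."""

    def __init__(self) -> None:
        self._connectors: Dict[str, Callable[..., Any]] = {}

    def register(self, name: str, fn: Callable[..., Any]) -> None:
        # Refuse silent overwrites so two teams cannot clobber each other's connector.
        if name in self._connectors:
            raise ValueError(f"connector already registered: {name}")
        self._connectors[name] = fn

    def call(self, name: str, **kwargs: Any) -> Any:
        if name not in self._connectors:
            raise KeyError(f"unknown connector: {name}")
        return self._connectors[name](**kwargs)

    def names(self) -> List[str]:
        return sorted(self._connectors)


# Stand-in connectors; real ones would wrap an API client or database query.
def parts_lookup(part: str) -> dict:
    inventory = {"air filter": 12, "injector seal": 0}
    return {"part": part, "in_stock": inventory.get(part, 0) > 0}


def manual_search(query: str) -> list:
    pages = {"fuel economy": ["Check tire pressure", "Inspect air filter"]}
    return pages.get(query, [])


registry = ConnectorRegistry()
registry.register("parts_lookup", parts_lookup)
registry.register("manual_search", manual_search)

print(registry.names())
print(registry.call("parts_lookup", part="air filter"))
```

Any agent that holds a reference to the registry can call `parts_lookup` by name, which is the sense in which an integration becomes a shared component instead of a one-off.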

Most significantly, development velocity has fundamentally changed. Work that used to take months (building UIs, implementing orchestration, adding monitoring, creating evaluation frameworks) now takes hours. The demo showed this wasn't hyperbole: adding new functionality, deploying updates, and optimizing performance all happened live on stage.

Real metrics replace "vibes-based" development. Instead of hoping changes improve the system, developers see concrete performance numbers. Did the new prompt improve accuracy? Did the UI change reduce user confusion? The data tells the story immediately.

Conclusion

AgentKit represents the next evolution beyond GPTs: a complete reimagining of how AI agents are built, deployed, and optimized. The visual builder makes agent creation accessible to non-engineers while providing the depth that developers need. ChatKit addresses the perennial UI challenge with embeddable components that work out of the box. The SDK reduces lock-in to the hosted platform by letting teams move the same workflow between cloud and self-hosted deployments.

Most importantly, the barrier to building sophisticated AI agents just collapsed. From visual design to production deployment, from monitoring to optimization, every piece of the puzzle now fits together in a cohesive platform. As companies like Canva and HubSpot are already demonstrating, this isn't just about making development easier—it's about enabling entirely new classes of AI applications that were previously too complex or expensive to build.