Moltbot Review (formerly Clawdbot)

The idea of a proactive digital assistant has floated around tech circles for years. We’ve watched Siri handle timers and weather queries since 2011, and we’ve chatted with GPT-based tools that forget us the moment we close the tab. At PromptLayer, where we spend a lot of time thinking about memory, long-running context, and what it actually means to operate AI systems in the real world, persistent memory has always felt like the missing piece.

But something shifted in January 2026 when Peter Steinberger, founder of PSPDFKit, released Moltbot (formerly Clawdbot) as an open-source project. Within days it racked up over 9,000 GitHub stars. Within weeks, reports surfaced of Mac Mini stock shortages as enthusiasts snapped up hardware to run their own instances. Moltbot is an autonomous agent that lives on your machine, remembers your context indefinitely, and messages you first when it has something useful to share.

Why waiting for a prompt feels outdated

Traditional assistants operate in a reactive loop. You ask, they answer, the conversation ends. Moltbot flips that model. Configure a morning briefing and it will summarize your inbox, list today's calendar events, and flag deadlines before you've finished your coffee. It can watch a product page and ping you when the price drops, or monitor your CI pipeline and send a message the moment a build fails.
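To make the reactive-versus-proactive distinction concrete, here is a minimal sketch of a price watcher. It is not Moltbot's actual code or API: the `Watch` class, the `price_dropped` rule, and the simulated price feed are all hypothetical names we made up for illustration. The point is only that the agent polls on its own and speaks up when a condition holds.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Watch:
    """Hypothetical proactive rule: poll a value, notify when a condition holds."""
    name: str
    read: Callable[[], float]  # fetches the current value (a price, for example)
    should_notify: Callable[[Optional[float], float], bool]
    last: Optional[float] = None

    def tick(self) -> Optional[str]:
        """Run one poll; return a message to send, or None to stay quiet."""
        current = self.read()
        message = None
        if self.should_notify(self.last, current):
            message = f"{self.name}: {self.last} -> {current}"
        self.last = current
        return message


def price_dropped(previous: Optional[float], current: float) -> bool:
    # Stay quiet on the first observation; alert only on a strict decrease.
    return previous is not None and current < previous


# Simulated price feed standing in for a real page check (assumption).
prices = iter([100.0, 100.0, 89.5, 95.0])
watch = Watch("desk-lamp price", read=lambda: next(prices), should_notify=price_dropped)
messages = [watch.tick() for _ in range(4)]
```

Only the third poll produces a message, because that is the only time the price falls below the previous reading. A CI monitor would follow the same shape with a different `read` and `should_notify`.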

This proactive stance echoes what people imagined when they first saw Jarvis in the movies - an assistant that anticipates needs rather than waiting to be summoned. The difference now is that the underlying language models have become capable enough to reason about context, and Moltbot provides the scaffolding to let that reasoning persist across days, weeks, and months.

Two components that make autonomy possible

Moltbot's architecture splits into an AI agent and a gateway. The agent is the brain: a long-running process on your local machine that interfaces with whichever large language model you prefer. It can call out to Anthropic's Claude, OpenAI's GPT family, or Google's Gemini, or run entirely offline using local models through Ollama. This model-agnostic design means you can balance cost, latency, and privacy however you like.
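One way to picture a model-agnostic agent is a small interface that every provider adapter satisfies. The sketch below is our own illustration, not Moltbot's implementation: `ChatModel`, `EchoModel`, `Agent`, and the `private` flag are hypothetical, and `EchoModel` stands in for a real adapter that would call a provider's SDK.

```python
from typing import Protocol


class ChatModel(Protocol):
    """Anything with a complete() method can serve as the agent's model."""
    def complete(self, prompt: str) -> str: ...


class EchoModel:
    """Stand-in adapter; a real one would call a hosted or local model."""
    def __init__(self, label: str) -> None:
        self.label = label

    def complete(self, prompt: str) -> str:
        return f"[{self.label}] {prompt}"


class Agent:
    def __init__(self, models: dict[str, ChatModel], default: str) -> None:
        self.models = models
        self.default = default

    def ask(self, prompt: str, private: bool = False) -> str:
        # Route sensitive prompts to the local model when one is configured.
        name = "local" if private and "local" in self.models else self.default
        return self.models[name].complete(prompt)


agent = Agent(
    {"hosted": EchoModel("hosted"), "local": EchoModel("local")},
    default="hosted",
)
everyday = agent.ask("Summarize my inbox")
sensitive = agent.ask("Summarize my bank statement", private=True)
```

Because the agent depends only on the interface, swapping providers is a configuration change rather than a rewrite, which is where the cost, latency, and privacy trade-off becomes something you can tune.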

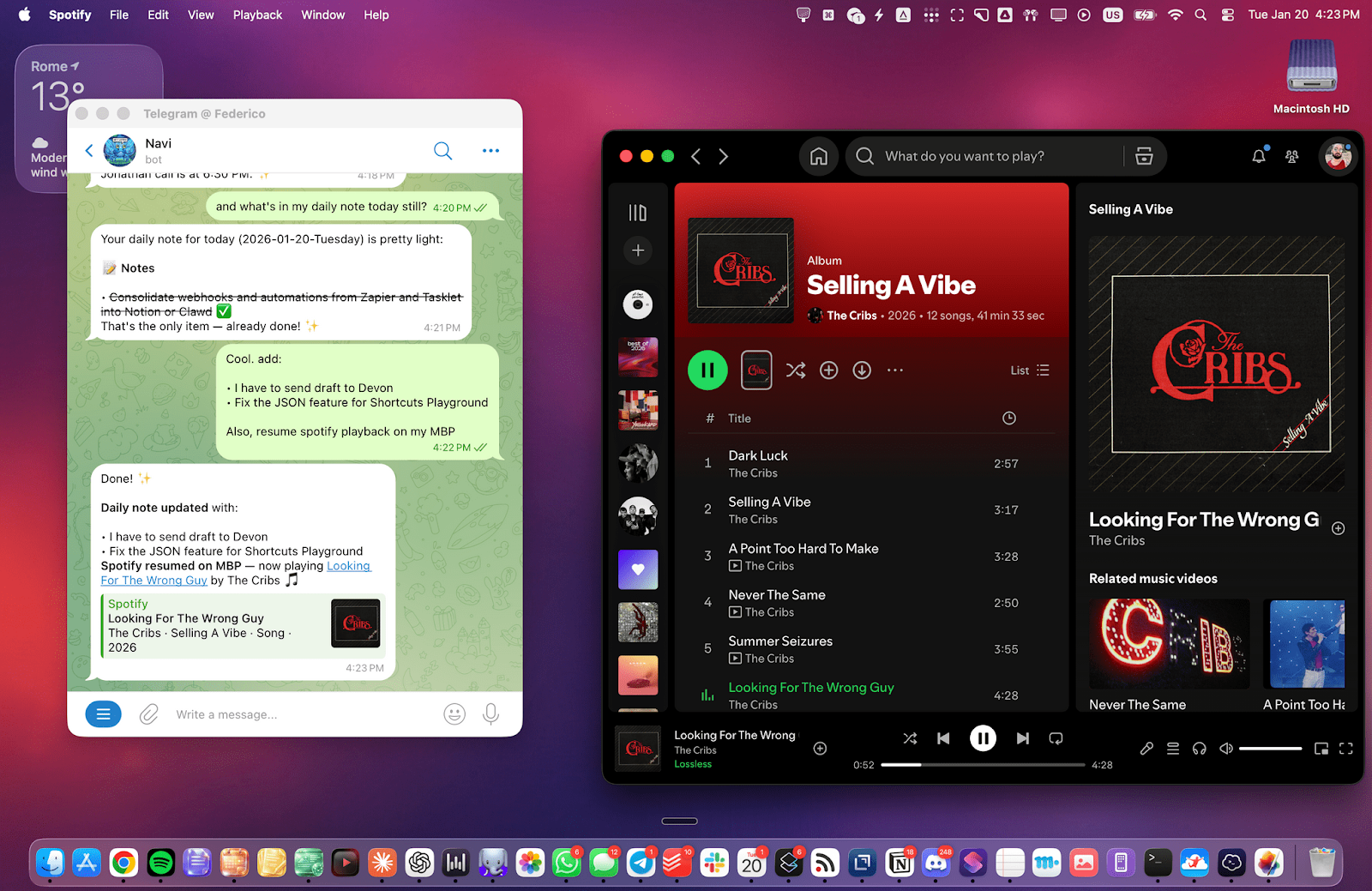

The gateway handles messaging. Rather than forcing you into a proprietary app, Moltbot plugs into the platforms you already use - iMessage, WhatsApp, Telegram, Signal, Discord, Slack. Chatting with your assistant feels no different from texting a colleague. The gateway also supports threading and group chats, so a family or small team can share a single Moltbot instance without stepping on each other's requests.
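As a rough illustration of how a shared instance could keep requests separate, the sketch below keys conversation history by platform, chat, and sender. This is an assumption about one possible design, not a description of Moltbot's gateway; `Incoming` and `Gateway` are hypothetical names.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Incoming:
    platform: str  # e.g. "telegram" or "slack"
    chat_id: str   # group or direct-message identifier
    sender: str
    text: str


class Gateway:
    def __init__(self) -> None:
        # One history per (platform, chat, sender), so users sharing a
        # group chat do not overwrite each other's context.
        self.histories: dict[tuple[str, str, str], list[str]] = defaultdict(list)

    def receive(self, msg: Incoming) -> list[str]:
        """Record the message and return that sender's history so far."""
        key = (msg.platform, msg.chat_id, msg.sender)
        self.histories[key].append(msg.text)
        return self.histories[key]


gateway = Gateway()
gateway.receive(Incoming("telegram", "family", "ana", "Add milk to the list"))
gateway.receive(Incoming("telegram", "family", "ben", "When is the dentist?"))
ana_history = gateway.receive(Incoming("telegram", "family", "ana", "Also eggs"))
```

Here `ana_history` contains only Ana's two messages, even though Ben wrote in the same group chat between them.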

Everything lives locally in plain files. Conversations, memories, configuration, and skills sit in a directory structure you can open in any text editor. Think of it like an Obsidian vault for your AI. Because state persists on disk, Moltbot can recall a detail you mentioned casually two weeks ago - a dramatic improvement over context-window-limited cloud chatbots.
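The plain-file idea is easy to sketch. The example below appends notes to one Markdown journal per day and recalls them with a keyword search. The directory layout, file naming, and the `remember` and `recall` helpers are our assumptions for illustration; Moltbot's real on-disk format may differ.

```python
import tempfile
from datetime import date
from pathlib import Path


def remember(root: Path, day: date, note: str) -> Path:
    """Append a note to that day's Markdown journal, creating it if needed."""
    journal = root / "memory" / f"{day.isoformat()}.md"
    journal.parent.mkdir(parents=True, exist_ok=True)
    with journal.open("a", encoding="utf-8") as fh:
        fh.write(f"- {note}\n")
    return journal


def recall(root: Path, keyword: str) -> list[tuple[str, str]]:
    """Return (date, note) pairs that mention the keyword, oldest first."""
    hits = []
    # ISO dates sort chronologically, so sorting file names is enough.
    for journal in sorted((root / "memory").glob("*.md")):
        for line in journal.read_text(encoding="utf-8").splitlines():
            if keyword.lower() in line.lower():
                hits.append((journal.stem, line.removeprefix("- ")))
    return hits


# A temporary directory stands in for the assistant's data folder.
root = Path(tempfile.mkdtemp())
remember(root, date(2026, 1, 5), "Prefers oolong over green tea")
remember(root, date(2026, 1, 19), "Dentist appointment moved to Friday")
found = recall(root, "oolong")
```

Since the journals are ordinary Markdown, you can read, edit, or delete a memory in any text editor. A real agent would likely use something smarter than substring matching to decide which notes to load into the model's context.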

What Moltbot can actually do

The feature list reads like a response to years of complaints about assistants:

- Proactive alerts and scheduled briefings via a built-in cron system

- Full system access including file read/write, shell commands, and headless browser control

- Long-term memory stored as Markdown journals the AI references automatically

- Extensibility through community skills and the Model Context Protocol (MCP)
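The scheduled-briefing item in the list above comes down to deciding when a job is due. Here is a minimal sketch of a once-a-day job, assuming a loop elsewhere calls `maybe_run` every so often. It does not reproduce Moltbot's cron system or its configuration syntax; `DailyJob` and the canned briefing text are hypothetical.

```python
from dataclasses import dataclass
from datetime import date, datetime, time
from typing import Callable, Optional


@dataclass
class DailyJob:
    name: str
    at: time                 # earliest time of day the job may fire
    run: Callable[[], str]   # produces the message to send
    last_run: Optional[date] = None

    def due(self, now: datetime) -> bool:
        # Due once per calendar day, at or after the scheduled time.
        return now.time() >= self.at and self.last_run != now.date()

    def maybe_run(self, now: datetime) -> Optional[str]:
        if not self.due(now):
            return None
        self.last_run = now.date()
        return self.run()


briefing = DailyJob(
    "morning briefing", time(7, 30), lambda: "3 meetings, 1 deadline today"
)
outputs = [
    briefing.maybe_run(datetime(2026, 2, 2, 7, 0)),   # too early
    briefing.maybe_run(datetime(2026, 2, 2, 7, 30)),  # fires
    briefing.maybe_run(datetime(2026, 2, 2, 9, 0)),   # already ran today
    briefing.maybe_run(datetime(2026, 2, 3, 8, 0)),   # fires again the next day
]
```

Tracking `last_run` keeps the briefing from repeating if the loop checks more than once after 7:30, and it still fires if the machine was asleep at the exact scheduled minute.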

Real-world examples illustrate the range. Developers have Moltbot run test suites and report failures, update npm dependencies, and open pull requests on their behalf. Home automation fans control Hue lights, Sonos speakers, and thermostats through natural language. One user configured Moltbot to manage a family tea-selling business - scheduling follow-ups, tracking inventory, and responding to customer inquiries with shipping info.

For teams experimenting with LLM-powered workflows, tools like PromptLayer can help track prompt iterations and model calls, which pairs nicely with Moltbot's model-agnostic design when you want visibility into what the agent is actually sending to each provider.

Community momentum is shaping the roadmap

The project's growth has been community-driven from the start. Over 160 pull requests landed in the first month. A public repository called Clawdhub collects community-contributed skills ranging from a GPT-4 coding helper to a Roomba vacuum controller. Users share configuration recipes in forums and Discord, swapping tips on everything from optimizing briefings to sandboxing untrusted plugins.

This grassroots energy has led to unexpected phenomena. Some power users run multiple Moltbot instances for different roles. One developer spun up 12 Mac Minis as an experiment in scaling personal agents. The enthusiasm reflects a genuine hunger for assistants that act rather than merely chat.

Your assistant has a pulse

Moltbot turns AI from a chat session into an always-on system. It can monitor, remember, and initiate - surfacing what matters without waiting for you to prompt it.

Because it keeps long-term context and has real tool access (files, shell, browser), it can move from suggestions to execution within the limits you set. Combined with local, plain-file state, that makes it less of a black box and more like infrastructure you can operate and control.