LLMs for Product Content Generation

The daily grind of crafting compelling product descriptions has long been the bane of e-commerce teams. Picture this: thousands of SKUs sitting in your catalog with thin, inconsistent descriptions that fail to capture search traffic or convert browsers into buyers. Meanwhile, your competitors are pumping out rich, SEO-optimized content that dominates search results. Sound familiar? This was the reality for most online retailers until Large Language Models entered the scene.

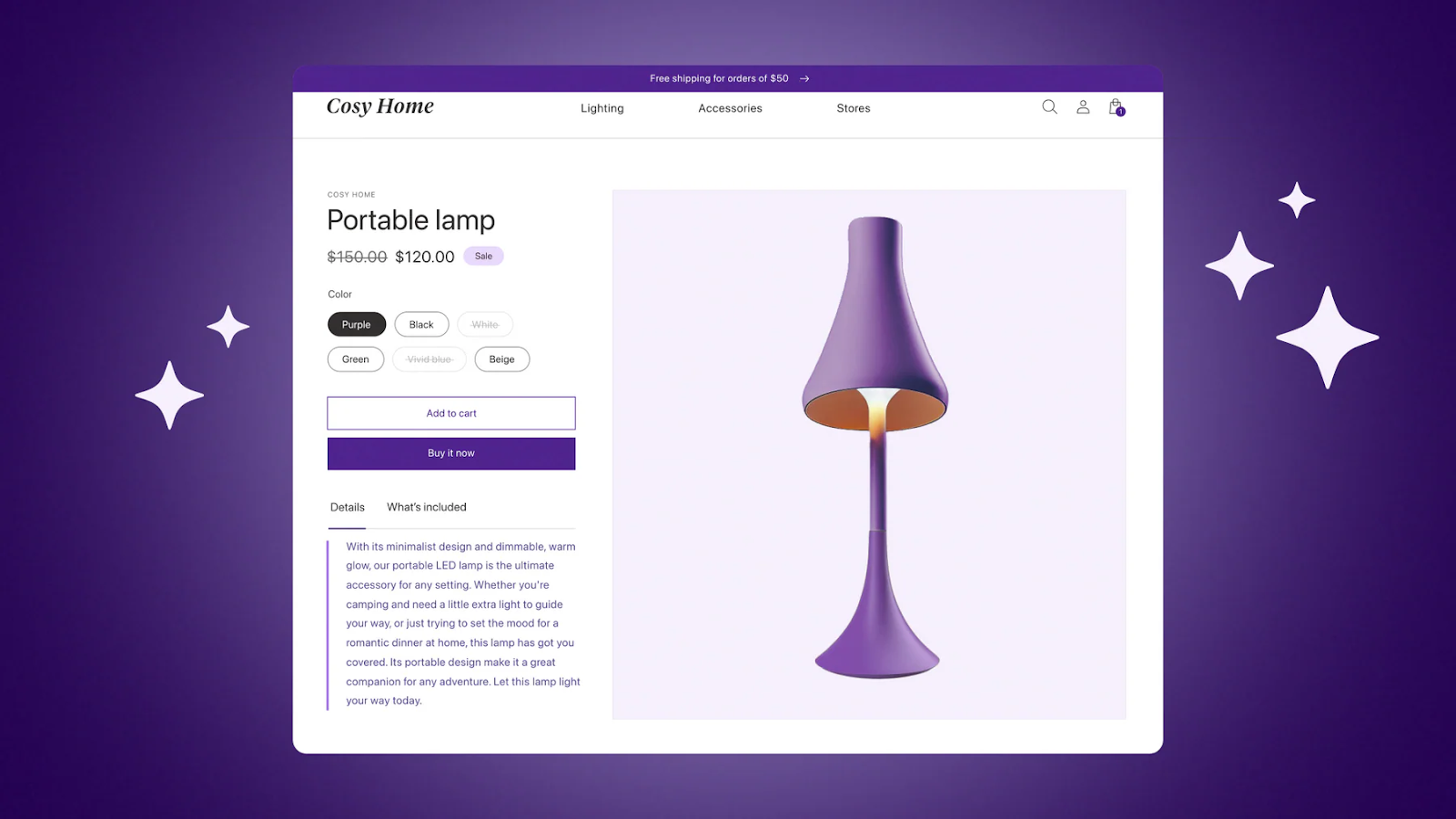

Today, LLMs are fundamentally changing how businesses approach product content. These AI systems don't just automate writing - they transform it into a strategic advantage. By analyzing product data and generating human-quality descriptions at scale, LLMs are helping companies boost their search rankings, improve conversion rates, and deliver personalized experiences to millions of customers simultaneously. The shift isn't just about efficiency; it's about competing in an e-commerce landscape where content quality directly impacts your bottom line.

Where teams see real gains

The numbers tell a compelling story. When Hexaware Technologies deployed an LLM-powered content generation system for a major retailer, the reported results exceeded expectations: a 25% increase in search engine results page (SERP) rankings and a 20% jump in conversion rates for products with AI-enhanced descriptions. Figures like these come from a single deployment, so your own results will depend on your catalog, your categories, and the quality of the content you start from.

What once required teams of copywriters working for weeks can now be accomplished in hours. An LLM can ingest basic product specifications and generate thousands of unique, detailed descriptions faster than a human could write a dozen. This speed doesn't come at the expense of quality either. Unlike human writers who may produce varying styles and inconsistent quality, a well-configured LLM maintains uniform tone and depth across your entire catalog.

Consider the ripple effects of this transformation. Better product descriptions lead to improved organic search visibility, which drives more qualified traffic to your site. Once visitors arrive, they encounter comprehensive, engaging content that answers their questions and builds confidence in the purchase. The result? Higher conversion rates, fewer returns, and stronger customer satisfaction.

Amazon recognized this potential early, rolling out generative AI tools that allow sellers to create complete product listings from just a few keywords. Early adopters report that they're using the AI-generated content with minimal edits, dramatically reducing the time from product sourcing to live listing. This democratization of quality content creation levels the playing field, allowing smaller sellers to compete with larger brands on content quality.

Setting up an LLM pipeline

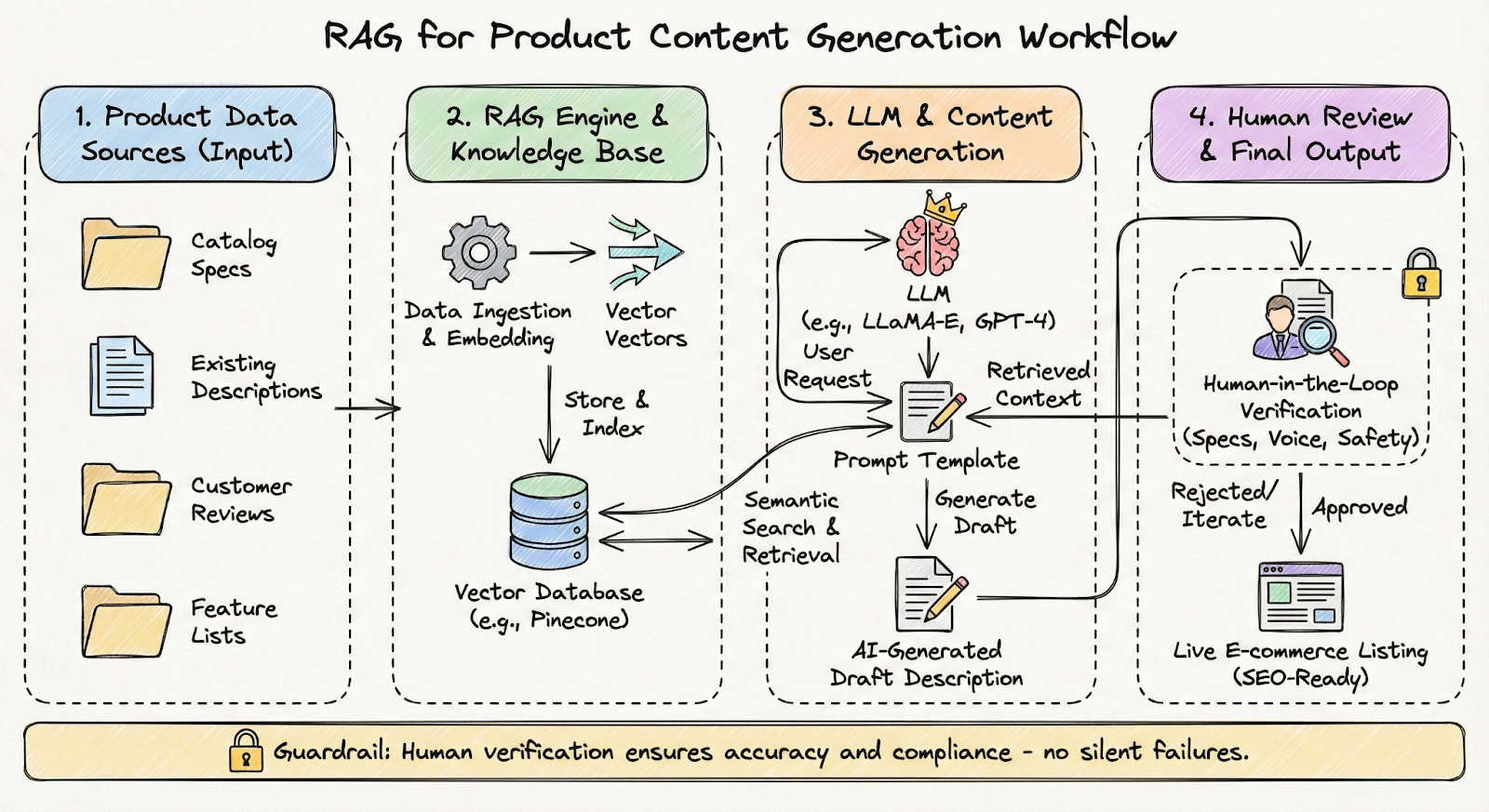

Effective LLM-powered content generation depends on the technical stack behind it. At their core, many production systems rely on Retrieval-Augmented Generation (RAG) paired with a vector database. This combination addresses one of the biggest challenges in AI content generation: keeping the output factually accurate.

Here's how it works in practice. Your product data - specifications, features, existing descriptions, customer reviews - gets converted into mathematical representations called embeddings and stored in a vector database like Pinecone. When the LLM needs to generate a description, it first queries this database to retrieve relevant information. This grounding in real data dramatically reduces the risk of the AI inventing features or making incorrect claims about your products.
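To make the retrieval step concrete, here is a minimal sketch in plain Python. It is not how Pinecone or any particular vector database works internally: the `embed` function is a toy word-count stand-in for a real embedding model, `InMemoryIndex` is a hypothetical in-memory substitute for a hosted index, and the product records are invented for illustration. The point is only the shape of the flow: store facts, retrieve the closest ones, and build a prompt that restricts the model to them.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: lowercase token counts (a real system would call an embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class InMemoryIndex:
    """Hypothetical stand-in for a vector database index."""

    def __init__(self):
        self._items = []  # list of (doc_id, text, vector)

    def upsert(self, doc_id: str, text: str) -> None:
        self._items.append((doc_id, text, embed(text)))

    def query(self, text: str, top_k: int = 2):
        q = embed(text)
        ranked = sorted(self._items, key=lambda item: cosine(q, item[2]), reverse=True)
        return [(doc_id, doc_text) for doc_id, doc_text, _ in ranked[:top_k]]


def build_grounded_prompt(index: InMemoryIndex, product_name: str, top_k: int = 2) -> str:
    """Retrieve the closest facts and tell the model to use nothing else."""
    facts = index.query(product_name, top_k=top_k)
    fact_lines = "\n".join(f"- [{doc_id}] {text}" for doc_id, text in facts)
    return (
        f"Write a product description for: {product_name}\n"
        "Use ONLY the facts listed below. Do not add features that are not listed.\n"
        f"Facts:\n{fact_lines}"
    )


# Invented example records
index = InMemoryIndex()
index.upsert("spec-1", "Trail tent, 2 person, weight 1.8 kg, waterproof rating 3000 mm")
index.upsert("spec-2", "Espresso machine, 15 bar pump, 1.2 litre water tank")
index.upsert("review-1", "The trail tent pitched in five minutes and stayed dry in rain")

prompt = build_grounded_prompt(index, "2 person trail tent")
print(prompt)
```

The resulting prompt would then be sent to whichever LLM you use. Carrying the source IDs (`spec-1`, `review-1`) into the prompt also makes it easier to trace a claim in the output back to the record it came from.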

The choice of LLM matters too. General-purpose models like GPT-4 can handle product descriptions, but domain-specific models like LLaMA-E, which is tuned for e-commerce authoring tasks, have been reported to do better in that setting. Such specialized models are built around the nuances of product content - from technical specifications to marketing language - with the aim of producing more relevant, conversion-focused copy.

Emerging multimodal models like NExT-GPT push the boundaries even further. These systems can generate not just text but also suggest product images, create video scripts, or even produce audio descriptions. Imagine uploading a product photo and receiving not just a written description but also alt text for accessibility, social media captions, and ideas for lifestyle imagery - all generated by a single AI system.

The integration of these technologies into existing e-commerce platforms is accelerating. Shopify Magic, for instance, brings AI-powered description generation directly into the merchant dashboard. Similar tools are appearing across major platforms, making advanced AI capabilities accessible to businesses of all sizes.

Using prompt management tooling

Success with LLM-powered content generation starts with solid foundations. Before touching any AI tools, audit your product data. Clean, complete specifications and attributes are the single biggest factor in generating accurate descriptions. Fix missing fields, standardize formats, and ensure your data accurately reflects your current inventory.
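A data audit of this kind can start very small. The sketch below assumes a hypothetical schema (`sku`, `title`, `brand`, `weight`) and a single format to standardize (weight strings converted to kilograms); your own required fields and units will differ.

```python
import re
from typing import List, Optional

# Hypothetical schema: adjust to the attributes your catalog actually requires
REQUIRED_FIELDS = ("sku", "title", "brand", "weight")

# Conversion factors to kilograms for the units this sketch understands
_TO_KG = {"kg": 1.0, "g": 0.001, "lb": 0.45359237}


def audit_record(record: dict) -> List[str]:
    """Return a list of issues such as 'missing:weight' for one product record."""
    issues = []
    for field in REQUIRED_FIELDS:
        value = record.get(field)
        if value is None or str(value).strip() == "":
            issues.append(f"missing:{field}")
    return issues


def normalize_weight(raw: str) -> Optional[float]:
    """Convert strings like '1.8 kg', '1800g' or '4 lb' to kilograms; None if unparseable."""
    match = re.fullmatch(r"\s*([0-9]+(?:\.[0-9]+)?)\s*(kg|g|lb)\s*", raw.lower())
    if not match:
        return None
    value, unit = float(match.group(1)), match.group(2)
    return round(value * _TO_KG[unit], 3)


# Invented example records
catalog = [
    {"sku": "A1", "title": "Trail tent", "brand": "Acme", "weight": "1800g"},
    {"sku": "A2", "title": " ", "brand": "Acme"},
]
report = {rec["sku"]: audit_record(rec) for rec in catalog}
print(report)
```

Records with a non-empty issue list can be held back from generation until the data is fixed, which is cheaper than catching a wrong claim in review.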

Next, build your technical infrastructure. Start by creating a vector database index of your canonical product information - specifications, manufacturer descriptions, verified reviews. This becomes your source of truth that the LLM references when generating content. Tools like Pinecone offer production-ready solutions for this critical component.

Develop clear prompt templates and style guides. Your prompts should specify not just what information to include but how to present it. For instance: "Generate a 150-word product description that highlights the key benefits for outdoor enthusiasts, includes the three most important technical specifications, and uses an approachable but knowledgeable tone." Platforms like PromptLayer can help here: they let you manage, test, and optimize prompt templates at scale, which keeps quality under control as the number of templates grows.
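One way to keep a prompt like the one quoted above consistent across a catalog is to separate the style guide from the template. This sketch uses only Python's standard library; the `StyleGuide` fields, the `PROMPT_V1` name, and the example product are illustrative assumptions, not part of any prompt management tool.

```python
from dataclasses import dataclass
from string import Template
from typing import Sequence


@dataclass(frozen=True)
class StyleGuide:
    """Brand rules that stay fixed across products (values mirror the example prompt)."""
    word_count: int = 150
    audience: str = "outdoor enthusiasts"
    tone: str = "approachable but knowledgeable"
    spec_count: int = 3


# Versioned template: changing the wording means adding PROMPT_V2, not editing in place
PROMPT_V1 = Template(
    "Generate a ${word_count}-word product description for ${product_name} "
    "that highlights the key benefits for ${audience}, includes these "
    "${spec_count} technical specifications: ${specs}, and uses a tone that is ${tone}. "
    "Do not mention any specification that is not listed."
)


def render_prompt(product_name: str, specs: Sequence[str], guide: StyleGuide = StyleGuide()) -> str:
    """Fill the template, refusing to render if the product lacks enough specs."""
    if len(specs) < guide.spec_count:
        raise ValueError(f"need {guide.spec_count} specs, got {len(specs)}")
    chosen = "; ".join(specs[: guide.spec_count])
    # substitute() raises KeyError if a placeholder is left unfilled
    return PROMPT_V1.substitute(
        word_count=guide.word_count,
        product_name=product_name,
        audience=guide.audience,
        spec_count=guide.spec_count,
        specs=chosen,
        tone=guide.tone,
    )


# Invented example product; specs are assumed to be ordered by importance
rendered = render_prompt(
    "Ridgeline 2 tent",
    ["weight 1.8 kg", "3000 mm waterproof rating", "packs to 40 cm", "two doors"],
)
print(rendered)
```

Failing loudly on missing specs is a deliberate choice here: a prompt that asks for three specifications but supplies two invites the model to make up the third.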

Establish your human review workflow before generating content at scale. Define which elements require manual verification (safety claims, technical specs, pricing) versus what can be automatically approved. Build in logging that tracks the origin of each piece of content - which prompt generated it, what data it referenced, and who reviewed it.
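As a rough sketch of what such a workflow could look like in code, the example below flags generated text that touches safety claims, technical specs, or pricing, and writes a provenance record for each piece of content. The regular expressions are deliberately simplistic placeholders, and the field names (`prompt_id`, `source_ids`, `status`) are assumptions rather than a standard.

```python
import hashlib
import re
from datetime import datetime, timezone
from typing import List, Optional, Sequence

# Simplistic placeholder patterns; a real deployment would tune these per category
SENSITIVE_PATTERNS = {
    "safety_claim": re.compile(r"\b(safe|non-toxic|fireproof|certified)\b", re.I),
    "technical_spec": re.compile(r"\b\d+(\.\d+)?\s?(kg|g|mm|cm|w|v|mah)\b", re.I),
    "pricing": re.compile(r"[$€£]\s?\d|\b\d+% off\b", re.I),
}


def review_flags(text: str) -> List[str]:
    """Names of the sensitive categories that appear in the generated text."""
    return sorted(name for name, pattern in SENSITIVE_PATTERNS.items() if pattern.search(text))


def provenance_record(
    sku: str,
    text: str,
    prompt_id: str,
    source_ids: Sequence[str],
    reviewer: Optional[str] = None,
) -> dict:
    """Log which prompt and data produced the content, and who (if anyone) reviewed it."""
    flags = review_flags(text)
    if not flags:
        status = "auto_approved"
    elif reviewer:
        status = "reviewed"
    else:
        status = "needs_review"
    return {
        "sku": sku,
        "content_sha256": hashlib.sha256(text.encode("utf-8")).hexdigest(),
        "prompt_id": prompt_id,
        "source_ids": list(source_ids),
        "flags": flags,
        "status": status,
        "reviewer": reviewer,
        "created_at": datetime.now(timezone.utc).isoformat(),
    }


# Invented example content
flagged = provenance_record("A1", "Weighs 1.8 kg and is certified waterproof.", "PROMPT_V1", ["spec-1"])
clean = provenance_record("B7", "A cozy blanket for cool evenings.", "PROMPT_V1", ["spec-9"])
print(flagged["status"], flagged["flags"])
print(clean["status"], clean["flags"])
```

Storing a hash of the content rather than relying on the text alone makes it possible to tell later whether a published description still matches what was reviewed.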

Start with a pilot program. Choose a representative subset of products - perhaps 100-200 SKUs across different categories. Generate content for these products and run a controlled A/B test comparing AI-generated descriptions against your existing content. Measure key metrics: click-through rates from search results, add-to-cart rates, conversion rates, and any increase in returns or customer complaints.
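When the pilot data comes in, a simple way to check whether a difference in conversion rate is more than noise is a two-proportion z-test. The sketch below is one common choice, not the only valid analysis, and the visitor and conversion counts are made up for illustration.

```python
import math
from typing import Tuple


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> Tuple[float, float]:
    """Compare conversion rates of control (A) and variant (B).

    Returns (z, two_sided_p_value) using the pooled standard error.
    """
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("standard error is zero; rates are all 0% or all 100%")
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Made-up pilot numbers: existing descriptions (A) vs AI-generated descriptions (B)
z, p = two_proportion_z_test(conv_a=200, n_a=10_000, conv_b=260, n_b=10_000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

The same function works for click-through or add-to-cart rates. Return rates and complaints usually need a longer observation window, since they lag the purchase.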

Use the pilot results to refine your approach. Maybe certain product categories need more technical detail, or perhaps your prompts need adjustment to better match your brand voice. Iterate on your templates, expand your retrieval database, and gradually scale to more products as you build confidence in the system.

Throughout implementation, measurement is crucial. Set up proper analytics to track how AI-generated content performs compared to human-written descriptions. Monitor not just conversion metrics but also SEO performance, customer feedback, and return rates. This data guides continuous improvement and helps justify broader rollout.

How do we actually make this work?

LLMs don’t replace product content work - they compress it. The winners are the teams that treat AI as a production line: grounded in your catalog data (RAG + a source of truth), constrained by brand rules, and finished by humans who verify the facts that can’t be wrong.

If you want this to be more than a shiny demo, make it measurable. Pick 100–200 SKUs, generate drafts, run a four-week A/B test, and track what actually matters: organic CTR, add-to-cart, conversion, and any downstream fallout like returns or support tickets.

One last guardrail: never let the model freelance on specs or “testimonial-ish” language. Trust is hard to win back. Your competitors are already iterating. Start the loop - draft, verify, test, ship - then scale what proves itself.