Leading AI Visibility Optimization Platforms for LLM Observability

As organizations deploy LLMs at scale, a critical question emerges: How do you monitor a system that's probabilistic, opaque, and capable of generating millions of unpredictable responses? The answer lies in a new generation of AI observability platforms.

Traditional logging doesn’t cut it for LLMs: teams need visibility into prompts, responses, costs, and model decisions to catch hallucinations, drift, and compliance issues before they erode trust. Understanding the leading platforms that bring "DevOps-style" monitoring to AI systems, and what makes each uniquely valuable, has become essential for organizations serious about AI deployment.

Why LLM Observability Matters Now

The landscape of LLM monitoring has evolved dramatically from basic prompt logging in 2022-2023 to today's sophisticated end-to-end monitoring solutions. Early deployments revealed a critical gap: organizations lacked insight into why a model produced a given output or how it was performing over time. Initial solutions involved repurposing ML monitoring tools and rudimentary prompt logging, but these approaches proved inadequate for the unique challenges of generative AI.

Modern LLM observability platforms now provide essential capabilities, including:

- Tracing workflows through complex multi-step AI agent interactions

- Tracking costs and latency to identify efficiency bottlenecks and manage expenses

- Detecting quality issues like hallucinations, factual errors, and toxic content

- Managing prompts with version control and A/B testing capabilities

- Ensuring compliance with audit trails and decision transparency
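To make cost and latency tracking concrete, here is a minimal Python sketch of a trace span wrapped around a single model call. The `Span` record, the `fake_llm` stand-in, and the per-token prices are hypothetical placeholders, not any vendor's pricing or any platform's SDK; token counts are approximated by word counts rather than a real tokenizer.

```python
import time
from dataclasses import dataclass, field

# Hypothetical per-1K-token prices in USD; real prices vary by provider and model.
PRICE_PER_1K = {"input": 0.0005, "output": 0.0015}


@dataclass
class Span:
    """One traced LLM call: inputs, outputs, token usage, and timing."""
    name: str
    prompt: str
    response: str = ""
    input_tokens: int = 0
    output_tokens: int = 0
    latency_s: float = 0.0
    metadata: dict = field(default_factory=dict)

    @property
    def cost_usd(self) -> float:
        return (self.input_tokens * PRICE_PER_1K["input"]
                + self.output_tokens * PRICE_PER_1K["output"]) / 1000


def fake_llm(prompt: str) -> tuple:
    """Stand-in for a real model call; token counts are approximated by word counts."""
    response = "Paris is the capital of France."
    return response, len(prompt.split()), len(response.split())


def traced_call(name: str, prompt: str, **metadata) -> Span:
    """Run the call and record what went in, what came out, and how long it took."""
    span = Span(name=name, prompt=prompt, metadata=metadata)
    start = time.perf_counter()
    span.response, span.input_tokens, span.output_tokens = fake_llm(prompt)
    span.latency_s = time.perf_counter() - start
    return span


span = traced_call("answer_question", "What is the capital of France?", user_id="u-123")
print(f"{span.name}: {span.latency_s * 1000:.3f} ms, "
      f"{span.input_tokens} in / {span.output_tokens} out tokens, "
      f"${span.cost_usd:.6f}")
```

A multi-step agent run would nest spans like this under a parent trace, which is what lets an observability platform reconstruct the whole workflow and attribute cost to each step.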

The urgency for robust observability has intensified with regulatory pressures like the EU AI Act, which demands transparency and accountability in AI systems. Organizations can no longer treat AI as a black box; they need verifiable records of model behavior and decision-making processes.

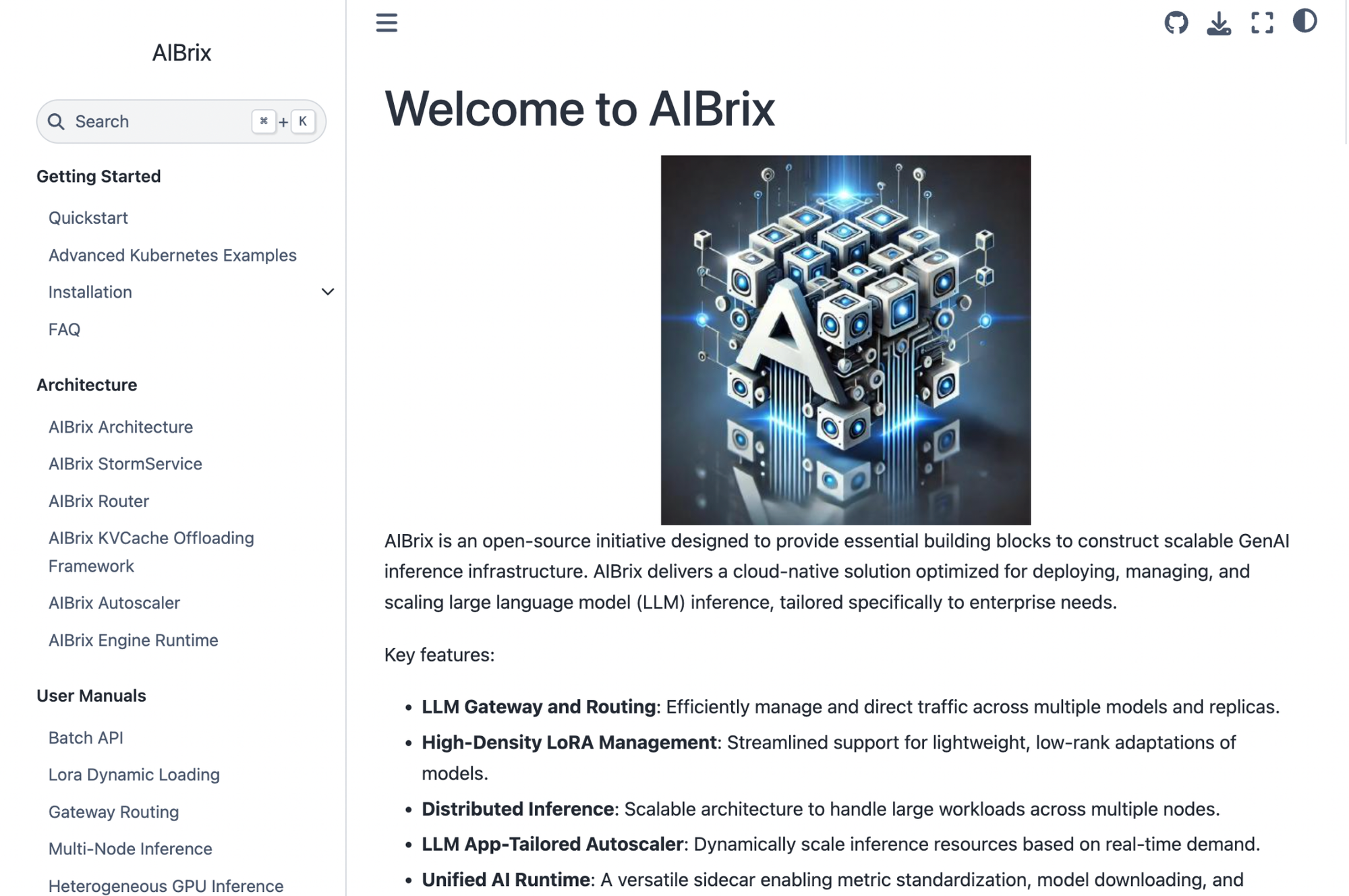

Infrastructure-First: AIBrix

AIBrix represents a cutting-edge approach to LLM infrastructure optimization, emerging in 2025 as an open-source, cloud-native framework designed for massive-scale deployments. Born from academic and industry collaboration, AIBrix acts as a control plane for serving LLMs efficiently in the cloud, with every layer purpose-built to work with inference engines such as vLLM.

Standout Features

The platform's most impressive innovation is its distributed key-value cache that extends attention caching across nodes rather than limiting it to individual instances. This shared cache yields a reported 50% throughput boost and 70% reduction in latency by reusing computation results when multiple requests share overlapping prompt prefixes.
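The idea behind prefix reuse can be sketched with a toy cache. This sketch assumes prompts are split into fixed-size token blocks, each identified by a hash chained over the entire preceding prefix (similar in spirit to block-level prefix caching in vLLM). The `PrefixKVCache` class and the block size are hypothetical, the stored values are placeholders rather than real attention tensors, and none of this is AIBrix's implementation.

```python
from hashlib import sha256

BLOCK_SIZE = 4  # toy tokens-per-block value; production systems use larger blocks


def block_hashes(tokens: list) -> list:
    """Hash each full block chained with the hash of everything before it,
    so two requests share a block hash only if they share the whole prefix."""
    hashes, prev = [], ""
    full = len(tokens) - len(tokens) % BLOCK_SIZE
    for i in range(0, full, BLOCK_SIZE):
        prev = sha256((prev + str(tokens[i:i + BLOCK_SIZE])).encode()).hexdigest()
        hashes.append(prev)
    return hashes


class PrefixKVCache:
    """Toy shared KV cache; in the design described above it spans nodes, here it is a dict."""

    def __init__(self):
        self.blocks = {}  # block hash -> placeholder for cached attention keys/values

    def serve(self, tokens: list) -> int:
        """Process a prompt and return how many tokens were reused rather than recomputed."""
        reused, matching = 0, True
        for h in block_hashes(tokens):
            if matching and h in self.blocks:
                reused += BLOCK_SIZE
            else:
                matching = False  # prefix diverged: this and later blocks must be computed
                self.blocks[h] = f"kv-state-{h[:8]}"
        return reused


cache = PrefixKVCache()
system_prompt = list(range(12))  # 12 shared "system prompt" token IDs = 3 full blocks
print(cache.serve(system_prompt + [100, 101, 102, 103]))  # → 0 (cold cache)
print(cache.serve(system_prompt + [200, 201, 202, 203]))  # → 12 (shared prefix reused)
```

Chaining the hashes means a hit on block k implies hits on every earlier block, so counting consecutive hits from the start of the prompt is enough to measure the reusable prefix.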

AIBrix also introduces prefix-aware routing that directs requests to model instances already "warm" with relevant context, dramatically increasing cache hits. The system's SLO-driven GPU optimization actively monitors service-level objectives like tail latency and dynamically adjusts GPU allocations to meet performance targets while optimizing costs.
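Prefix-aware routing can be illustrated in the same toy setting. In this hypothetical sketch, each `Instance` remembers which prefix blocks it has cached, and `route` sends a request to the instance with the longest warm prefix, falling back to the least-loaded one. AIBrix's actual routing policies and APIs are not reproduced here.

```python
from hashlib import sha256

BLOCK_SIZE = 4  # toy tokens-per-block value


def block_hashes(tokens):
    """Chained block hashes: equal hashes at position i imply an identical prefix up to block i."""
    hashes, prev = [], ""
    for i in range(0, len(tokens) // BLOCK_SIZE * BLOCK_SIZE, BLOCK_SIZE):
        prev = sha256((prev + str(tokens[i:i + BLOCK_SIZE])).encode()).hexdigest()
        hashes.append(prev)
    return hashes


class Instance:
    """A hypothetical model-serving instance and the prefix blocks it currently holds."""

    def __init__(self, name):
        self.name = name
        self.cached = set()  # block hashes whose KV state is resident on this instance
        self.load = 0        # in-flight requests (never decremented in this toy)

    def warm_blocks(self, hashes):
        """Count consecutive leading blocks already cached here."""
        n = 0
        for h in hashes:
            if h not in self.cached:
                break
            n += 1
        return n


def route(instances, tokens):
    """Prefer the longest warm prefix; among equals, prefer the least-loaded instance."""
    hashes = block_hashes(tokens)
    best = max(instances, key=lambda inst: (inst.warm_blocks(hashes), -inst.load))
    best.cached.update(hashes)  # after serving, the chosen instance holds this prefix
    best.load += 1
    return best


pods = [Instance("pod-a"), Instance("pod-b")]
chat = list(range(8))  # 8 token IDs = 2 full blocks
print(route(pods, chat).name)                     # → pod-a (cold start, tie goes to first)
print(route(pods, chat + [50, 51, 52, 53]).name)  # → pod-a (2 warm blocks there)
print(route(pods, [900, 901, 902, 903]).name)     # → pod-b (no warm prefix, lower load)
```

A production router would also release load when requests finish and account for queue depth and cache eviction; the toy version only shows why warm instances attract matching traffic and raise the cache hit rate.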

Additional capabilities include:

- High-density LoRA management for serving multiple fine-tuned model variants

- LLM-specific autoscalers that respond to token throughput patterns

- Hybrid orchestration combining Kubernetes and Ray for flexible scaling

- Built-in reliability tools with automated GPU failure detection and routing
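Token-throughput autoscaling and the SLO-driven adjustment described earlier can be combined into a simple scaling policy. This is a hypothetical sketch: the thresholds (`target_tokens_per_replica`, `slo_p99_ms`) and the nearest-rank p99 calculation are illustrative choices, not AIBrix's autoscaler logic or defaults.

```python
import math


def p99(latencies_ms):
    """Nearest-rank 99th percentile, using integer math to avoid float rounding."""
    ordered = sorted(latencies_ms)
    rank = (99 * len(ordered) + 99) // 100  # ceil(0.99 * n)
    return ordered[rank - 1]


def desired_replicas(tokens_per_s, latencies_ms, current,
                     target_tokens_per_replica=2000.0, slo_p99_ms=500.0,
                     min_replicas=1, max_replicas=16):
    """Hypothetical policy: size for token throughput, then add a replica on a p99 SLO breach."""
    wanted = math.ceil(tokens_per_s / target_tokens_per_replica)
    if latencies_ms and p99(latencies_ms) > slo_p99_ms:
        wanted = max(wanted, current + 1)
    return max(min_replicas, min(max_replicas, wanted))


recent = [120] * 98 + [900, 950]  # last window: two slow requests out of 100
print(desired_replicas(3000, recent, current=2))       # → 3 (throughput needs 2, SLO breach adds 1)
print(desired_replicas(5000, [120] * 100, current=2))  # → 3 (throughput alone needs 3)
```

Scaling on token throughput rather than request count matters for LLMs because a single long generation can cost as much GPU time as many short ones.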

Best For

AIBrix shines for organizations running their own LLMs at massive scale: think cloud providers, or enterprises powering AI services for millions of users. However, it focuses purely on inference optimization and provides no observability into outputs or quality, so it must be paired with other monitoring tools for complete visibility.

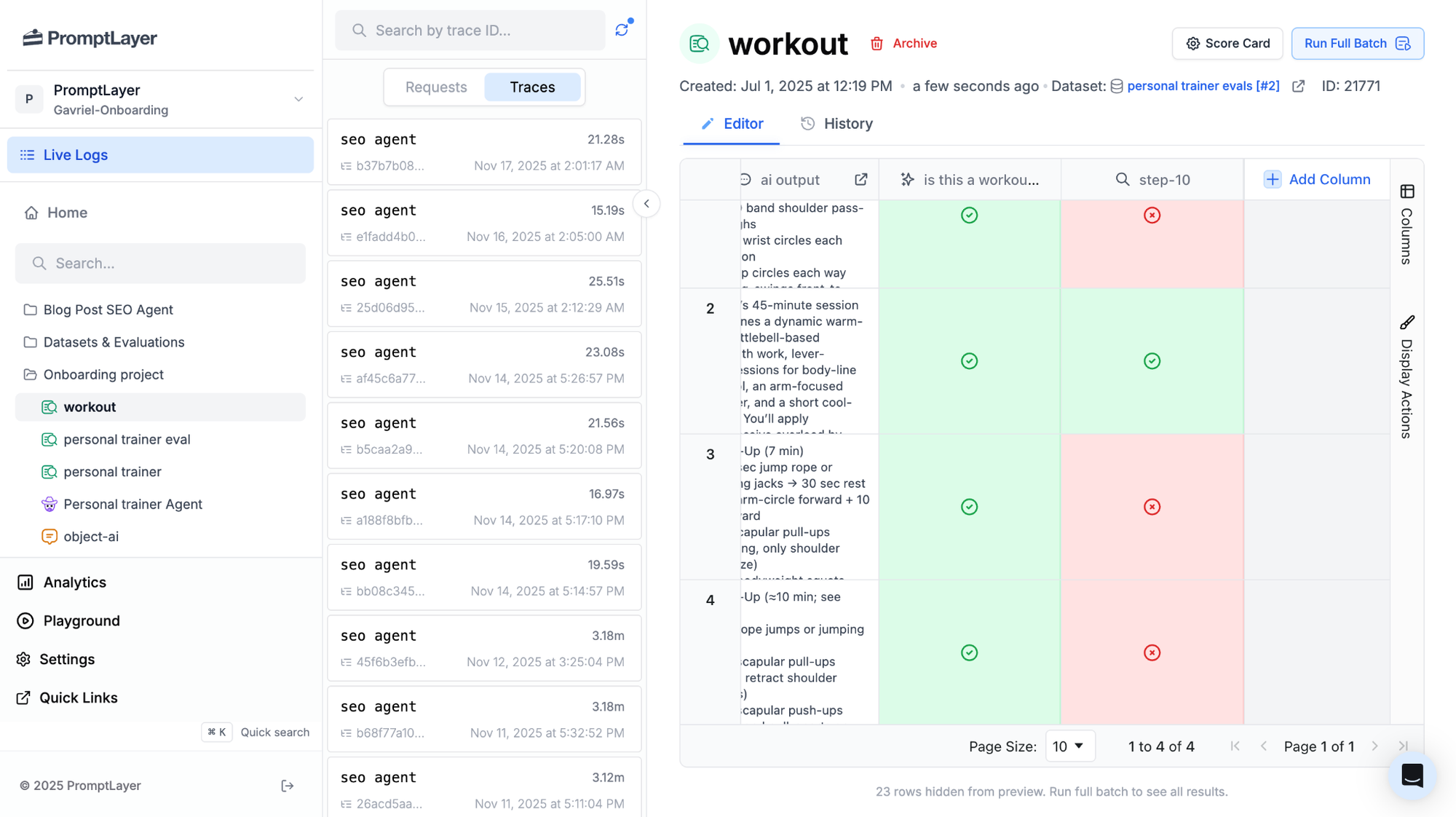

PromptLayer: Git for Prompts

PromptLayer emerged as one of the first platforms specifically addressing prompt management pain points. It treats prompts as first-class artifacts requiring version control, monitoring, and quality assurance, much like code.

Key features include:

- Prompt version control with Git-like history and comparison capabilities

- A/B testing to measure the impact of prompt changes on model performance

- Searchable logging of every prompt-response pair with rich metadata

- Native integrations with OpenAI and LangChain requiring minimal setup

PromptLayer's interface not only shows what happened but also supports a data-driven workflow: identify problematic outputs, adjust prompts, and verify improvements through measurable outcomes.
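The version-and-experiment workflow can be sketched without any particular SDK. The `PromptRegistry` class and `ab_variant` helper below are hypothetical (PromptLayer's actual API is not shown): the sketch keeps every published template as an immutable version and assigns users to variants deterministically by hashing their ID, so each user sees a consistent prompt throughout an experiment.

```python
import hashlib
from collections import defaultdict


class PromptRegistry:
    """Hypothetical in-memory registry: each published template becomes an immutable version."""

    def __init__(self):
        self.versions = defaultdict(list)  # prompt name -> [v1 template, v2 template, ...]

    def publish(self, name, template):
        self.versions[name].append(template)
        return len(self.versions[name])  # 1-based version number

    def get(self, name, version):
        return self.versions[name][version - 1]


def ab_variant(user_id, versions=(1, 2), split=0.5):
    """Deterministically map a user to a variant by hashing the user ID into [0, 1)."""
    bucket = int(hashlib.sha256(user_id.encode()).hexdigest(), 16) % 10_000 / 10_000
    return versions[0] if bucket < split else versions[1]


registry = PromptRegistry()
v1 = registry.publish("summarize", "Summarize this text: {text}")
v2 = registry.publish("summarize", "Summarize this text in three bullet points: {text}")

log = []  # every record carries the prompt version, so results can be compared per version
for user in ["alice", "bob", "carol", "dave"]:
    version = ab_variant(user, versions=(v1, v2))
    prompt = registry.get("summarize", version).format(text="<document text>")
    log.append({"user": user, "prompt_version": version, "prompt": prompt})

for record in log:
    print(f'{record["user"]}: v{record["prompt_version"]} -> {record["prompt"]}')
```

Because every log record carries its prompt version, comparing feedback or evaluation scores between versions reduces to a group-by over the log.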

Best For

PromptLayer is ideal for teams where prompt quality directly determines product success and that need rigorous prompt management and experimentation. It also fits teams that want immediate visibility with minimal setup overhead.

Advanced Analysis: Arize AI & Specialized Tools

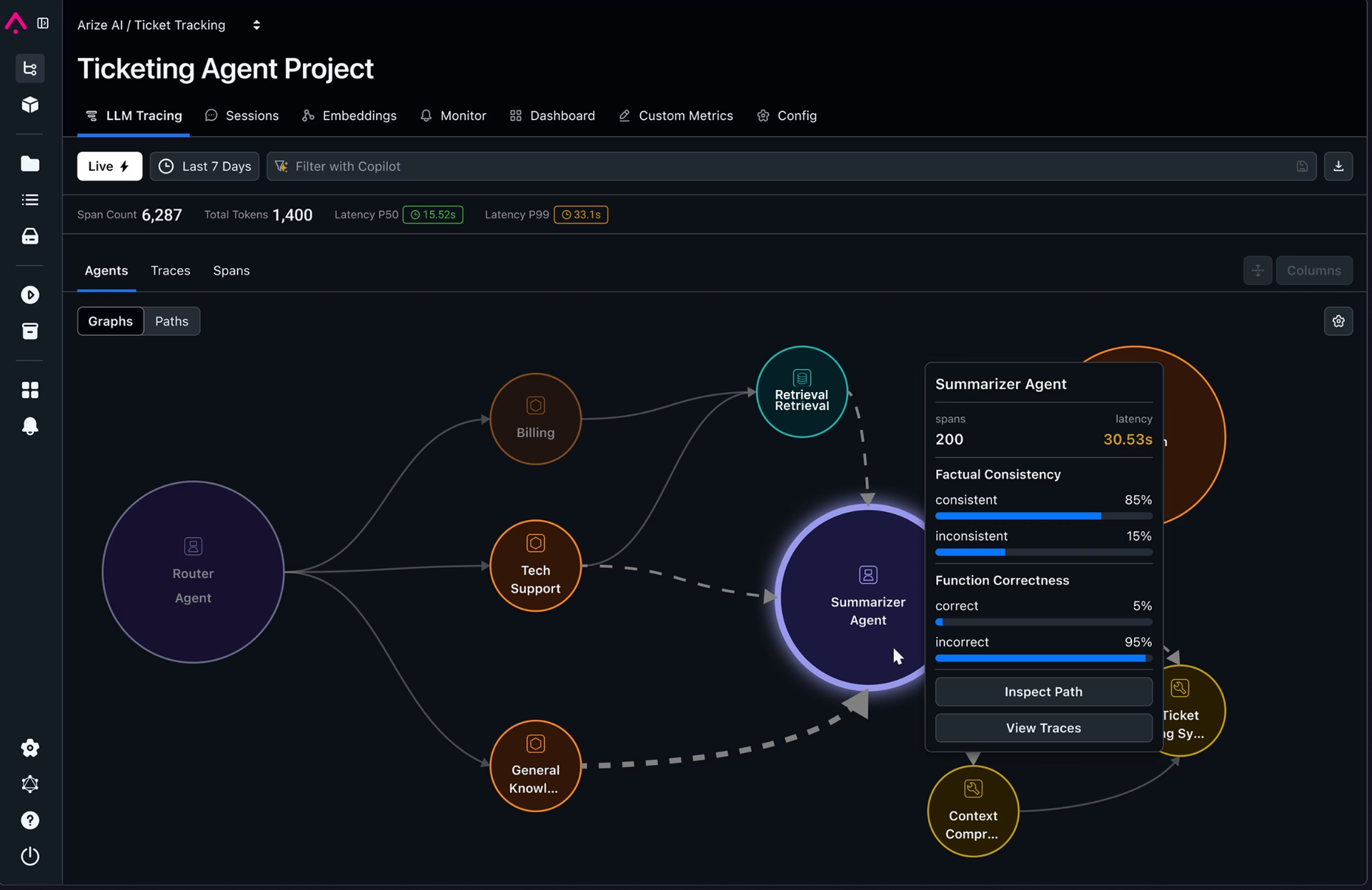

Arize AI: ML-Native Observability

Arize AI brings sophisticated ML monitoring capabilities to LLMs, focusing on detecting subtle performance degradation that simpler tools miss. Its standout feature is embedding drift detection: it monitors embeddings (numerical representations of text) to catch semantic shifts indicating that the model is encountering new types of input or that its behavior is changing over time.
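One simple way to quantify embedding drift is to compare the average embedding of a reference window with that of recent traffic. The sketch below uses cosine distance between centroids and a hypothetical threshold of 0.1; Arize's own drift metrics may be computed differently, and the 3-dimensional vectors stand in for real embeddings with hundreds or thousands of dimensions.

```python
import math


def centroid(vectors):
    """Element-wise mean of a list of equal-length vectors."""
    dim = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


def cosine_distance(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norm


def embedding_drift(baseline, current, threshold=0.1):
    """Return (distance, drifted?) comparing a reference window with the latest window."""
    distance = cosine_distance(centroid(baseline), centroid(current))
    return distance, distance > threshold


# Toy 3-D "embeddings" for illustration only.
baseline = [[0.9, 0.1, 0.0], [0.8, 0.2, 0.1], [1.0, 0.0, 0.1]]
similar = [[0.85, 0.15, 0.05], [0.95, 0.05, 0.0]]
shifted = [[0.1, 0.9, 0.2], [0.0, 1.0, 0.1]]

print(embedding_drift(baseline, similar))  # small distance, no alert
print(embedding_drift(baseline, shifted))  # large distance, drift flagged
```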

The platform provides:

- RAG pipeline monitoring that tracks each stage from retrieval to generation

- Fine-grained analysis allowing metric slicing by user segment, prompt type, or time

- Integrations with major providers, plus Arize's open-source Phoenix toolkit for local analysis
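Metric slicing amounts to grouping per-request scores by a dimension and comparing the groups. The records below are made-up toy data, and `slice_metric` is a hypothetical helper rather than part of any Arize API; the point is that an aggregate rate can hide a problem concentrated in one segment or prompt type.

```python
from collections import defaultdict


def slice_metric(records, key, metric):
    """Average a per-request metric within each value of a slicing key."""
    sums, counts = defaultdict(float), defaultdict(int)
    for r in records:
        sums[r[key]] += r[metric]
        counts[r[key]] += 1
    return {k: sums[k] / counts[k] for k in sums}


# Toy records for illustration only; 1.0 means the response was flagged as a hallucination.
records = [
    {"segment": "free", "prompt_type": "qa", "hallucination": 0.0},
    {"segment": "free", "prompt_type": "summary", "hallucination": 1.0},
    {"segment": "enterprise", "prompt_type": "qa", "hallucination": 0.0},
    {"segment": "enterprise", "prompt_type": "summary", "hallucination": 0.0},
    {"segment": "free", "prompt_type": "summary", "hallucination": 1.0},
]

print(slice_metric(records, "segment", "hallucination"))
print(slice_metric(records, "prompt_type", "hallucination"))
```

Here the overall rate is 2 of 5 requests, but slicing shows both flagged responses come from `free`-segment `summary` prompts, which points the investigation at a specific prompt and audience.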

AgentSight: System-Level Intelligence

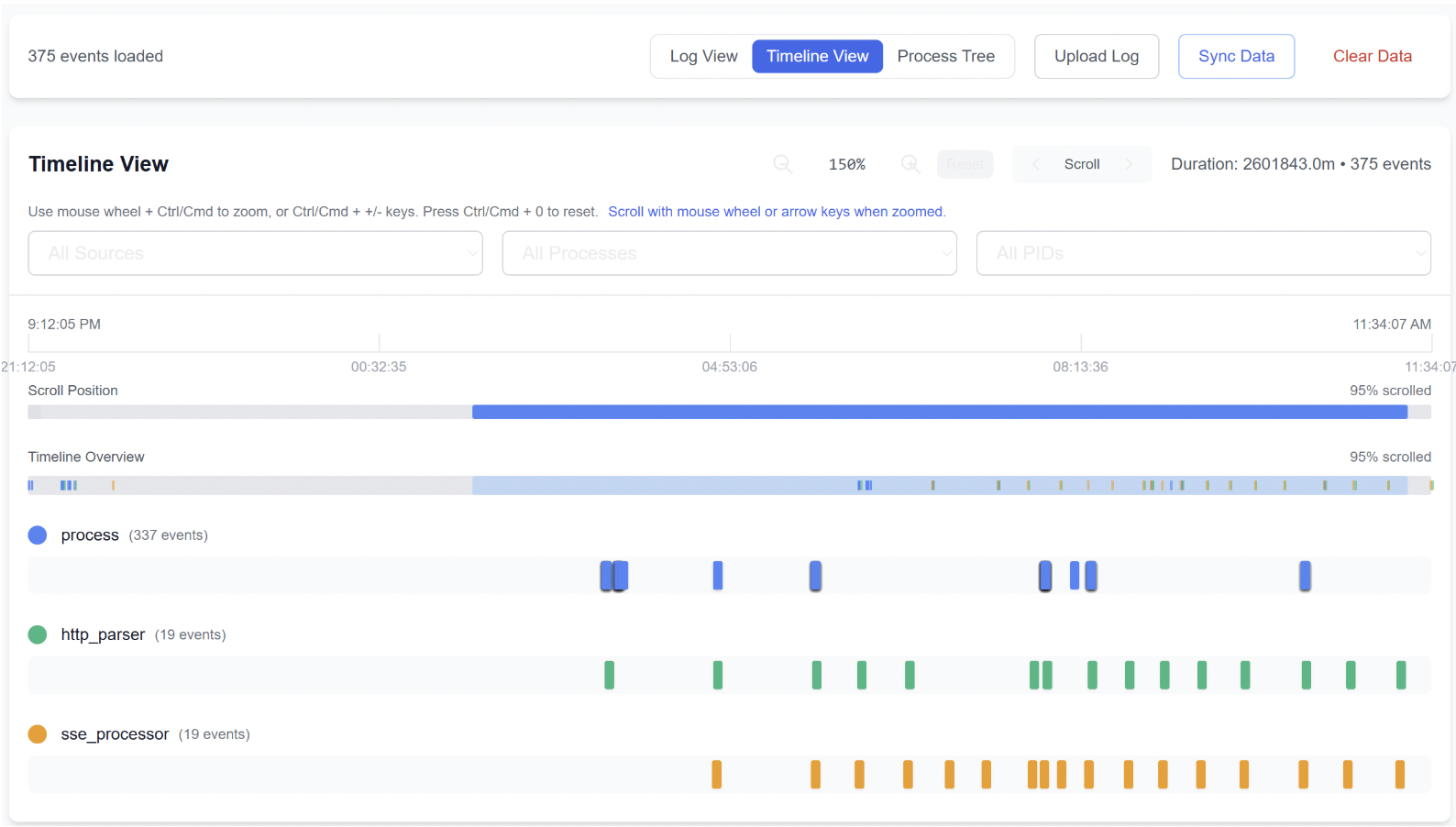

AgentSight represents a novel approach that uses eBPF to monitor AI agents at the kernel level. It bridges the gap between an agent's high-level intentions and its actual system effects, which is crucial for autonomous agents with system privileges.

The tool combines:

- Semantic intent extracted from LLM API calls

- System actions like file operations and process creation

- Real-time analysis flagging when benign intentions lead to potentially harmful actions

In reported evaluations, this approach caught prompt injection attacks and resource-wasting loops while adding minimal overhead (under 3%).
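The correlation step can be illustrated in user space. This hypothetical sketch does not use eBPF: it assumes intent records (already extracted from LLM API traffic) and system events (as a kernel probe might report them) are available as plain objects, attributes each event to the most recent intent within a time window, and applies a simple rule-based check as a stand-in for the richer semantic analysis described above. The scenario mimics a prompt injection, where an agent asked to summarize a README tries to write to an SSH key file.

```python
from dataclasses import dataclass

SENSITIVE_PREFIXES = ("/etc/", "/root/.ssh/")  # hypothetical policy list


@dataclass
class Intent:
    """What the agent set out to do, as extracted from an intercepted LLM exchange (hypothetical)."""
    ts: float
    task: str
    allowed_prefixes: tuple


@dataclass
class SysEvent:
    """A system action as a kernel-level probe might report it (hypothetical format)."""
    ts: float
    kind: str    # e.g. "open_read", "open_write", "exec"
    target: str


def correlate(intents, events, window_s=5.0):
    """Attribute each event to the latest preceding intent in the window; flag mismatches."""
    alerts = []
    for ev in sorted(events, key=lambda e: e.ts):
        candidates = [it for it in intents if 0 <= ev.ts - it.ts <= window_s]
        intent = max(candidates, key=lambda it: it.ts) if candidates else None
        if intent is None:
            alerts.append(("unattributed", ev))
        elif (ev.target.startswith(SENSITIVE_PREFIXES)
              or not ev.target.startswith(intent.allowed_prefixes)):
            alerts.append((intent.task, ev))
    return alerts


intents = [Intent(ts=10.0, task="summarize project README", allowed_prefixes=("/workspace/",))]
events = [
    SysEvent(ts=10.5, kind="open_read", target="/workspace/README.md"),
    SysEvent(ts=11.2, kind="open_write", target="/root/.ssh/authorized_keys"),
]
for task, ev in correlate(intents, events):
    print(f"ALERT during '{task}': {ev.kind} {ev.target}")
```

The benign read of the README passes, while the write to `authorized_keys` is flagged because it neither matches the stated task nor stays inside the allowed workspace.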

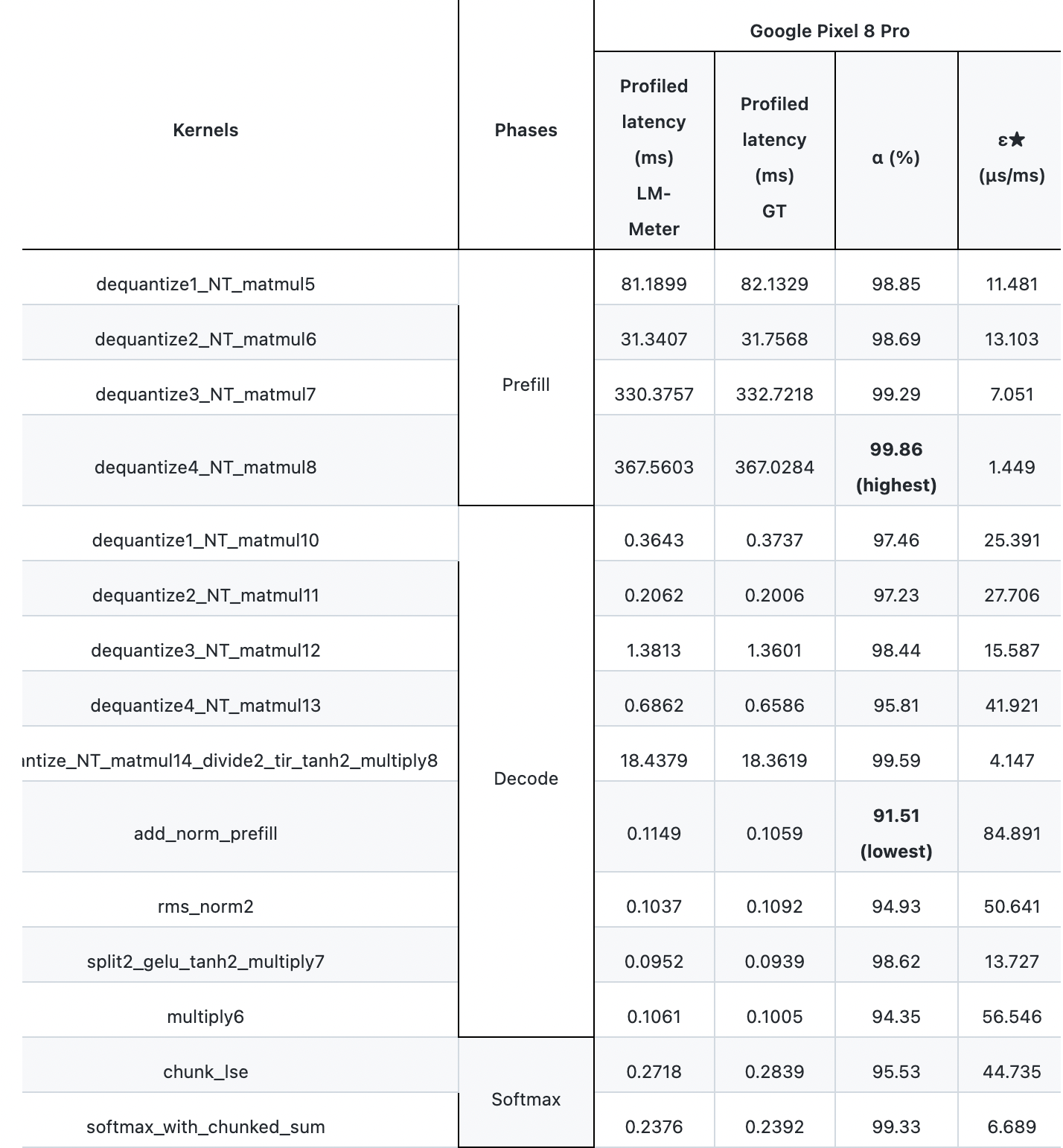

lm-Meter: Edge Deployment Insights

For on-device LLM deployments, lm-Meter offers what its authors describe as the first lightweight latency profiler designed specifically for constrained hardware. It breaks inference down into phases (embedding computation, token decoding, softmax), revealing exactly where time is spent on mobile devices.

The tool achieves:

- Fine-grained profiling at the kernel-operation level

- Minimal overhead (under 2.5% in heavy workloads)

- Real-time operation without external debugging tools
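Phase-level accounting, the bookkeeping behind this kind of breakdown, can be sketched with a context manager that accumulates wall-clock time per phase. This is a hypothetical Python illustration, not lm-Meter's on-device implementation: the `busy` loops stand in for real model operations, the eight decode steps are arbitrary, and the phase names simply follow the text above.

```python
import time
from collections import defaultdict
from contextlib import contextmanager


class PhaseProfiler:
    """Accumulates wall-clock time per named inference phase."""

    def __init__(self):
        self.totals = defaultdict(float)

    @contextmanager
    def phase(self, name):
        start = time.perf_counter()
        try:
            yield
        finally:
            self.totals[name] += time.perf_counter() - start

    def report(self):
        """Share of total profiled time spent in each phase."""
        total = sum(self.totals.values())
        return {name: t / total for name, t in self.totals.items()}


def busy(n):
    # Stand-in for real compute (embedding lookups, matmuls, etc.).
    return sum(i * i for i in range(n))


prof = PhaseProfiler()
with prof.phase("embedding"):
    busy(20_000)
for _ in range(8):  # hypothetical 8 decode steps
    with prof.phase("decode"):
        busy(50_000)
    with prof.phase("softmax"):
        busy(5_000)

for name, share in prof.report().items():
    print(f"{name:>9}: {share:6.1%}")
```

A purpose-built profiler would hook into the inference runtime itself; this version only shows how per-phase timings roll up into a percentage breakdown.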

Best For

These specialized tools serve specific niches: Arize for data science teams requiring deep ML analytics, AgentSight for security-conscious deployments of autonomous agents, and lm-Meter for optimizing edge AI applications where every millisecond counts.

The New AI Ops Stack

These platforms collectively turn AI development from an art into an engineering discipline, making issues transparent and improvements measurable. The evolution from basic prompt logging to today's sophisticated monitoring represents a crucial maturation of the AI ecosystem.

No single platform solves everything. Organizations often combine several based on their specific requirements: infrastructure optimization with AIBrix, prompt management with PromptLayer, and deep analysis with Arize.

Looking ahead, we're seeing exciting developments: AI-assisted observability where models help monitor themselves, tighter integration with evaluation frameworks for automated quality testing, and regulatory compliance features becoming standard as governance requirements solidify.

As LLMs move from experiments to production systems powering critical applications, robust observability is the foundation for building AI that's reliable, efficient, and trustworthy. These platforms provide the instrumentation needed to deploy AI confidently at scale, turning the opaque into the observable and the unpredictable into the manageable.