Is JSON Prompting a Good Strategy?

A clever prompt engineering trick called "JSON Prompting" has been circulating on Twitter. Instead of feeding LLMs blobs of natural-language text and hoping they understand it, this strategy calls for sending your query as structured JSON.

For example, rather than "Summarize the customer feedback about shipping", you pass {"task": "summarize", "topic": "customer_feedback", "focus": "shipping"}.

Traditional prompting relies on natural-language instructions. These can produce inconsistent responses, especially when downstream systems need to parse the outputs. JSON prompting aims to solve this problem by structuring the prompt as a contract.

Proponents say this technique significantly reduces ambiguity and makes AI-generated responses easier to validate and monitor. Whether you're building multi-agent systems, automating workflows, or extracting structured data, they argue that JSON prompting makes LLMs behave like dependable APIs.

But not everyone agrees. Noah MacCallum from OpenAI says "Guys I hate to rain on this parade but json prompting isn’t better. This post doesn’t even try to provide evidence that it’s better, it’s just hype."

We believe it's a valuable tool in your toolbox. It clearly helps with image generation models, and it may also help keep prompts better organized.

What JSON Prompting Actually Does

Traditional AI interactions feel like conversations with a brilliant but inconsistent colleague. You ask a question, and while the response might be insightful, it arrives in an unpredictable format that requires complex parsing and error handling. JSON Prompting changes this dynamic entirely.

Consider the following example:

Traditional Prompt:

"Analyze this customer review and tell me about the sentiment"

JSON Prompt:

{
  "task": "sentiment_analysis",
  "input": "The product exceeded my expectations!",
  "output_format": {
    "sentiment": "positive|negative|neutral",
    "confidence": "0.0-1.0",
    "key_phrases": ["array", "of", "strings"],
    "summary": "brief explanation"
  }
}

As you can see, a structured, code-like format makes some messages easier to convey and others harder.
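Writing the JSON by hand works for a demo. In an application, you would normally build it from a dictionary so that the review text is escaped correctly, for instance when the review itself contains double quotes. Here is a minimal Python sketch. It assumes a hypothetical helper named `build_json_prompt` and leaves out sending the resulting string to a model:

```python
import json


def build_json_prompt(task: str, text: str, output_format: dict) -> str:
    """Assemble a JSON prompt; json.dumps escapes quotes and newlines in the input."""
    prompt = {"task": task, "input": text, "output_format": output_format}
    return json.dumps(prompt, indent=2)


# Same output contract as the example above
SENTIMENT_FORMAT = {
    "sentiment": "positive|negative|neutral",
    "confidence": "0.0-1.0",
    "key_phrases": ["array", "of", "strings"],
    "summary": "brief explanation",
}

prompt = build_json_prompt(
    "sentiment_analysis", "The product exceeded my expectations!", SENTIMENT_FORMAT
)
print(prompt)
```

Because `json.dumps` handles quoting, a review like `He said "wow"` round-trips intact instead of breaking the prompt's structure.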

Why It Works

JSON Prompting works because it leverages how LLMs actually learn. During training, these models encounter millions of examples of structured data: API responses, configuration files, database schemas, and code documentation. JSON patterns are deeply embedded in what they have learned.

JSON Prompting makes AI behave less like an unpredictable tool and more like a reliable system component. Teams can build automated workflows that expect outputs to match a known format. This reduces, but does not remove, the need for error handling and manual intervention.

Integration Simplicity

Structured outputs largely eliminate the parsing nightmare that often comes with AI integration. Developers can treat the output like any other API response.
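To make "like any other API response" concrete, here is a sketch that parses a reply in the sentiment format from the earlier example into a typed object. The `SentimentResult` class, the `parse_sentiment` function, and the reply text are all made up for illustration:

```python
import json
from dataclasses import dataclass


@dataclass
class SentimentResult:
    sentiment: str
    confidence: float
    key_phrases: list[str]
    summary: str


def parse_sentiment(raw: str) -> SentimentResult:
    """Parse a model reply; raises json.JSONDecodeError or KeyError on bad output."""
    data = json.loads(raw)
    return SentimentResult(
        sentiment=data["sentiment"],
        confidence=float(data["confidence"]),
        key_phrases=list(data["key_phrases"]),
        summary=data["summary"],
    )


# A made-up model reply, used only for illustration
reply = (
    '{"sentiment": "positive", "confidence": 0.92, '
    '"key_phrases": ["exceeded my expectations"], "summary": "Customer is very satisfied."}'
)
result = parse_sentiment(reply)
print(result.sentiment, result.confidence)  # → positive 0.92
```

If the reply is not valid JSON, `json.loads` raises `json.JSONDecodeError`. A missing key raises `KeyError`. Either way, failures surface at the boundary instead of deep inside your application.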

Schema-Driven Development

The most sophisticated JSON Prompting implementations use detailed schemas that define exact output requirements:

{
  "prompt": "Extract key information from this legal document",
  "schema": {
    "type": "object",
    "required": ["document_type", "parties", "key_dates"],
    "properties": {
      "document_type": {"enum": ["contract", "agreement", "memorandum"]},
      "parties": {"type": "array", "items": {"type": "string"}},
      "key_dates": {"type": "array", "items": {"type": "string", "format": "date"}},
      "risk_level": {"enum": ["low", "medium", "high"]}
    }
  }
}

This approach helps ensure that outputs conform to specific business requirements and data types.
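Stating a schema in the prompt does not by itself guarantee that the model follows it, so it is worth checking the reply against the same schema. Production code would normally use a full JSON Schema validator, for example the `validate` function from the `jsonschema` package. The sketch below instead hand-rolls only the few keywords this particular schema uses (`required`, `enum`, `type`, `items`, and `format: date`). Its function names are hypothetical:

```python
import json
from datetime import date

# The schema from the example above, as a Python dict
LEGAL_SCHEMA = {
    "type": "object",
    "required": ["document_type", "parties", "key_dates"],
    "properties": {
        "document_type": {"enum": ["contract", "agreement", "memorandum"]},
        "parties": {"type": "array", "items": {"type": "string"}},
        "key_dates": {"type": "array", "items": {"type": "string", "format": "date"}},
        "risk_level": {"enum": ["low", "medium", "high"]},
    },
}


def check_value(name: str, value, rule: dict) -> list[str]:
    """Check one value against the subset of keywords this schema uses."""
    errors = []
    if "enum" in rule and value not in rule["enum"]:
        errors.append(f"{name}: {value!r} not in {rule['enum']}")
    if rule.get("type") == "array":
        if not isinstance(value, list):
            return errors + [f"{name}: expected array"]
        for i, item in enumerate(value):
            errors += check_value(f"{name}[{i}]", item, rule.get("items", {}))
    if rule.get("type") == "string":
        if not isinstance(value, str):
            return errors + [f"{name}: expected string"]
        if rule.get("format") == "date":
            try:
                # Accepts YYYY-MM-DD (newer Python versions also accept some other ISO 8601 forms)
                date.fromisoformat(value)
            except ValueError:
                errors.append(f"{name}: {value!r} is not an ISO date")
    return errors


def validate(instance, schema: dict) -> list[str]:
    """Return a list of error messages; an empty list means the reply passed."""
    if not isinstance(instance, dict):
        return ["expected a JSON object"]
    errors = [f"missing required field: {f}" for f in schema["required"] if f not in instance]
    for field, rule in schema["properties"].items():
        if field in instance:
            errors += check_value(field, instance[field], rule)
    return errors


reply = json.loads(
    '{"document_type": "contract", "parties": ["Acme Corp", "Jane Doe"], "key_dates": ["2024-03-01"]}'
)
print(validate(reply, LEGAL_SCHEMA))  # → []
```

A reply such as `{"document_type": "letter", "parties": "Acme", "key_dates": ["March 1"]}` would produce three errors: an unknown document type, a non-array `parties` field, and a non-ISO date.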

Constrained Generation

Leading model providers now offer constrained generation, where decoding is restricted so that the model cannot produce JSON that violates the supplied schema. This eliminates most parsing errors. A response can still be cut off, for example by a token limit, and valid JSON can still contain wrong values.

Key Platforms Supporting JSON Constraints:

- OpenAI: Structured outputs with schema validation

- Anthropic: JSON mode for reliable formatting

- Google Gemini: Native JSON response options
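As one concrete case, OpenAI's Structured Outputs feature takes a JSON Schema through the `response_format` parameter of the Chat Completions API. The sketch below only builds the request body, reusing the sentiment fields from earlier. The model name is a placeholder and `build_request` is a hypothetical helper. Check the exact parameter shape against OpenAI's current documentation before relying on it. Other providers expose similar features through their own, different parameters:

```python
import json

# JSON Schema for the sentiment output. OpenAI's strict mode expects every
# property to be listed in "required" and additionalProperties set to false.
SENTIMENT_SCHEMA = {
    "type": "object",
    "properties": {
        "sentiment": {"type": "string", "enum": ["positive", "negative", "neutral"]},
        "confidence": {"type": "number"},
        "key_phrases": {"type": "array", "items": {"type": "string"}},
        "summary": {"type": "string"},
    },
    "required": ["sentiment", "confidence", "key_phrases", "summary"],
    "additionalProperties": False,
}


def build_request(review: str, model: str) -> dict:
    """Build (but do not send) a Chat Completions request body using Structured Outputs."""
    return {
        "model": model,  # placeholder: substitute a model that supports Structured Outputs
        "messages": [
            {"role": "system", "content": "Classify the sentiment of the customer review."},
            {"role": "user", "content": review},
        ],
        "response_format": {
            "type": "json_schema",
            "json_schema": {
                "name": "sentiment_analysis",
                "strict": True,
                "schema": SENTIMENT_SCHEMA,
            },
        },
    }


body = build_request("The product exceeded my expectations!", model="YOUR_MODEL_NAME")
print(json.dumps(body, indent=2))
```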

Format Violations

LLMs can sometimes return extra text or malformed JSON. Several strategies help mitigate this. First, state explicit constraints in your prompt (for example, "Return only JSON, with no commentary"). Second, take advantage of platform-specific features like native JSON modes, which are designed to enforce structured outputs. Finally, implement robust parsing and validation with tools like Pydantic in Python or Zod in JavaScript. These libraries check the structure of the response and can coerce compatible values, such as turning a numeric string into a number. They cannot repair JSON that fails to parse.
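The "extra text" failure is common enough that many pipelines pull the JSON object out of the reply before validating it. Here is a standard-library sketch. It assumes the reply holds a single object, possibly wrapped in chatter or a Markdown fence. `extract_json_object` is a hypothetical name. In practice you would pass its result on to Pydantic, Zod, or another validator:

```python
import json


def extract_json_object(text: str) -> dict:
    """Return the first JSON object embedded in text, ignoring surrounding chatter."""
    decoder = json.JSONDecoder()
    for start, ch in enumerate(text):
        if ch != "{":
            continue
        try:
            # raw_decode parses one JSON value from the start of the string
            # and ignores whatever follows it (e.g. a closing ``` fence)
            obj, _consumed = decoder.raw_decode(text[start:])
        except json.JSONDecodeError:
            continue  # this "{" did not begin valid JSON; try the next one
        if isinstance(obj, dict):
            return obj
    raise ValueError("no JSON object found in model output")


reply = 'Sure! Here is the result:\n```json\n{"classification": "billing", "confidence": 0.85}\n```'
print(extract_json_object(reply))  # → {'classification': 'billing', 'confidence': 0.85}
```

The loop tries each `{` in turn. A stray brace in the chatter, such as `{bad}`, is therefore skipped rather than aborting the parse.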

Schema Complexity and Validation

When designing JSON schemas for AI outputs, complexity is the enemy of reliability. Overly intricate schemas tend to confuse models and can noticeably reduce accuracy. Start with a simple structure like {"classification": "category_name", "confidence": 0.85, "reasoning": "brief explanation"} before iterating toward more sophisticated designs.

Use clear, descriptive field names, provide examples within your prompts, and test thoroughly before production deployment. As your needs evolve, invest time in well-designed schemas that reflect your business requirements. One example is a customer feedback schema with fields for sentiment, priority, department, action flags, and summaries.

Treat AI responses like any external API: assume they might be malformed, and handle errors gracefully with robust parsing, schema validation, and retry logic. This defensive approach turns potentially brittle AI integrations into dependable production components.
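Here is one way the parse, validate, and retry loop could look. It is a sketch under a few assumptions. `call_model` stands in for whatever client you use. The schema check covers only the simple `classification`/`confidence`/`reasoning` structure mentioned above. The stub model is invented so that the example runs offline:

```python
import json
from typing import Callable

# Expected fields for the simple classification schema, with accepted Python types
REQUIRED_FIELDS = {"classification": str, "confidence": (int, float), "reasoning": str}


def is_valid(data) -> bool:
    """True if data is an object with every required field of the right type."""
    return isinstance(data, dict) and all(
        key in data and isinstance(data[key], expected)
        for key, expected in REQUIRED_FIELDS.items()
    )


def call_with_retries(call_model: Callable[[str], str], prompt: str, max_attempts: int = 3) -> dict:
    """Call the model until it returns schema-valid JSON or attempts run out."""
    last_error = ""
    for attempt in range(1, max_attempts + 1):
        raw = call_model(prompt)
        try:
            data = json.loads(raw)
        except json.JSONDecodeError as exc:
            last_error = f"attempt {attempt}: malformed JSON ({exc.msg})"
            continue
        if is_valid(data):
            return data
        last_error = f"attempt {attempt}: response did not match the schema"
    raise RuntimeError(f"gave up after {max_attempts} attempts; {last_error}")


# Stub "model" for illustration: the first reply is truncated, the second is valid
replies = iter([
    '{"classification": "billing"',
    '{"classification": "billing", "confidence": 0.85, "reasoning": "Mentions a refund."}',
])
result = call_with_retries(lambda _prompt: next(replies), "Classify this support ticket: ...")
print(result["classification"])  # → billing
```

A common refinement is to include the previous error message in the next prompt so that the model can correct itself, rather than re-sending the identical request.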

Monitor and Iterate

It is impossible to declare that "JSON Prompting" is the best strategy for every use case. As with most things in context engineering, tinkering and building reliable evals is the only way to find out. Try it in your own project: run tests and see whether it helps or hurts.

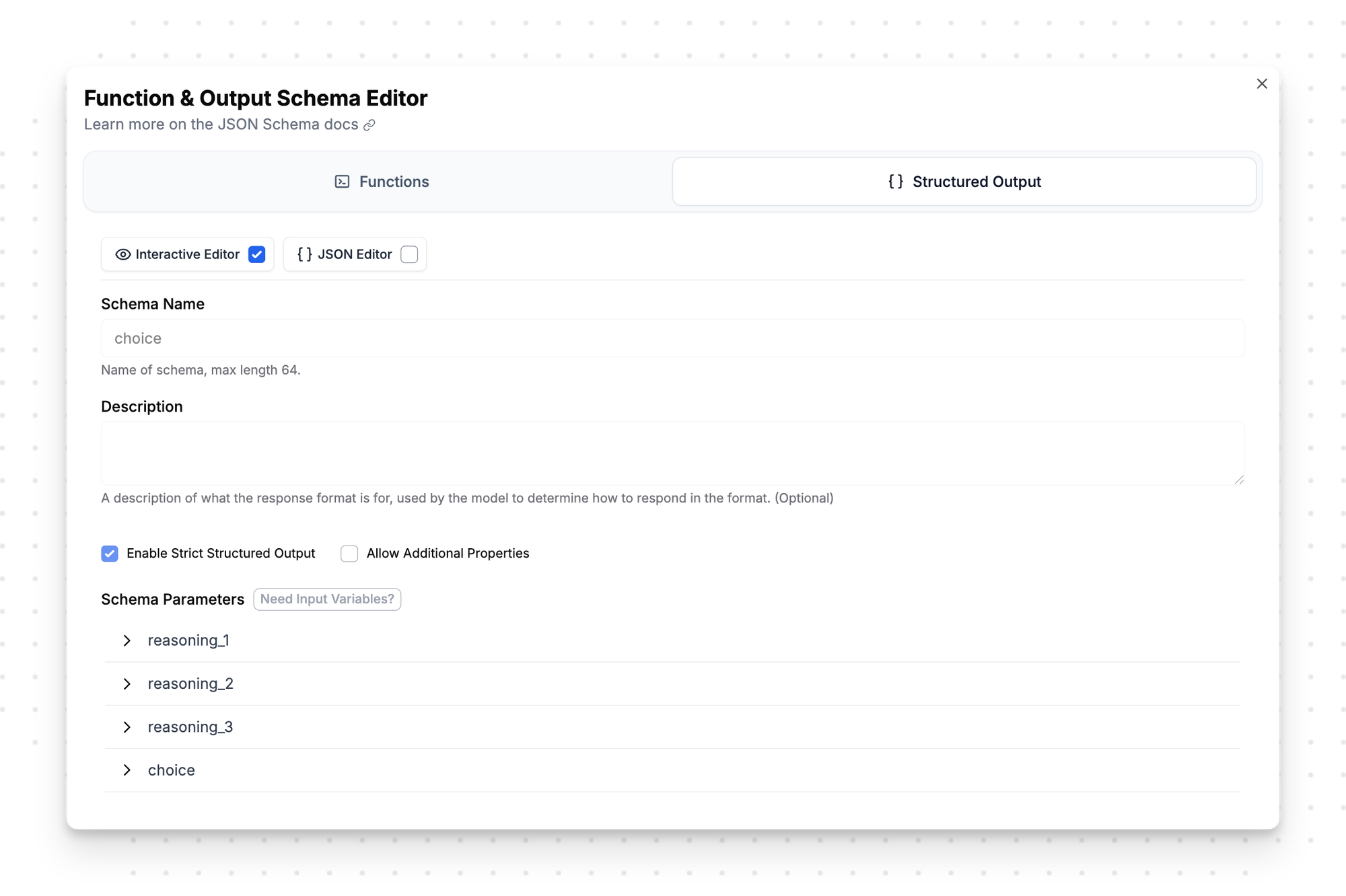

Platforms like PromptLayer are really useful for tracking the performance of your JSON prompts. These tools provide insights into validation failures, response consistency, and prompt effectiveness. Look for patterns in the data and adjust schemas accordingly. Build use-case-specific evals, compare JSON prompts with natural-language prompts side by side, and version your agentic systems.
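Whatever tool you use, the core of a side-by-side comparison is a metric computed over each prompt variant's outputs. The minimal sketch below measures only format compliance: the share of replies that parse as JSON and contain the required keys. The recorded outputs are invented for illustration and are not real results:

```python
import json


def parse_rate(outputs: list[str], required: set[str]) -> float:
    """Fraction of outputs that parse as a JSON object containing every required key."""
    if not outputs:
        return 0.0
    ok = 0
    for raw in outputs:
        try:
            data = json.loads(raw)
        except json.JSONDecodeError:
            continue
        if isinstance(data, dict) and required.issubset(data):
            ok += 1
    return ok / len(outputs)


REQUIRED = {"sentiment", "confidence"}

# Invented outputs from two prompt variants run on the same four inputs
json_outputs = [
    '{"sentiment": "positive", "confidence": 0.9}',
    '{"sentiment": "negative", "confidence": 0.7}',
    '{"sentiment": "neutral", "confidence": 0.6}',
    '{"sentiment": "positive"',
]
natural_language_outputs = [
    '{"sentiment": "positive", "confidence": 0.8}',
    "The sentiment is positive.",
    'Here you go: {"sentiment": "negative", "confidence": 0.6}',
    '{"sentiment": "neutral", "confidence": 0.5}',
]

print(f"JSON prompt: {parse_rate(json_outputs, REQUIRED):.0%}")  # → JSON prompt: 75%
print(f"Natural-language prompt: {parse_rate(natural_language_outputs, REQUIRED):.0%}")  # → Natural-language prompt: 50%
```

Format compliance is only one axis. A fair comparison also scores answer quality, since a prompt that always parses but gives worse answers may not be a win.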

PromptLayer even has an interactive editor for defining structured output JSON. See screenshot below.

Drawbacks and Limitations

JSON prompting isn't a silver bullet. Several experts have pointed out significant drawbacks.

Context Switching Penalty

The founder of PromptLayer notes that models "think" differently when outputting JSON versus natural text. Asking for "Write a love note and return it in JSON with key 'note'" produces worse results than simply "Write a love note". The model switches into technical mode when it sees JSON syntax. This affects creative quality.

Token Inefficiency

Noah MacCallum from OpenAI highlights that JSON creates unnecessary overhead:

- Whitespace consumes tokens

- Escaping special characters adds complexity

- Closing brackets require attention tracking

- The format itself creates "noise" in the attention mechanism
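The first two points are easy to see with Python's `json` module. The sketch below wraps a short, made-up note in JSON and compares string lengths. Character counts are only a rough stand-in for tokens, since real counts depend on each model's tokenizer. The attention-related points are not something a snippet can demonstrate:

```python
import json

# A made-up note containing line breaks and quotation marks
note = 'Dear Sam,\nYou said "forever" and meant it.\nLove, Alex'

pretty = json.dumps({"note": note}, indent=2)                # indentation and spaces for readability
compact = json.dumps({"note": note}, separators=(",", ":"))  # no optional whitespace

print(len(note), len(compact), len(pretty))
# Inside the JSON string, newlines become \n and quotes become \"
print(compact)
```

Even the compact form adds a wrapper and escape sequences that the plain text does not need. The pretty-printed form adds indentation on top.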

He argues that Markdown or XML often performs better, since they're more token-efficient and models handle them naturally.

Wrong Distribution

When you force JSON output, you push the model into "I'm reading/outputting code" mode. This isn't always desirable. Creative tasks suffer. Nuanced reasoning gets constrained. The model pattern-matches on technical documentation rather than human communication.

Good Alternatives Exist

Many models excel at other formats:

- Claude handles XML exceptionally well

- Markdown provides structure without overhead

- Plain text with clear delimiters works fine
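For comparison, here is the same sentiment task written three ways, matching the list above. It is only a sketch of prompt strings. The tag names, headings, and `###` delimiters are arbitrary choices, not anything a particular model requires. `xml.sax.saxutils.escape` guards against a review that contains `<` or `&`:

```python
from xml.sax.saxutils import escape

review = "The product exceeded my expectations!"

# XML-style tags; the tag names are arbitrary
xml_prompt = (
    "<task>sentiment_analysis</task>\n"
    f"<review>{escape(review)}</review>\n"
    "<instructions>Reply with positive, negative, or neutral, "
    "then one sentence of reasoning.</instructions>"
)

# Markdown headings give structure with little syntax overhead
markdown_prompt = f"""## Task
Sentiment analysis

## Review
{review}

## Output
Reply with positive, negative, or neutral, then one sentence of reasoning."""

# Plain text with clear delimiters around the input
delimited_prompt = (
    "Classify the sentiment of the review between the ### markers.\n"
    f"###\n{review}\n###"
)

for p in (xml_prompt, markdown_prompt, delimited_prompt):
    print(p, end="\n\n")
```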

The key is matching format to task. Technical extraction? JSON might help. Creative generation? Avoid it.

The Structured Future of AI

JSON prompting represents a shift in how we think about AI integration. The goal of a prompt engineer is to make models more predictable, not smarter.

As AI becomes embedded in production systems, structured communication becomes non-negotiable. JSON prompting bridges the gap between natural language flexibility and software engineering requirements. The technique works because it aligns with how models were trained and how software systems communicate.

Critics are right that JSON prompting doesn't improve raw performance. But they miss the point. In production, consistency beats brilliance. A slightly less eloquent response that always follows your schema is worth more than genius-level insights you can't parse.

For prompt engineers, JSON prompting is a fundamental skill. Master it. You'll build systems that scale. Ignore it. You'll be stuck parsing text forever.

PromptLayer is an end-to-end prompt engineering workbench for versioning, logging, and evals. Engineers and subject-matter experts team up on the platform to build and scale production-ready AI agents.

Made in NYC 🗽

Sign up for free at www.promptlayer.com 🍰