Gorgias Uses PromptLayer to Automate Customer Support at Scale

Gorgias Scaled Customer Support Automation 20x with PromptLayer and LLMs

The following is a case study of how Gorgias uses PromptLayer.

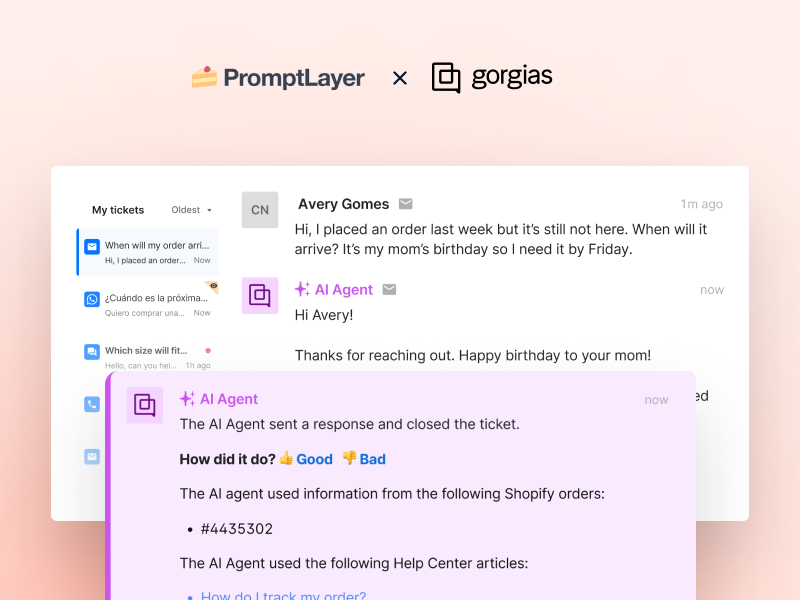

Gorgias is the #1 helpdesk for Shopify, providing ecommerce merchants with tools to deliver excellent customer support. Gorgias initially stayed away from AI chatbots, but recent advances in large language models have led the company to revisit automation.

Gorgias’ AI Agent, which handles customer support interactions, is expected to become one of the company’s biggest sources of revenue. By the end of the year, the company aims to have AI Agent handle 60% of new customer conversations. Gorgias will offer the agent to all 15,000 of its customers and expects at least 6,000 merchants to adopt it right away.

The Gorgias AI Agent Team

The AI Agent team at Gorgias consists of machine learning engineers building model APIs and gateways, and software engineers building the pipeline to interact with the models.

Rafaël Prève, the ML team’s Product Manager, started as the de facto prompt engineer. He has since converted Ognjen Mitrovic, formerly a technical support engineer, into a full-time prompt engineer who now uses PromptLayer daily to develop and test prompts. Following this success, the team plans to substantially increase the headcount of prompt engineers.

The goal is to make the prompt engineers fully autonomous without needing direct ML team involvement for prompt changes and improvements.

Building Fully Autonomous Prompt Engineers

Gorgias’ ML team, led by Firas Jarboui, is setting up PromptLayer so that the AI Agent team’s prompt engineers, PMs from the analytics team, and other teams building AI features within Gorgias can use it autonomously, without direct ML team involvement.

For example, the analytics team is building a system to grade AI Agent’s conversations on a rubric. The ML team built the API endpoint and an initial version of the prompts in PromptLayer. Now the analytics team, which has the relevant domain expertise, can refine the prompts and scoring rubric on its own.

Prompt Engineering Feedback Loop

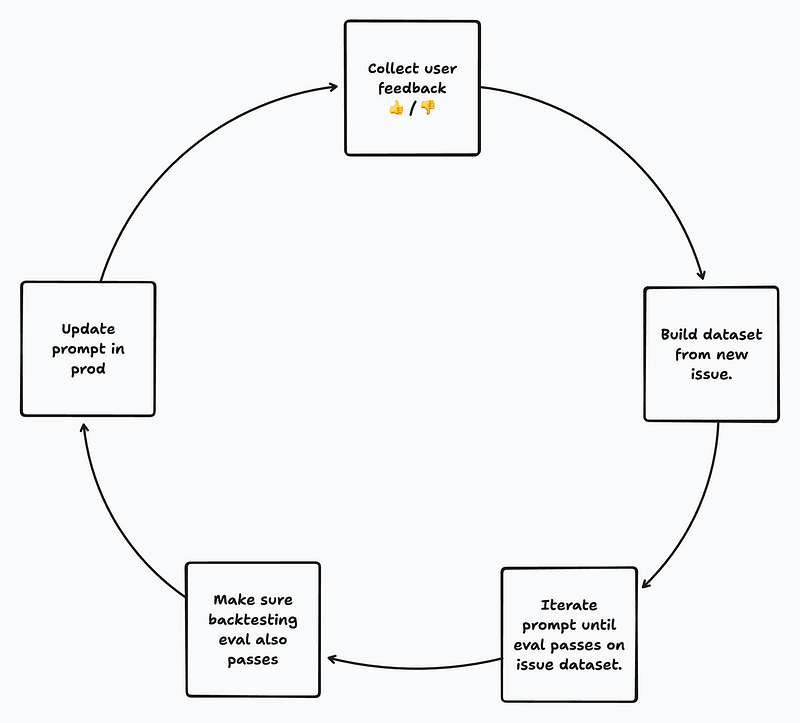

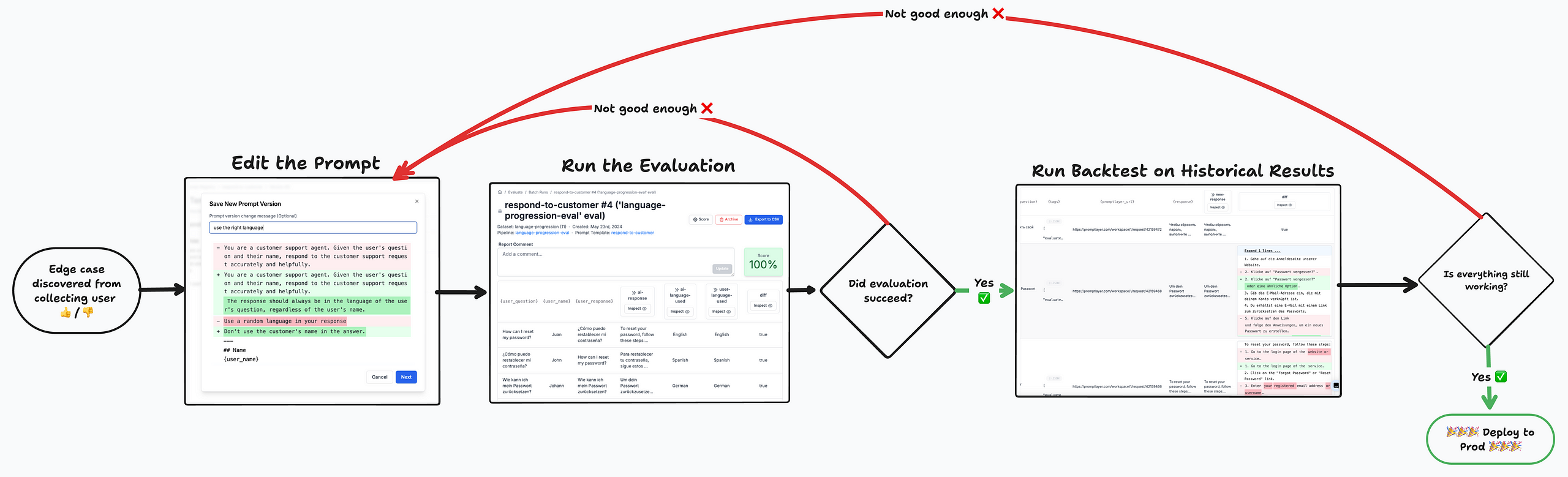

The prompt engineering process at Gorgias follows an iterative loop:

Discover Edge Cases

The first step is to discover edge cases by collecting user feedback (👍 and 👎) from merchants who are using AI Agent. The prompt engineers closely monitor the negative feedback to identify and surface issues, such as the AI overpromising, using the wrong language, or incorrectly closing tickets when it should have handed the conversation over to a human agent.

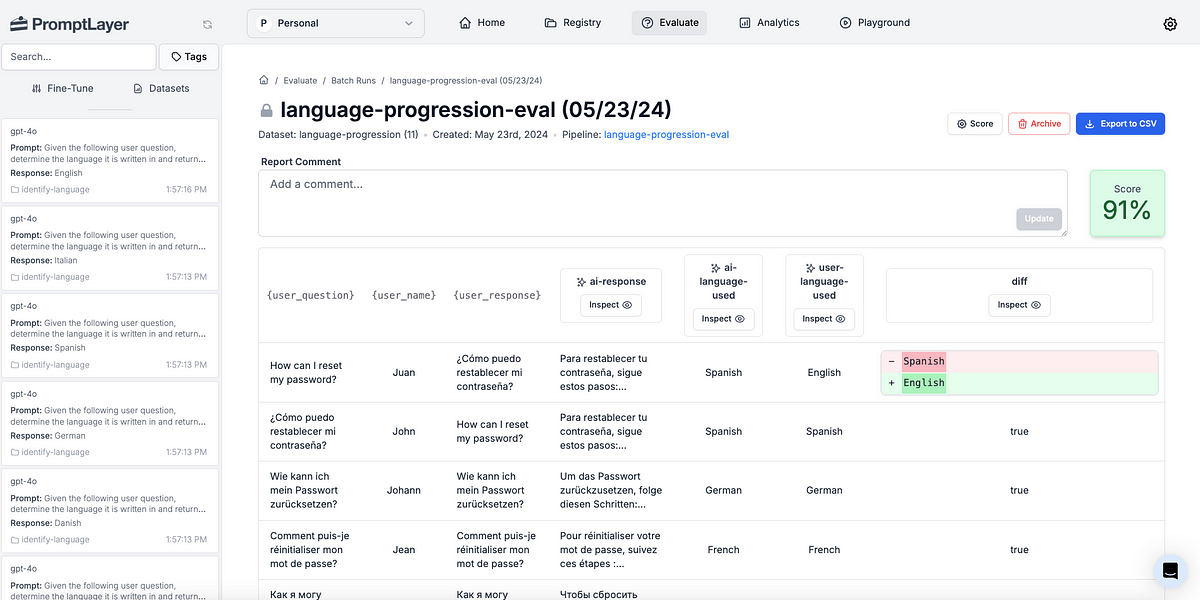

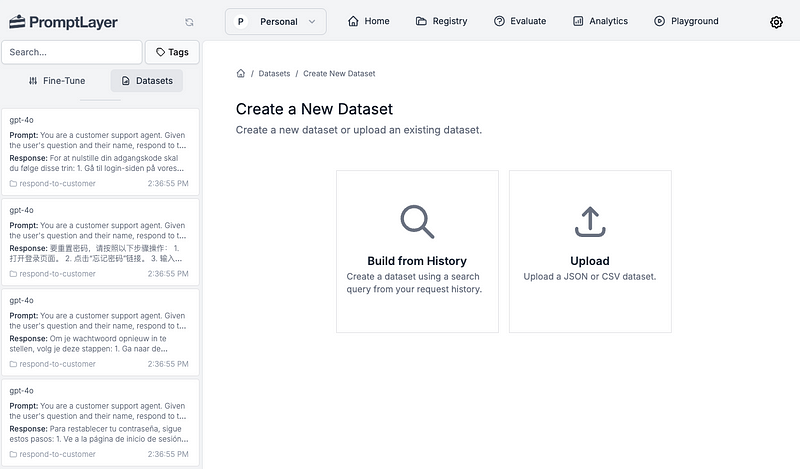
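The triage step described above can be sketched in a few lines of Python. This is a minimal illustration only: the `Feedback` record, its `issue` tag, and the tag names are hypothetical stand-ins, not Gorgias' or PromptLayer's actual data model.

```python
from collections import Counter
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Feedback:
    """One merchant rating on an AI Agent conversation (hypothetical shape)."""
    conversation_id: str
    thumbs_up: bool
    issue: Optional[str] = None  # hypothetical tag a prompt engineer adds in review


def negative_feedback(records: List[Feedback]) -> List[Feedback]:
    """Keep only the conversations that received a thumbs-down."""
    return [r for r in records if not r.thumbs_up]


def issue_counts(records: List[Feedback]) -> Counter:
    """Count thumbs-down conversations per issue tag to surface recurring edge cases."""
    return Counter(r.issue or "untagged" for r in negative_feedback(records))


records = [
    Feedback("c1", True),
    Feedback("c2", False, "overpromising"),
    Feedback("c3", False, "wrong_language"),
    Feedback("c4", False, "overpromising"),
    Feedback("c5", False),  # not reviewed yet
]

# Most frequent issues first, so the biggest edge cases are handled first.
for issue, count in issue_counts(records).most_common():
    print(issue, count)
```

Sorting by frequency is one possible prioritization; a team might equally weight issues by severity.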

Create a Dataset & Evaluation Pipeline

Once the edge cases are identified, the next step is to create a dataset in PromptLayer using the historical conversations that received negative feedback. We will call this a progression dataset because it is used to improve the prompt. The prompt engineers manually label the dataset with the correct ground-truth responses for each conversation. They then configure an evaluation pipeline within PromptLayer that compares the outputs generated by the prompts to the ground-truth responses.
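As a rough sketch of what such an evaluation computes, the Python below scores a prompt against a hand-labeled progression dataset. Everything here is an assumption for illustration: the `Example` type, the action-style labels, the `stub_model` function standing in for a real LLM call, and the exact-match comparison (a real pipeline might use fuzzy or model-graded comparison instead). It does not use the PromptLayer API.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Example:
    conversation: str
    expected: str  # ground-truth label written by a prompt engineer


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial differences do not fail a case."""
    return " ".join(text.lower().split())


def evaluate(generate: Callable[[str], str], dataset: List[Example]) -> float:
    """Return the fraction of examples where the output matches the ground truth."""
    if not dataset:
        raise ValueError("dataset must not be empty")
    passed = sum(
        normalize(generate(ex.conversation)) == normalize(ex.expected)
        for ex in dataset
    )
    return passed / len(dataset)


# Conversations that received negative feedback, labeled with the correct action.
progression_dataset = [
    Example("Where is my refund? I was told it was sent.", "handover"),
    Example("Thanks, that solved it!", "close"),
    Example("I want to speak to a person.", "handover"),
]


def stub_model(conversation: str) -> str:
    """Stand-in for the prompt under test; a real version would call an LLM."""
    return "Handover" if "refund" in conversation.lower() else "close"


score = evaluate(stub_model, progression_dataset)
print(f"pass rate: {score:.2f}")
```

The third example fails with this stub, which is the kind of gap the next step is meant to close.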

Iterate on Prompt, Pass Eval

With the evaluation pipeline set up, the prompt engineers can now iterate on the prompt by editing the system message, trying different models, or tweaking other parameters. This is where the real prompt engineering happens. The engineers continue iterating until the evaluation passes and the prompt is generating satisfactory outputs for the edge cases.

Doing this without PromptLayer would be painful. With it, the feedback loop of editing a prompt and evaluating its performance is lightning fast, allowing for more iterations and better-quality outputs.
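The iterate-until-pass loop can be pictured as follows. This is a sketch under stated assumptions: `PromptVersion`, the 0.9 threshold, and `stub_eval` are hypothetical, and in practice the score would come from running the evaluation pipeline rather than from a hard-coded function.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Sequence, Tuple


@dataclass(frozen=True)
class PromptVersion:
    """One candidate: the knobs mentioned above (system message, model, parameters)."""
    system_message: str
    model: str
    temperature: float = 0.0


def first_passing(
    candidates: Sequence[PromptVersion],
    run_eval: Callable[[PromptVersion], float],
    threshold: float = 0.9,  # assumed pass bar, not a figure from the case study
) -> Tuple[Optional[PromptVersion], List[Tuple[PromptVersion, float]]]:
    """Evaluate candidates in order; stop at the first whose score meets the threshold."""
    history: List[Tuple[PromptVersion, float]] = []
    for candidate in candidates:
        score = run_eval(candidate)
        history.append((candidate, score))
        if score >= threshold:
            return candidate, history
    return None, history


def stub_eval(version: PromptVersion) -> float:
    """Stand-in scorer: pretends an explicit handover instruction fixes the edge cases."""
    score = 0.6
    if "hand over" in version.system_message.lower():
        score += 0.35
    return score


candidates = [
    PromptVersion("You are a support agent.", "model-a"),
    PromptVersion("You are a support agent. Hand over to a human when unsure.", "model-a"),
    PromptVersion("You are a support agent. Hand over to a human when unsure.", "model-b"),
]

winner, history = first_passing(candidates, stub_eval)
print(winner)
print(len(history), "candidates evaluated")
```

Keeping the full `history` makes it easy to see which edits moved the score and which did not.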

Ensure No Regression with Backtesting

Before deploying the updated prompt, it’s crucial to ensure that the changes have not introduced any regressions. To do this, the engineers run the updated prompt on a second “non-regression” evaluation dataset. This dataset consists of historical conversations that received 👍 feedback, so their responses can be treated as ground truth. The non-regression dataset is frequently updated by pulling from recent request logs in PromptLayer.

Running the evaluation on this dataset helps ensure that the prompt is not overfitting to the specific edge cases and is still performing well on the types of conversations it was already handling correctly.
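A backtest gate of this kind might look like the sketch below. The `LoggedRequest` shape, the exact-match check, the 100% pass bar, and the stub prompt functions are all assumptions made for illustration; the logs are assumed to be in chronological order, and nothing here reflects the actual PromptLayer log format or API.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class LoggedRequest:
    conversation: str
    response: str
    thumbs_up: Optional[bool]  # None when the merchant left no feedback


def build_non_regression_set(logs: List[LoggedRequest], limit: int) -> List[LoggedRequest]:
    """Take the most recent thumbs-up logs; their responses serve as ground truth."""
    if limit <= 0:
        raise ValueError("limit must be positive")
    liked = [log for log in logs if log.thumbs_up is True]
    return liked[-limit:]


def passes_backtest(
    generate: Callable[[str], str],
    dataset: List[LoggedRequest],
    min_pass_rate: float = 1.0,  # assumed bar: no previously good answer may change
) -> bool:
    """Check that the updated prompt still reproduces the approved responses."""
    if not dataset:
        raise ValueError("dataset must not be empty")
    matches = sum(generate(log.conversation) == log.response for log in dataset)
    return matches / len(dataset) >= min_pass_rate


logs = [
    LoggedRequest("Where is my order?", "tracking_link", True),
    LoggedRequest("Cancel my order", "close", False),
    LoggedRequest("Do you ship to Canada?", "shipping_policy", True),
    LoggedRequest("Hi", "greeting", None),
]


def updated_prompt(conversation: str) -> str:
    """Stand-in for the updated prompt; a real version would call an LLM."""
    return "tracking_link" if "order" in conversation.lower() else "shipping_policy"


non_regression = build_non_regression_set(logs, limit=50)
print("safe to deploy:", passes_backtest(updated_prompt, non_regression))
```

Rebuilding the set from recent logs on each run, as the text describes, keeps the backtest aligned with the conversations AI Agent currently sees.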

By following this iterative prompt engineering loop, the Gorgias team can systematically identify and fix issues, while ensuring the overall quality and performance of AI Agent remains high as it’s scaled up to handle more customer conversations.

Modular Prompt Engineering

Gorgias uses a modular prompt architecture to make their system more testable and achieve better results. They split the process into different agents, with each logical block representing a specific task or part of their finite state machine, such as summarizing, filtering, or QA. This modular architecture allows the Gorgias team to evaluate each prompt individually, enabling easier iteration and unit testing.
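To make the idea concrete, here is a small sketch of a pipeline built as a finite state machine in which each state wraps one prompt. The state names follow the examples in the text, but what each step does, the transitions between them, and the string-based stand-ins for LLM calls are assumptions, not Gorgias' actual design.

```python
from typing import Callable, Dict, Tuple

Context = Dict[str, str]
Step = Callable[[Context], Tuple[Context, str]]  # returns (new context, next state)


def summarize(ctx: Context) -> Tuple[Context, str]:
    """Stand-in for a summarizing prompt: keep the first sentence."""
    summary = ctx["message"].split(".")[0].strip()
    return {**ctx, "summary": summary}, "filter"


def filter_step(ctx: Context) -> Tuple[Context, str]:
    """Stand-in for a filtering prompt: route sensitive topics to a human."""
    next_state = "handover" if "refund" in ctx["summary"].lower() else "qa"
    return ctx, next_state


def qa(ctx: Context) -> Tuple[Context, str]:
    """Stand-in for a QA prompt: a final check before the conversation is closed."""
    passed = bool(ctx["summary"])
    return {**ctx, "qa": "passed" if passed else "failed"}, "done" if passed else "handover"


STEPS: Dict[str, Step] = {"summarize": summarize, "filter": filter_step, "qa": qa}
TERMINAL = {"done", "handover"}


def run(ctx: Context, state: str = "summarize", max_steps: int = 10) -> Tuple[Context, str]:
    """Drive the state machine until it reaches a terminal state."""
    for _ in range(max_steps):
        if state in TERMINAL:
            return ctx, state
        ctx, state = STEPS[state](ctx)
    if state in TERMINAL:
        return ctx, state
    raise RuntimeError("state machine did not terminate")


final_ctx, final_state = run({"message": "Where is my order. Thanks"})
print(final_state, final_ctx["summary"])
```

Because every step is a plain function from context to context, each one can be tested in isolation, for example by calling `filter_step({"summary": "Refund please"})` without running the rest of the pipeline.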

Challenges

Prompt engineering is hard and time-consuming, with the AI Agent team spending about 20% of their time creating new prompts and 80% of their time iterating and improving existing ones, largely through evaluations.

As AI Agent rolls out to more users, an increasing number of edge cases are discovered that need systematic fixes. Updating prompts without introducing regressions at this scale is challenging. As Victor Duprez, the Director of Engineering, notes, “without PromptLayer I’m sure this would be a mess.”

Solution

Gorgias uses PromptLayer to store and version control prompts, enabling them to rapidly deploy prompt updates, run evaluations on regression and backtest datasets, review logs to identify issues, and build new datasets for targeted improvements. PromptLayer gives the Gorgias team confidence to do prompt engineering at scale.

Results

“We iterate on prompts 10s of times every single day. It would be impossible to do this in a SAFE way without PromptLayer.”

Victor Duprez (Director of Engineering at Gorgias)

- 10-person team made 1,000+ prompt iterations, 500 evaluation reports, and 221 datasets over 5 months

- AI Agent now successfully handling 20% of all email support conversations

- Built independent prompt engineering team to process feedback, update prompts, maintain datasets, and run evaluations

- Developed repeatable process to safely & rapidly develop and launch new AI features

- Providing observability for company executives into the “AI black box”

By making prompt engineering a systematic, measurable, and scalable process, Gorgias is turning AI Agent into a key driver of the business. PromptLayer empowers a growing team of prompt engineers to work autonomously to expand automation across customer support.

PromptLayer is the most popular platform for prompt engineering, management, and evaluation. Teams use PromptLayer to build AI applications with domain knowledge.
