Edge AI Implementations: A Practical Guide

With a reported 97% of CIOs now putting Edge AI on their roadmaps, intelligence is moving from cloud data centers to the devices around us. From factory floors, where split-second decisions prevent costly downtime, to autonomous vehicles navigating busy streets, artificial intelligence is migrating to where data originates. This shift changes where we process information and how quickly we can act on it.

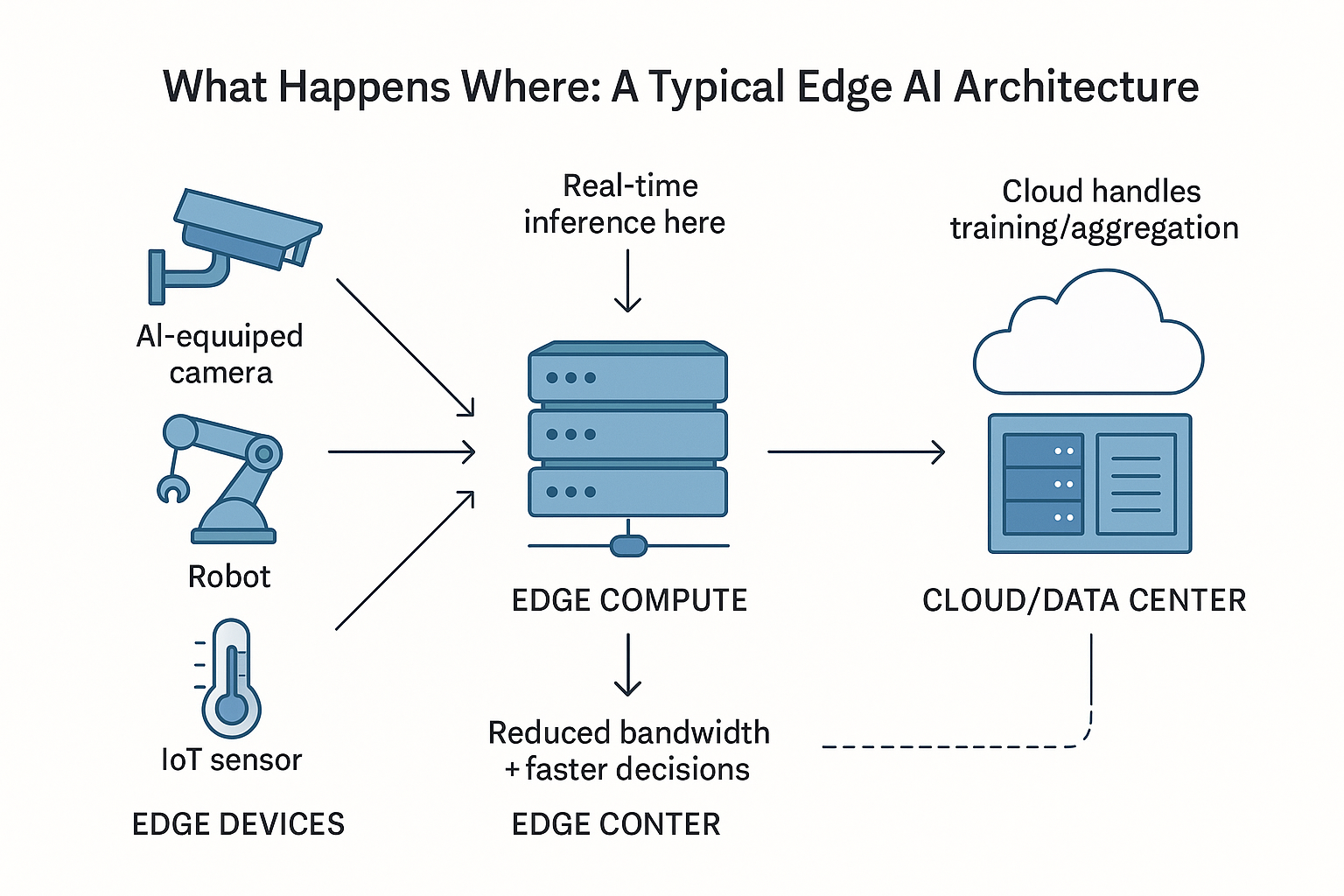

Edge AI processes data locally, on devices such as cameras, sensors, and machinery, rather than sending everything to remote servers. This proximity to data sources enables millisecond-scale decisions that a round trip to a distant server cannot reliably guarantee, which matters for critical applications where every millisecond counts. Whether it's a healthcare monitor detecting cardiac anomalies or a manufacturing robot avoiding collisions, Edge AI is redefining what's possible at the periphery of our networks.

## What Edge AI Actually Is

Edge AI changes where artificial intelligence runs. At its core, it is AI inference performed "at the edge": on local devices rather than in distant data centers. Unlike traditional cloud-based AI, which requires constant connectivity and introduces network latency, Edge AI processes data in situ and sends only insights upstream rather than raw data streams.

This distinction is crucial. When a security camera analyzes video footage for anomalies, it doesn't need to stream high-definition video to the cloud 24/7. Instead, it processes frames locally and transmits only relevant alerts or metadata. This approach dramatically reduces bandwidth requirements while enabling real-time responses.
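The camera example can be sketched as a local filter that keeps frames on the device and emits only small JSON alerts. This is a minimal illustration under stated assumptions: frames arrive as flattened lists of grayscale values in [0, 1], and `anomaly_score` (mean absolute pixel change between consecutive frames) is a hypothetical stand-in for a real on-device model. The camera ID, threshold, and alert fields are illustrative choices, not any product's format.

```python
import json
from typing import Iterable, Iterator, List, Optional

Frame = List[float]  # flattened grayscale pixels in [0, 1]; stand-in for real video frames


def anomaly_score(prev: Frame, curr: Frame) -> float:
    """Mean absolute pixel change (hypothetical stand-in for an on-device model)."""
    return sum(abs(a - b) for a, b in zip(prev, curr)) / len(curr)


def edge_filter(frames: Iterable[Frame], camera_id: str,
                threshold: float = 0.2) -> Iterator[str]:
    """Analyze frames locally and yield compact JSON alerts instead of raw frames."""
    prev: Optional[Frame] = None
    for index, frame in enumerate(frames):
        if prev is not None:
            score = anomaly_score(prev, frame)
            if score > threshold:
                # Only this small metadata record would be sent upstream.
                yield json.dumps({"camera": camera_id, "frame": index,
                                  "score": round(score, 3)})
        prev = frame  # the raw frame never leaves this loop


if __name__ == "__main__":
    frames = [[0.0] * 4, [0.0] * 4, [1.0] * 4, [1.0] * 4]  # a sudden change at frame 2
    for alert in edge_filter(frames, "cam-01"):
        print(alert)
```

Only the alert strings would cross the network; a static scene produces no upstream traffic at all.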

The evolution toward Edge AI didn't happen overnight. It builds on decades of distributed computing innovation:

- 1990s: Content Delivery Networks like Akamai pioneered placing servers near users to cache web content

- 2000s: Cloud Computing centralized compute power but revealed limitations in latency, bandwidth, and privacy

- 2010s: Fog Computing introduced intermediate processing nodes between cloud and devices

- Mid-2010s to early 2020s: Multi-access (originally Mobile) Edge Computing placed servers at or near cellular base stations for ultra-low latency

- Today: On-Device AI enables truly autonomous operation with models running entirely offline

Modern Edge AI is the culmination of this journey: distributed intelligence that operates next to data sources, dramatically lowering latency and bandwidth needs while preserving privacy.

## The Hardware Making It Possible

The Edge AI revolution wouldn't be possible without specialized hardware designed for efficiency. Traditional CPUs generally can't deliver the performance per watt that edge deployment demands. Instead, a new generation of accelerators has emerged:

Application-Specific Integrated Circuits (ASICs) such as Google's Edge TPU deliver high throughput and energy efficiency for fixed workloads. Such chips can achieve orders of magnitude better performance per watt than general-purpose processors, which is critical when every milliwatt matters.

NVIDIA's Jetson platform, including the recently announced Jetson AGX Thor, offers up to 7.5× the AI compute of the previous-generation Jetson AGX Orin, according to NVIDIA, while remaining power-efficient enough for robotics and embedded systems. These GPU-based solutions excel at parallel processing tasks like computer vision.

Neuromorphic chips are perhaps the most intriguing development. Intel's Loihi and IBM's TrueNorth use spiking neurons, loosely modeled on biological ones, that compute only when input spikes arrive. This event-driven architecture achieves extraordinary energy efficiency because it does work only when something happens rather than constantly polling sensors. For always-on applications like anomaly detection or adaptive control, neuromorphic processors can reduce power consumption by as much as 1000× compared to conventional approaches on suitable workloads.
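The event-driven idea can be illustrated with a leaky integrate-and-fire (LIF) neuron, a common spiking-neuron model. This Python sketch models only the concept; Loihi and TrueNorth implement their own neuron variants in silicon, and the time constant, threshold, and input weights here are hypothetical. State is updated only when an input spike arrives, and the leak accumulated since the previous event is applied in closed form, so an idle neuron performs no work.

```python
import math
from typing import List, Tuple


def lif_event_driven(events: List[Tuple[float, float]], tau_ms: float = 20.0,
                     threshold: float = 1.0) -> Tuple[List[float], int]:
    """Event-driven leaky integrate-and-fire neuron.

    events: (time_ms, weight) input spikes, sorted by time.
    Returns (output spike times, number of state updates performed).
    """
    v = 0.0          # membrane potential
    last_t = 0.0     # time of the previous update
    out_spikes: List[float] = []
    updates = 0
    for t, w in events:
        # Apply the exponential leak for the whole idle interval at once,
        # only now that an event has arrived (no per-tick polling).
        v *= math.exp(-(t - last_t) / tau_ms)
        v += w
        updates += 1
        last_t = t
        if v >= threshold:
            out_spikes.append(t)
            v = 0.0  # reset after firing
    return out_spikes, updates


if __name__ == "__main__":
    spikes, n_updates = lif_event_driven([(1.0, 0.6), (2.0, 0.6), (100.0, 0.6)])
    print(spikes, n_updates)
```

With inputs at 1 ms, 2 ms, and 100 ms, the neuron fires once (at 2 ms) after only three updates, whereas a clock-driven simulation stepping every millisecond would perform about 100 updates over the same window.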

The key metric driving adoption is TOPS/W (tera-operations per second per watt), which reveals the dramatic efficiency gains:

- Traditional CPUs: ~0.1 TOPS/W

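- Specialized edge accelerators: roughly 2 to 10+ TOPS/W for commercial parts (Google's Edge TPU is rated at 4 TOPS using about 2 W), with some research designs reporting 100+ TOPS/W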

- Impact: This efficiency gain makes battery-powered AI devices practical for the first time
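To see why TOPS/W matters for battery life, note that 1 TOPS/W equals 10^12 operations per joule, so the energy per inference is the operation count divided by that figure. The sketch below assumes a hypothetical model needing 10^9 operations per inference and a hypothetical 10 Wh battery, and it uses ideal peak efficiency; real chips run below peak and also spend energy on memory, sensors, and idle time.

```python
def energy_per_inference_joules(ops_per_inference: float, tops_per_watt: float) -> float:
    """Ideal energy per inference: 1 TOPS/W == 1e12 operations per joule."""
    return ops_per_inference / (tops_per_watt * 1e12)


def inferences_per_battery(battery_wh: float, ops_per_inference: float,
                           tops_per_watt: float) -> float:
    """How many inferences one full charge could power (compute energy only)."""
    battery_joules = battery_wh * 3600.0  # 1 Wh = 3600 J
    return battery_joules / energy_per_inference_joules(ops_per_inference, tops_per_watt)


if __name__ == "__main__":
    MODEL_OPS = 1e9    # hypothetical model: 1 giga-operation per inference
    BATTERY_WH = 10.0  # hypothetical 10 Wh battery
    for label, eff in [("CPU (~0.1 TOPS/W)", 0.1), ("accelerator (10 TOPS/W)", 10.0)]:
        e = energy_per_inference_joules(MODEL_OPS, eff)
        n = inferences_per_battery(BATTERY_WH, MODEL_OPS, eff)
        print(f"{label}: {e * 1e3:.3f} mJ/inference, ~{n:,.0f} inferences per charge")
```

Under these assumptions the CPU spends about 10 mJ per inference (roughly 3.6 million inferences per charge), while a 10 TOPS/W accelerator spends about 0.1 mJ (roughly 360 million).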

## Why Companies Are Racing to Deploy

The business case for Edge AI extends far beyond technical advantages. Organizations report concrete benefits that directly impact their bottom line:

- Ultra-low latency enables applications that are impractical with cloud-based AI. When a manufacturing robot detects an obstruction, it needs to stop within milliseconds, while a round trip to a cloud data center can add tens to hundreds of milliseconds of network delay. For safety-critical systems, that difference can prevent injuries or save lives.

- Bandwidth savings are equally compelling. A single 4K security camera generates approximately 25 Mbps of compressed video. For a facility with 100 cameras, that's 2.5 Gbps of continuous upstream bandwidth, which is prohibitively expensive to ship to the cloud for processing. Edge AI reduces this to kilobits per second of metadata plus occasional alert clips, cutting bandwidth requirements by 99% or more.

- Privacy advantages address both regulatory requirements and customer concerns. When facial recognition happens on-device rather than in the cloud, sensitive biometric data never leaves the premises. This helps organizations comply with GDPR, HIPAA, and other privacy regulations while building customer trust.

- Resilience provides operational continuity. Edge AI systems continue functioning during network outages, critical for remote oil rigs, warehouses with spotty connectivity, or retail stores during internet disruptions. Cached models and local processing ensure business operations don't grind to a halt when connectivity fails.
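The latency and bandwidth points above reduce to simple arithmetic. The 25 Mbps per camera and the 100-camera facility come from the text; the other figures are hypothetical assumptions for illustration: a robot moving at 2 m/s, a 100 ms cloud round trip versus 5 ms of local inference, and about 50 kbps of metadata per camera after edge filtering.

```python
def extra_travel_m(speed_m_per_s: float, decision_latency_s: float) -> float:
    """Distance a machine keeps moving before a stop decision is even made."""
    return speed_m_per_s * decision_latency_s


def aggregate_uplink_mbps(cameras: int, mbps_per_camera: float) -> float:
    """Total continuous upstream bandwidth for a fleet of cameras."""
    return cameras * mbps_per_camera


def bandwidth_reduction_pct(raw_mbps: float, edge_mbps: float) -> float:
    """Percentage of upstream bandwidth saved by sending only edge output."""
    return 100.0 * (1.0 - edge_mbps / raw_mbps)


if __name__ == "__main__":
    # Hypothetical decision latencies: cloud round trip vs. on-device inference.
    for label, latency_s in [("cloud (100 ms)", 0.100), ("edge (5 ms)", 0.005)]:
        cm = extra_travel_m(2.0, latency_s) * 100
        print(f"{label}: robot travels {cm:.1f} cm before deciding to stop")

    raw = aggregate_uplink_mbps(100, 25.0)   # 100 cameras x 25 Mbps of video
    edge = aggregate_uplink_mbps(100, 0.05)  # hypothetical 50 kbps of metadata each
    print(f"raw: {raw / 1000:.1f} Gbps, edge: {edge:.1f} Mbps, "
          f"saved: {bandwidth_reduction_pct(raw, edge):.1f}%")
```

Under these assumptions the cloud path lets the robot travel about 20 cm before it can even decide to stop, versus about 1 cm locally, and the facility's uplink drops from 2.5 Gbps to about 5 Mbps, a 99.8% reduction.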

## Emerging Innovations

The Edge AI landscape continues to evolve rapidly, with several innovations poised to expand capabilities dramatically.

TinyML brings neural networks to microcontrollers with only kilobytes of memory. Frameworks such as TensorFlow Lite Micro enable AI on battery-powered sensors and wearables. Even language understanding is moving to the edge, with embedded voice assistants processing commands locally rather than in the cloud.
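Fitting a network into kilobytes usually depends on shrinking its weights. A common technique (not detailed in the text above) is post-training quantization from 32-bit floats to 8-bit integers using the affine mapping real ≈ (q - zero_point) × scale. The sketch below is a generic illustration of that mapping, not TensorFlow Lite Micro's converter, which applies its own, more detailed scheme; the 50,000-parameter model size is hypothetical.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float, int]:
    """Affine (asymmetric) post-training quantization of float weights to int8."""
    w_min, w_max = min(min(weights), 0.0), max(max(weights), 0.0)  # keep 0.0 in range
    scale = (w_max - w_min) / 255.0 or 1.0  # 256 int8 levels; guard all-zero input
    zero_point = round(-128 - w_min / scale)  # int8 code that represents real 0.0
    q = [max(-128, min(127, round(w / scale) + zero_point)) for w in weights]
    return q, scale, zero_point


def dequantize(q: List[int], scale: float, zero_point: int) -> List[float]:
    """Recover approximate float weights: real = (q - zero_point) * scale."""
    return [(v - zero_point) * scale for v in q]


def footprint_kb(num_params: int, bytes_per_param: int) -> float:
    """Weight storage in kilobytes (1 KB = 1024 bytes)."""
    return num_params * bytes_per_param / 1024.0


if __name__ == "__main__":
    weights = [-1.0, -0.25, 0.0, 0.5, 1.0]
    q, scale, zp = quantize_int8(weights)
    restored = dequantize(q, scale, zp)
    print("int8 codes:", q)
    print("max abs error:", max(abs(a - b) for a, b in zip(weights, restored)))
    # Hypothetical 50,000-parameter model: float32 (4 bytes) vs int8 (1 byte).
    print(f"float32: {footprint_kb(50_000, 4):.1f} KB, int8: {footprint_kb(50_000, 1):.1f} KB")
```

For the hypothetical 50,000-parameter model, weight storage drops from about 195 KB to about 49 KB, with each weight off by at most about one quantization step.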

5G integration creates new possibilities for edge-cloud hybrid systems. Ultra-low-latency networks enable edge servers co-located with 5G base stations, providing cloud-like capabilities with edge-like responsiveness. Network slicing allows dedicated bandwidth for critical edge AI applications.

Federated learning addresses privacy concerns while enabling continuous improvement. Multiple edge devices collaboratively train shared models by exchanging model updates instead of raw data. A network of medical devices, for instance, can improve diagnostic accuracy without raw patient data leaving individual hospitals.
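One widely used way to combine those updates is federated averaging (FedAvg), in which a coordinator averages each client's parameters weighted by its local dataset size. The text above does not name a specific algorithm, so treat this as one illustrative choice. The sketch shows only the server-side aggregation step, with models represented as hypothetical dictionaries of parameter lists and two hypothetical hospitals as clients.

```python
from typing import Dict, List

Weights = Dict[str, List[float]]  # parameter name -> flattened values


def federated_average(client_weights: List[Weights], client_sizes: List[int]) -> Weights:
    """FedAvg aggregation: average client parameters weighted by local dataset size."""
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need at least one client and one dataset size per client")
    total = sum(client_sizes)
    averaged: Weights = {}
    for name in client_weights[0]:
        length = len(client_weights[0][name])
        averaged[name] = [
            sum(w[name][i] * n for w, n in zip(client_weights, client_sizes)) / total
            for i in range(length)
        ]
    return averaged


if __name__ == "__main__":
    # Hypothetical hospitals: only parameters and sample counts are shared, never records.
    hospital_a = {"layer1": [1.0, 2.0]}
    hospital_b = {"layer1": [3.0, 4.0]}
    print(federated_average([hospital_a, hospital_b], [100, 300]))
```

Here the result is `{'layer1': [2.5, 3.5]}`: the hospital with three times as much data pulls the shared model three times as hard.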

Neuromorphic computing promises another leap in efficiency. Brain-inspired processors and event-based vision sensors process information only when changes occur. These systems can deliver always-on perception at power levels suitable for battery operation, potentially enabling entirely new categories of autonomous edge devices.
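Event-based vision sensors (also called dynamic vision sensors) report a pixel only when its log brightness changes by more than a contrast threshold. Real sensors do this asynchronously in per-pixel circuitry; the sketch below merely simulates that behavior from a sequence of frames, with a hypothetical threshold and tiny made-up frames, to show why a static scene produces no data at all.

```python
import math
from typing import List, Tuple

Event = Tuple[int, int, int]  # (timestep, pixel index, polarity: +1 brighter, -1 darker)


def dvs_events(frames: List[List[float]], threshold: float = 0.2) -> List[Event]:
    """Emit per-pixel events when log intensity changes by at least `threshold`."""
    eps = 1e-6  # avoid log(0) for black pixels
    reference = [math.log(p + eps) for p in frames[0]]  # last reported level per pixel
    events: List[Event] = []
    for t, frame in enumerate(frames[1:], start=1):
        for i, p in enumerate(frame):
            level = math.log(p + eps)
            delta = level - reference[i]
            if abs(delta) >= threshold:
                events.append((t, i, 1 if delta > 0 else -1))
                reference[i] = level  # reset this pixel's reference after an event
    return events


if __name__ == "__main__":
    frames = [
        [0.5, 0.5, 0.5],
        [0.5, 0.5, 0.5],   # static scene: no events
        [0.5, 1.0, 0.5],   # pixel 1 brightens
        [0.5, 1.0, 0.25],  # pixel 2 darkens
    ]
    print(dvs_events(frames))
```

Only the two changing pixels generate output, `[(2, 1, 1), (3, 2, -1)]`; unchanged pixels cost nothing downstream.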

## Intelligence at the Edge

For organizations beginning their Edge AI journey, the path forward requires careful consideration of use cases, hardware selection, and deployment strategies.