Drag-and-Drop LLMs: Zero-Shot Prompt-to-Weights Synthesis

What if you could customize an AI model in seconds, just by describing what you want it to do? This is the revolutionary promise of Drag-and-Drop LLMs, a breakthrough approach that transforms how we adapt language models for specific tasks.

Traditional model fine-tuning through methods like LoRA requires hours of training on expensive GPUs for each new task. DnD changes the game entirely by introducing instant adapter generation: simply drop in prompts describing your task, and get specialized model weights immediately, no training required. This innovation delivers results 12,000× faster than conventional methods, potentially democratizing AI customization for developers everywhere.

The Problem with Current Fine-Tuning

Today's parameter-efficient fine-tuning methods have a fundamental bottleneck: every new task requires a separate training run. Consider the reality of adapting even a modest 0.5B-parameter model using LoRA: it typically occupies four A100 GPUs for half a day. Scale that to multiple tasks or larger models, and the computational burden becomes overwhelming.

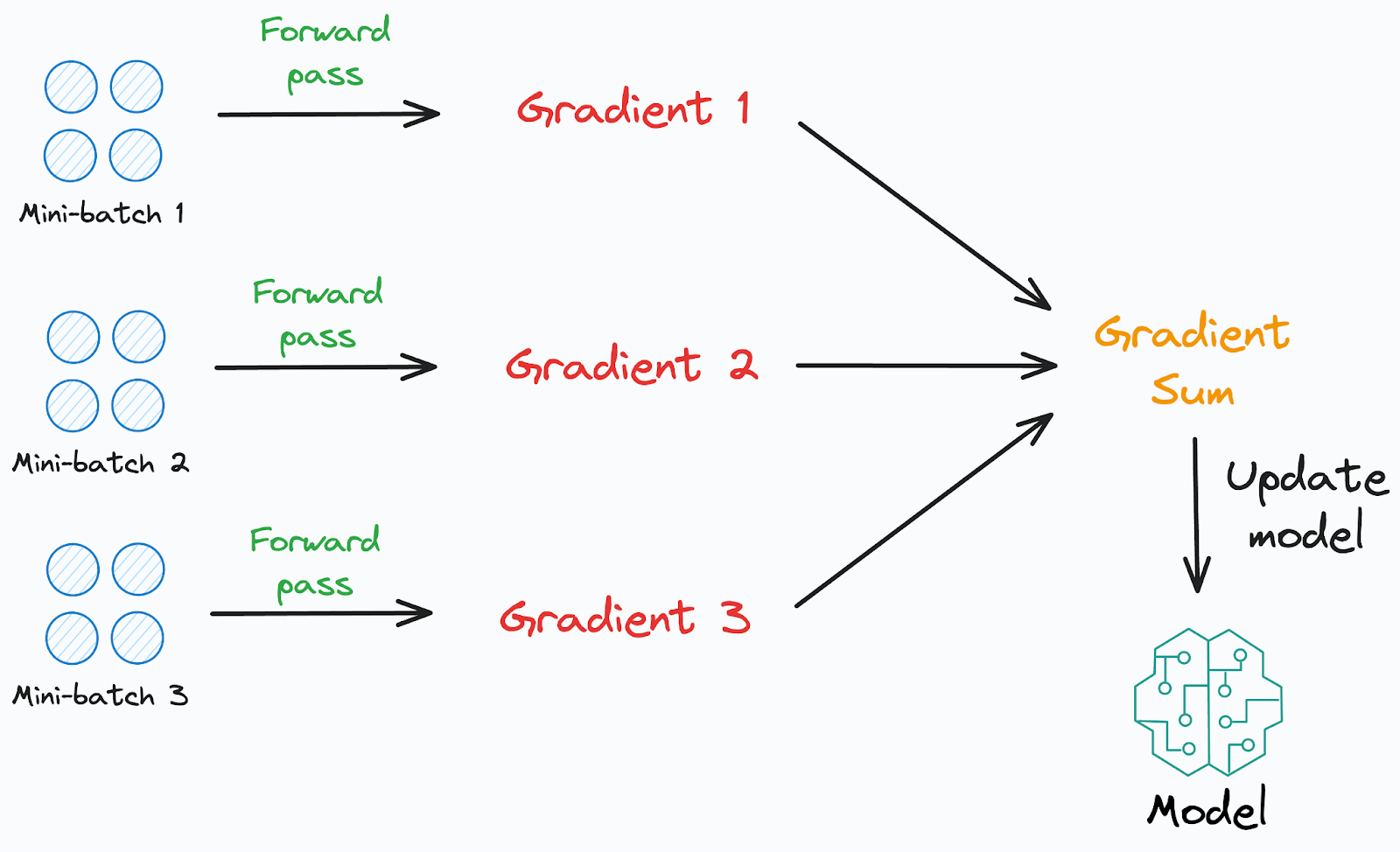

This traditional approach creates significant friction for rapid prototyping and deployment. Teams must collect labeled data, configure training pipelines, and wait hours or days for results. The rigid "data → gradient → weights" loop means that experimenting with different task variations becomes prohibitively expensive, both in time and compute resources.

For organizations needing to deploy specialized models across multiple domains or quickly iterate on model behavior, this bottleneck severely limits innovation and responsiveness to changing requirements.

How Drag-and-Drop Works

DnD fundamentally reimagines the adaptation process by mapping prompts directly to model weights, completely bypassing the traditional training loop. Think of it as teaching a network to become a "weight generator" that instantly produces the right parameters for any task based solely on example prompts.

The system operates through a clever two-stage training process:

- Stage 1: Building the Knowledge Base, Researchers collect prompt-weight pairs from diverse tasks by fine-tuning LoRA adapters conventionally across various domains like reasoning, coding, and mathematics. They save both the resulting weights and the unlabeled prompts used for each task, creating a rich dataset that links task descriptions to their optimal parameters.

- Stage 2: Training the Generator, The system trains a specialized generator network to predict weights from prompts. The architecture combines a frozen text encoder (like Sentence-BERT) that converts batches of prompts into condition embeddings, with a hyper-convolutional decoder that expands these embeddings into complete LoRA matrices for every layer of the target model.

- Deployment, The process is elegantly simple: feed in prompts describing your task, and in a single forward pass, receive a ready-to-use LoRA adapter. No gradients, no optimization loops, just instant specialization.

Performance Breakthrough

The numbers speak for themselves. DnD generates a complete LoRA adapter for a 7B-parameter model in under one second, a task that would traditionally take hours. But speed isn't achieved at the expense of quality.

On zero-shot benchmarks spanning common-sense reasoning, mathematics, coding, and vision-language tasks, DnD delivers a remarkable +30% improvement over traditional LoRA methods. Even more impressively, these generated adapters often outperform task-trained adapters that had access to full datasets and gradient optimization.

The system demonstrates robust scaling across model sizes from 0.5B to 7B parameters, maintaining its performance advantages throughout. Perhaps most remarkably, DnD achieves all this while requiring zero labels, only unlabeled prompts describing the task. This gives it a unique advantage over both traditional fine-tuning and few-shot in-context learning approaches.

Practical Applications

The ability to instantly generate specialized model weights opens up transformative use cases across the AI landscape:

- On-the-fly Personas: Transform a chatbot's personality and expertise in seconds by supplying style prompts like "You are a friendly fashion advisor" or "You are a technical support specialist." No retraining needed, just describe the desired behavior and watch the model adapt instantly.

- Rapid Prototyping: Generate dozens of specialized adapters by simply varying instruction prompts. Teams can explore different task formulations, test hypotheses, and iterate on model behavior at unprecedented speed, turning what used to be week-long experiments into afternoon brainstorming sessions.

- Multi-tenant Systems: Deploy custom LLMs for each user domain by feeding their representative queries into DnD. A single base model can serve hundreds of specialized use cases, with each tenant receiving a model tailored to their specific needs, all generated on demand from their own data.

Low-resource Domains: Adapt models to niche fields like legal analysis, medical diagnostics, or scientific research by mining just a handful of unlabeled examples from the domain. This bypasses the traditional requirement for large annotated datasets, making specialized AI accessible to fields where labeled data is scarce or expensive.

Democratizing AI Specialization

Drag-and-Drop LLMs represent a leap forward in how we think about model customization. By treating network weights as a data modality that can be directly generated from task descriptions, DnD eliminates the per-task training overhead that has long bottlenecked AI deployment while simultaneously improving performance.

This breakthrough joins a growing movement in the field, with related work like Text-to-LoRA and ModelGPT confirming the viability and importance of prompt-to-weight approaches. The community's enthusiastic response, including DnD's featuring among top ML papers on platforms like Hugging Face, signals strong momentum behind this new paradigm.

Looking forward, the concept of "weights as data" opens extraordinary possibilities for instant model customization. As these techniques mature and scale, we may be approaching an era where adapting AI to new tasks becomes as simple as describing what we want, making specialized AI truly accessible to everyone.