Comparing Tool Calling in LLM Models

The Importance of Tool Calling

Tool calling has become a powerful asset for many LLM applications. Tools let Prompt Engineers build applications in which the model performs actions that an LLM cannot readily do on its own.

For example, if you are building an LLM application that converts natural language queries to SQL queries, a tool call is a convenient way to let the LLM request that the generated SQL query actually be run and receive the result.
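As a rough illustration of the SQL case, the sketch below defines a tool in the OpenAI-style function format and runs a simulated tool call against a toy in-memory SQLite database. The tool name `run_sql`, the `customers` table, and the simulated arguments are all assumptions made up for this example, and no real model is called.

```python
import json
import sqlite3

# Hypothetical tool definition in the OpenAI-style "function" format.
RUN_SQL_TOOL = {
    "type": "function",
    "function": {
        "name": "run_sql",
        "description": "Run a read-only SQL query and return the rows.",
        "parameters": {
            "type": "object",
            "properties": {"query": {"type": "string"}},
            "required": ["query"],
        },
    },
}


def run_sql(conn: sqlite3.Connection, query: str) -> list[tuple]:
    """Execute a single SELECT statement; reject anything else."""
    if not query.lstrip().lower().startswith("select"):
        raise ValueError("only SELECT statements are allowed in this sketch")
    return conn.execute(query).fetchall()


# Toy in-memory database standing in for the real one.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (name TEXT, plan TEXT)")
conn.executemany(
    "INSERT INTO customers VALUES (?, ?)",
    [("Ada", "pro"), ("Grace", "free"), ("Linus", "pro")],
)

# Simulated tool call: the model returns its arguments as a JSON string.
model_arguments = (
    '{"query": "SELECT name FROM customers WHERE plan = \'pro\' ORDER BY name"}'
)
rows = run_sql(conn, **json.loads(model_arguments))
print(rows)
```

In a real application the rows would be serialized and sent back to the model as the tool result. The `SELECT`-only check is a deliberately crude guard for the sketch, not a substitute for proper database permissions.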

Another example is timely information. If the LLM needs to know the current time, the weather, or the value of a specific customer attribute, giving it a tool that fetches that information opens up a whole host of applications that would previously have been much harder to prompt engineer from scratch.
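On the application side, this usually comes down to mapping the tool name the model asks for onto a local function. The following is a minimal sketch of that dispatch step; the tool names, the `dispatch` helper, and the toy customer table are hypothetical and exist only for illustration.

```python
import json
from datetime import datetime, timezone


def get_current_time() -> str:
    """Return the current UTC time as an ISO 8601 string."""
    return datetime.now(timezone.utc).isoformat()


def get_customer_attribute(customer_id: str, attribute: str) -> str:
    """Look up an attribute in a toy in-memory customer table."""
    customers = {"c_1": {"plan": "pro", "region": "us-east"}}
    return customers[customer_id][attribute]


# Registry mapping tool names (as the model sees them) to local functions.
TOOLS = {
    "get_current_time": get_current_time,
    "get_customer_attribute": get_customer_attribute,
}


def dispatch(name: str, arguments_json: str) -> str:
    """Run the tool the model asked for and return its result as text."""
    if name not in TOOLS:
        raise KeyError(f"unknown tool: {name}")
    kwargs = json.loads(arguments_json or "{}")
    return str(TOOLS[name](**kwargs))


# Simulated tool calls, as a model might emit them.
print(dispatch("get_customer_attribute", '{"customer_id": "c_1", "attribute": "plan"}'))
print(dispatch("get_current_time", "{}"))
```

Keeping the registry explicit means the model can only trigger functions you have deliberately exposed.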

Tool calling has been so successful that some people use the feature not to call tools at all, but to force the LLM to produce a specific structured output. Because tool calls loosely guarantee that the LLM will emit structured input for a function, a Prompt Engineer can use the feature to get structured output without ever calling the function. OpenAI released Structured Outputs specifically for this use case.
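Because that guarantee is only loose, it can be worth checking the arguments before trusting them. The sketch below treats a tool schema purely as an output format and validates a simulated tool call against it; the schema, the `parse_structured_output` helper, and the sample arguments are assumptions for illustration, and the check only handles flat schemas with string and integer fields.

```python
import json

# Hypothetical schema: the "tool" exists only to shape the output.
EXTRACT_CONTACT_SCHEMA = {
    "type": "object",
    "properties": {
        "name": {"type": "string"},
        "email": {"type": "string"},
        "age": {"type": "integer"},
    },
    "required": ["name", "email"],
}

# Minimal mapping from JSON schema type names to Python types.
PYTHON_TYPES = {"string": str, "integer": int}


def parse_structured_output(arguments_json: str, schema: dict) -> dict:
    """Parse tool-call arguments and check them against a flat schema."""
    data = json.loads(arguments_json)
    for key in schema["required"]:
        if key not in data:
            raise ValueError(f"missing required field: {key}")
    for key, value in data.items():
        spec = schema["properties"].get(key)
        if spec is None:
            raise ValueError(f"unexpected field: {key}")
        if not isinstance(value, PYTHON_TYPES[spec["type"]]):
            raise ValueError(f"wrong type for field: {key}")
    return data


# Simulated tool-call arguments; no function is ever executed with them.
simulated_arguments = '{"name": "Ada Lovelace", "email": "ada@example.com", "age": 36}'
contact = parse_structured_output(simulated_arguments, EXTRACT_CONTACT_SCHEMA)
print(contact["email"])
```

For anything beyond a flat schema, a dedicated JSON Schema validator would be a more robust choice than this hand-rolled check.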

Model Differences in Tool Calling

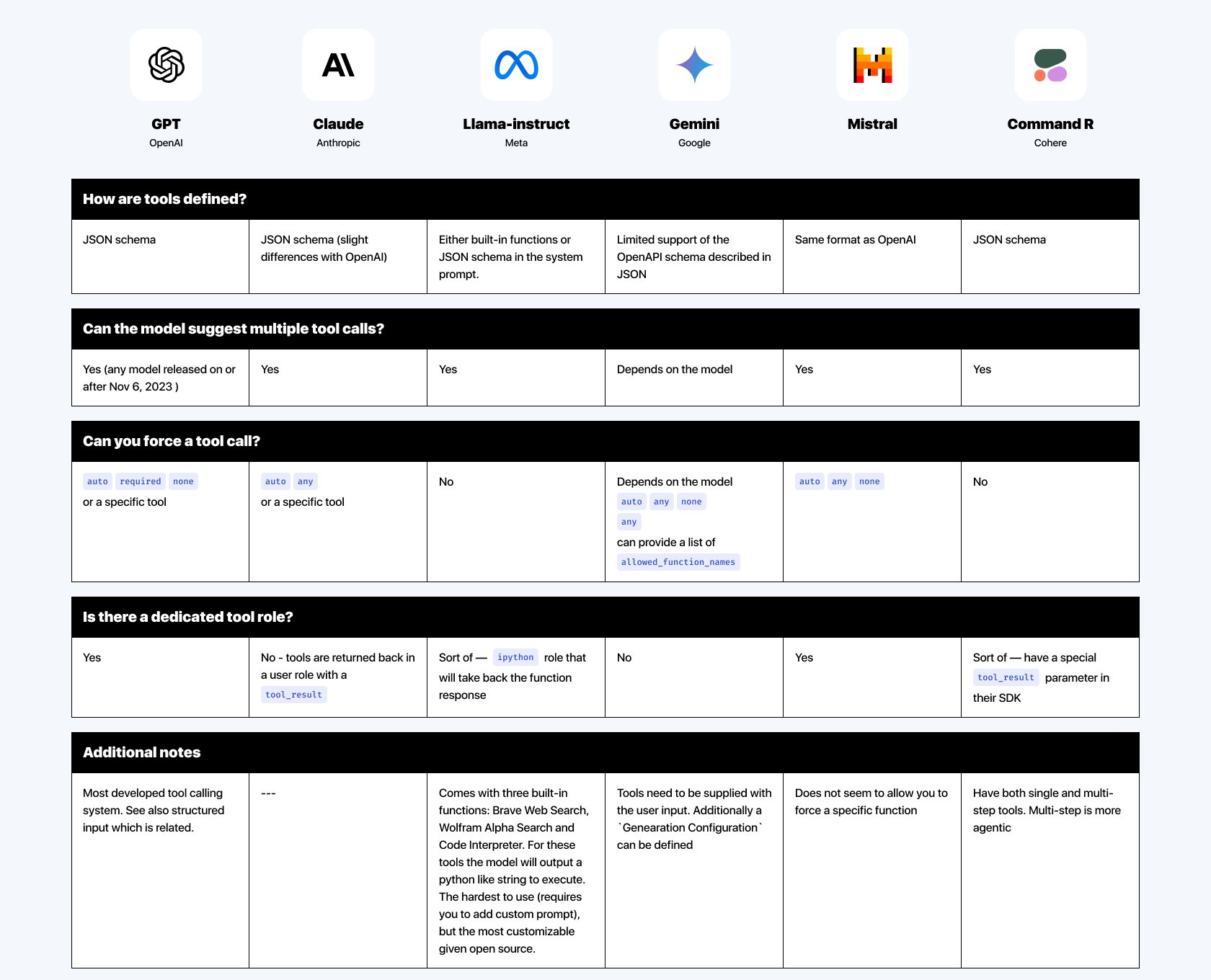

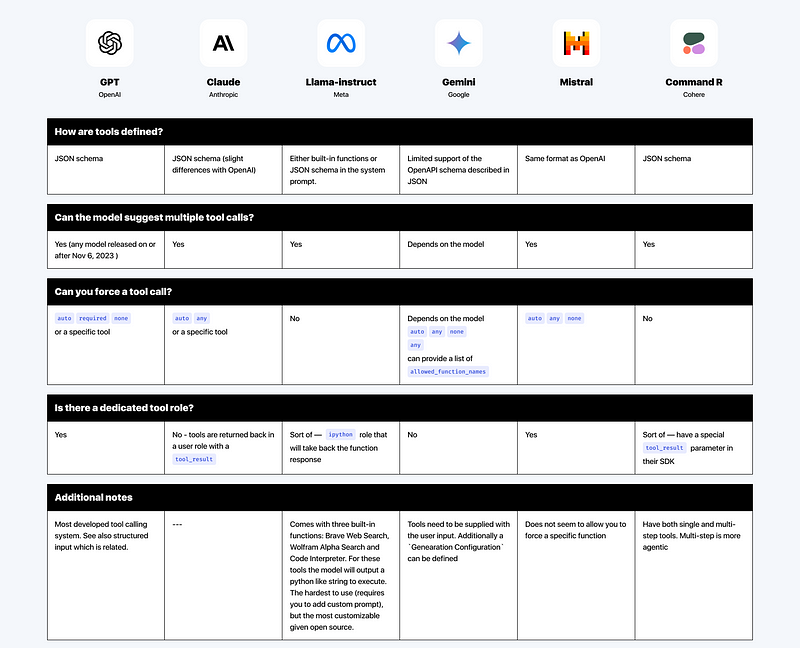

Most providers offer some form of tool calling; however, there are subtle differences in how a Prompt Engineer can use it. The table above compares how function calling works with OpenAI GPT, Anthropic Claude, Meta Llama, Google Gemini, Mistral, and Cohere Command R models.

For the most part, model providers are feature-complete. While all models support defining functions with a JSON schema, there are subtle differences in how each model expects that schema to be written. Other differences between providers include whether you can force the use of a specific tool and whether a tool's response goes into its own role or into a user role. There are also differences in the performance, accuracy, and cost of these models, but that is a topic for a separate blog post.
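To make those differences concrete, here is a small sketch that renders one tool description in two provider shapes, using plain dictionaries rather than any SDK. The field layouts reflect the OpenAI Chat Completions and Anthropic Messages formats as commonly documented; treat them as assumptions and check each provider's current documentation. The neutral `TOOL` structure and the helper names are hypothetical.

```python
# One provider-neutral description of a tool (hypothetical example).
TOOL = {
    "name": "get_weather",
    "description": "Get the current weather for a city.",
    "schema": {
        "type": "object",
        "properties": {"city": {"type": "string"}},
        "required": ["city"],
    },
}


def to_openai_tool(tool: dict) -> dict:
    """OpenAI Chat Completions nests the schema under function.parameters."""
    return {
        "type": "function",
        "function": {
            "name": tool["name"],
            "description": tool["description"],
            "parameters": tool["schema"],
        },
    }


def to_anthropic_tool(tool: dict) -> dict:
    """Anthropic Messages puts the schema under a top-level input_schema."""
    return {
        "name": tool["name"],
        "description": tool["description"],
        "input_schema": tool["schema"],
    }


def openai_tool_result(call_id: str, content: str) -> dict:
    """OpenAI sends tool output back in a dedicated "tool" role message."""
    return {"role": "tool", "tool_call_id": call_id, "content": content}


def anthropic_tool_result(call_id: str, content: str) -> dict:
    """Anthropic sends tool output as a tool_result block in a user message."""
    return {
        "role": "user",
        "content": [
            {"type": "tool_result", "tool_use_id": call_id, "content": content}
        ],
    }


# Forcing a specific tool also differs in shape between the two.
OPENAI_FORCE = {"type": "function", "function": {"name": TOOL["name"]}}
ANTHROPIC_FORCE = {"type": "tool", "name": TOOL["name"]}

print(to_openai_tool(TOOL)["function"]["parameters"] == to_anthropic_tool(TOOL)["input_schema"])
```

The JSON schema itself is identical in both cases; only the wrapper around it, the role that carries the result, and the shape of the forcing option change.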

The Power of a Prompt Management Platform

At PromptLayer, we aim to build a Prompt Engineering Platform that allows Prompt Engineers to focus on building LLM applications quickly. We strive to be a model-agnostic platform that lets teams quickly assess which features and models work best for their use case. With that philosophy, we have invested heavily in editing and testing tools. We have even built an interactive tool builder that allows teams to build out tools graphically. We handle much of the complexity of the subtle differences between model providers under the hood so you don't have to.

PromptLayer is the most popular platform for prompt engineering, management, and evaluation. Teams use PromptLayer to build AI applications with domain knowledge.

Made in NYC 🗽 Sign up for free at www.promptlayer.com 🍰