Claude Haiku 4.5: Initial Reactions

Anthropic just released a model that delivers near-frontier AI performance at one-third the price of its Sonnet models and more than twice their speed, and free Claude.ai users get it by default. Claude Haiku 4.5, launched October 15, 2025, represents a seismic shift in the AI landscape: it is Anthropic's "small" model, yet it punches dramatically above its weight class.

What we're witnessing is the democratization of advanced AI capabilities that were locked behind expensive paywalls just months ago. For developers, enterprises, and everyday users, this changes everything about how we think about deploying AI at scale.

The Speed Demon That Rivals Giants

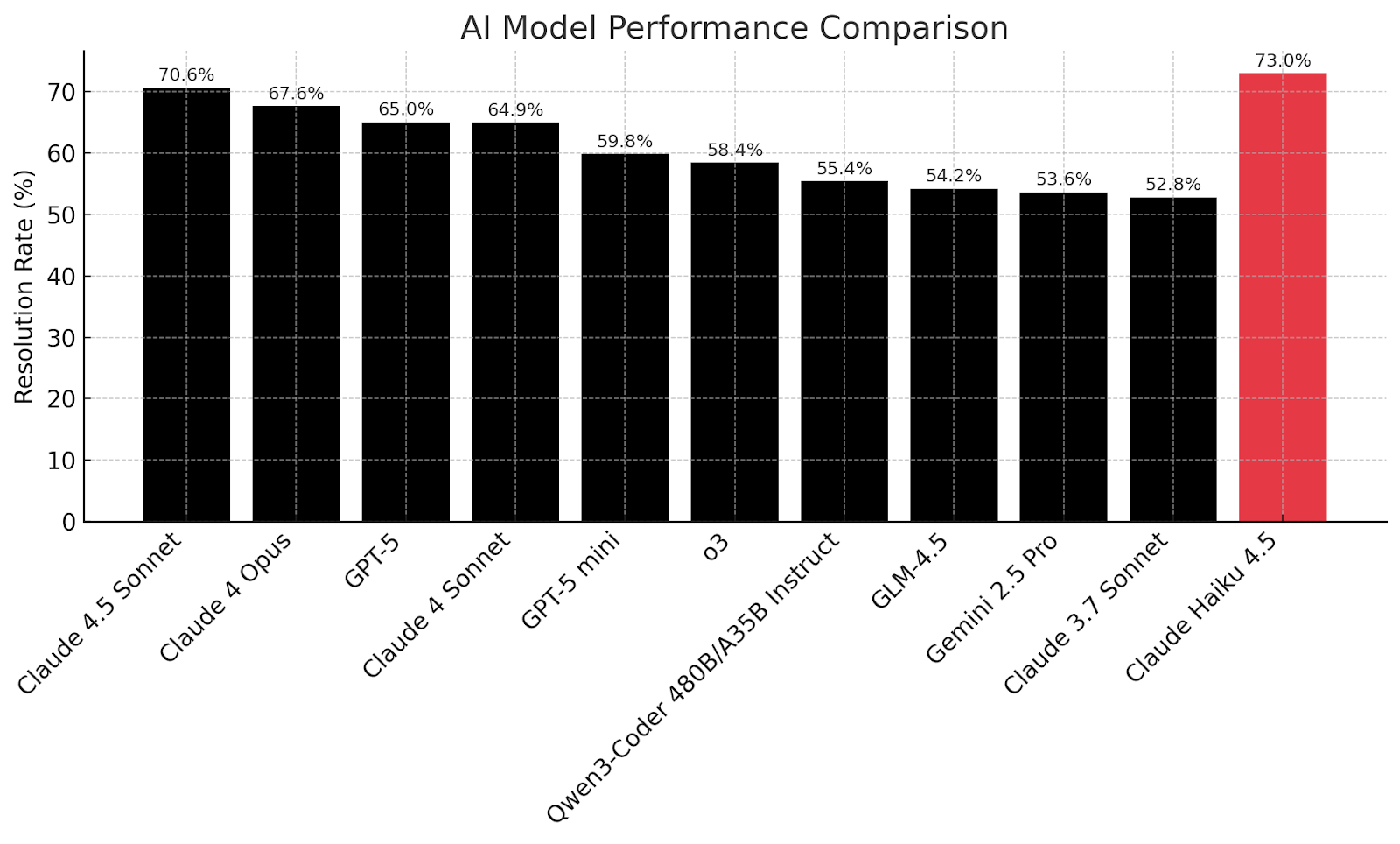

The numbers tell a compelling story. Claude Haiku 4.5 achieves 73% on SWE-Bench Verified, a rigorous software engineering benchmark where AI models fix real-world bugs in open-source Python repositories. That puts it neck-and-neck with Claude Sonnet 4, within a few points of Sonnet 4.5, and in direct competition with heavyweights like GPT-5 and Google's Gemini 2.5 Pro.

Haiku 4.5 delivers this performance while running more than twice as fast as Sonnet 4.5. Early testers report being "shocked at the speed"; one developer built a full-stack Python web application, complete with a server management dashboard, in under a minute. Responses arrive fast enough to keep pace with human thought, which makes for a whole new experience.

Anthropic has optimized Haiku 4.5 for low-latency scenarios where every millisecond counts. Whether you're pair programming, running customer service bots, or building interactive tools, the difference between a 2-second and 0.5-second response fundamentally changes how users interact with AI.

The Economics Revolution

At $1 per million input tokens and $5 per million output tokens, Claude Haiku 4.5 rewrites the economics of AI deployment. That is one-third the cost of Claude Sonnet 4.5's $3/$15 per million tokens. To put this in perspective, 100,000 tokens of output (roughly 75,000 words) cost just $0.50 on Haiku 4.5, versus $1.50 for the same output on Sonnet 4.5.
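At the list prices quoted above, cost scales linearly with token counts, so comparisons like this are easy to reproduce. The sketch below is illustrative only: the `PRICES` table and `request_cost` helper are our own names, not part of any SDK. Note that 100,000 output tokens exceeds Haiku 4.5's 64,000-token per-response cap, so in practice that volume would span at least two responses; the total cost is the same.

```python
# List prices quoted in the article, USD per million tokens: (input, output).
PRICES = {
    "claude-haiku-4-5": (1.00, 5.00),
    "claude-sonnet-4-5": (3.00, 15.00),
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the list-price cost in USD for the given token counts."""
    in_price, out_price = PRICES[model]
    return (input_tokens * in_price + output_tokens * out_price) / 1_000_000


haiku = request_cost("claude-haiku-4-5", 0, 100_000)
sonnet = request_cost("claude-sonnet-4-5", 0, 100_000)
print(f"Haiku 4.5: ${haiku:.2f}, Sonnet 4.5: ${sonnet:.2f}")
# prints "Haiku 4.5: $0.50, Sonnet 4.5: $1.50"
```

Because both rates differ by the same factor of three, the ratio holds for any mix of input and output tokens.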

As industry observer Simon Willison notes, "Haiku 4.5 certainly isn't ultra-cheap; it looks like they're continuing to focus squarely on the 'great at code' part of the market." Some competing "lite" models offer pricing at just cents per million tokens, but they can't touch Haiku's capabilities.

This strategic positioning makes sense. Rather than racing to the bottom on price, Anthropic has created a model that's affordable enough for high-volume deployments while maintaining quality that actually moves the needle on real-world tasks. For businesses deploying AI at scale, this sweet spot between cost and capability unlocks entirely new use cases that were previously economically unfeasible.

The pricing also comes with optimization options. Anthropic offers prompt caching, which can reduce the cost of cached input reads to as little as $0.10 per million tokens, and its Message Batches API provides a 50% discount on both input and output tokens for batched requests. These features signal that Anthropic expects, and has prepared for, massive enterprise adoption.
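To see how these discounts combine, here is a per-request estimator for a workload that reuses a long cached prompt prefix. It is a sketch under stated assumptions: cache reads bill at 10% of the base input rate (the $0.10 figure above), cache writes bill at 1.25× base input (Anthropic's 5-minute cache rate at the time of writing; verify against current pricing), and the 50% batch discount stacks on top of caching. The function and parameter names are hypothetical.

```python
# Claude Haiku 4.5 list prices, USD per million tokens.
BASE_INPUT = 1.00
BASE_OUTPUT = 5.00

CACHE_READ_MULT = 0.10   # cached reads: $0.10 per million, per the article
CACHE_WRITE_MULT = 1.25  # assumption: 5-minute cache-write rate; verify
BATCH_MULT = 0.50        # Message Batches API: half price on input and output


def estimate_cost(uncached_in: int, output: int, cache_read_in: int = 0,
                  cache_write_in: int = 0, batched: bool = False) -> float:
    """Estimate the USD cost of one request under caching and batching."""
    input_units = (uncached_in
                   + CACHE_READ_MULT * cache_read_in
                   + CACHE_WRITE_MULT * cache_write_in)
    total = (BASE_INPUT * input_units + BASE_OUTPUT * output) / 1_000_000
    # Assumption: the batch discount stacks with prompt caching.
    return total * BATCH_MULT if batched else total


# 1,000 requests sharing a 50,000-token prefix, each adding 1,000 fresh input
# tokens and producing 500 output tokens (ignoring the one-time cache write).
plain = 1_000 * estimate_cost(uncached_in=51_000, output=500)
cached = 1_000 * estimate_cost(uncached_in=1_000, output=500, cache_read_in=50_000)
cached_batched = 1_000 * estimate_cost(uncached_in=1_000, output=500,
                                       cache_read_in=50_000, batched=True)
print(f"plain ${plain:.2f}, cached ${cached:.2f}, cached+batched ${cached_batched:.2f}")
# prints "plain $53.50, cached $8.50, cached+batched $4.25"
```

For prefix-heavy workloads like this one, caching does most of the work; batching then halves whatever remains, at the cost of asynchronous turnaround.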

Advanced Capabilities in a Small Package

What makes Haiku 4.5 remarkable is the suite of advanced features previously reserved for Anthropic's largest models.

- Extended Thinking Mode represents a breakthrough for the Haiku line. The model can now internally deliberate and chain reasoning steps before producing answers, tackling complex problems with a simulated step-by-step thought process. While these "thinking" tokens are billed as output tokens, the capability transforms what's possible with a lightweight model.

- Tool Use and Computer Control capabilities put Haiku 4.5 in elite company. The model can operate in an agentic mode, using tools and interacting with computer environments. Remarkably, it actually outperforms Claude Sonnet 4 on computer use evaluations, excelling at tasks like controlling virtual machines, clicking, typing, and browser automation.

- Vision support rounds out the package. Haiku 4.5 processes both text and images with competitive performance on multimodal benchmarks. Users can feed in diagrams, screenshots, or photos and receive accurate analysis, broadening the model's utility across domains.

- A 200,000-token context window matches the context length of Anthropic's flagship models, and Haiku 4.5 can generate up to 64,000 tokens of output, a dramatic increase from Haiku 3.5's roughly 8,000-token output limit. Engineers can now feed in large codebases, lengthy documents, or extensive conversation histories without hitting limits.

- Context-awareness training teaches the model to track how much of its context window has been used. This is meant to prevent the common problem of models "cutting off" answers or losing track in long sessions: as the context fills up, Haiku 4.5 is trained to wrap up appropriately, and when ample context remains, it continues reasoning in depth rather than taking shortcuts.
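Several of these features surface as fields on a single Messages API request. The sketch below assembles the keyword arguments a Python client might send to enable extended thinking, offer one tool, and attach an image. The field shapes (`thinking` with `budget_tokens`, tools with `input_schema`, base64 image blocks) follow Anthropic's Messages API as we understand it; the `get_weather` tool, the helper names, and the default budgets are hypothetical, so check the current API reference before relying on them.

```python
import base64
from typing import Optional

# Output cap quoted in the article for Claude Haiku 4.5.
MAX_OUTPUT_TOKENS = 64_000

# Hypothetical example tool: the name and schema are illustrative only.
WEATHER_TOOL = {
    "name": "get_weather",
    "description": "Return the current weather for a city.",
    "input_schema": {
        "type": "object",
        "properties": {"city": {"type": "string"}},
        "required": ["city"],
    },
}


def image_block(png_bytes: bytes) -> dict:
    """Wrap raw PNG bytes in a base64-encoded image content block."""
    return {
        "type": "image",
        "source": {
            "type": "base64",
            "media_type": "image/png",
            "data": base64.b64encode(png_bytes).decode("ascii"),
        },
    }


def build_request(prompt: str, png_bytes: Optional[bytes] = None,
                  max_tokens: int = 16_000, thinking_budget: int = 8_000) -> dict:
    """Assemble keyword arguments for a Messages API call to Haiku 4.5."""
    if not 0 < max_tokens <= MAX_OUTPUT_TOKENS:
        raise ValueError(f"max_tokens must be between 1 and {MAX_OUTPUT_TOKENS}")
    # Extended thinking: the budget must be at least 1,024 tokens and below
    # max_tokens, since thinking tokens count (and are billed) as output.
    if not 1_024 <= thinking_budget < max_tokens:
        raise ValueError("thinking_budget must be >= 1024 and < max_tokens")
    content = [{"type": "text", "text": prompt}]
    if png_bytes is not None:
        # Place the image ahead of the text prompt.
        content.insert(0, image_block(png_bytes))
    return {
        "model": "claude-haiku-4-5",
        "max_tokens": max_tokens,
        "thinking": {"type": "enabled", "budget_tokens": thinking_budget},
        "tools": [WEATHER_TOOL],
        "messages": [{"role": "user", "content": content}],
    }


# Placeholder bytes (just the PNG file signature) stand in for a real screenshot.
request = build_request("Describe this dashboard screenshot.",
                        png_bytes=b"\x89PNG\r\n\x1a\n")
print(sorted(request))
```

With the official `anthropic` package installed and an API key configured, the result could be passed as `anthropic.Anthropic().messages.create(**request)`. Validating limits locally catches budget mistakes before they cost a round trip.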

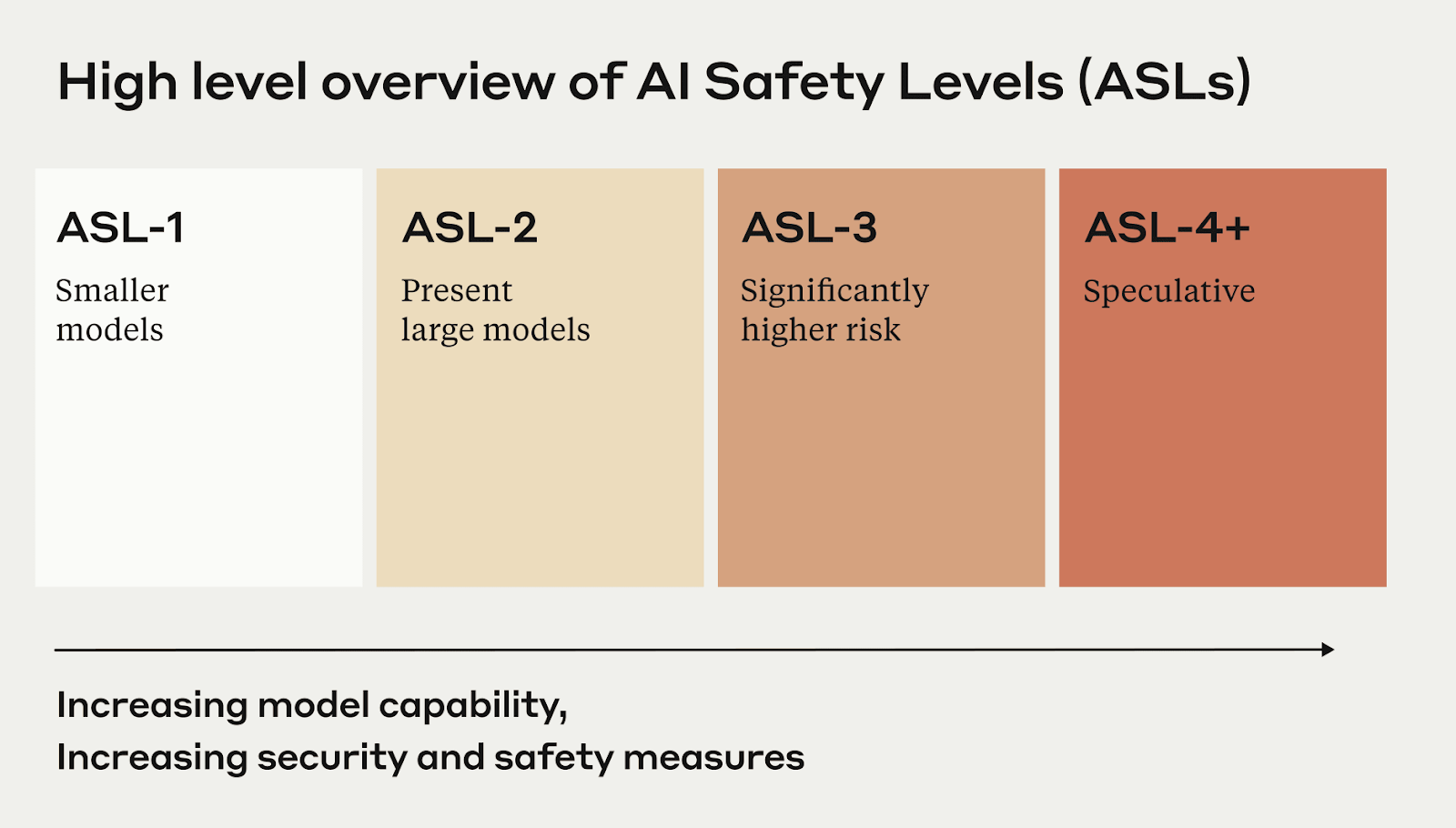

Safety hasn't been sacrificed for capability. Haiku 4.5 is Anthropic's safest model to date, showing substantially more aligned behavior than its predecessors and even lower rates of misaligned outputs than the larger Sonnet 4.5 and Opus 4.1 models. It's classified under Anthropic's AI Safety Level 2 (ASL-2), making it suitable for broader deployment without the stricter ASL-3 protections that apply to Sonnet 4.5 and Opus 4.1.

The Multi-Agent Future

One of the most exciting paradigms emerging from Haiku 4.5's release is the multi-agent workflow. Because the model is both capable and affordable, organizations can deploy teams of Haiku agents working in parallel, orchestrated by a more advanced model like Sonnet 4.5.

This mirrors how human teams operate: a senior architect breaks down complex problems and delegates execution to a team of capable developers. As Anthropic explains, "Sonnet 4.5 could plan a major code refactoring while Haiku 4.5 agents simultaneously execute changes across dozens of files."

Early enterprise experiments with this approach reportedly show major efficiency gains. Tasks that would bottleneck on a single powerful model can now be parallelized across multiple Haiku instances. Long-running operations, large-scope refactoring, and complex data processing suddenly become tractable when you can throw a dozen fast, smart agents at the problem simultaneously.
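The planner/worker split described above maps naturally onto an async fan-out. This is a minimal sketch assuming Python's `asyncio`: `plan_refactor` and `run_worker` are hypothetical stand-ins for one orchestrator call to Sonnet 4.5 and per-file worker calls to Haiku 4.5, and the concurrency cap of 12 simply illustrates the "dozen agents" idea rather than any documented limit.

```python
import asyncio
from dataclasses import dataclass
from typing import List


@dataclass
class Subtask:
    file_path: str
    instruction: str


def plan_refactor(files: List[str]) -> List[Subtask]:
    """Hypothetical stand-in for the orchestrator step (e.g. one Sonnet 4.5 call)."""
    return [Subtask(path, "rename old_helper to new_helper") for path in files]


async def run_worker(task: Subtask, limiter: asyncio.Semaphore) -> str:
    """Hypothetical stand-in for one Haiku 4.5 worker handling a single file."""
    async with limiter:
        await asyncio.sleep(0.01)  # placeholder for the real API round trip
        return f"{task.file_path}: done"


async def orchestrate(files: List[str], max_concurrency: int = 12) -> List[str]:
    """Plan once, then fan subtasks out to workers running in parallel."""
    limiter = asyncio.Semaphore(max_concurrency)  # cap simultaneous calls
    subtasks = plan_refactor(files)
    # gather() runs the workers concurrently and returns results in input order.
    return await asyncio.gather(*(run_worker(t, limiter) for t in subtasks))


if __name__ == "__main__":
    print(asyncio.run(orchestrate([f"module_{i}.py" for i in range(30)])))
```

A semaphore, rather than unbounded parallelism, keeps the worker pool from tripping API rate limits; the right cap depends on your account's limits.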

For teams managing these distributed agent systems, observability becomes critical. Platforms like PromptLayer provide visibility into model performance, token usage, and agent behavior across deployments, letting organizations monitor, debug, and optimize their multi-agent architectures in real time. This infrastructure layer turns Haiku 4.5's cost efficiency from a theoretical advantage into a practical one: teams can validate that their agent workflows perform as intended and adjust configurations to get the most value from their deployments.
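Whatever platform you use, the core bookkeeping is per-agent token accounting. Here is a minimal sketch, assuming each worker reports the `usage.input_tokens` and `usage.output_tokens` values that Messages API responses carry; the `UsageTracker` class is hypothetical and is not PromptLayer's API.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Tuple

INPUT_PRICE = 1.00   # Haiku 4.5 list price, USD per million input tokens
OUTPUT_PRICE = 5.00  # USD per million output tokens


@dataclass
class Usage:
    calls: int = 0
    input_tokens: int = 0
    output_tokens: int = 0

    @property
    def cost_usd(self) -> float:
        return (self.input_tokens * INPUT_PRICE
                + self.output_tokens * OUTPUT_PRICE) / 1_000_000


class UsageTracker:
    """Accumulate token usage and list-price cost per agent (hypothetical)."""

    def __init__(self) -> None:
        self.by_agent: Dict[str, Usage] = defaultdict(Usage)

    def record(self, agent: str, input_tokens: int, output_tokens: int) -> None:
        # In production these would come from response.usage.input_tokens
        # and response.usage.output_tokens on each API response.
        usage = self.by_agent[agent]
        usage.calls += 1
        usage.input_tokens += input_tokens
        usage.output_tokens += output_tokens

    def report(self) -> Dict[str, Tuple[int, int, int, float]]:
        """Return {agent: (calls, input_tokens, output_tokens, cost_usd)}."""
        return {name: (u.calls, u.input_tokens, u.output_tokens, u.cost_usd)
                for name, u in sorted(self.by_agent.items())}


tracker = UsageTracker()
tracker.record("worker-1", 10_000, 2_000)
tracker.record("worker-1", 5_000, 1_000)
tracker.record("worker-2", 8_000, 500)
print(tracker.report())
```

Keeping the raw token counts, not just dollar totals, makes it easy to re-price history if rates change or a worker is moved to a different model.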

Mike Krieger, Anthropic's Chief Product Officer, believes this "opens up entirely new categories of what's possible with AI in production environments." We're moving from a world of monolithic AI deployments to one where specialized agents collaborate, each optimized for its role in the larger system.

Free Access Changes the Game

In a bold strategic move, Anthropic made Haiku 4.5 the default model for all free Claude.ai users. Anyone can visit Claude's website or mobile apps and immediately access near-frontier intelligence at no cost. This is the full model with all its capabilities.

The implications are profound. Students, researchers, small businesses, and curious individuals worldwide now have access to AI capabilities that would have cost thousands of dollars per month just a year ago. By removing financial barriers, Anthropic is betting on network effects and ecosystem growth over short-term revenue maximization.

For developers, Haiku 4.5 is available through multiple channels: the Claude API (simply specify `claude-haiku-4-5`), Amazon Bedrock, and Google Cloud Vertex AI. This multi-platform availability ensures organizations can integrate Haiku using their preferred infrastructure, whether that's direct API access or managed cloud services.

The model is designed as a drop-in replacement for both Haiku 3.5 and Claude Sonnet 4, meaning existing applications can upgrade with minimal code changes. Free-tier users who were previously limited to older models automatically get Haiku 4.5's improved capabilities, a seamless upgrade that immediately improves user experience across the Claude ecosystem.

Where Haiku Falls Short

Despite the enthusiasm, it's important to understand Haiku 4.5's limitations. While it reportedly reaches around 90% of Sonnet 4.5's performance on some agentic coding evaluations, that missing 10% can matter for the most complex tasks. On certain advanced benchmarks involving multi-hop reasoning or highly nuanced analysis, Haiku shows small but meaningful gaps compared to frontier models.

Early user feedback reveals some specific weak points. While Haiku excels at coding and structured tasks, it may struggle with understanding complex business requirements or engaging in deeply nuanced conversations. Users report that it sometimes requires more explicit instructions for tasks involving subtle human communication or abstract reasoning.

The model's optimization for speed and efficiency occasionally shows. When pushed on truly complex reasoning tasks that would benefit from extensive deliberation, Haiku might produce a reasonable but not exhaustive solution. Anthropic's work on context-awareness helps mitigate this tendency, but for mission-critical applications requiring absolute accuracy, validation with a larger model remains advisable.

Price-wise, while Haiku 4.5 offers excellent value, some users note the incremental price increases across Haiku generations, from $0.25/$1.25 per million tokens for Haiku 3, to $0.80/$4 for Haiku 3.5, and now $1/$5 for Haiku 4.5. The capabilities have grown dramatically to justify these increases, but it signals that "budget" doesn't mean "race to the bottom" in Anthropic's strategy.
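The generation-over-generation arithmetic is easy to check. Using the list prices above, each step raised input and output prices by the same factor: 3.2× from Haiku 3 to Haiku 3.5, 1.25× from Haiku 3.5 to Haiku 4.5, and 4× overall. A short sketch (the dictionary and helper name are illustrative):

```python
from typing import Tuple

# (input, output) list prices in USD per million tokens, as quoted above.
HAIKU_PRICES = {
    "Haiku 3": (0.25, 1.25),
    "Haiku 3.5": (0.80, 4.00),
    "Haiku 4.5": (1.00, 5.00),
}


def price_multiple(old: str, new: str) -> Tuple[float, float]:
    """Return how many times more the newer model costs, as (input, output)."""
    (old_in, old_out), (new_in, new_out) = HAIKU_PRICES[old], HAIKU_PRICES[new]
    return new_in / old_in, new_out / old_out


for old, new in [("Haiku 3", "Haiku 3.5"), ("Haiku 3.5", "Haiku 4.5"),
                 ("Haiku 3", "Haiku 4.5")]:
    inp, out = price_multiple(old, new)
    print(f"{old} -> {new}: input x{inp:.2f}, output x{out:.2f}")
```

Most of the increase came in the jump to Haiku 3.5; the move to 4.5 added a comparatively modest 25%.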

For organizations considering Haiku 4.5, the best approach often involves a hybrid strategy: use Haiku for the bulk of work where it excels, but maintain access to frontier models for the most challenging problems. This isn't a limitation unique to Haiku; it's simply an acknowledgment that different tools suit different jobs.
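One way to implement this hybrid strategy is escalation: try Haiku first and hand the task to a larger model only when the draft fails a check. This is a minimal sketch, assuming hypothetical `call_model` and `is_acceptable` callables that you supply (for example, an API wrapper and a test-suite runner); `claude-sonnet-4-5` is assumed here as the escalation target.

```python
from typing import Callable, Tuple

HAIKU = "claude-haiku-4-5"
SONNET = "claude-sonnet-4-5"  # assumed escalation target


def solve_with_escalation(
    task: str,
    call_model: Callable[[str, str], str],
    is_acceptable: Callable[[str], bool],
) -> Tuple[str, str]:
    """Try Haiku first; escalate to Sonnet only if the draft fails the check.

    Returns (model_used, answer).
    """
    draft = call_model(HAIKU, task)
    if is_acceptable(draft):
        return HAIKU, draft
    return SONNET, call_model(SONNET, task)


# Demo with a fake model that returns an empty draft on hard tasks when run on Haiku.
def fake_call(model: str, task: str) -> str:
    if model == HAIKU and "hard" in task:
        return ""
    return f"{model}: {task}"


print(solve_with_escalation("easy task", fake_call, bool))
print(solve_with_escalation("hard task", fake_call, bool))
```

The design keeps the common path cheap: the larger model is only billed for tasks where the cheap draft was rejected, so overall cost depends heavily on how good the acceptance check is.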

The Inflection Point

Five months ago, achieving 73% on SWE-Bench Verified required a frontier model. Today, you can do it for free on your phone. That's not incremental progress; it's a phase transition in how AI technology diffuses through the economy.

Claude Haiku 4.5 demolishes the old calculus that forced teams to choose between capability and cost, between speed and intelligence. At $1/$5 per million tokens with twice the speed of Sonnet 4.5, it's not just cheaper; it enables entirely new architectures like multi-agent workflows that were economically impractical six months ago. When a startup can run three Haiku agents for the price of a single Sonnet 4.5 agent, and enterprise teams can parallelize complex refactoring across hundreds of files simultaneously, we're witnessing a fundamental restructuring of what's buildable.

The real story is that the six-month frontier-to-commodity cycle is the new normal, and if that pace holds, the AI landscape in 2026 will be unrecognizable. Haiku 4.5 is less a destination than a marker of velocity. The question is whether you'll be ready when what's cutting-edge today becomes table stakes tomorrow.