Browser agent security risk

The integration of AI into web browsers has dramatically shifted the landscape of how we interact with the internet. AI-powered browser agents don't just read web content - they actively engage with it, mimicking human actions. While this evolution opens new doors for automation and efficiency, it brings significant security risks that need urgent attention. Understanding how these browser agents operate helps us grasp the breadth of their potential threats.

Understanding browser agents' capabilities and threats

AI-driven browser agents are not passive readers of web content. They actively interact with the web, navigating pages, clicking elements, and taking actions traditionally reserved for humans - a behavior highlighted in real-world examples where Hashjack attacks target AI browsers and agentic AI systems, showing how hostile web content can exploit these interactive capabilities.

Core capabilities

Browser agents can:

- Navigate websites autonomously

- Read and interpret Document Object Model (DOM) content

- Click links and buttons

- Fill and submit forms

- Perform cross-site navigation

- Make decisions based on page content

Why this creates risk

These capabilities blur the line between observation and execution:

- Web content is inherently untrusted input

- Instructions embedded in pages can directly influence agent behavior

- A single malicious page can trigger unintended actions

- The agent’s privileges amplify the impact of manipulation

As browser agents collapse the gap between “reading” and “doing,” every page they interact with becomes part of the attack surface.

Key vulnerabilities in browser agents

Among the most significant threats to browser agents is indirect prompt injection. This vulnerability occurs when untrusted web content, perhaps an innocuous-seeming web page, contains hostile instructions that the AI might mistakenly prioritize over its initial programming. This could lead to action misuse, where the model executes unintended functions like downloading malicious files or leaking sensitive data.

A stark Confused Deputy problem, where a high-privilege agent is tricked into performing tasks on behalf of a lower-privilege entity. An agent, seeing misleading instructions buried in web content, may carry out operations such as sharing confidential information or altering settings unknowingly.

Impact of security risks

The implications of such risks are significant. Browser agents' access to sensitive data, including login credentials and session tokens, means potential exposure if an agent is compromised. Moreover, traditional web-native threats are heightened - an agent misled by a cleverly disguised phishing page could take damaging actions much faster than a human operator might.

There have already been real-world scenarios demonstrating these vulnerabilities. For example, Perplexity's "Comet" browser agent highlights how agents can be manipulated by malicious prompt injections, leading to unauthorized data access.

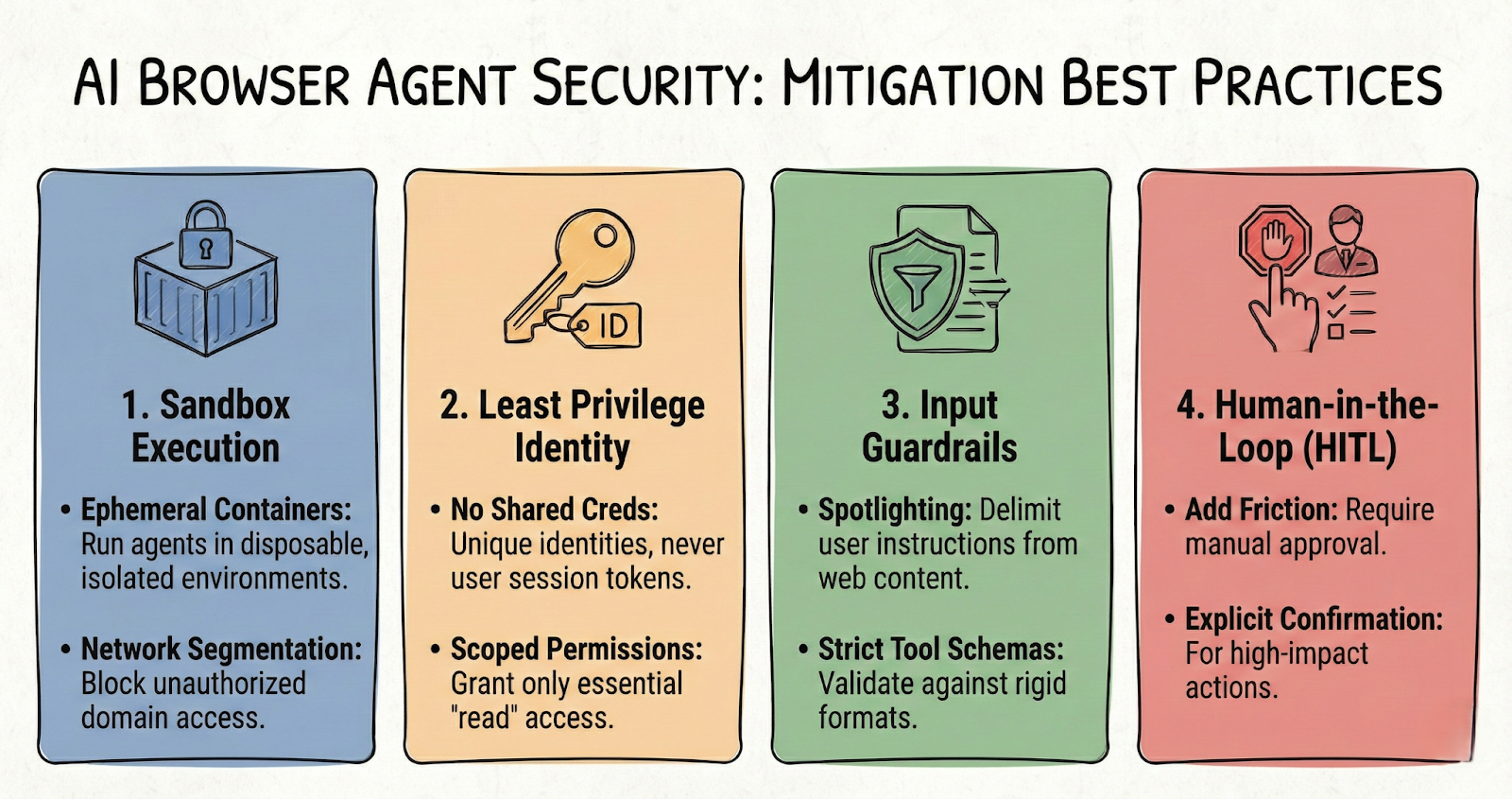

Mitigation strategies and best practices

To mitigate these growing risks, several strategies need implementation. First, engineering strategies such as strict permission controls, employing robust sandboxing techniques, and setting clear action constraints are crucial. Ensuring the browser agent operates within a tight frame reduces the chance of executing unauthorized actions.

Additionally, operational controls play a vital role. Active monitoring and rigorous auditing, coupled with a well-prepared incident response approach, help identify and mitigate issues before they escalate. Secure design principles must be ingrained in development practices, emphasizing strong prompt and tool design and fostering environments that encourage continuous red teaming to test security.

Win with boundaries

Browser agents are powerful precisely because they collapse the gap between “reading” and “doing” - and that means every page they touch should be treated like untrusted input, not helpful context. If an agent can click, navigate, and act while carrying your authenticated session, a single indirect prompt injection can turn it into a Confused Deputy in seconds.

To improve the security of browser agents, focus on rapid iteration and a tight development loop. This involves implementing robust observability and running thorough security evaluations. Leverage tools like PromptLayer to version prompts and detect tool drift proactively, preventing it from escalating into a security incident.

An example of granular execution tracing in PromptLayer, identifying exactly how an agent interprets prompt templates during a multi-step session.

The practical takeaway: don’t bet your safety on smarter prompts. Win with boundaries. Lock down permissions, sandbox execution, and put friction (human confirmation) in front of high-impact actions. Then instrument everything - because if you can’t trace why an agent clicked, you can’t defend it.