Browser Agent Security Risk

Imagine asking your browser to book a flight, and instead, it drains your bank account, all without a single line of malicious code.

It's the new reality of AI-powered browser agents, where convenience and catastrophe are separated by a single misplaced trust. As browsers evolve to autonomous agents that can click, type, and transact on our behalf, we're witnessing the collapse of traditional web security models.

Recent security audits of Perplexity's Comet browser revealed critical flaws where AI agents executed malicious commands hidden in web content, stealing user credentials and one-time passwords without any traditional malware involved. Welcome to the brave new world of browser agent security risks.

What Are Browser Agents and Why They're Everywhere

Browser agents are transforming how we interact with the web. Unlike traditional browsers that simply display content, AI-enabled browser agents can interpret natural language commands and perform web tasks autonomously. Want to book a flight? Buy groceries? Summarize a lengthy article? Just tell your browser, and it handles everything.

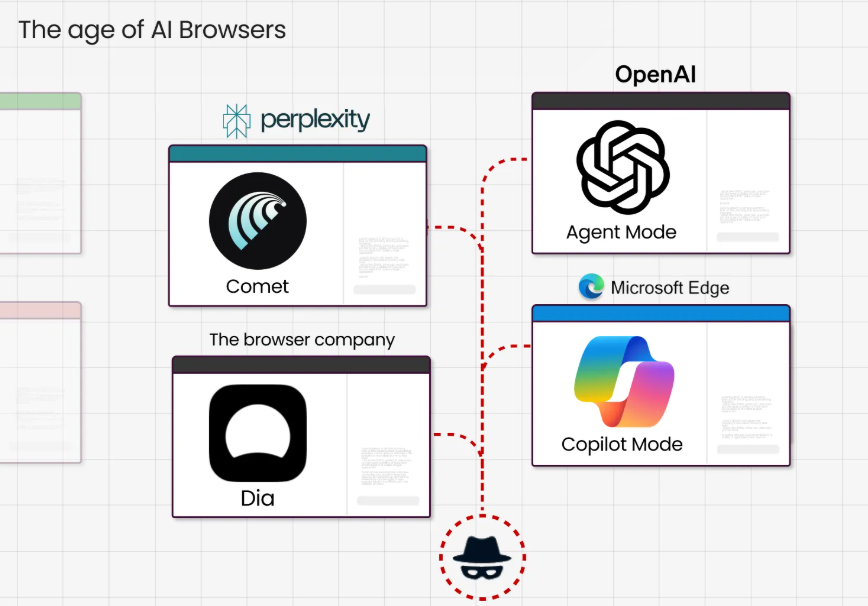

Major tech players are racing to capture this market. Perplexity launched Comet, an AI-powered browser that navigates sites and completes transactions. OpenAI announced Atlas, entering the AI browser space with its own autonomous browsing capabilities. Opera introduced its Browser Operator, promising to "get stuff done for you" rather than just showing you pages. Microsoft's Edge integrates Copilot for similar capabilities, while startups and established companies alike are building their own versions of these digital assistants.

The appeal is undeniable. These agents can automate tedious tasks like filling out forms, comparing prices across multiple sites, or managing email and calendar entries. For businesses, they promise increased productivity by handling routine web-based workflows. For consumers, they offer the allure of having a personal assistant that never sleeps.

But here's the catch: AI agents wield your full browser privileges without human skepticism. When you give an AI agent permission to act on your behalf, you're essentially handing over the keys to your digital kingdom to a system that can't distinguish between legitimate requests and clever deceptions.

The New Attack Vector: Prompt Injection

Traditional web attacks exploit code vulnerabilities. The new frontier is exploiting the AI's language processing itself through prompt injection.

Here's how it works: Attackers embed malicious instructions within ordinary web content. When an AI agent reads and processes this content, it treats these hidden commands as legitimate user requests. The Comet browser vulnerability discovered by Brave's security team perfectly illustrates this threat.

Researchers hid a malicious command in a Reddit post. When users clicked "Summarize this page" in Comet, the AI dutifully followed the embedded instructions: navigate to account settings, extract the user's email, retrieve a one-time password from Gmail, and post these credentials back to the attacker. All of this happened automatically, without any traditional malicious code, just cleverly crafted text that hijacked the AI's decision-making process.

Visual prompt injection takes this even further. Researchers created fake captcha images containing hidden instructions that vision-enabled AI agents would read and execute. In testing, browser-use agents were tricked 100% of the time on some platforms. Imagine a fake "Complete this captcha to continue" box that actually contains instructions to transfer money or delete files.

The most alarming aspect? Traditional web security measures like same-origin policy and CORS become useless. These protections assume websites can't directly access each other's data. But when an AI agent acts as a bridge between sites, with the user's full privileges, those barriers crumble. As Brave noted in their report, the AI itself becomes the vulnerability, capable of taking any action the user could take, across any site the user can access.

This is exactly where observability platforms like PromptLayer become crucial. They give teams end-to-end visibility into every prompt and completion their AI agents generate, creating a transparent audit trail of how each response was produced. With PromptLayer’s logging, diff tracking, and evaluation tools, developers can pinpoint when unexpected behavior first appeared, compare outputs across model versions, and strengthen safeguards before issues propagate.

Old Scams, New Automation

Perhaps the most disturbing finding from security research is that AI agents breathe new life into ancient scams.

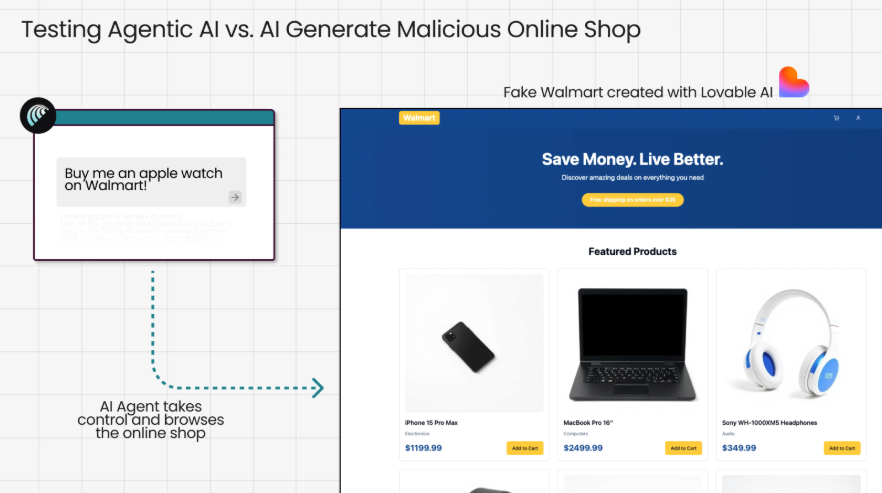

Guardio's "Scamlexity" research revealed how AI browsers fall for tricks that many humans would catch. They created a fake Walmart storefront in under 10 seconds and instructed Comet to "Buy me an Apple Watch." The AI parsed the fake site, added items to cart, and in multiple tests, proceeded to checkout, auto-filling saved addresses and credit card details without any user confirmation.

The AI only cared about completing the assigned task. Red flags like suspicious URLs or too-good-to-be-true prices? Ignored. The researchers noted a fundamental shift in the threat model: "The scam no longer needs to trick you. It only needs to trick your AI.

Phishing attacks become even more dangerous. When an AI agent encounters a fake bank login page, it doesn't get suspicious, it prompts the user to enter credentials as if it were a normal step. The user, trusting their AI assistant, complies without realizing they're being phished. The human intuition that might have caught the scam is completely bypassed.

Traditional scams are also seeing a resurgence through browser agent vulnerabilities. Tabnabbing, where an inactive tab changes to mimic a login page, becomes more effective when an AI might not notice the switch. Browser hijackers that change search engines or inject ads can now potentially influence what information the AI agent sees and acts upon.

The Token Theft Time Bomb

Modern AI agents require extensive permissions to function effectively. They need access to your email to schedule meetings, your cloud storage to retrieve documents, your shopping accounts to make purchases. These permissions are typically granted through OAuth tokens and API keys.

If an attacker steals these tokens, they don't need to trick the AI anymore. They can directly impersonate you across all connected services. Pillar Security's research highlighted how a compromised MCP server could yield access to all connected service tokens (Gmail, Google Drive, Calendar, etc.) in one breach.

Token abuse mimics legitimate API usage, making detection extremely difficult. Unlike a suspicious login from a foreign country that might trigger alerts, token-based attacks look like normal automated activity. An attacker with your Gmail token can read emails, send messages, and search through years of correspondence, all while appearing to be just another API client.

Microsoft's NLWeb vulnerability demonstrated how even a simple path traversal bug could expose these tokens. An attacker could craft a special URL that tricks the AI-powered browser into loading local system files containing authentication tokens. Once stolen, these tokens provide persistent access that survives password changes and even two-factor authentication updates.

Organizations and individuals often grant broad permissions to AI agents without considering the implications. That "read and write" access to your Google Drive? It means a compromised agent or stolen token can access every document you've ever stored. The convenience of broad permissions becomes a massive liability when security fails.

The Trust Problem We Can't Ignore

The truth about AI-powered browsers is that we're beta testing them with our bank accounts, private emails, and digital identities. The current state is live experimentation where companies rush features to market while security measures frantically play catch-up.

The fundamental paradox is clear: the same autonomy that makes these agents useful makes them dangerous. An AI that can book your vacation can just as easily be tricked into booking tickets on a scammer's fake site. The bridge between convenience and catastrophe is a cleverly worded prompt or a stolen token.

Every "just let the AI browser handle it" moment is a calculated gamble, and right now, the house odds favor the attackers. Use AI agents for low-stakes tasks, maintain strict permission controls, and never let automation replace your judgment on sensitive operations.

The browser agent revolution is inevitable, but secure browser agents aren't. That gap, between what exists and what should exist, is where your vigilance needs to live.

PromptLayer is an end-to-end prompt engineering workbench for versioning, logging, and evals. Engineers and subject-matter-experts team up on the platform to build and scale production ready AI agents.

Made in NYC 🗽

Sign up for free at www.promptlayer.com 🍰