Grok Code Fast 1: First Reactions

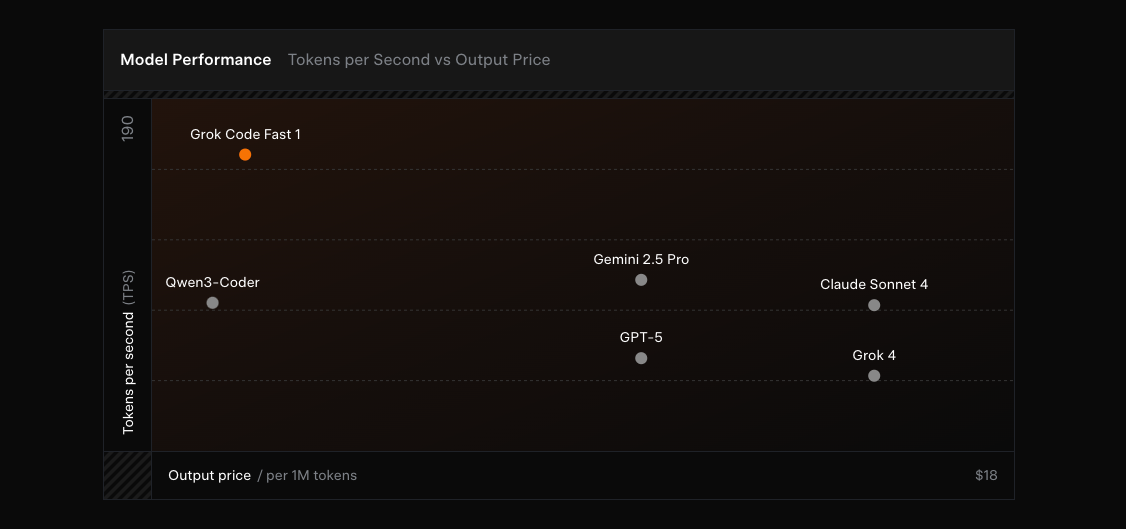

With a 256k-token context window and throughput of ~92 tokens/sec, xAI's coding model, Grok Code Fast 1, lands in GitHub Copilot public preview, aiming for speed at pennies per million tokens. This post covers what it is, why it's fast and cheap, what it's best for, where to try it, and what's next.

Why