AI contextual refinement

Understanding AI contextual refinement has become essential as technology shifts from focusing solely on prompt engineering to embracing context engineering. As AI models advance, the skill of contextual refinement becomes crucial to optimize outputs, increase accuracy, and reduce hallucinations. Effective context management in LLMs is now critical for higher efficiency and performance.

What contextual refinement contributes to AI

Contextual refinement in modern LLM systems revolves around fine-tuning the contextual input to ensure precise and accurate outputs. Unlike traditional prompt engineering, where static prompts were crafted to drive desired responses, contextual refinement involves dynamically adjusting the context presented to the model. This method extends beyond getting a single answer right - it's about maintaining precision and accuracy across complex interactions.

Mechanisms driving contextual refinement

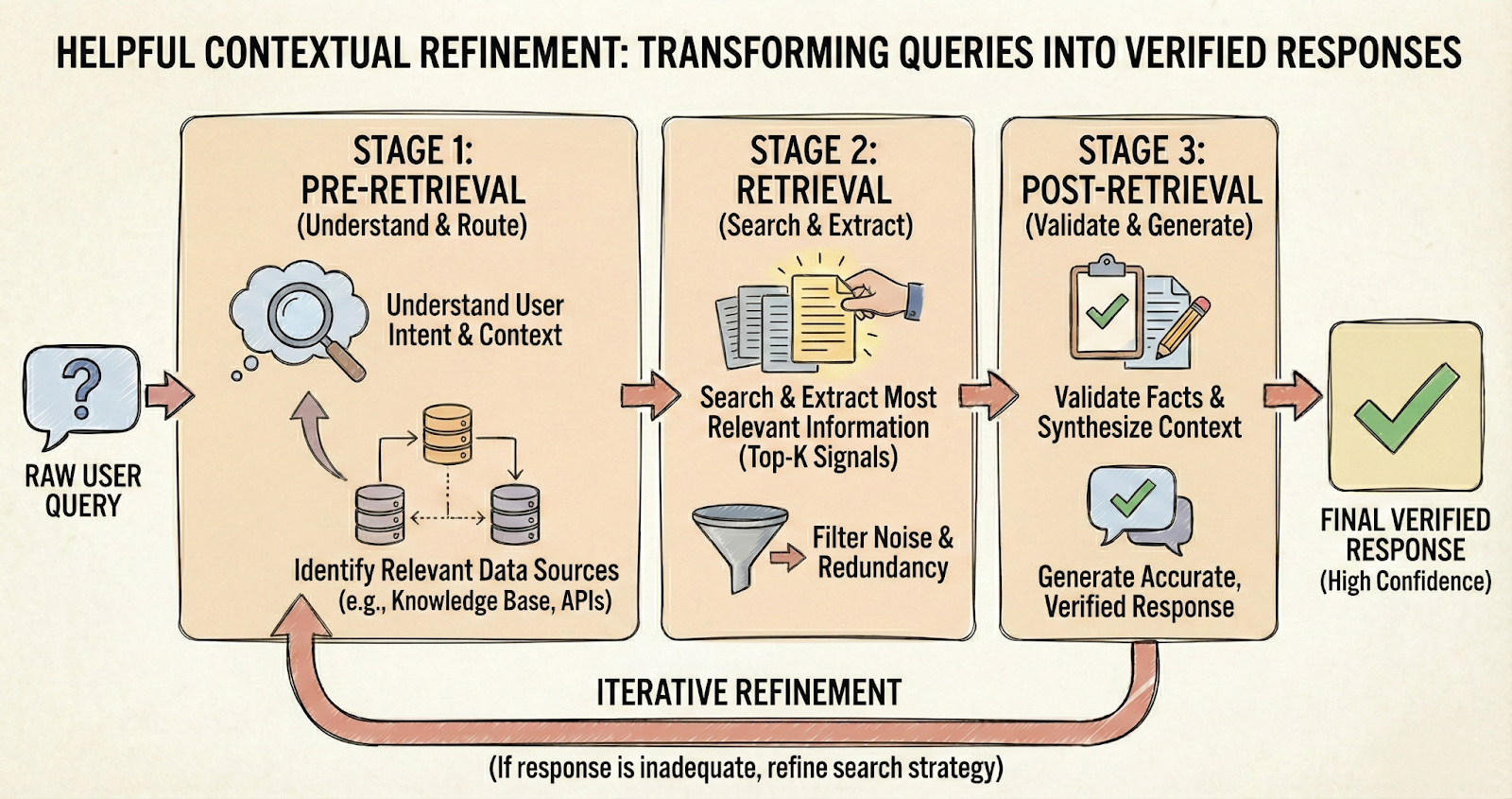

Contextual refinement employs several strategies. For example, single-turn versus multi-turn refinement contrasts how one-off inputs are handled against continuous dialogue adjustments. Retrieval selection narrows down relevant information, while agentic loops refine the model's ability to interact over multiple steps. RAG architectures operationalize these strategies - using context to retrieve pertinent information from data sources before generating a response. By leveraging techniques like chunk selection, RAG systems optimize how data is retrieved, ensuring relevance and precision in returned information.

Implementing refinement in RAG systems

RAG systems implement strategies like chunk selection, query rewriting, and context compression to refine AI understanding. Pre-retrieval optimization streamlines what data should be retrieved, retrieval mechanisms ensure the correct data is fetched, and post-retrieval techniques refine the output. Together, these strategies minimize unnecessary data processing, saving resources while enhancing performance.

Considerations in contextual refinement

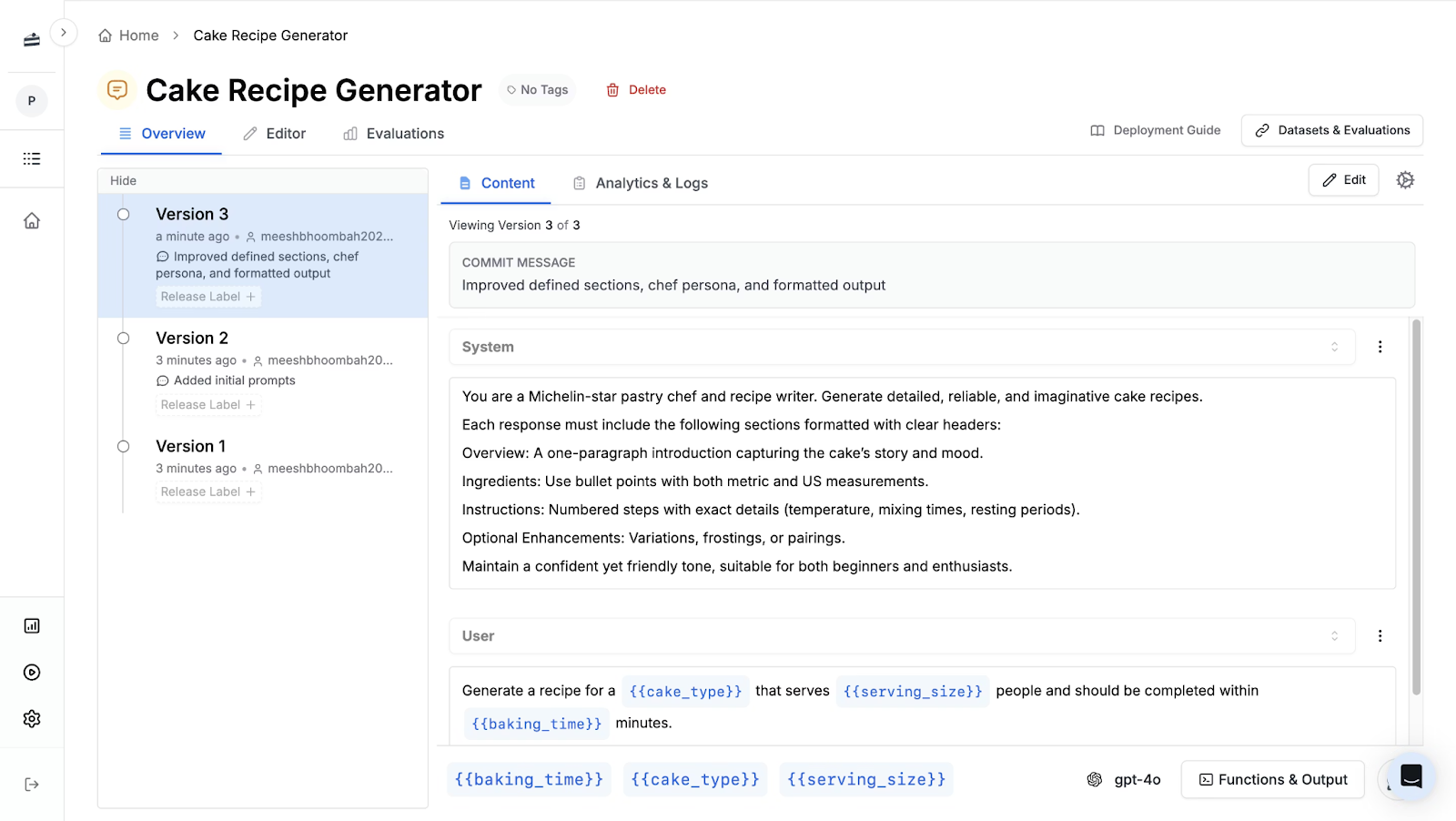

Systematic tracking and evaluation are essential for the effective observability of refinement techniques and understanding their impact. This requires implementing prompt versioning and utilizing robust evaluation methods to accurately assess changes in both efficiency and accuracy. Companies like Instacart and DoorDash offer practical examples, demonstrating how systematic refinement can successfully improve task accuracy while simultaneously minimizing response noise.

Despite its benefits, contextual refinement poses several risks. Context poisoning, where irrelevant or harmful data corrupts the AI’s decision-making capability, is a primary concern. Moreover, using extensive data raises privacy issues. Performance degradation can occur if the refinement process is not meticulously managed. Effective strategies to mitigate these involve stringent context management protocols and ongoing evaluation.

Stop treating context like an afterthought

Contextual refinement is quickly becoming the difference between an AI demo that sounds smart and a system that stays accurate under real-world pressure. The goal isn’t to cram more tokens into the window - it’s to curate what matters, compress what doesn’t, and keep the model anchored to the right signal at the right time.

If you’re building with RAG or multi-step agents, the best next step is simple: make refinement measurable. Version your prompts, track what context was injected, and evaluate whether each change actually improves retrieval relevance and answer faithfulness (instead of just shifting the failure mode). Tools like PromptLayer help teams operationalize this loop - so “better context” is a repeatable workflow.