AI Contextual Governance & Strategic Visibility: From Black Box to Glass House

Over 90% of security leaders admit to blind spots in their AI systems, a dangerous gap as artificial intelligence becomes the backbone of critical decisions in healthcare, finance, hiring, and operations. What was once a "nice to have" technology is now making life-altering choices: diagnosing diseases, approving loans, filtering job candidates, and managing supply chains.

Most organizations operate their AI like a black box: trusted but unverified. This creates a stark reality. Organizations deploying AI need contextual governance (rules tailored to each AI's role) and strategic visibility (transparent insight into what AI is doing) to avoid becoming either invisible in AI-driven markets or liable for AI failures they can't explain.

The Evolution from "Trust Me" to "Show Me"

The early days of AI governance relied on vague principles and reactive fixes. When biased sentencing algorithms made headlines in the 2010s, organizations scrambled to issue high-level ethics guidelines about fairness and transparency. But these were essentially promises on paper: the corporate equivalent of "trust us, we're being careful."

By the 2020s, this approach crumbled. One-size-fits-all policies failed spectacularly because they treated a customer service chatbot and an autonomous trading algorithm as if they carried the same risks. Regulators and boards began demanding audit-grade frameworks instead of philosophical statements. The shift was clear: from informal assurances to verifiable controls.

Context emerged as the critical factor. A diagnostic AI in a hospital operates in a fundamentally different environment than a recommendation engine for streaming services. Each requires governance rules calibrated to its specific function, autonomy level, and potential impact. The era of generic AI policies was over.

What Is Contextual Governance?

Contextual governance means tailoring AI oversight to how, where, and by whom AI is used. Instead of blanket rules for all AI systems, it asks precise questions: What decisions does this AI make? Who's affected? How much autonomy does it have? What happens if it fails?

This specificity is critical because treating all AI the same ignores fundamental risk differences. A customer service bot that suggests FAQ responses operates in a low-stakes environment. An autonomous trading algorithm that can execute million-dollar transactions in milliseconds requires entirely different safeguards.
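To make the four contextual questions concrete, here is a minimal sketch that turns their answers into a risk tier. The `AIContext` fields, the point weights, and the tier cutoffs are all hypothetical choices for illustration, not a published scoring scheme; a real program would calibrate them to its own risk appetite.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass
class AIContext:
    """Answers to the contextual-governance questions (hypothetical schema)."""
    makes_binding_decisions: bool  # What decisions does this AI make?
    affects_individuals: bool      # Who's affected?
    autonomy: float                # How much autonomy? 0.0 (suggests only) to 1.0 (acts alone)
    failure_impact: int            # What happens if it fails? 1 (minor) to 5 (severe)


def classify(ctx: AIContext) -> RiskTier:
    """Map context to a risk tier using illustrative, non-standard cutoffs."""
    score = 0
    score += 2 if ctx.makes_binding_decisions else 0
    score += 1 if ctx.affects_individuals else 0
    score += round(ctx.autonomy * 2)  # 0, 1 or 2 points for autonomy
    score += ctx.failure_impact - 1   # 0 to 4 points for impact
    if score >= 7:
        return RiskTier.HIGH
    if score >= 3:
        return RiskTier.MEDIUM
    return RiskTier.LOW


faq_bot = AIContext(makes_binding_decisions=False, affects_individuals=True,
                    autonomy=0.2, failure_impact=1)
trader = AIContext(makes_binding_decisions=True, affects_individuals=False,
                   autonomy=1.0, failure_impact=5)
print(classify(faq_bot), classify(trader))
```

With these weights the FAQ bot lands in `LOW` and the trading algorithm in `HIGH`, mirroring the contrast in the paragraph above; each tier would then map to a different set of required safeguards.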

The Human-AI Governance (HAIG) framework exemplifies this approach by analyzing trust dynamics along continuous dimensions rather than rigid categories. Instead of simply labeling systems as "human-in-the-loop" or "fully automated," HAIG examines:

- Decision authority distribution: How much power does the AI have to act independently?

- Process autonomy: Can the AI modify its own decision-making process?

- Accountability configuration: Who's responsible when something goes wrong?

Consider a diagnostic AI that recommends while a clinician decides. Its governance rules reflect that balance: the AI must explain its reasoning, log its recommendations, and flag cases where it has low confidence. As the AI proves itself over time, the trust calibration might shift, but always with clear thresholds and human oversight for critical decisions.
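The trust-calibration rules described here (explain, log, flag low confidence, keep humans on critical decisions) can be sketched as a small routing function. This is an illustrative assumption, not part of the HAIG framework itself: the `Recommendation` fields, the `TrustPolicy.min_confidence` threshold of 0.9, and the in-memory `audit_log` are hypothetical stand-ins.

```python
from dataclasses import dataclass


@dataclass
class Recommendation:
    case_id: str
    action: str
    rationale: str     # the AI must explain its reasoning
    confidence: float  # model-reported confidence in [0, 1]
    critical: bool     # e.g. a decision with serious consequences


@dataclass
class TrustPolicy:
    # Hypothetical threshold; it can be adjusted as the AI proves itself.
    min_confidence: float = 0.9


audit_log: list[dict] = []  # stand-in for durable recommendation logging


def route(rec: Recommendation, policy: TrustPolicy) -> str:
    """Return 'auto' or 'human_review', logging the recommendation either way."""
    if not rec.rationale:
        decision = "human_review"  # unexplained output is never auto-accepted
    elif rec.critical or rec.confidence < policy.min_confidence:
        decision = "human_review"  # critical or low-confidence cases go to a person
    else:
        decision = "auto"
    audit_log.append({"case": rec.case_id, "confidence": rec.confidence, "route": decision})
    return decision
```

Loosening trust over time then means changing `min_confidence` deliberately and visibly, while the `critical` check keeps human oversight in place regardless of confidence.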

Strategic Visibility: Seeing What AI Actually Does

Strategic visibility provides transparent, real-time insight into AI operations: the where, why, and how of every AI action. Without visibility, even the best governance policies become unenforceable wishes.

Research from the Centre for Governance of AI identifies three pillars of strategic visibility:

Agent Identifiers

Every AI system gets a unique ID, like a passport or license plate. This prevents "shadow AI" from operating undetected. When an AI makes a decision, its identifier is logged, making it traceable across systems and time.

Real-Time Monitoring

Real-time monitoring means continuously observing AI decisions and behavior as they happen. It isn't just about watching individual outputs; it's about understanding patterns, detecting drift, and ensuring compliance with governance rules. If an AI that normally approves 60% of loan applications suddenly starts rejecting 90% of them, monitors flag the anomaly immediately.
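The loan example above amounts to comparing a rolling approval rate against an expected baseline. A minimal sketch, assuming a fixed-size window and an absolute tolerance band (both hypothetical parameters; production monitors often use statistical tests instead):

```python
from collections import deque


class ApprovalRateMonitor:
    """Flags when the rolling approval rate drifts away from an expected baseline.

    baseline, tolerance and window are illustrative, hypothetical parameters.
    """

    def __init__(self, baseline: float, tolerance: float = 0.15, window: int = 200):
        self.baseline = baseline
        self.tolerance = tolerance
        self.decisions: deque[bool] = deque(maxlen=window)  # oldest decisions fall off

    def record(self, approved: bool) -> bool:
        """Record one decision; return True if the current window is anomalous."""
        self.decisions.append(approved)
        if len(self.decisions) < self.decisions.maxlen:
            return False  # not enough data yet to judge
        rate = sum(self.decisions) / len(self.decisions)
        return abs(rate - self.baseline) > self.tolerance


monitor = ApprovalRateMonitor(baseline=0.60, window=100)
# Simulate a model that now approves only 1 in 10 applications.
alerts = [monitor.record(i % 10 == 0) for i in range(100)]
print(alerts[-1])
```

Once the window fills, the observed 10% approval rate sits far outside the 60% ± 15% band, so the final call returns `True` and an alert would fire.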

Activity Logging

Detailed audit trails capture inputs, outputs, and reasoning paths. When a customer asks why their loan was denied, or a regulator investigates a pattern of decisions, these logs provide the evidence trail. They transform AI from an inscrutable oracle into an accountable system.
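Agent identifiers and activity logging work together: each audit record carries the ID of the system that produced it. The sketch below assumes a hypothetical `name-uuid` ID scheme and a JSON-lines record with inputs, output, and a reasoning summary; the field names and the in-memory `AUDIT_TRAIL` are illustrative, and a real deployment would write to tamper-evident, append-only storage.

```python
import json
import uuid
from datetime import datetime, timezone

AUDIT_TRAIL: list[str] = []  # stand-in for append-only log storage


def register_agent(name: str) -> str:
    """Issue a unique identifier for an AI system (hypothetical scheme)."""
    return f"{name}-{uuid.uuid4()}"


def log_decision(agent_id: str, inputs: dict, output: str, reasoning: str) -> str:
    """Serialize one decision as a JSON audit record and append it to the trail."""
    record = {
        "agent_id": agent_id,  # traceable across systems and time
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "inputs": inputs,
        "output": output,
        "reasoning": reasoning,
    }
    line = json.dumps(record, sort_keys=True)
    AUDIT_TRAIL.append(line)
    return line


scorer_id = register_agent("loan-scorer")
log_decision(scorer_id, {"income": 52000, "requested": 20000}, "deny",
             "debt-to-income ratio above policy limit")
```

When a customer asks why a loan was denied, filtering the trail by `agent_id` and inputs recovers the exact output and stated reasoning.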

The payoff is comprehensive: no more "shadow AI" operating outside oversight, every system tracked and explainable, and accountability built into the technology stack rather than bolted on as an afterthought.

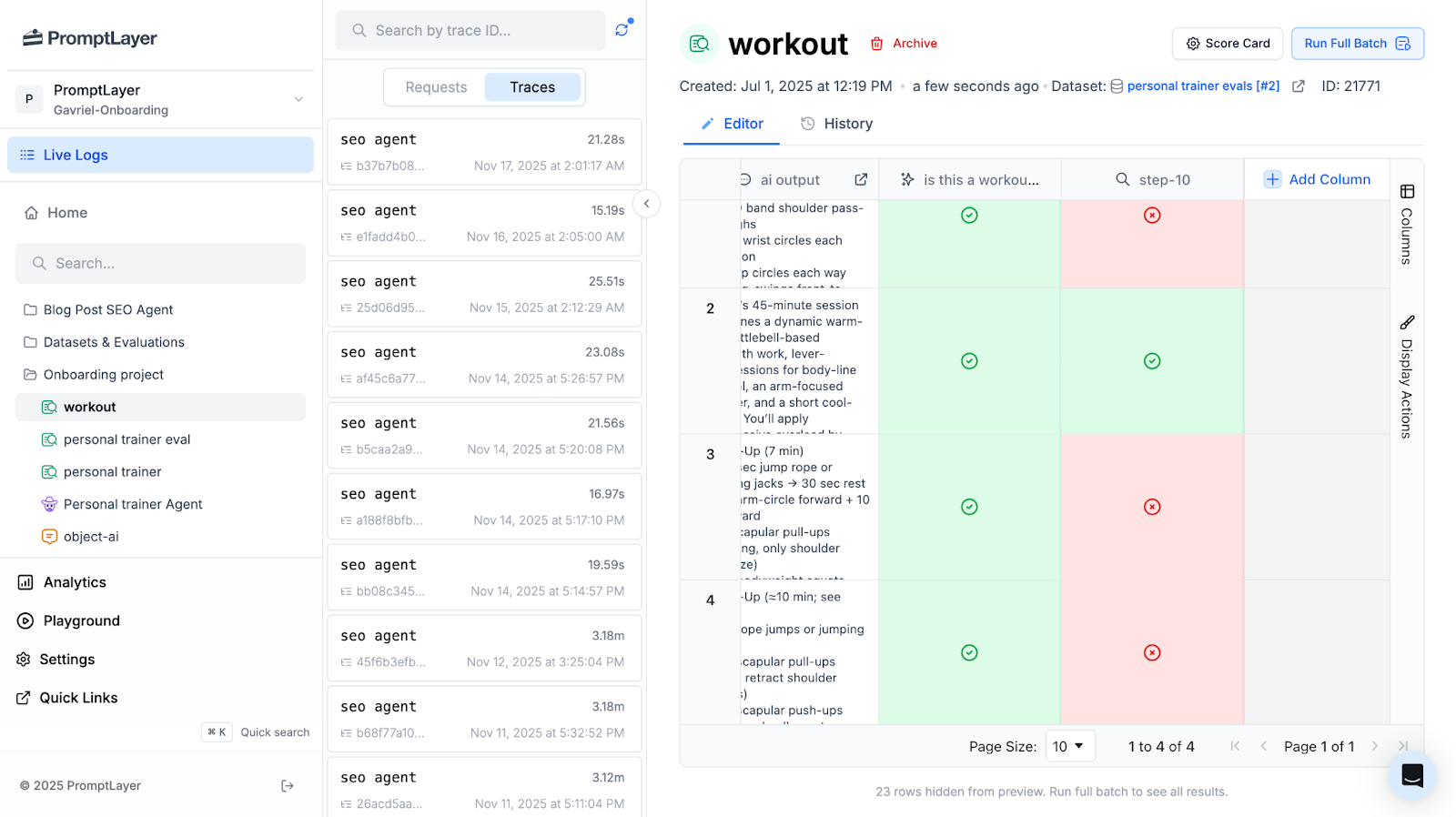

Platforms like PromptLayer operationalize these visibility principles in real production systems, transforming governance ideals into measurable practices. PromptLayer delivers full prompt tracing that captures every AI interaction, recording inputs, outputs, and reasoning pathways for complete audit trails. The platform combines cost monitoring to track AI spending patterns with evaluation pipelines that systematically validate performance against governance standards. By ensuring every AI system is uniquely tagged and traceable, PromptLayer prevents shadow AI deployments while creating accountability chains that connect decisions back to responsible teams. This brings visibility and control directly into the technology stack, making contextual governance enforceable rather than theoretical.

Why Boards Should Care: The AIVO Standard

A new risk has emerged that should alarm every board: companies can become invisible in AI-generated answers. As ChatGPT, Claude, Gemini, and other AI assistants become primary information sources, being absent from their responses is like being delisted from Google in 2010.

The AI Visibility Optimization (AIVO) Standard introduces the Prompt-Space Occupancy Score, a metric measuring how often your brand appears in AI responses to prompts about your industry. In one audit, an airline appeared in responses to 82% of relevant prompts while its competitor, despite heavy marketing spend, showed up in only 27%. That's a massive competitive gap hidden from traditional metrics.
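At its simplest, an occupancy-style score is the share of relevant prompts whose AI answer mentions the brand. The sketch below is an assumption, not the AIVO Standard's published methodology (which may weight prompts, sample multiple assistants, or score prominence); it uses plain case-insensitive substring matching, and the airline names and responses are invented.

```python
def occupancy_score(responses: dict[str, str], brand: str) -> float:
    """Share of prompts whose AI response mentions the brand (case-insensitive)."""
    if not responses:
        return 0.0
    hits = sum(1 for text in responses.values() if brand.lower() in text.lower())
    return hits / len(responses)


# Invented sample: prompt -> collected AI assistant response.
sample = {
    "best airline for transatlantic flights": "Northwind Air and Contoso Airways both fly...",
    "cheapest flights to Lisbon": "Northwind Air often has the lowest fares...",
    "airline with the most legroom": "Northwind Air's extra-legroom seats...",
    "most reliable airline": "Many travelers recommend checking on-time statistics...",
}
print(occupancy_score(sample, "Northwind Air"), occupancy_score(sample, "Contoso Airways"))
```

Here one brand occupies 75% of the prompt space and the other 25%, the same kind of gap the audit above describes.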

Boards must now oversee AI visibility like they monitor cybersecurity or financial risk. The questions are straightforward but urgent:

- Are we discoverable when customers ask AI assistants about our industry?

- How does our AI visibility compare to competitors?

- What happens to our market share if we're invisible in AI channels?

This governance angle extends beyond internal AI use to how external AI systems represent your organization, a new frontier requiring board-level attention and strategic response.

Frameworks Making It Real

Several frameworks are turning these concepts from theory into practice:

Agent Registry

Think of this as a central inventory, a single source of truth listing every AI system in your organization. Each entry includes:

- Purpose and business function

- Owner and responsible team

- Risk classification

- Data access permissions

- Performance metrics

- Compliance status

This prevents "rogue" deployments where teams spin up AI services that fly under the radar of risk management. Major platforms like Collibra and Credal now offer registry features, recognizing this as foundational to governance.
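A registry with the fields listed above can be sketched as an in-memory catalog that also answers the shadow-AI question: which systems seen in production traffic are missing from the inventory? The class and method names here are hypothetical and do not reflect the API of Collibra, Credal, or any other product.

```python
from dataclasses import dataclass, field


@dataclass
class RegistryEntry:
    agent_id: str
    purpose: str                     # purpose and business function
    owner: str                       # owner and responsible team
    risk_class: str                  # e.g. "low", "medium", "high"
    data_permissions: set[str] = field(default_factory=set)
    metrics: dict[str, float] = field(default_factory=dict)
    compliant: bool = False          # compliance status


class AgentRegistry:
    """Single source of truth for deployed AI systems (hypothetical, in-memory)."""

    def __init__(self) -> None:
        self._entries: dict[str, RegistryEntry] = {}

    def register(self, entry: RegistryEntry) -> None:
        if entry.agent_id in self._entries:
            raise ValueError(f"duplicate agent_id: {entry.agent_id}")
        self._entries[entry.agent_id] = entry

    def is_sanctioned(self, agent_id: str) -> bool:
        """Only registered, compliant systems are allowed to run."""
        entry = self._entries.get(agent_id)
        return entry is not None and entry.compliant

    def find_shadow(self, observed_ids: set[str]) -> set[str]:
        """Return IDs seen in traffic that are missing from the registry."""
        return observed_ids - set(self._entries)


registry = AgentRegistry()
registry.register(RegistryEntry("support-bot", "FAQ answers", "cx-team", "low",
                                {"kb_articles"}, {"csat": 4.2}, compliant=True))
print(registry.find_shadow({"support-bot", "pricing-experiment"}))
```

Feeding the registry the agent IDs observed in logs surfaces `pricing-experiment` as an unregistered deployment.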

Zero Trust for AI

Borrowed from cybersecurity, the principle is simple: never trust, always verify. Every AI query is checked against policies, every access is logged, every output is validated. Unlike traditional security that assumes internal systems are safe, Zero Trust assumes nothing and verifies everything.

For instance, even if an AI model is pre-approved, each time it's invoked, the system checks: Is this user authorized? Is this use case approved? Are we logging this interaction? Does the output comply with our policies?
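The four per-invocation questions map directly onto a wrapper that sits in front of every model call. This is a minimal sketch under stated assumptions: the hard-coded `AUTHORIZED_USERS`, `APPROVED_USE_CASES`, and `BLOCKED_TERMS` tables are hypothetical placeholders for an identity provider, an agent registry, and a real output-policy checker, and the substring output check is deliberately naive.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Invocation:
    user: str
    model_id: str
    use_case: str


# Hypothetical policy tables; in practice these come from identity and registry services.
AUTHORIZED_USERS = {"claims-model": {"alice", "bob"}}
APPROVED_USE_CASES = {"claims-model": {"claims_triage"}}
BLOCKED_TERMS = {"social security number"}

access_log: list[tuple[Invocation, str]] = []


def invoke(inv: Invocation, model: Callable[[Invocation], str]) -> str:
    """Verify every call, even to a pre-approved model, and log every attempt."""
    if inv.user not in AUTHORIZED_USERS.get(inv.model_id, set()):
        access_log.append((inv, "denied: user"))           # Is this user authorized?
        raise PermissionError("user not authorized for this model")
    if inv.use_case not in APPROVED_USE_CASES.get(inv.model_id, set()):
        access_log.append((inv, "denied: use case"))       # Is this use case approved?
        raise PermissionError("use case not approved")
    output = model(inv)
    if any(term in output.lower() for term in BLOCKED_TERMS):
        access_log.append((inv, "blocked: output policy"))  # Does the output comply?
        raise ValueError("output violates policy")
    access_log.append((inv, "allowed"))                     # Are we logging this interaction?
    return output
```

Nothing is trusted because it ran yesterday: every call re-checks identity, purpose, and output, and both denials and successes leave a log entry.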

Semantic Layer Governance

AI is only as good as its data, so governance must start at the data layer. A semantic layer acts as a translator and gatekeeper between raw data and AI systems. It ensures:

- Consistent definitions: Every AI uses the same definition of "customer lifetime value"

- Access controls: Marketing AI can't access medical records

- Data quality: Information is cleaned and contextualized before AI consumption

- Lineage tracking: Every data point can be traced from source to AI output
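The four guarantees above can be illustrated with a toy semantic layer: one shared metric definition per name, a domain check per consumer, a basic quality gate, and lineage returned alongside every value. Everything here is a hypothetical sketch; in particular the simplified "customer lifetime value" formula (total revenue divided by distinct customers) is a placeholder, not a recommended definition.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class MetricDefinition:
    name: str
    domain: str                                 # data domain, used for access control
    sources: tuple[str, ...]                    # upstream tables, used for lineage
    formula: Callable[[list[dict]], float]


class SemanticLayer:
    """Hypothetical gatekeeper between raw data and AI consumers."""

    def __init__(self, grants: dict[str, set[str]]):
        self.grants = grants                    # consumer -> domains it may read
        self.metrics: dict[str, MetricDefinition] = {}

    def define(self, metric: MetricDefinition) -> None:
        self.metrics[metric.name] = metric      # one shared definition per metric name

    def query(self, consumer: str, name: str, rows: list[dict]) -> dict:
        metric = self.metrics[name]
        if metric.domain not in self.grants.get(consumer, set()):
            raise PermissionError(f"{consumer} may not read {metric.domain} data")
        clean = [r for r in rows if None not in r.values()]  # minimal quality gate
        return {"value": metric.formula(clean), "lineage": metric.sources}


layer = SemanticLayer({"marketing-ai": {"sales"}, "clinical-ai": {"medical"}})
layer.define(MetricDefinition(
    "customer_lifetime_value", "sales", ("crm.orders",),
    lambda rows: sum(r["revenue"] for r in rows) / len({r["customer_id"] for r in rows}),
))
rows = [{"customer_id": 1, "revenue": 100.0}, {"customer_id": 1, "revenue": 50.0},
        {"customer_id": 2, "revenue": 150.0}, {"customer_id": 3, "revenue": None}]
print(layer.query("marketing-ai", "customer_lifetime_value", rows))
```

The incomplete row is dropped before the formula runs, so the marketing AI gets a value of 150.0 together with its lineage, while a request for a metric in the `medical` domain would raise `PermissionError`.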

Map-Measure-Manage

This operational approach, championed by platforms like Konfer, breaks governance into three actionable steps:

1. Map: Build a comprehensive inventory of all AI assets, their relationships, and dependencies

2. Measure: Establish metrics for performance, risk, bias, and compliance, creating scores for each system

3. Manage: Implement alerts, workflows, and incident response when measurements indicate problems

This creates a continuous improvement cycle where governance evolves with your AI landscape rather than remaining static.
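One pass of the Map-Measure-Manage cycle can be sketched as three small functions. This is an illustrative assumption rather than Konfer's implementation: risk dimensions are scored from 0 (none) to 1 (worst), combined with hypothetical weights, and any asset above a hypothetical threshold is flagged along with everything that depends on it. Rerunning the pass on a schedule is what makes the cycle continuous.

```python
from dataclasses import dataclass, field


@dataclass
class Asset:
    name: str
    depends_on: list[str] = field(default_factory=list)


def map_assets(assets: list[Asset]) -> dict[str, list[str]]:
    """Map: inventory of AI assets and their dependencies."""
    return {a.name: a.depends_on for a in assets}


def measure(metrics: dict[str, dict[str, float]],
            weights: dict[str, float]) -> dict[str, float]:
    """Measure: combine per-dimension risk (0 = none, 1 = worst) into one score."""
    return {name: sum(weights[k] * v for k, v in dims.items())
            for name, dims in metrics.items()}


def manage(inventory: dict[str, list[str]], scores: dict[str, float],
           threshold: float = 0.5) -> list[str]:
    """Manage: alert on high-risk assets and on anything that depends on them."""
    flagged = {n for n, s in scores.items() if s >= threshold}
    impacted = {n for n, deps in inventory.items() if flagged & set(deps)}
    return sorted(flagged | impacted)


inventory = map_assets([Asset("churn-model"),
                        Asset("retention-bot", ["churn-model"]),
                        Asset("faq-bot")])
scores = measure({"churn-model": {"bias": 0.8, "compliance_gap": 0.6},
                  "retention-bot": {"bias": 0.1, "compliance_gap": 0.1},
                  "faq-bot": {"bias": 0.0, "compliance_gap": 0.2}},
                 weights={"bias": 0.5, "compliance_gap": 0.5})
print(manage(inventory, scores))
```

Only `churn-model` crosses the threshold, but because the map records that `retention-bot` depends on it, both end up in the alert list.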

From Governance Theory to Operational Reality

Contextual governance means the right rules for each AI's role. Strategic visibility means always knowing what AI is doing. Together, they transform AI from a mysterious black box into a glass house: powerful yet transparent, autonomous yet accountable.

The stakes couldn't be higher. Trust in your AI systems determines whether customers embrace or abandon them. Competitive advantage hinges on being visible in AI-driven markets while managing AI risks effectively. Legal liability looms for organizations that deploy AI they can't explain or control.

The goal is not to restrict AI but to unleash its potential responsibly. When stakeholders trust AI because they can see and understand its operations, adoption accelerates. When governance is calibrated to context rather than applying blanket restrictions, innovation flourishes within appropriate boundaries.

AI governance is an ongoing practice that requires continuous attention, investment, and evolution. Organizations that master contextual governance and strategic visibility now will lead in the AI era. Those that ignore these imperatives risk becoming either invisible in AI-mediated markets or liable for AI failures they never saw coming.

Treat AI governance with the same rigor as financial controls or cybersecurity. Build visibility into your AI systems from the ground up. Tailor oversight to each AI's specific context and risk profile. Most importantly, recognize that in an AI-driven world, what you can't see can definitely hurt you.