Agentic AI Frameworks: Empowering Autonomous AI Systems

The leap from linear automation to autonomous AI agents has transformed what’s possible with software. No longer are we simply linking together prompt-response scripts or traditional workflows: today’s advanced systems can perceive, plan, take independent actions, and adapt over time, all while handling complexity with minimal human hand-holding. At the heart of this new paradigm are agentic AI frameworks, the runtimes and libraries that give developers the building blocks for reliable, flexible, and safe AI agents in production.

Let’s dig into how these frameworks work, what sets them apart from earlier automation approaches, which frameworks stand out in 2025, and why new interoperability standards and ops layers like PromptLayer are now critical for success.

What Does It Mean to Be “Agentic”?

You’ll hear “agentic” everywhere in AI right now, but the term often blurs into a buzzword. Here’s a simple, working definition:

Agentic AI frameworks power autonomous systems that can:

- Perceive (ingest and interpret state or context)

- Plan (reason and break down objectives into actionable steps)

- Act (invoke tools, APIs, or functions, not just generate text)

- Remember (persist and recall state/memory across interactions)

- Learn (adapt through feedback, self-correction, or explicit evals)

The critical distinction is agency. Instead of simply following static workflows or returning answers when prompted, agentic systems exercise goal-driven control: they can navigate task uncertainty, use external resources, and manage their own state and execution logic - sometimes over hours or days.

Agentic frameworks provide building blocks for:

- Tool integration: Letting agents query APIs, read/write files, trigger workflows, or even control hardware.

- Memory management: Retaining user context, long-term state, or episodic memory for complex, ongoing goals.

- Workflow definition: Supporting chains, branches, loops, and multi-step plans - not just “single prompt, single answer.”

- Durability and human-in-the-loop (HITL): Resuming after crashes, supporting approvals, safely pausing/retrying on error.

- Observability and evaluation: Tracing decisions, logging actions, monitoring health, and evaluating reasoning quality.

That’s a major leap from basic prompt-driven LLM apps or retrieval-augmented generation (RAG) searchbots. When built right, agentic frameworks form the backbone for everything from customer support automations to autonomous data pipelines, research copilots, and even AI-powered coding IDEs.

Why Production-Ready Agentic Systems Demand More Than Smart Prompts

The hype around “AI agents” often overlooks what happens at scale: demo scripts fail spectacularly in real-world apps due to tool failures, runaway prompt loops, missing audit trails, and prompt drift. The agentic frameworks that matter in 2025 solve for production realities:

Durability and Resumability: Agents must survive interruptions or crashes, sometimes running for hours or days and picking up right where they left off. Frameworks like LangGraph and LlamaIndex Workflows offer persistent state and checkpoint-based recovery. This is vital for long-running or multistep tasks.

Human-in-the-loop Controls (HITL): Autonomy doesn’t mean an agent can do everything unsupervised. Production frameworks must offer interrupt points for approvals, failover handling, and explicit checkpoints, ensuring business logic and compliance requirements stay firmly in control. LangGraph, for example, provides native “pause-and-approve” patterns for exactly this reason.

Graph-Based/Workflow Control: Linear chains quickly hit their limits once an agent must branch, retry, loop, or hand off subtasks to other agents. Modern frameworks expose graph-based or event-driven runtime models. You can define explicit flows, set up multi-agent team structures (CrewAI’s Crews and Flows), or use subgraphs to handle complex decision logic.
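What separates a graph from a linear chain is that the next node is chosen at runtime. The sketch below wires hypothetical `draft`, `check`, and `publish` nodes together. A conditional edge loops back to `draft` on failure, and a retry cap prevents endless loops. It mimics the idea behind graph runtimes like LangGraph but is not their API.

```python
from typing import Callable

State = dict  # shared state passed from node to node

def draft(state: State) -> State:
    state["attempts"] += 1
    # Hypothetical behavior: the first draft is too short, the revision passes.
    state["text"] = "ok" if state["attempts"] == 1 else "a longer, acceptable draft"
    return state

def check(state: State) -> State:
    state["valid"] = len(state["text"]) > 10
    return state

def publish(state: State) -> State:
    state["published"] = True
    return state

NODES: dict[str, Callable[[State], State]] = {"draft": draft, "check": check, "publish": publish}

def route_after_check(state: State) -> str:
    # Conditional edge: loop back until valid, or give up after 3 attempts.
    if state["valid"]:
        return "publish"
    return "draft" if state["attempts"] < 3 else "END"

EDGES: dict[str, Callable[[State], str]] = {
    "draft": lambda s: "check",
    "check": route_after_check,
    "publish": lambda s: "END",
}

def run_graph(start: str, state: State) -> State:
    node, path = start, []
    while node != "END":
        path.append(node)
        state = NODES[node](state)
        node = EDGES[node](state)  # next node decided from current state
    state["path"] = path
    return state

result = run_graph("draft", {"attempts": 0, "published": False})
print(result["path"])  # ['draft', 'check', 'draft', 'check', 'publish']
```

Keeping routing in explicit edge functions makes every branch inspectable and testable. With branching buried inside a prompt, you lose that.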

State and Memory Management: Agents need both short-term (request/session) and long-term (persistent) memory. This underpins context-aware conversations, long-running planning, and recall of facts or prior decisions. Most frameworks now offer plug-and-play support for vector stores, databases, or in-memory state layers.
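The sketch below shows both memory tiers. A bounded short-term buffer holds the current session, and a persistent long-term store is queried by relevance. The `Memory` class is hypothetical. Its keyword-overlap scoring is a toy stand-in for the vector similarity search a real store would use.

```python
from collections import deque

class Memory:
    def __init__(self, window: int = 3):
        # Short-term: only the most recent turns, like a context window.
        self.short_term: deque[str] = deque(maxlen=window)
        # Long-term: facts that outlive the session (a DB or vector store in practice).
        self.long_term: list[str] = []

    def add_turn(self, text: str) -> None:
        self.short_term.append(text)  # oldest turn drops off automatically

    def remember(self, fact: str) -> None:
        self.long_term.append(fact)

    def recall(self, query: str, k: int = 1) -> list[str]:
        # Rank stored facts by word overlap with the query
        # (toy stand-in for embedding similarity).
        q = set(query.lower().split())
        ranked = sorted(self.long_term, key=lambda f: len(q & set(f.lower().split())), reverse=True)
        return ranked[:k]

    def context(self, query: str) -> list[str]:
        # What would be sent to the model: relevant long-term facts + recent turns.
        return self.recall(query) + list(self.short_term)

mem = Memory(window=2)
mem.remember("user prefers invoices in euros")
mem.remember("user timezone is UTC+2")
for turn in ["hi", "need last month's invoice", "in my usual currency"]:
    mem.add_turn(turn)
print(mem.context("which currency for invoices"))
```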

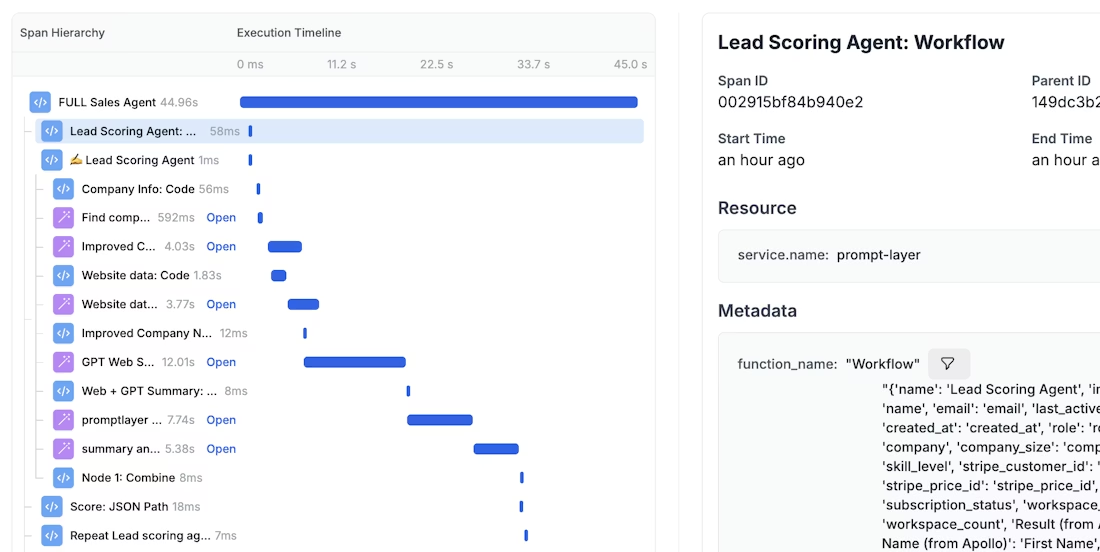

Tracing, Observability, and Evaluation: When an AI agent can trigger actions, tracing its exact logic path becomes essential for debugging, safety, and improvement. Modern stacks, especially with platforms like PromptLayer, offer OpenTelemetry-based trace views, versioned prompt registries, and real-time performance analytics.
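Underneath, a trace is a tree of timed spans, one for each model call and tool invocation. The sketch below builds one with only the standard library, just to show the shape of the data. The `span` helper, the `lookup_order` tool, and the attribute names are hypothetical. A production stack would use the OpenTelemetry SDK and an exporter instead.

```python
import time
from contextlib import contextmanager

TRACE: list[dict] = []  # finished spans (an exporter would ship these)
_stack: list[str] = []  # names of currently open spans, for parent links

@contextmanager
def span(name: str, **attributes):
    record = {"name": name, "parent": _stack[-1] if _stack else None, "attributes": attributes}
    _stack.append(name)
    start = time.perf_counter()
    try:
        yield record
        record["status"] = "ok"
    except Exception as exc:
        record["status"] = f"error: {exc}"  # failed tool calls stay visible
        raise
    finally:
        record["duration_s"] = time.perf_counter() - start
        _stack.pop()
        TRACE.append(record)

def lookup_order(order_id: str) -> str:  # hypothetical tool
    return "shipped"

with span("agent_run", goal="where is my order?"):
    with span("llm_call", model="example-model") as s:
        s["attributes"]["decision"] = "call lookup_order"
    with span("tool_call", tool="lookup_order", order_id="A42") as s:
        s["attributes"]["result"] = lookup_order("A42")

for rec in TRACE:
    print(rec["name"], "<-", rec["parent"], rec["status"], rec["attributes"])
```

Spans are recorded when they close, so children appear before their parent. The `parent` field is what lets a viewer rebuild the tree.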

In short, building agentic AI for production is no longer about experimental “vibes.” It’s a discipline combining controlled autonomy, robust task management, and surgical observability.

The 2025 Agentic AI Framework Landscape: What to Use and When

Dozens of frameworks now claim “agent” capabilities, but real-world teams gravitate toward mature, well-supported options that offer production-grade control, extensibility, and observability.

1. LangChain + LangGraph

- Model: Chain- or graph-based agent orchestration, with built-in persistence, HITL, and advanced control flow.

- Highlights:

  - LangGraph 1.0 (Oct 2025) brought durable execution and native HITL into the mainstream.

  - LangChain’s agent primitives wrap workflows as graphs, supporting branching, multi-agent handoffs, and checkpointing.

  - Tight integration with LangSmith for deployment, tracing, evals, and model monitoring.

- Best for: Teams needing mature workflow controls, resilience, and rich tool ecosystems.

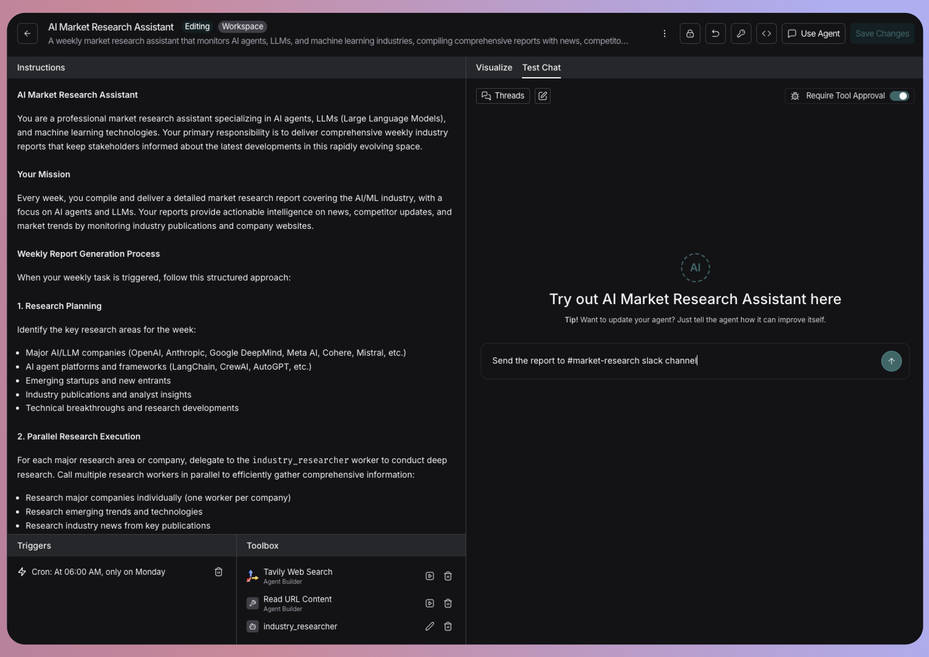

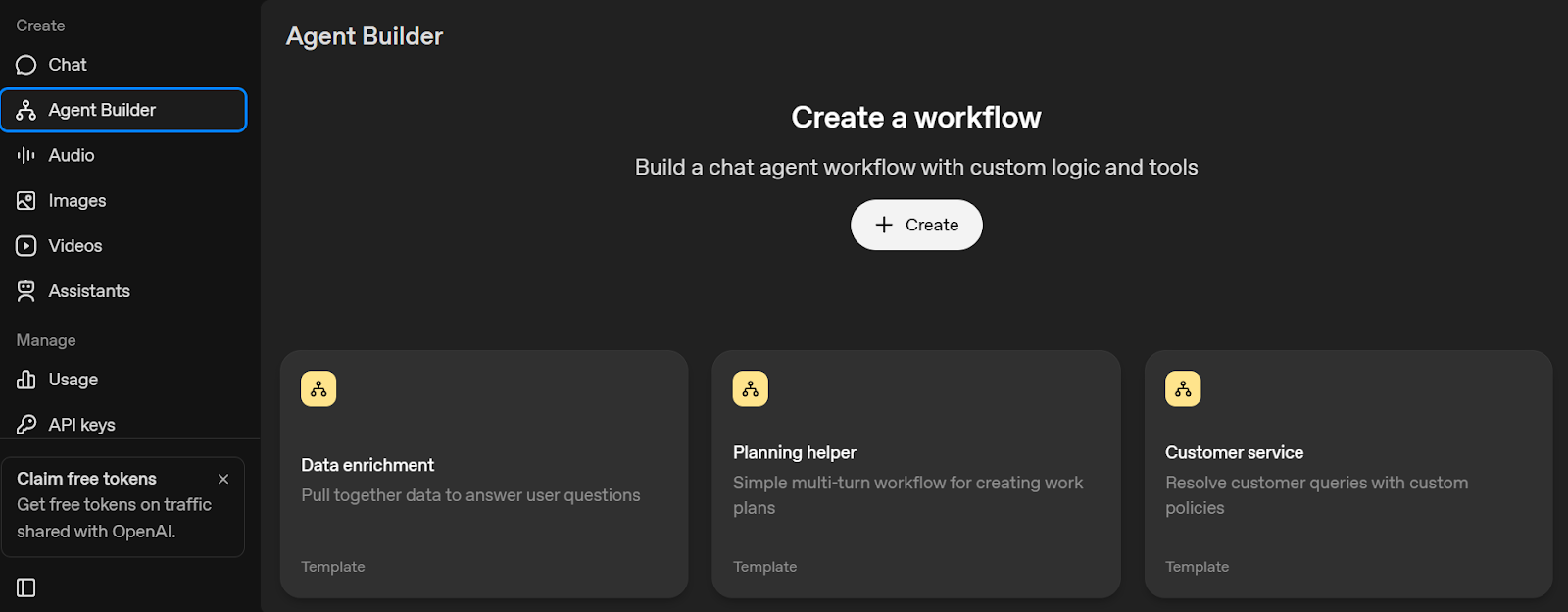

2. CrewAI

- Model: Role-based multi-agent “crews” working collaboratively across tasks/events (with explicit control “flows”).

- Highlights:

  - Crews encapsulate agents in well-defined roles (“manager,” “worker,” etc.), supporting “org chart” style collaboration.

  - Flows allow precise event-driven orchestration, ideal for complex pipelines needing both autonomy and determinism.

- Best for: Pipelines where collaboration/coordination between specialist agents mirrors real-world teams.
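The “org chart” idea can be sketched without any framework. A manager decomposes a goal and routes each subtask to the worker whose role matches. The roles, the fixed task plan, and the `Worker`/`Manager` classes are hypothetical and do not reflect CrewAI’s API, which configures agents, tasks, and crews through its own classes.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Worker:
    role: str
    skill: Callable[[str], str]  # stand-in for an LLM-backed specialist

@dataclass
class Manager:
    workers: dict[str, Worker]

    def plan(self, goal: str) -> list[tuple[str, str]]:
        # Hypothetical fixed decomposition; a manager agent would ask an LLM.
        return [("researcher", goal), ("writer", goal)]

    def run(self, goal: str) -> list[str]:
        outputs, context = [], ""
        for role, task in self.plan(goal):
            worker = self.workers[role]
            # Each worker receives the previous worker's output as context.
            result = worker.skill(f"{task} | context: {context}" if context else task)
            outputs.append(f"{worker.role}: {result}")
            context = result
        return outputs

crew = Manager(workers={
    "researcher": Worker("researcher", lambda t: f"3 facts about {t.split(' |')[0]}"),
    "writer": Worker("writer", lambda t: f"draft using [{t.split('context: ')[-1]}]"),
})
for line in crew.run("solar panels"):
    print(line)
```

Only the manager knows the overall plan, and each worker sees only its own task plus the handed-over context. That separation is what makes role-based crews easier to reason about than one monolithic prompt.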

3. Microsoft’s AutoGen and Semantic Kernel (Agent Framework)

- Model: Agent orchestration with single/multi-agent support, strong workflow and enterprise hooks.

- Highlights:

  - AutoGen now directs users toward Microsoft Agent Framework, the company’s unification of AutoGen-style agent orchestration with Semantic Kernel’s enterprise foundations, adding robust workflow and HITL controls.

  - Semantic Kernel, with SDKs for C#/.NET, Python, and Java, targets enterprise use cases: plugin architectures, strong type-safety, and state/thread abstractions.

- Best for: Enterprise scenarios needing guardrails, cross-agent threading, and compliance-oriented pipelines.

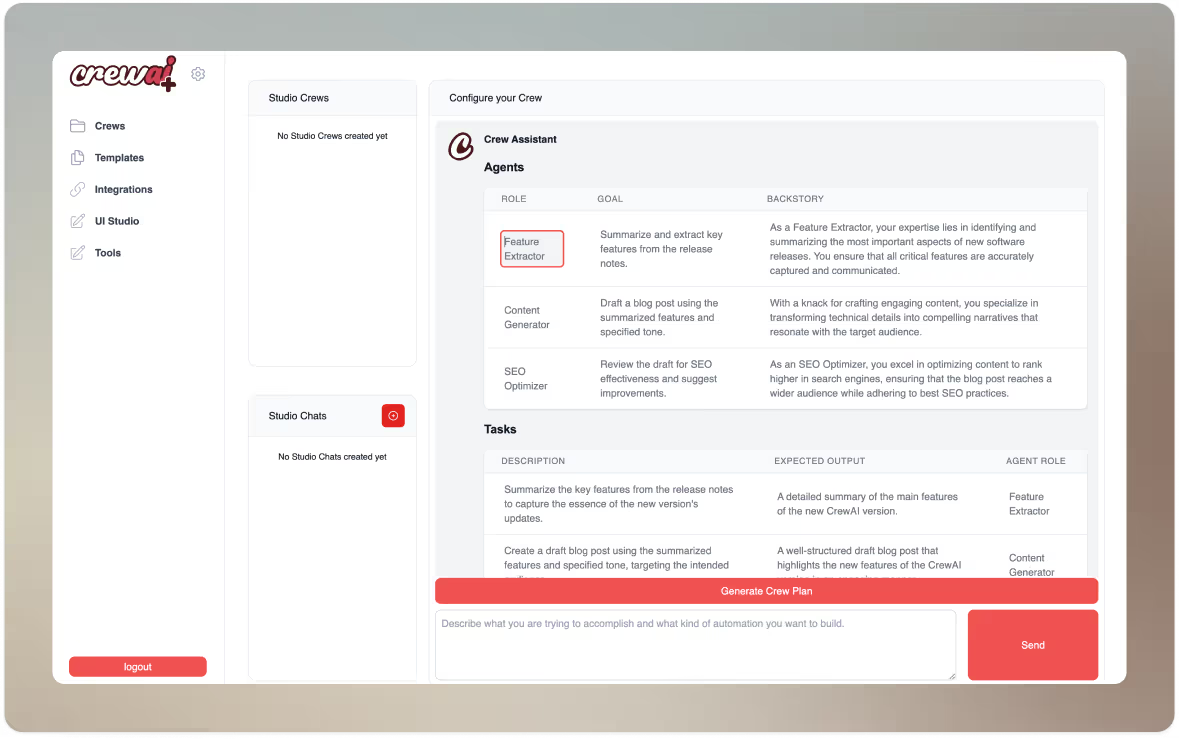

4. OpenAI Agents SDK and Responses API

- Model: Provider-agnostic SDK with first-class tracing, handoffs, and streaming; new Responses API for built-in tool use.

- Highlights:

  - Agents SDK supports multi-step reasoning, streaming output, tool calls, and high-fidelity trace logs.

  - Responses API provides off-the-shelf, model-accessible built-in tools (web search, file search, computer use).

- Best for: OpenAI-centric teams building agentic apps with built-in evaluation, or those wanting a clean, provider-neutral orchestration SDK.

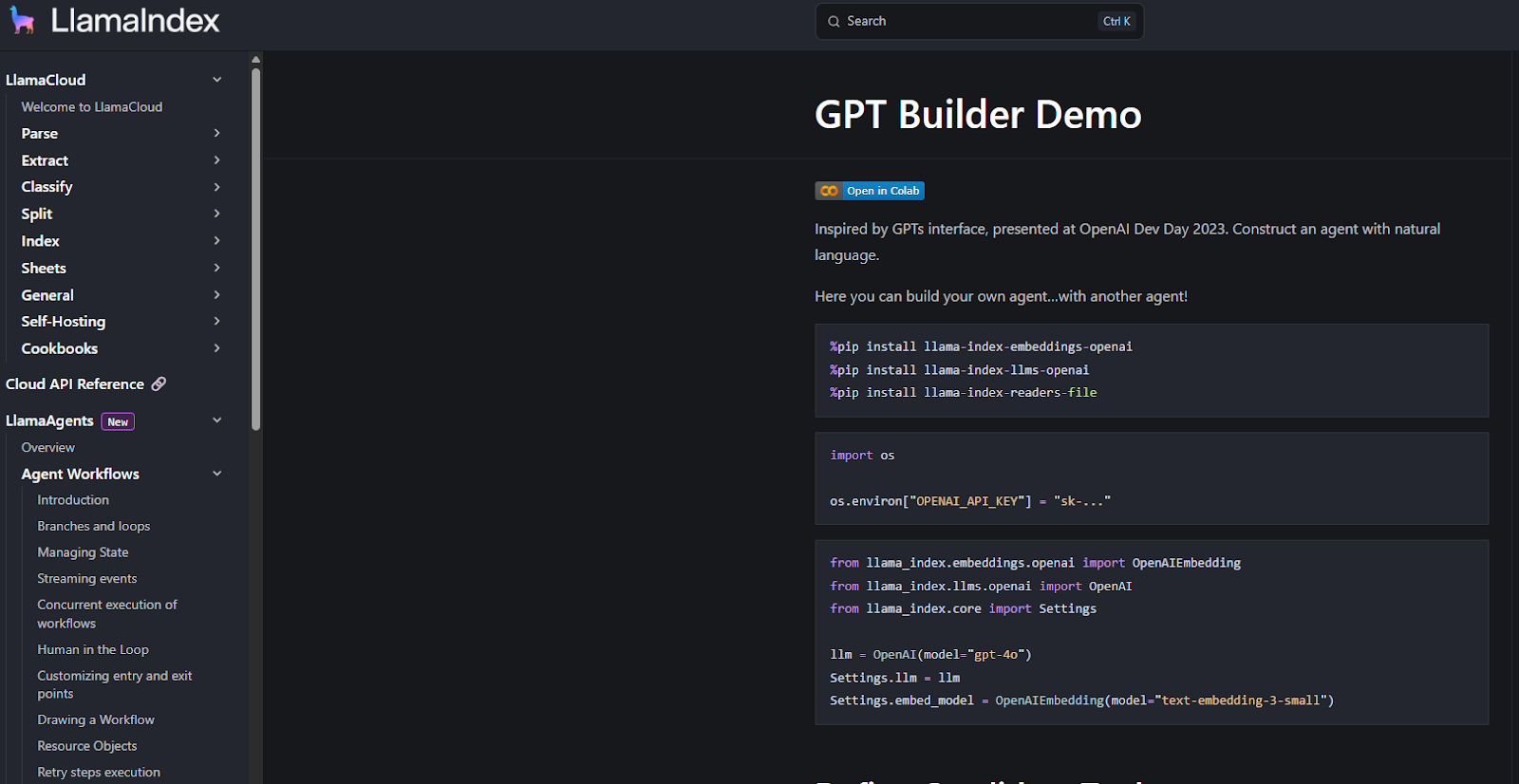

5. LlamaIndex Workflows (+ Agent Workflows)

- Model: Lightweight orchestration layer, especially good for retrieval-augmented and data-driven agents.

- Highlights:

  - Workflows 1.0 delivers standalone event-driven chaining plus strong observability plugin support.

  - Agent Workflows support one-to-many agent coordination, real-time streaming, and multi-agent handoffs.

- Best for: Teams prioritizing AI-infused data pipelines, tight retrieval integration, or needing a small-footprint orchestrator.
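In an event-driven workflow, each step consumes one event type and emits the next. The runtime keeps dispatching until a terminal event appears. The minimal dispatcher below illustrates that model. The `QueryEvent`/`RetrievedEvent`/`DoneEvent` classes and the two-document corpus are hypothetical. LlamaIndex Workflows defines its own event classes and step decorators, which this code does not reproduce.

```python
from dataclasses import dataclass

@dataclass
class QueryEvent:
    query: str

@dataclass
class RetrievedEvent:
    query: str
    docs: list[str]

@dataclass
class DoneEvent:
    answer: str

CORPUS = ["MCP connects agents to tools", "AGENTS.md guides coding agents"]  # toy data

def retrieve(ev: QueryEvent) -> RetrievedEvent:
    # Naive keyword retrieval standing in for a vector index.
    words = set(ev.query.lower().split())
    hits = [d for d in CORPUS if words & set(d.lower().split())]
    return RetrievedEvent(ev.query, hits)

def synthesize(ev: RetrievedEvent) -> DoneEvent:
    # Stand-in for an LLM call that answers from the retrieved docs.
    return DoneEvent(" / ".join(ev.docs) or "no matching documents")

# Each step is registered against the event type it consumes.
HANDLERS = {QueryEvent: retrieve, RetrievedEvent: synthesize}

def run_workflow(event) -> str:
    while not isinstance(event, DoneEvent):
        event = HANDLERS[type(event)](event)
    return event.answer

print(run_workflow(QueryEvent("what does MCP do")))
```

Steps are coupled only through event types, so a new step (say, a reranker) can be slotted in by adding an event and a handler without touching the others.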

6. Other Notables

- PydanticAI: Production-ready Python agent framework with robust type-safety and built-in observability, ideal for teams emphasizing structured outputs.

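- LlamaIndex (core), CAMEL, MetaGPT: Each brings a specific flavor. LlamaIndex’s core library centers on semantic indexing and retrieval, CAMEL on “role-playing” communicative agents and multi-agent societies, and MetaGPT on role-based agent teams that simulate a software company.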

What to look for when choosing a framework:

- Does it support durable state, recovery, and pause/resume flows?

- Are human-in-the-loop and approval checkpoints easy to add?

- Does tracing/explainability cover tool calls and state updates?

- Are integrations (APIs, data sources) pluggable, standardized, and secure?

- Is there support for multi-agent “teams,” or only single-agent loops?

- What’s the story for memory, privacy, and compliance?

No single framework is perfect for every application. Pair your choice with clear requirements - durability, HITL, ecosystem breadth, or observability - and remember that the tech is still evolving rapidly.

Why Interoperability Is the New Baseline: The Rise of Standards

The agentic ecosystem just took a leap forward with the December 2025 formation of the Agentic AI Foundation (AAIF), now stewarding open standards like the Model Context Protocol (MCP) and AGENTS.md.

What do these standards bring to the table?

- MCP: A universal, open protocol for connecting agents to external tools, APIs, and environments, decoupling agents from hardcoded integrations and allowing seamless “plugin” discovery.

- AGENTS.md: An open, Markdown-based format for giving coding agents project-specific instructions (setup and test commands, code style, conventions), now used by 60,000+ open-source projects as of Dec 2025.

- Impact: Over 10,000 MCP servers have been published, and MCP is supported across major platforms (Anthropic Claude, OpenAI ChatGPT, Google Gemini, Microsoft Copilot, and more).
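Under the hood, MCP messages are JSON-RPC 2.0. The toy in-process server below answers `tools/list` and `tools/call` requests for one hypothetical `get_weather` tool, just to show the message shapes. A real server would also perform an `initialize` handshake and run over a transport such as stdio or HTTP. It would normally be built with an official MCP SDK rather than by hand.

```python
import json

# Hypothetical tool exposed by this toy server, described with a JSON Schema.
TOOLS = [{
    "name": "get_weather",
    "description": "Current weather for a city",
    "inputSchema": {
        "type": "object",
        "properties": {"city": {"type": "string"}},
        "required": ["city"],
    },
}]

def get_weather(city: str) -> str:
    return f"Sunny in {city}"  # canned response for illustration

def error(req_id, code: int, message: str) -> str:
    return json.dumps({"jsonrpc": "2.0", "id": req_id, "error": {"code": code, "message": message}})

def handle(raw: str) -> str:
    req = json.loads(raw)
    method = req.get("method")
    if method == "tools/list":
        result = {"tools": TOOLS}  # discovery: what can this server do?
    elif method == "tools/call":
        name = req["params"]["name"]
        if name != "get_weather":
            return error(req["id"], -32602, f"Unknown tool: {name}")  # invalid params
        text = get_weather(**req["params"]["arguments"])
        result = {"content": [{"type": "text", "text": text}], "isError": False}
    else:
        return error(req["id"], -32601, "Method not found")  # standard JSON-RPC code
    return json.dumps({"jsonrpc": "2.0", "id": req["id"], "result": result})

# The host discovers tools, then invokes one by name with structured arguments.
listing = json.loads(handle(json.dumps({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})))
call = {"jsonrpc": "2.0", "id": 2, "method": "tools/call",
        "params": {"name": "get_weather", "arguments": {"city": "Lisbon"}}}
reply = json.loads(handle(json.dumps(call)))
print([t["name"] for t in listing["result"]["tools"]])
print(reply["result"]["content"][0]["text"])
```

The agent never hardcodes `get_weather`; it learns the tool’s name and input schema from `tools/list`. That discovery step is what decouples agents from bespoke integrations.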

These standards make it easier for tools, platforms, and frameworks to interoperate - lowering integration costs and protecting teams from lock-in. Now, adding a new API or tool connector is far less painful, and your agent can join a growing ecosystem rather than being trapped in bespoke code.

Agent Ops in Practice: Best Patterns for Combining Agentic Frameworks with PromptLayer

Once you’ve chosen a runtime, keep in mind: building agentic systems is just the start. The real challenge is operating, monitoring, and iterating on those systems - ensuring they adapt as requirements change and remain safe as you scale.

PromptLayer shines as the glue layer in this process:

- Prompt registry and versioning: Version prompts, labels, and evals separately from code - crucial for regression testing and rapid experiments.

- Trace-based debugging: View every prompt, model call, tool invocation, and state change in a single, queryable timeline.

- Automated eval gates: Gate releases on eval metrics - if a prompt fails regression, roll it back or flag for investigation.

- OpenTelemetry integration: Plug traces into your existing monitoring, so anomalies and regressions never fly under the radar.
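An eval gate compares a candidate prompt version against the current baseline on a fixed regression set. The candidate is promoted only if quality does not drop. The sketch below uses hypothetical version labels, a toy exact-match scorer, and canned model outputs. It does not call PromptLayer’s API, whose registry and evaluation features would supply these pieces in practice.

```python
from dataclasses import dataclass

@dataclass
class EvalCase:
    input: str
    expected: str

TEST_SET = [
    EvalCase("2+2", "4"),
    EvalCase("capital of France", "Paris"),
    EvalCase("3*3", "9"),
]

# Hypothetical canned outputs per prompt version (a real gate would run the model).
OUTPUTS = {
    "v1": {"2+2": "4", "capital of France": "Paris", "3*3": "6"},
    "v2": {"2+2": "4", "capital of France": "Paris", "3*3": "9"},
    "v3": {"2+2": "4", "capital of France": "Lyon", "3*3": "8"},
}

def score(version: str) -> float:
    # Exact-match accuracy over the regression set.
    hits = sum(OUTPUTS[version][c.input] == c.expected for c in TEST_SET)
    return hits / len(TEST_SET)

def gate(candidate: str, baseline: str, tolerance: float = 0.0) -> str:
    cand, base = score(candidate), score(baseline)
    if cand + tolerance >= base:
        return f"promote {candidate} ({cand:.2f} vs {base:.2f})"
    return f"block {candidate}: regression ({cand:.2f} vs {base:.2f})"

print(gate("v2", baseline="v1"))
print(gate("v3", baseline="v1"))
```

The `tolerance` parameter is a design choice. Allowing a small drop can make sense for noisy LLM-graded metrics, while exact-match tests usually warrant zero tolerance.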

This operational rigor complements any major agentic framework, allowing you to optimize reasoning steps, spot drifts, and keep systems accountable - even as autonomy grows. PromptLayer empowers agent engineering teams to move fast and build reliably.

Safety and Governance: Never an Afterthought

With agency comes risk. An agent with tool-use powers can move money, update databases, or trigger real-world workflows - so production frameworks must support:

- Secrets management and approval steps (never let an agent execute sensitive actions unsupervised).

- Audit logging and redaction policies (trace every action, control data retention, enable incident postmortems).

- Access control and state boundaries (grant granular permissions, sandbox dangerous tool calls, and verify compliance).
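Granular permissions can be enforced at the point where an agent’s tool call is dispatched. The dispatcher checks the caller’s allowlist and writes an audit record whether the call is allowed or denied. The roles, tools, and `dispatch` function below are hypothetical.

```python
from datetime import datetime, timezone

# Hypothetical least-privilege policy: each agent role gets an explicit allowlist.
PERMISSIONS = {
    "support_agent": {"read_ticket", "reply_ticket"},
    "finance_agent": {"read_ticket", "issue_refund"},
}
AUDIT_LOG: list[dict] = []

def dispatch(role: str, tool: str, args: dict) -> str:
    allowed = tool in PERMISSIONS.get(role, set())  # unknown roles get nothing
    # Audit every attempt, including denials, for incident postmortems.
    AUDIT_LOG.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "role": role, "tool": tool, "args": args, "allowed": allowed,
    })
    if not allowed:
        raise PermissionError(f"{role} may not call {tool}")
    return f"{tool} ok"  # stand-in for the real tool call

print(dispatch("support_agent", "reply_ticket", {"id": 7}))
try:
    dispatch("support_agent", "issue_refund", {"id": 7, "amount": 40})
except PermissionError as err:
    print("denied:", err)
print([(e["tool"], e["allowed"]) for e in AUDIT_LOG])
```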

Always treat logs and traces as potential sensitive information. Use frameworks that support encrypted storage and strong governance controls, and bake in human intervention at key risk points, especially for critical operations.
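Treating traces as sensitive usually starts with scrubbing them before they are stored or exported. The minimal sketch below assumes regex rules for a few hypothetical secret formats: an `sk-`-prefixed key, email addresses, and card-like digit runs. Real deployments would pair such rules with field-level allowlists and encrypted storage, since patterns inevitably miss some formats.

```python
import re

# Hypothetical redaction rules: (pattern, replacement), applied in order.
REDACTION_RULES = [
    (re.compile(r"\bsk-[A-Za-z0-9]{16,}\b"), "[REDACTED_API_KEY]"),
    (re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"), "[REDACTED_EMAIL]"),
    (re.compile(r"\b(?:\d[ -]?){13,16}\b"), "[REDACTED_CARD]"),
]

def redact(value):
    # Recursively scrub strings inside dicts/lists so nested trace attributes
    # are covered; returns a new structure and leaves the input untouched.
    if isinstance(value, str):
        for pattern, replacement in REDACTION_RULES:
            value = pattern.sub(replacement, value)
        return value
    if isinstance(value, dict):
        return {k: redact(v) for k, v in value.items()}
    if isinstance(value, list):
        return [redact(v) for v in value]
    return value

trace_span = {
    "tool": "charge_card",
    "args": {"card": "4111 1111 1111 1111", "email": "ana@example.com"},
    "headers": ["Authorization: Bearer sk-abcdefghijklmnop1234"],
}
print(redact(trace_span))
```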

The New Blueprint for Autonomous AI Is Here

Agentic AI frameworks are now the backbone of next-generation software, offering every component necessary to turn a prompt-response model into a true autonomous system, complete with planning, memory, tool use, workflow control, and real-time visibility. With the emergence of open standards like MCP and AGENTS.md, now stewarded by the AAIF, the days of fragmented, ad-hoc integrations are waning, giving way to cohesive, future-proof stacks.
