Where to Build AI Agents: n8n vs. PromptLayer

When you're having trouble getting one prompt to work, try splitting it up into 2, 3, or 10 different prompt workflows. When prompts work together to solve a complex problem, that's an AI agent.

What Are AI Agents and What Are They Used For

AI agents are autonomous software programs designed to interact with their environment, make decisions, and perform tasks to achieve specific goals (How to Build AI Agents for Beginners: A Step-by-Step Guide). An AI agent might take a user’s request, decide on a series of steps (which could include calling external tools or APIs), and then return a result or execute an action — all without constant human guidance.

Common use cases for AI agents span both user-facing applications and internal workflows.

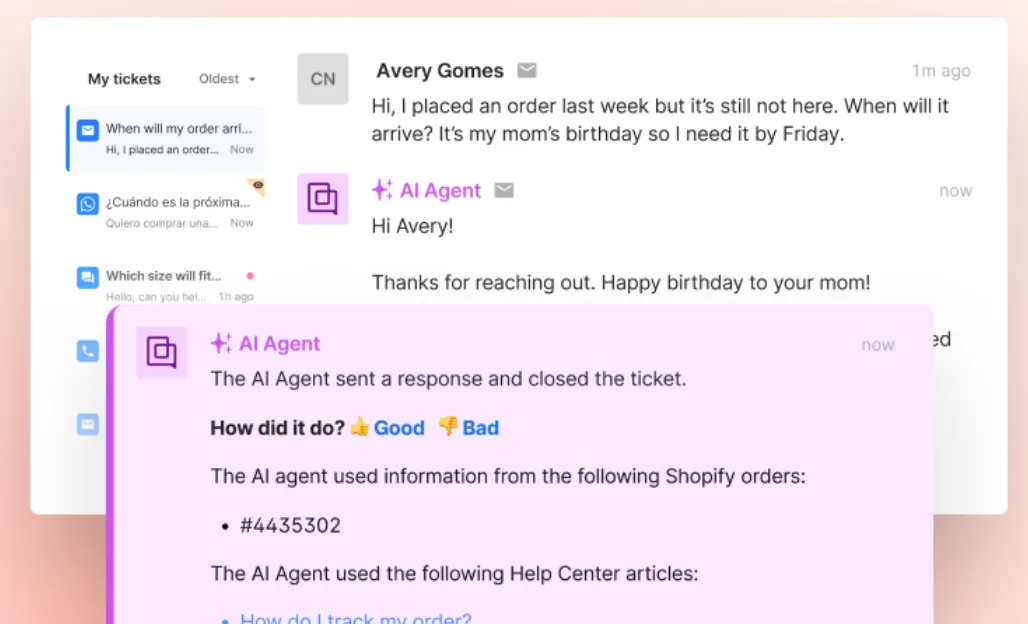

On the user-facing side, AI agents power chatbots and virtual assistants that can hold conversations, answer questions, or provide recommendations (for example, a customer support chatbot on a website or an AI assistant in a mobile app). They can also drive features like personalized tutoring apps, AI writing assistants, or even game NPCs that react intelligently to players.

On the internal automation side, AI agents are used to streamline business processes: they might summarize and route support tickets to the right team, generate reports or insights from data, or handle routine communications. For instance, an AI agent could be set up to read incoming emails and automatically draft replies or categorize them by urgency. By chaining together multiple AI calls and business rules, agents can handle tasks such as triaging customer requests, composing thousands of personalized sales emails a day, or monitoring and analyzing documents — tasks that would be tedious or error-prone for humans to do manually.
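The chaining of AI calls and business rules described above can be sketched in a few lines of Python. This is a minimal illustration rather than any platform's API: `triage_email`, `TriageResult`, and the `keyword_llm` stand-in are hypothetical names, and a real agent would swap `keyword_llm` for an actual model call.

```python
from dataclasses import dataclass
from typing import Callable

# An "LLM call" is modeled as any function from prompt text to response text.
LLM = Callable[[str], str]


@dataclass
class TriageResult:
    urgency: str
    queue: str
    draft_reply: str


def triage_email(body: str, llm: LLM) -> TriageResult:
    # Step 1 (AI call): classify the urgency of the email.
    urgency = llm(f"Classify urgency as high or low:\n{body}").strip().lower()
    # Step 2 (business rule): route based on the classification.
    queue = "on-call" if urgency == "high" else "backlog"
    # Step 3 (AI call): draft a reply for a human to review.
    draft = llm(f"Draft a short reply to this {urgency}-urgency email:\n{body}")
    return TriageResult(urgency=urgency, queue=queue, draft_reply=draft)


def keyword_llm(prompt: str) -> str:
    """Toy stand-in for a real model so the sketch runs offline."""
    if prompt.startswith("Classify"):
        return "high" if "outage" in prompt.lower() else "low"
    return "Thanks for reaching out; we are looking into it."


if __name__ == "__main__":
    print(triage_email("Production outage since 9am", keyword_llm))
```

Passing the model in as a plain function keeps each step testable on its own, which matters once the chain grows past two or three calls.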

PromptLayer: Fine-Grained Control for Production Agents

PromptLayer is a platform built specifically for managing and deploying AI agents (and the prompts that drive them) at production scale. It offers deep control, robust versioning, prompt evals, and comprehensive observability suited to both small-team and enterprise use cases.

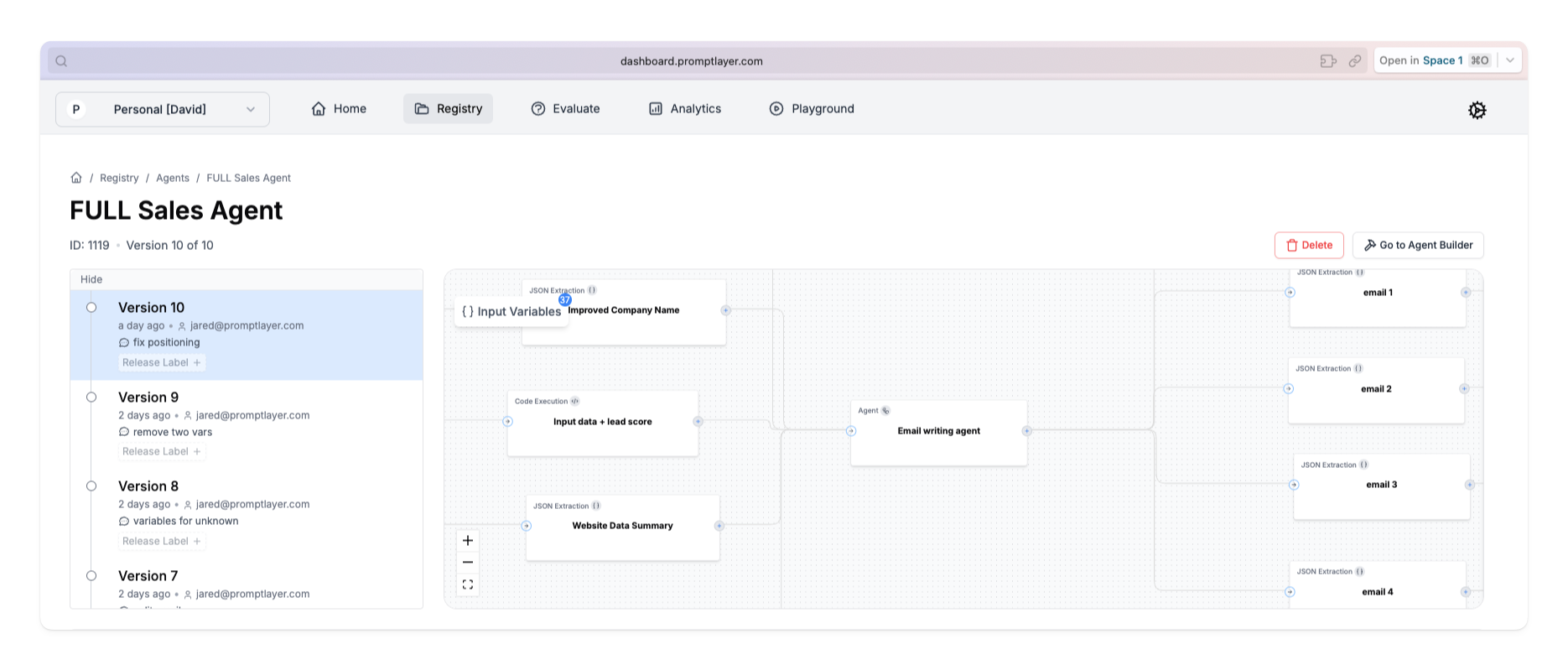

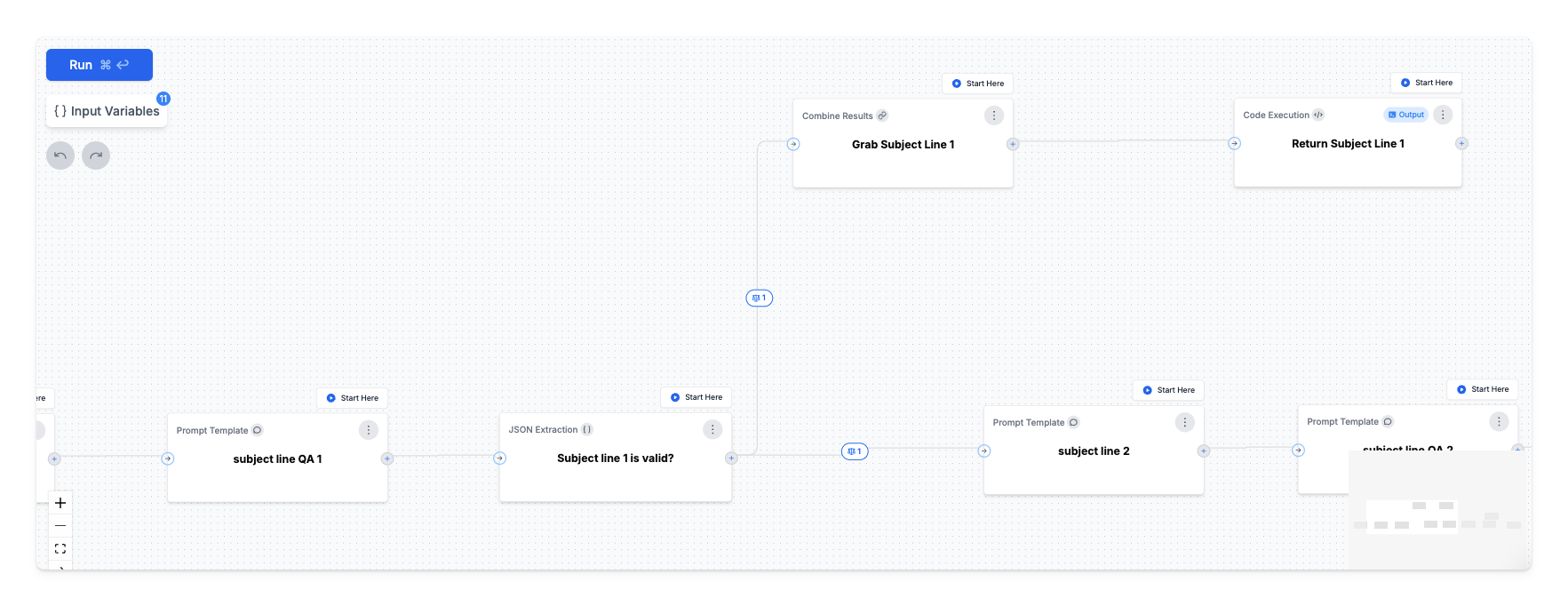

PromptLayer’s agent infrastructure allows developers (and even non-developers) to visually design AI workflows that can include multiple LLM calls, conditional logic, and external API calls (Agents - PromptLayer). The idea is to let you create and iterate on complex AI behaviors without having to build and maintain a custom backend from scratch.

Fine-Grained Prompt Tuning and Management

At the core of PromptLayer is a “prompt registry,” essentially version control and management for your AI prompts.

Every prompt used by your agent can be tracked, experimented on, and improved over time. You can A/B test different prompt phrasings, monitor which version performs best, and roll out updates gradually. This level of prompt tuning is crucial in production because small wording changes can significantly affect an AI’s output.

PromptLayer treats prompts as first-class citizens: you can store them, label them, and evaluate them systematically. For example, a team might maintain one prompt version for a QA chatbot’s tone and another version for its fallback behavior, and PromptLayer makes it easy to switch or update those versions in the live agent. This emphasis on prompt management means your AI agent’s behavior can be finely adjusted and optimized over time, much like how code is versioned and improved.
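To make the idea of versioned, labeled prompts concrete, here is a small in-memory sketch. It is not PromptLayer's API: `PromptRegistry`, `publish`, `label`, `get`, and `ab_bucket` are hypothetical names chosen for illustration, and the hash-based split is just one common way to assign users to an A/B variant deterministically.

```python
import hashlib
from collections import defaultdict


class PromptRegistry:
    """Toy registry: every publish creates a new immutable version."""

    def __init__(self) -> None:
        self._versions: dict[str, list[str]] = defaultdict(list)
        self._labels: dict[tuple[str, str], int] = {}

    def publish(self, name: str, template: str) -> int:
        self._versions[name].append(template)
        return len(self._versions[name])  # versions are 1-based

    def label(self, name: str, label: str, version: int) -> None:
        if not 1 <= version <= len(self._versions[name]):
            raise ValueError(f"{name} has no version {version}")
        # Moving a label is how a rollout or rollback happens.
        self._labels[(name, label)] = version

    def get(self, name: str, label: str = "prod") -> str:
        return self._versions[name][self._labels[(name, label)] - 1]


def ab_bucket(user_id: str, rollout_percent: int) -> str:
    """Deterministically send rollout_percent% of users to the candidate."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    return "candidate" if int(digest, 16) % 100 < rollout_percent else "prod"


if __name__ == "__main__":
    registry = PromptRegistry()
    v1 = registry.publish("qa-tone", "Answer politely: {question}")
    v2 = registry.publish("qa-tone", "Answer briefly: {question}")
    registry.label("qa-tone", "prod", v1)
    registry.label("qa-tone", "candidate", v2)
    print(registry.get("qa-tone", ab_bucket("user-42", rollout_percent=10)))
```

Because labels point at versions instead of copying text, a gradual rollout only changes `rollout_percent`, and a rollback only moves the `prod` label.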

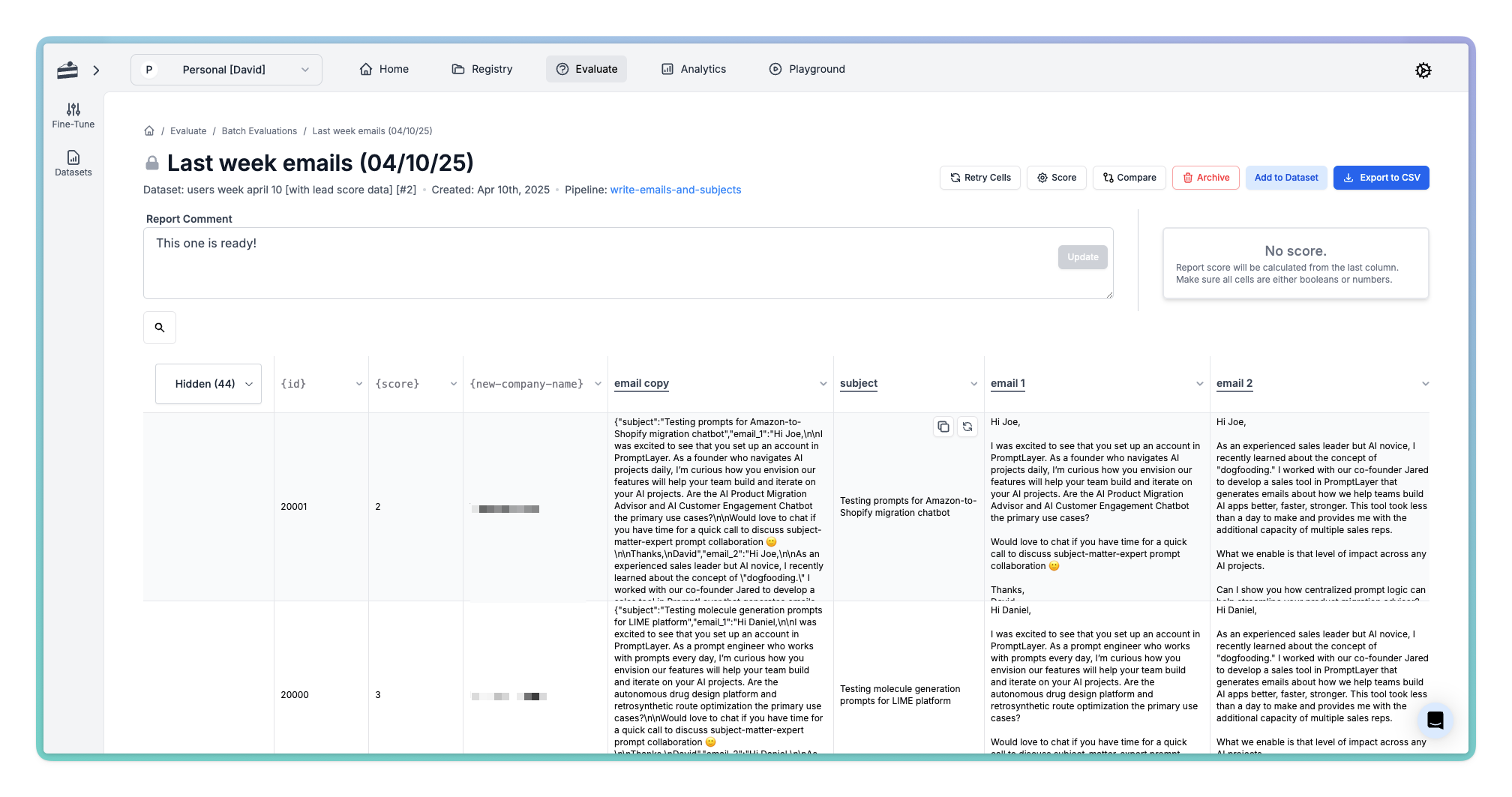

Batch Runs over datasets and CSVs

PromptLayer lets you schedule or trigger agents to run over large datasets—CSV, JSON, or native PromptLayer Datasets. The batch runner spins up isolated executions for each row and streams logs in real time, so you can monitor thousands of calls per minute without blowing past rate limits. Results are captured in a structured table where you can filter, compare prompt versions, and export back to your data warehouse. Typical scenarios include mass‑personalized email campaigns, back‑filling app content, or reprocessing historical tickets whenever a prompt variant changes.
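The shape of a batch run (one isolated execution per row, bounded concurrency, results in a table) can be approximated with the Python standard library. This is a sketch under assumptions, not PromptLayer's batch runner: `run_batch` and `greeting_agent` are hypothetical, and a fixed-size worker pool stands in for real rate limiting.

```python
import csv
import io
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def run_batch(csv_text: str, agent: Callable[[dict], str],
              max_workers: int = 4) -> list[dict]:
    """Run `agent` once per CSV row and return rows with an added `output`."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    # The pool size caps in-flight calls; a crude substitute for a rate limiter.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        outputs = list(pool.map(agent, rows))  # map() preserves input order
    return [{**row, "output": out} for row, out in zip(rows, outputs)]


def greeting_agent(row: dict) -> str:
    """Toy agent; a real one would call an LLM with a prompt template."""
    return f"Hi {row['name']}, welcome from the {row['company']} team!"


if __name__ == "__main__":
    data = "name,company\nAda,Acme\nLin,Initech\n"
    for result in run_batch(data, greeting_agent, max_workers=2):
        print(result)
```

Keeping the input columns alongside the output makes it straightforward to rerun the same rows against a different prompt version and compare the two tables.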

Stateless Agent Design for Predictability

PromptLayer agents are designed with a stateless architecture, meaning each run of the agent is independent of previous runs. This statelessness ensures predictability, repeatability, and reliability in agent behavior. Developers can precisely replicate any interaction, making troubleshooting straightforward and significantly reducing uncertainty. For example, if a user reports unexpected behavior from an agent interaction, teams can quickly replicate the scenario exactly as it occurred, pinpointing and resolving issues efficiently.

The real reason agents are so hard for teams to build is that they are impossible to debug. Stateless agents solve this: teams can write unit tests and integration tests, and reproduce errors triggered by user actions.
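A stateless run can be pictured as a pure function of its request: if the full request is logged, the run can be replayed later. The sketch below assumes hypothetical names (`run_agent`, `log_run`, `replay`) and uses a deterministic stand-in instead of a model; with a real LLM, replay reproduces the exact inputs, while byte-identical outputs are not guaranteed.

```python
import json


def run_agent(request: dict) -> dict:
    """Everything the run needs arrives in `request`; nothing is read from
    globals, caches, or earlier runs."""
    message = request["message"]
    history = request["history"]
    prompt_version = request["prompt_version"]
    # Deterministic stand-in for an LLM call.
    reply = f"[v{prompt_version}] You said: {message} (turn {len(history) + 1})"
    return {"reply": reply}


def log_run(request: dict, response: dict) -> str:
    """Serialize the complete input and output of one run as a log line."""
    return json.dumps({"request": request, "response": response}, sort_keys=True)


def replay(log_line: str) -> bool:
    """Re-run a logged request and report whether the output still matches."""
    record = json.loads(log_line)
    return run_agent(record["request"]) == record["response"]


if __name__ == "__main__":
    request = {"message": "hello", "history": ["hi"], "prompt_version": 3}
    line = log_run(request, run_agent(request))
    print(replay(line))
```

The same logged lines double as regression fixtures: a `replay` that stops matching after a prompt change shows exactly which inputs were affected.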

Unit Testing for Regression Safety

Every agent endpoint can be paired with a test suite of input/output assertions or metric targets (e.g., classification accuracy ≥ 0.9). Tests run automatically on each prompt change and surface diffs inline—similar to CI for code. PromptLayer’s eval batch runner supports golden‑set tests, randomized Monte‑Carlo cases, and hallucination checks by chaining to external validators. Green tests gate deployments, allowing PMs and non‑technical editors to tweak copy while engineering keeps safeguards in place.
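The metric-target style of test mentioned above (for example, classification accuracy ≥ 0.9) reduces to a small gate function. This is an illustrative sketch, not PromptLayer's eval runner: `accuracy`, `gate_deployment`, the golden set, and the `keyword_classifier` stand-in are all hypothetical.

```python
from typing import Callable

GoldenSet = list[tuple[str, str]]  # (input text, expected label)


def accuracy(classify: Callable[[str], str], golden: GoldenSet) -> float:
    if not golden:
        raise ValueError("golden set must not be empty")
    correct = sum(1 for text, expected in golden if classify(text) == expected)
    return correct / len(golden)


def gate_deployment(classify: Callable[[str], str], golden: GoldenSet,
                    threshold: float = 0.9) -> tuple[bool, float]:
    """Return (passed, score); a deploy step would proceed only if passed."""
    score = accuracy(classify, golden)
    return score >= threshold, score


def keyword_classifier(text: str) -> str:
    """Toy stand-in for a prompt-backed classifier."""
    return "billing" if "refund" in text.lower() else "technical"


if __name__ == "__main__":
    golden = [
        ("I want a refund", "billing"),
        ("Refund my last order", "billing"),
        ("App crashes on login", "technical"),
        ("Cannot reset password", "technical"),
    ]
    passed, score = gate_deployment(keyword_classifier, golden)
    print(f"passed={passed} score={score:.2f}")
```

Running the gate on every prompt change is what lets non-engineers edit copy safely: an edit that drops the score below the threshold never reaches production.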

Scaling and Production Readiness

PromptLayer was designed with production workloads in mind, meaning it includes observability and infrastructure to handle high volumes and mission-critical tasks. Logging and monitoring are built-in: every request and response can be recorded, with rich metadata like execution IDs and user IDs.

This means when your agent is handling thousands of requests, you will have a trace of each step it took. Companies using PromptLayer have leveraged this to great effect – for example, Ellipsis (an AI startup) scaled from 0 to over 500,000 agent requests in 6 months, and used PromptLayer’s centralized logs and debugging UI to cut their troubleshooting time by 75%.

Engineers could quickly search any anomalous workflow by ID and pinpoint the exact prompt and response that misbehaved, then fix it in a few clicks. This kind of observability and rapid debugging is invaluable for scaling AI agents in production, where issues inevitably arise across many edge cases.
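The lookup-by-ID workflow depends on every step being recorded with the same execution ID. A minimal in-memory sketch of that idea follows; `TraceLog` and its methods are hypothetical and do not reflect PromptLayer's logging API or storage.

```python
import uuid
from dataclasses import dataclass, field


@dataclass
class TraceLog:
    """In-memory stand-in for a centralized request log."""
    records: list[dict] = field(default_factory=list)

    def new_execution_id(self) -> str:
        return uuid.uuid4().hex

    def record(self, execution_id: str, user_id: str, step: str,
               prompt: str, response: str) -> None:
        # Each step of a run is stored with the IDs needed to find it later.
        self.records.append({
            "execution_id": execution_id,
            "user_id": user_id,
            "step": step,
            "prompt": prompt,
            "response": response,
        })

    def by_execution(self, execution_id: str) -> list[dict]:
        """Return every recorded step of one run, in the order it happened."""
        return [r for r in self.records if r["execution_id"] == execution_id]


if __name__ == "__main__":
    log = TraceLog()
    run_id = log.new_execution_id()
    log.record(run_id, "user-7", "classify", "Classify: late order", "shipping")
    log.record(run_id, "user-7", "reply", "Reply about shipping", "Sorry for the delay.")
    for entry in log.by_execution(run_id):
        print(entry["step"], "->", entry["response"])
```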

Real Examples with PromptLayer

PromptLayer’s infrastructure is used in both customer-facing products and internal automation at scale.

For instance, the e-commerce helpdesk provider Gorgias built an AI support agent with PromptLayer that they plan to roll out to all 15,000 of their merchant customers (Gorgias Uses PromptLayer to Automate Customer Support at Scale). Their PromptLayer-powered agent will handle as much as 60% of incoming support conversations, showing how the platform enables a high-scale AI feature integrated into a SaaS product.

Another example is in content creation and journalism – organizations have used PromptLayer to build newsroom tools that automatically summarize articles or suggest headlines, effectively an AI editorial assistant working alongside journalists. Because PromptLayer allows multiple LLMs and prompt chains, such an agent can cross-verify facts with one model, generate a summary with another, and format the output according to editorial guidelines.

On the internal side, companies have crafted AI agents for sales automation. Imagine an AI Sales Outreach Agent that takes a spreadsheet of leads, personalizes an email to each using an LLM (with prompt tuning to match the company’s tone), and then sends them out via an email API node. Users of PromptLayer have indeed set up systems like this – sending thousands of personalized emails per day autonomously, while keeping humans in the loop via logs and occasional approvals.

n8n: Rapid Automation with No-Code Simplicity

n8n approaches AI agents from the angle of low-code automation. It’s a general-purpose workflow automation tool (similar in spirit to Zapier or Make) that has embraced AI integrations to let users build intelligent workflows without heavy coding. n8n is particularly popular among indie developers, startup tinkerers, and teams who want quick solutions spun up with minimal overhead – it’s free and source-available, and you can self-host it or use their cloud.

AI and LangChain Integrations

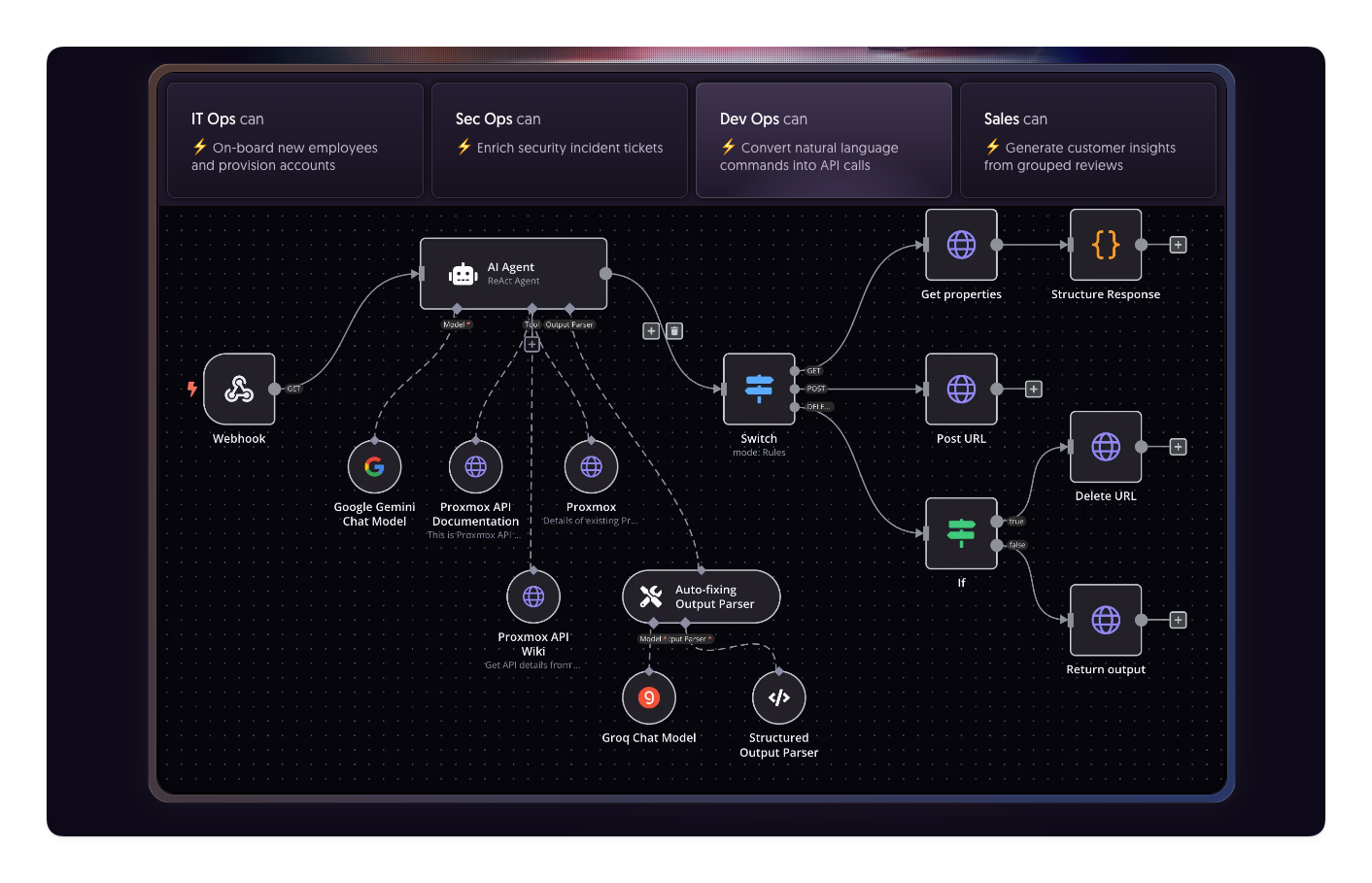

n8n began as an automation tool and has recently added AI features, including direct integration with OpenAI APIs and deeper support via LangChain. Out of the box, n8n provides nodes for various LLM providers (OpenAI’s GPT, Anthropic’s Claude, etc.) and lets you use LangChain constructs such as chains and agents within the n8n workflow canvas (LangChain in n8n | n8n Docs).

Building Common-Pattern Agents

Constructing an AI agent in n8n is a matter of dragging and configuring nodes rather than coding agent logic. You typically start with a trigger node – this could be time-based (cron jobs), webhook calls, or app-specific triggers (like “new row in Google Sheets” or “incoming Telegram message”). For a chatbot agent, a common pattern is to use a webhook or chat message trigger. In fact, n8n now offers an “Embed” option where you can embed a chat widget on a website that directly pipes user messages into an n8n workflow.

Once triggered, you add AI processing nodes. n8n offers an OpenAI node (and similar nodes for other LLMs) where you provide a prompt or conversation for the model to complete. It also has an AI Agent node (via LangChain) where you can specify tools the agent can use. You can also include function nodes (JavaScript code) for custom logic, or decision nodes (IF, Switch) to handle workflow branching. The result is a UI-based graph representing your agent’s logic flow.
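For readers who think in code, the trigger → AI node → IF node flow can be modeled as plain function composition. This is only a conceptual sketch in Python: it is not n8n's workflow format or API, and `ai_node`, `if_node`, and `run_workflow` are hypothetical helpers.

```python
from typing import Callable

Node = Callable[[dict], dict]  # each node takes an item and returns a new item


def ai_node(llm: Callable[[str], str]) -> Node:
    """Wrap a model call as a node that adds a `summary` field."""
    def run(item: dict) -> dict:
        return {**item, "summary": llm(item["text"])}
    return run


def if_node(predicate: Callable[[dict], bool],
            when_true: Node, when_false: Node) -> Node:
    """Branch to one of two downstream nodes, like an IF node on a canvas."""
    def run(item: dict) -> dict:
        return when_true(item) if predicate(item) else when_false(item)
    return run


def run_workflow(trigger_payload: dict, nodes: list[Node]) -> dict:
    """Pass the trigger payload through each node in order."""
    item = dict(trigger_payload)
    for node in nodes:
        item = node(item)
    return item


if __name__ == "__main__":
    workflow = [
        ai_node(lambda text: text[:10]),  # toy "summarizer"
        if_node(
            lambda item: "urgent" in item["text"].lower(),
            lambda item: {**item, "channel": "#alerts"},
            lambda item: {**item, "channel": "#general"},
        ),
    ]
    print(run_workflow({"text": "urgent: server down"}, workflow))
```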

Because n8n is a general automation tool, an AI agent you build with it can be immediately hooked up to lots of services. Want your agent to post results to Slack? Just add a Slack node. Need to save data to Notion or Airtable? There are nodes for that too.

Limited Concurrency and Scaling

n8n's cloud plans cap the number of concurrent executions, with higher limits available on enterprise tiers. Self-hosting is an alternative, but it requires managing the infrastructure yourself.

Use Cases for n8n Agents

Many people use n8n for personal or small-business automations enhanced with AI. For example, a personal use might be an automation that watches your incoming emails and uses OpenAI to summarize each email for you every morning (a “digest” bot).

Another example is sending a custom welcome message to new users of your product: when a new account is created (triggered via your database or a webhook), n8n could use an AI node to compose a friendly, personalized welcome email or Slack message. This kind of onboarding messaging can be made more engaging with an AI that references the user's context (e.g., what options they chose during sign-up). n8n shines here because you can integrate your user database, the AI, and your email service all in one place.

It’s worth noting that while n8n is powerful, the low-code approach can hit limits for very complex agents. If your needs get very intricate (lots of custom logic, complex memory handling, etc.), at some point you are configuring so many nodes that writing code might be easier. But for a huge range of moderate-complexity agents, n8n strikes a good balance by providing building blocks. It also offers the ability for developers to inject code where needed (via a Code node, usually JavaScript, or even a custom function tool in LangChain).

In summary, n8n is a great choice if you want a quick, no-frills way to build an AI agent and integrate it with numerous other services, all through a user-friendly interface. It’s especially suited for indie hackers, hackerspaces, or teams that need a solution fast without a big engineering investment. You get a lot of pre-built functionality (including community-contributed templates for common AI workflows) and the flexibility to customize if needed – all while writing little to no code for the majority of tasks.

Use-Case Comparison: PromptLayer vs. n8n

| Use‑case | Why PromptLayer? | Why n8n? |
|---|---|---|
| Application AI feature (e.g., news summarizer) | Need prompt traceability, rollback, and real‑time latency monitoring to keep public‑facing features stable and fast. | — |
| Internal sales-outreach agent | Thousands of personalized emails/day require A/B prompt testing and CI evals to prevent regressions before reaching customers. | — |
| Personal daily-digest bot | — | Quick setup: Gmail → OpenAI → Telegram in minutes without additional infrastructure. |
| System-wide RAG chain | Visual branching, multi-model ensembles, and detailed step-by-step observability for sophisticated retrieval and generation workflows. | LangChain integration with vector-store nodes that integrate smoothly with existing n8n workflows. |

Feature-by-Feature Breakdown

| Capability | PromptLayer | n8n |
|---|---|---|
| Drag‑and‑drop builder | ✅ | ✅ |
| Prompt versioning | ✅ | ❌ |
| Automated evals | ✅ | ❌ |
| Fine‑grained prompt editing | ✅ | ⚠️ Limited |
| Multi‑LLM support | ✅ | ⚠️ Limited |
| Logs & analytics | ✅ Detailed | ⚠️ Basic |
| Auto-scaling concurrency | ✅ | ⚠️ Requires manual setup |
| RBAC / Team workspaces | ✅ | ⚠️ Enterprise only |
| SaaS integrations | ⚠️ API-based | ✅ Extensive |
| Ideal user | AI-first and production teams | Indie developers, quick automations |

Making Your Choice

- Choose n8n if you’re an indie developer or small team looking to quickly prototype or automate simple workflows with minimal overhead.

- Choose PromptLayer when you're building agents as critical components of your product or internal operations, requiring robust version control, scalability, observability, and multi-provider flexibility.

With this guide, you're well-equipped to choose the right platform to make your AI agents efficient, scalable, and effective.