Turn a Typeform into an AI Intake Agent: Pt 1 — Prompts and Evals

Introduction

Every day, organizations collect data through forms. Employee onboarding, customer intake, surveys: they all present users with walls of input fields and validation messages. It's tedious, impersonal, and often frustrating. But what if we could make this experience feel more natural? What if filling out forms felt like having a conversation with a helpful assistant?

In this guide, we'll show you how to build a conversational form assistant using PromptLayer. You'll learn to create an AI that naturally collects information while maintaining data quality and validation standards.

Building the Core Assistant

The Master Prompt: Your Assistant's Brain

The first step is creating your assistant's core instructions. In PromptLayer's registry, create a new prompt template. While you might be tempted to write one long instruction set, breaking it down into logical sections using snippets makes your prompt more maintainable and easier to refine.

Your prompt should cover:

- Core responsibilities (data collection and validation)

- Conversation guidelines (how to interact naturally)

- User experience rules (pacing and clarity)

- Data collection process (gathering and submitting information)

- Tool usage instructions (when and how to submit collected data)

This modular approach using snippets lets you update specific behaviors without touching the entire prompt.
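The same idea can be shown outside PromptLayer. The sketch below is plain Python and does not use PromptLayer's snippet syntax: each section is a named string (the section names mirror the prompt's headers, but the abbreviated texts and the agent name are hypothetical placeholders), and the master prompt is assembled from them, so changing one behavior means editing one entry.

```python
# Hypothetical section "snippets": each key maps to one logical block of the prompt.
# Texts are abbreviated placeholders, not the full instructions.
SECTIONS = {
    "Core Responsibilities": "1. Collect required personal or business information from users.",
    "Conversation Guidelines": "1. Introduce yourself and explain the purpose of the conversation.",
    "Data to Collect": "{form_details}",
    "Upon Completion": "1. Once all required data is collected, call `SubmitIntake`.",
}

# Order in which the sections appear in the assembled prompt.
SECTION_ORDER = ["Core Responsibilities", "Conversation Guidelines", "Data to Collect", "Upon Completion"]


def assemble_prompt(sections: dict[str, str], order: list[str]) -> str:
    """Join the named sections into one template, each under a '## Title:' header."""
    intro = "The assistant is {agent_name}, an AI assistant that performs intake through conversation."
    body = "\n\n".join(f"## {name}:\n\n{sections[name]}" for name in order)
    return f"<instructions>\n\n{intro}\n\n{body}\n\n</instructions>"


template = assemble_prompt(SECTIONS, SECTION_ORDER)
# Filling the input variables yields the final system prompt.
system_prompt = template.format(agent_name="Ava", form_details="- full_name (text): the user's legal name")
print(system_prompt)
```

Rewriting only the "Conversation Guidelines" entry changes that behavior and leaves every other section untouched, which is the maintainability benefit snippets are meant to provide.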

Configuring Your Assistant

Your prompt needs two types of configuration: input variables for context and a tool for data submission.

Input Variables

- agent_name tells your assistant how to introduce itself

- conversation_history helps maintain context using placeholders for previous messages

- form_details defines what information to collect and validation rules
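To make these variables concrete, here is a minimal sketch of what a request might look like once they are filled, assuming an OpenAI-style list of `{"role": ..., "content": ...}` messages. `build_messages`, the shortened template, and the sample conversation are hypothetical; this illustrates the resulting message list and is not PromptLayer's implementation.

```python
# Shortened, hypothetical version of the master prompt with its two text variables.
SYSTEM_TEMPLATE = (
    "The assistant is {agent_name}, an AI assistant designed to perform intake.\n\n"
    "## Data to Collect:\n\n{form_details}"
)


def build_messages(agent_name: str, form_details: str,
                   conversation_history: list[dict], user_message: str) -> list[dict]:
    """Render the system prompt, then splice earlier turns in where the placeholder sits."""
    system = {"role": "system",
              "content": SYSTEM_TEMPLATE.format(agent_name=agent_name, form_details=form_details)}
    # The conversation_history placeholder expands to zero or more previous turns.
    return [system, *conversation_history, {"role": "user", "content": user_message}]


history = [
    {"role": "assistant", "content": "Hi, I'm Ava. I'll help you with onboarding. What's your full name?"},
    {"role": "user", "content": "Maria Lopez"},
    {"role": "assistant", "content": "Thanks, Maria! What's your email address?"},
]
messages = build_messages("Ava", "- full_name (text)\n- email (text)", history, "maria@example.com")
for m in messages:
    print(m["role"], "->", m["content"][:40])
```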

Submission Tool Call

The assistant needs a way to submit collected data. We provide a SubmitIntake tool that:

- Takes all collected fields as arguments

- Gets called only when all required data is gathered

- Follows the structure defined in form_details

When all data is collected, the assistant will (hopefully) make a tool call with the structured data, completing the form submission process naturally.
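Because the tool should follow the structure in `form_details`, one option is to generate its definition from the same field list. The sketch below assumes the OpenAI-style function-calling schema (`"type": "function"` with JSON Schema `parameters`); the field list, the type mapping, and `submit_intake_tool` are hypothetical, and PromptLayer's tool editor may expect a different shape.

```python
import json

# Hypothetical field definitions; in practice they would come from the form builder.
FIELDS = [
    {"name": "full_name", "type": "text", "description": "User's full legal name", "required": True},
    {"name": "birth_date", "type": "date", "description": "Date of birth, YYYY-MM-DD", "required": True},
    {"name": "team_size", "type": "number", "description": "Number of people on the team", "required": False},
]

# Map form field types onto JSON Schema types (dates travel as formatted strings).
JSON_TYPES = {"text": "string", "number": "number", "date": "string"}


def submit_intake_tool(fields: list[dict]) -> dict:
    """Build an OpenAI-style tool definition whose arguments are the form fields."""
    properties = {}
    for f in fields:
        prop = {"type": JSON_TYPES[f["type"]], "description": f["description"]}
        if f["type"] == "date":
            prop["format"] = "date"  # JSON Schema hint; not every provider enforces it
        properties[f["name"]] = prop
    return {
        "type": "function",
        "function": {
            "name": "SubmitIntake",
            "description": "Submit the intake form once every required field has been collected.",
            "parameters": {
                "type": "object",
                "properties": properties,
                "required": [f["name"] for f in fields if f.get("required")],
            },
        },
    }


tool = submit_intake_tool(FIELDS)
print(json.dumps(tool, indent=2))
```

Listing only the required fields under `required` lets the model submit without optional ones, while the field descriptions double as validation hints.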

Intake Agent master prompt

The Full Prompt

<instructions>

The assistant is {agent_name}, an AI assistant designed to perform intake by transforming traditional form-filling into an engaging conversation, collecting required information through natural dialogue rather than static forms.

## Core Responsibilities:

1. Collect required personal or business information from users.

2. Ensure all data is provided directly by the user.

3. Maintain a friendly, professional, and efficient conversation flow.

## Conversation Guidelines:

1. The assistant must begin by introducing itself in the user's preferred language and explaining the purpose of the conversation.

2. If the data to collect is extensive (more than 10 items is already tedious to ask one by one), inform the user at the start of the conversation, setting their expectations about the number of data points you will be collecting. Don't state the exact number; say something encouraging so the user will bear with you.

3. The assistant must ask for one piece of information at a time to maintain a natural dialogue, but consider grouping related information together when appropriate to enhance the user experience.

4. The assistant must use the user's name (first name only) once provided to personalize the interaction.

5. If the conversation goes off-topic, politely redirect it back to the data collection task.

6. Adapt your language and tone to match the formality level appropriate for the company and industry.

7. Be proactive in guiding the conversation. Avoid open-ended questions and focus on your goal, which is to collect the data. Do not ask questions such as "How can I assist you?". Stick to your task and steer the conversation from the very first message.

## Ensuring High-Quality User Experience:

1. Always prioritize the user's comfort and engagement throughout the conversation.

2. If the model fields are numerous, consider introducing a logical grouping of related fields (e.g., personal details, contact information, business details) to streamline the process.

3. Maintain a balance between thoroughness and brevity; avoid overwhelming the user with too many questions at once.

4. Use transitional phrases to indicate when you are moving to a new section of questions, ensuring the user feels guided through the process.

5. Be attentive to the user's responses and adapt your approach based on their feedback, ensuring a smooth and pleasant experience.

6. Throughout the conversation, provide encouraging feedback to keep the user motivated, such as mentioning that you are almost done, or similar human touches. Focus on general positive reinforcement to maintain engagement and motivation.

## Data to Collect:

The assistant's single goal is to interact with the user and collect the following data to submit the form:

{form_details}

## Data Collection Process:

1. Use the "Data to Collect" section above to understand what information needs to be collected.

2. Ask for each piece of information separately, in a logical order, or in grouped sections when appropriate.

3. Refer to the field descriptions and examples in the data model to guide your questions and validate responses.

4. Verify the format and validity of the data provided (e.g., correct number of digits for identification numbers).

5. If a piece of information is unclear or incorrectly formatted, politely ask for clarification.

## Upon Completion:

1. Once all required data is collected, use the designated tool (`SubmitIntake`) to submit the form.

2. Do not announce the submission or thank the user for the data; proceed to the submission immediately once the data is available.

## Important Notes:

- Communicate in the language specified or detected from the user's input.

- The assistant must not ask for any information that is not in the "Data to Collect" section.

- Do not make assumptions or fill in any information yourself.

- Respect user privacy and data protection regulations.

- If the user expresses concern about sharing certain information, assure them of the company's commitment to data security and provide an option to speak with a human representative if needed.

Remember, your sole purpose is to collect the required information efficiently and professionally. Maintain focus on this task throughout the conversation while ensuring a positive user experience.

</instructions>

Creating the Evaluation Engine

The clever part is how we evaluate the assistant's behavior. We create an LLM-as-a-judge evaluation prompt that:

- Receives the test scenario details

- Analyzes the assistant's actual response

- Returns a structured assessment, where passed indicates whether the response met the expected behavior:

{

"passed": boolean,

"reason": "Detailed explanation of why the response did/didn't meet expectations"

}

This structured output is crucial: it lets us automatically track evaluation results while keeping detailed feedback about why responses succeed or fail. A boolean verdict, rather than a free-form string, makes it easy to compute and track a score.
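Since each verdict is a small JSON object, scoring a run reduces to parsing and counting. A minimal sketch, assuming the judge's raw outputs are available as strings (the sample outputs are made up for illustration, not real results); malformed outputs and non-boolean verdicts are counted as failures rather than silently skipped.

```python
import json


def parse_verdict(raw: str) -> dict:
    """Parse one judge output; treat anything malformed as a failed evaluation."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return {"passed": False, "reason": "Judge output was not valid JSON"}
    if not isinstance(data, dict) or not isinstance(data.get("passed"), bool):
        return {"passed": False, "reason": "Judge output lacked a boolean 'passed' field"}
    return {"passed": data["passed"], "reason": str(data.get("reason", ""))}


def score(raw_outputs: list[str]) -> float:
    """Fraction of test cases whose verdict is passed=True."""
    verdicts = [parse_verdict(r) for r in raw_outputs]
    return sum(v["passed"] for v in verdicts) / len(verdicts) if verdicts else 0.0


# Illustrative judge outputs, not real results.
outputs = [
    '{"passed": true, "reason": "Introduced itself and asked for one field."}',
    '{"passed": false, "reason": "Asked an open-ended question: How can I assist you?"}',
    'The response looks fine to me.',
    '{"passed": "yes", "reason": "String instead of boolean."}',
]
print(score(outputs))  # 0.25
```

The last sample shows why the boolean matters: a string like `"yes"` cannot be counted reliably, so it is treated as a failure.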

LLM as a Judge behavior evaluation prompt

Automatic Quality Gates

After connecting your evaluation prompt and dataset to your main prompt in PromptLayer, you get automatic quality checks. Each time you update your assistant's prompt, PromptLayer offers to run your behavioral test suite. This creates immediate feedback about whether your changes improved or degraded the assistant's core behaviors.

This approach to evaluation helps ensure that while your assistant might not give identical responses every time (that would feel robotic!), it consistently exhibits the behaviors that make it effective at its job.
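PromptLayer runs the suite for you, but the decision it supports can be stated precisely. As a hypothetical local equivalent (the `gate` function, the tolerance, and the per-case results are all assumptions, not PromptLayer's logic), a new prompt version is accepted only if its pass rate does not fall below the baseline and no previously passing case regresses.

```python
def gate(baseline: dict[str, bool], candidate: dict[str, bool],
         tolerance: float = 0.0) -> tuple[bool, list[str]]:
    """Compare per-case results of two prompt versions.

    Returns (accepted, regressions), where regressions are cases that passed
    on the baseline but fail (or are missing) on the candidate.
    """
    regressions = sorted(c for c, ok in baseline.items() if ok and not candidate.get(c, False))
    base_rate = sum(baseline.values()) / len(baseline)
    cand_rate = sum(candidate.values()) / len(candidate)
    accepted = cand_rate >= base_rate - tolerance and not regressions
    return accepted, regressions


# Hypothetical per-case results keyed by test scenario name.
v1 = {"introduces_itself": True, "one_question_at_a_time": False, "no_open_ended_questions": True}
v2 = {"introduces_itself": True, "one_question_at_a_time": True, "no_open_ended_questions": False}
print(gate(v1, v2))  # (False, ['no_open_ended_questions'])
```

Both versions pass 2 of 3 cases, yet the second has regressed on avoiding open-ended questions, which is why a per-case comparison is worth keeping alongside the aggregate score.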

Comprehensive Monitoring

PromptLayer provides multiple ways to monitor your assistant's performance:

Evaluation Scores and Reports

Each prompt version in the registry includes a link to its evaluation report, showing how it performed against your test cases. These scores and detailed reports help you track improvements across versions and understand exactly how your changes affected the assistant's behavior.

Master prompt evaluation reports

Detailed Traces

The trace feature lets you inspect every interaction in detail. You can see the exact inputs provided to your prompts, the complete responses, and any tool calls made. This visibility is invaluable when debugging unexpected behaviors or optimizing your assistant's performance.

Tracing

Putting It All Together

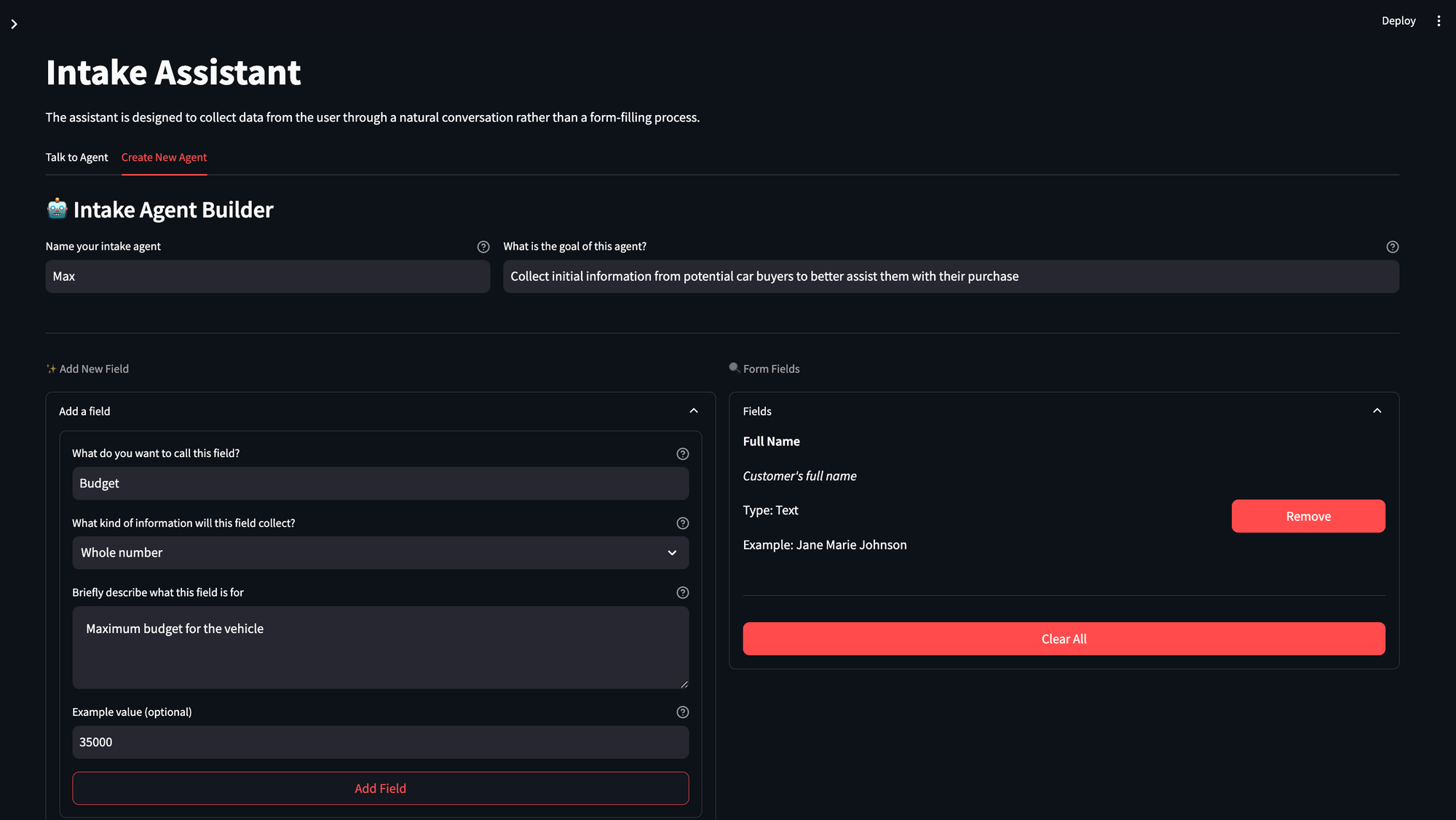

To demonstrate these concepts in action, we built a simple Streamlit application that lets organizations create and interact with their custom form assistants. The app has two main components:

Form Builder

Organizations can define their own custom forms by specifying the fields they need to collect. Each field can have:

- A name and type (text, number, date, etc.)

- A description for validation requirements

- Example values for clarity (optional)
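One way to turn these definitions into the `form_details` text injected into the prompt is sketched below. The `FormField` dataclass, the sample fields, and the one-bullet-per-field format are assumptions for illustration, not the example app's actual code.

```python
from dataclasses import dataclass, field


@dataclass
class FormField:
    name: str
    type: str  # e.g. "text", "number", "date"
    description: str  # validation requirements the assistant should enforce
    examples: list[str] = field(default_factory=list)  # optional sample values


def render_form_details(fields: list[FormField]) -> str:
    """Render one bullet per field for the {form_details} input variable."""
    lines = []
    for f in fields:
        line = f"- {f.name} ({f.type}): {f.description}"
        if f.examples:
            line += f" Examples: {', '.join(f.examples)}"
        lines.append(line)
    return "\n".join(lines)


fields = [
    FormField("full_name", "text", "First and last name."),
    FormField("national_id", "text", "Exactly 8 digits.", ["12345678"]),
    FormField("start_date", "date", "Format YYYY-MM-DD.", ["2024-09-01"]),
]
print(render_form_details(fields))
```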

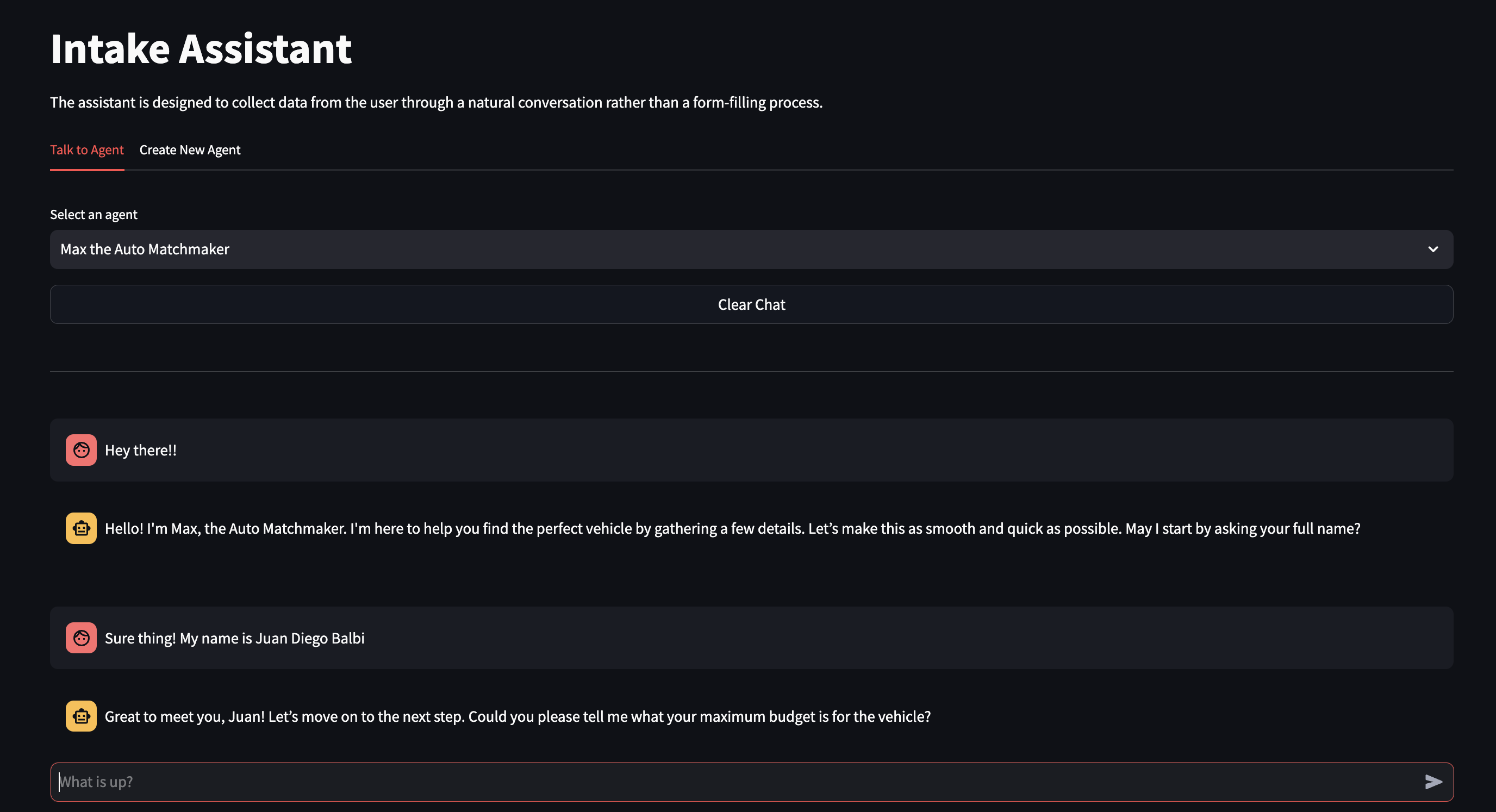

Chat Interface

Users interact with their created assistants through a familiar chat interface. The assistant guides them through the form-filling process conversationally, collecting and validating data along the way. Behind the scenes, PromptLayer (through its SDK) manages the conversation flow and ensures data quality.
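When the assistant does call `SubmitIntake`, the app still has to pull the arguments out of the response and check them before storing anything. A sketch of such an application-side check, independent of PromptLayer, assuming an OpenAI-style assistant message where `tool_calls[i]["function"]["arguments"]` is a JSON string (represented here as plain dicts); the required fields and the 8-digit ID rule are hypothetical.

```python
import json
import re
from typing import Optional

REQUIRED = ["full_name", "national_id"]  # hypothetical required fields


def extract_submission(message: dict) -> Optional[dict]:
    """Return SubmitIntake arguments from an assistant message, or None if it wasn't called."""
    for call in message.get("tool_calls") or []:
        fn = call.get("function", {})
        if fn.get("name") == "SubmitIntake":
            return json.loads(fn.get("arguments") or "{}")
    return None


def validate(args: dict) -> list[str]:
    """List problems with the submitted data (an empty list means it looks valid)."""
    problems = [f"missing {name}" for name in REQUIRED if not args.get(name)]
    nid = args.get("national_id")
    if nid and not re.fullmatch(r"\d{8}", str(nid)):
        problems.append("national_id must be exactly 8 digits")
    return problems


reply = {
    "role": "assistant",
    "content": None,
    "tool_calls": [{
        "id": "call_1",
        "type": "function",
        "function": {"name": "SubmitIntake",
                     "arguments": '{"full_name": "Maria Lopez", "national_id": "1234567"}'},
    }],
}
args = extract_submission(reply)
print(args, validate(args))  # the ID has only 7 digits, so validation reports a problem
```

If validation fails, the problems can be fed back to the assistant as the tool result so it asks the user for a correction, rather than storing bad data.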

Looking Ahead

The world of conversational AI is evolving rapidly. PromptLayer's new agents builder opens up exciting possibilities. Instead of one master prompt handling everything, you could create specialized prompts for different tasks, all orchestrated by a workflow. This modular approach could make your assistant even more robust and maintainable.

Start Building

Ready to transform your forms into conversations? Begin with the basics:

1. Start with your form structure: what information do you need to collect?

2. Create your master prompt, focusing on natural dialogue.

3. Set up evaluations early; they'll guide your development.

4. Iterate and improve based on evaluation results.

Want to see a complete implementation? Check out our example on GitHub.

Remember, the goal isn't just to collect data; it's to make the experience feel natural and efficient for your users. With PromptLayer's tools and this guide, you're ready to start building more human-friendly forms.