Prompt Routers and Modular Prompt Architecture

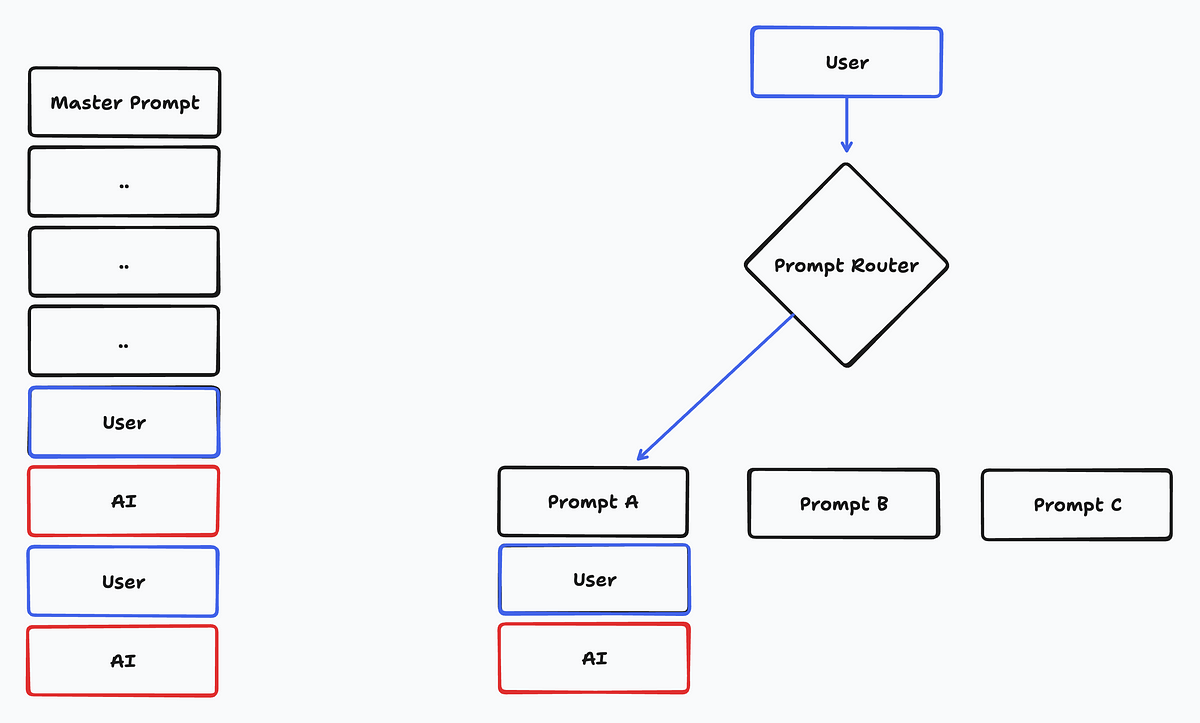

The most naïve approach to building an AI chatbot is to use a single master prompt: every new user message and every new AI response is appended to it.

This article examines a better approach, informed by interviews with our PromptLayer customers.

If you want to learn how the naïve approach works, read our tutorial on building ChatGPT from scratch.

The screenshot above is taken from an AI doctor chatbot. As you can see, the master prompt (read: the list of messages) grows longer with every turn.

Longer prompt ➡️ more tokens in the context window ➡️ slower & more expensive responses ⌚️ 💰
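A quick back-of-the-envelope calculation makes the cost argument concrete. This is a minimal sketch built on illustrative assumptions, not measurements: a 200-token system prompt and 100 tokens per message. Resending the full history means the prompt at turn t carries the system prompt plus 2t - 1 messages, so total input tokens over T turns grow roughly with T². For contrast, the sketch also computes a hypothetical variant that resends only the last three prior messages.

```python
# Back-of-the-envelope input-token count for a chat that resends its history.
# SYSTEM_TOKENS and MESSAGE_TOKENS are illustrative assumptions, not measurements.
SYSTEM_TOKENS = 200
MESSAGE_TOKENS = 100


def full_history_tokens(turns: int) -> int:
    """Total input tokens when every prior message is resent on each turn."""
    total = 0
    for t in range(1, turns + 1):
        # Turn t sends 2*(t-1) earlier messages (user + AI) plus the new user message.
        messages_in_prompt = 2 * (t - 1) + 1
        total += SYSTEM_TOKENS + messages_in_prompt * MESSAGE_TOKENS
    return total


def windowed_tokens(turns: int, window: int = 3) -> int:
    """Total input tokens when only the last `window` prior messages are resent."""
    total = 0
    for t in range(1, turns + 1):
        prior = min(window, 2 * (t - 1))
        total += SYSTEM_TOKENS + (prior + 1) * MESSAGE_TOKENS
    return total


for turns in (5, 20, 50):
    print(turns, full_history_tokens(turns), windowed_tokens(turns))
```

Under these assumptions, the two approaches are close at 5 turns (3500 vs. 2600 tokens) but far apart at 50 turns (260000 vs. 29600). The windowed figure ignores the cost of any chat summary you add to the prompt.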

The solution is prompt routing: a prompt engineering pattern where you break your monolithic master prompt into several small, modular, task-specific prompts and route each user message to the one best suited to handle it.

This also has the added advantage of making your responses better & easier to debug! Smaller, more specialized prompts are easier to maintain as a team and way easier to systematically evaluate (learn more about prompt management).

It just makes more sense for production apps. Below, we will go through an example of using the prompt router architecture.


Identify the Subtask Categories

Imagine you’ve released a general-purpose chatbot application and, after a month of analyzing user traffic, you’ve identified three main categories of questions:

- Questions about the bot itself (e.g., “What is your name?” or “What is your purpose?”)

- Questions about a specific news article (e.g., “What is going on with the upcoming election?”)

- General programming questions (e.g., “How do I fix this bug?” or “I have a missing semicolon, what should I do?”)

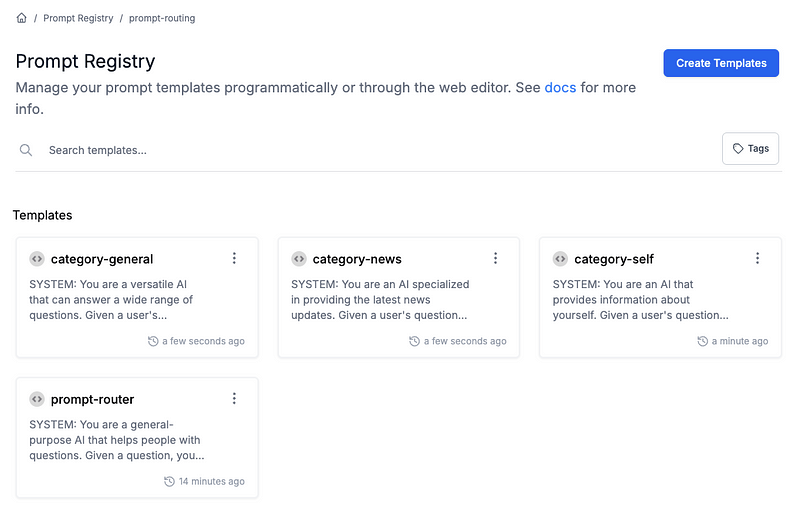

Divide into Specialized Prompt Templates

Now that you’ve identified these categories, you can create prompt templates that are responsible for handling each one. This approach allows you to keep your prompts modularized, which simplifies development and evaluation. However, you now face the challenge of deciding which prompt template to use for each incoming question.
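Here is a minimal sketch of that split, assuming each category maps to one template string with a `{question}` placeholder. The category keys match the router prompt used later in this article, but the template wording, `TEMPLATES`, and `build_prompt` are hypothetical illustrations, not a PromptLayer API. In practice, you might keep the templates in a prompt management tool rather than in code.

```python
# One small, specialized template per category. The wording is illustrative only.
TEMPLATES: dict[str, str] = {
    "self": "You are a helpful chatbot. Answer this question about yourself:\n{question}",
    "news": "You answer questions about current news. Question:\n{question}",
    "general": "You are a versatile AI that answers a wide range of questions:\n{question}",
}

# Hypothetical catch-all used when the router returns an unknown category.
DEFAULT_CATEGORY = "general"


def build_prompt(category: str, question: str) -> str:
    """Fill the template for `category`, falling back to the general template."""
    template = TEMPLATES.get(category, TEMPLATES[DEFAULT_CATEGORY])
    return template.format(question=question)


print(build_prompt("news", "What's going on with the election?"))
```

The fallback is a design choice: if the router ever returns something unexpected, the user still gets an answer from the general template instead of an error.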

Building the Prompt Router

There are many ways to programmatically decide which prompt the user’s message should be routed to.

- General-Purpose LLM: The easiest and most common approach is to use a large model like GPT-4. We’ll use this method below. In short, create a prompt template that takes in the user message and responds with the name of the prompt template to route to.

- Fine-Tuned Model: Once a GPT-4 prompt routes reliably, you can usually fine-tune a smaller model on its outputs to save money and latency. For example, try fine-tuning GPT-3.5-Turbo on your GPT-4 router’s outputs.

- Vector Distance: Generating embeddings is much faster and cheaper than generating normal completions. You can build a simple prompt router by embedding the user’s message and comparing its vector distance to embeddings that represent each possible category.

- Deterministic: Sometimes a deterministic rule is enough to decide where to route a message, such as searching the string for a keyword.

- Traditional Machine Learning: Last but not least, classification is a very popular use case for traditional machine learning. A classifier such as a decision tree can give you a far cheaper and faster prompt router than a general-purpose LLM.
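The deterministic and vector-distance options can be combined into a router that never calls an LLM. The sketch below checks keywords first, then falls back to whichever category’s example text is most similar to the message. To stay self-contained, it uses bag-of-words count vectors with cosine similarity as a stand-in for a real embedding model. Unlike real embeddings, these vectors only match shared words, not meaning. The keyword lists, example phrases, and function names are all hypothetical. In a real app, you would replace `embed` with calls to an embeddings API.

```python
import math
import re
from collections import Counter

# Hypothetical keyword rules for the deterministic first pass.
KEYWORDS = {
    "self": ["your name", "who are you", "your purpose"],
    "news": ["election", "headline", "breaking"],
}

# Hypothetical example text per category for the similarity fallback.
EXAMPLES = {
    "self": "what is your name what is your purpose who made you",
    "news": "what is going on with the election news today",
    "general": "how do i fix this bug missing semicolon code error",
}


def embed(text: str) -> Counter:
    """Stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


# Precompute one "embedding" per category, as you would cache real embeddings.
EXAMPLE_VECTORS = {cat: embed(text) for cat, text in EXAMPLES.items()}


def route_without_llm(message: str) -> str:
    lowered = message.lower()
    # 1. Deterministic pass: the first keyword hit wins.
    for category, words in KEYWORDS.items():
        if any(word in lowered for word in words):
            return category
    # 2. Vector-distance pass: pick the most similar category example.
    vector = embed(message)
    return max(EXAMPLE_VECTORS, key=lambda cat: cosine(vector, EXAMPLE_VECTORS[cat]))


print(route_without_llm("I have a missing semicolon, what should I do?"))
```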

To keep it simple, we will use the first option and write a GPT-4 prompt as our router. The prompt template takes in a user question and responds with a single word indicating the appropriate category: “self,” “news,” or “general” (the catch-all category, which also covers programming questions). In your application code, you can use this output to map the question to the corresponding prompt template.

You are a general-purpose AI that helps people with questions.

Given a question, your job is to categorize it into one of three categories:

1. self: For questions about yourself, such as "What is your name?"

2. news: For news-specific questions, like "What's going on with the election?"

3. general: For all other questions.

Your response should be one word only.

Testing and Evaluating the Router

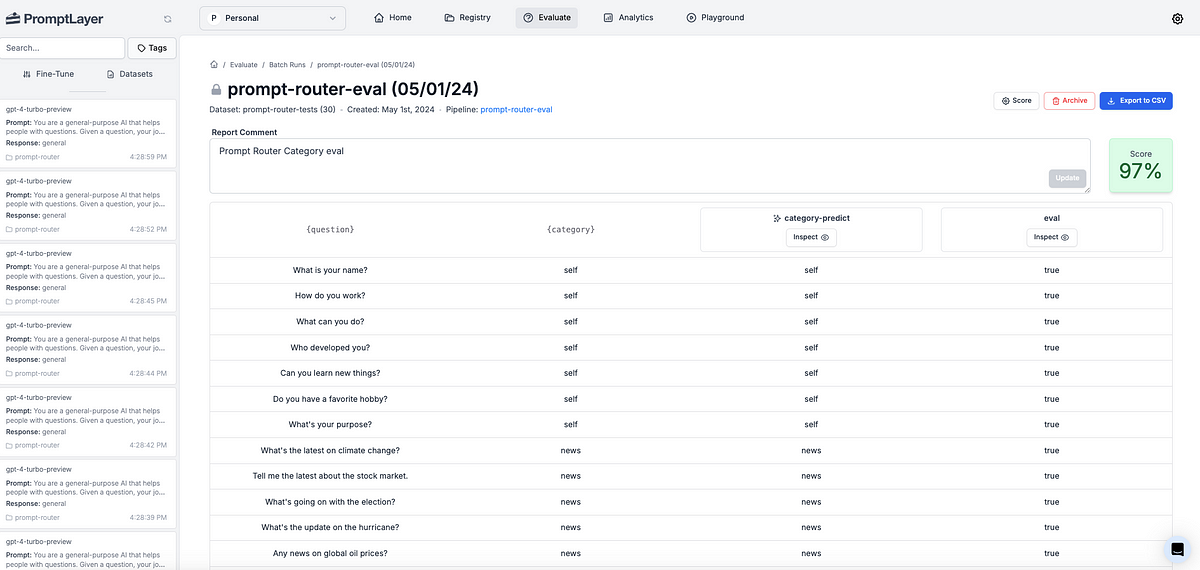

The biggest advantage of a prompt router architecture is that it makes evaluation easy. In our case, all we need is a set of test cases, each pairing a question with its expected category, and a string-comparison eval.
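Here is a minimal sketch of such an eval, assuming the router is wrapped in a function that sends the router prompt plus the question to a model and returns its raw text. `ROUTER_PROMPT` repeats the router prompt above. `call_llm` is a hypothetical placeholder for whatever client you use, so you pass it in, and a fake can stand in during tests. The raw output is trimmed, lowercased, and stripped of surrounding punctuation before comparison, since a model may answer “News.” instead of “news”.

```python
from typing import Callable

ROUTER_PROMPT = """You are a general-purpose AI that helps people with questions.

Given a question, your job is to categorize it into one of three categories:
1. self: For questions about yourself, such as "What is your name?"
2. news: For news-specific questions, like "What's going on with the election?"
3. general: For all other questions.

Your response should be one word only."""

CATEGORIES = {"self", "news", "general"}

# Hypothetical signature: call_llm(system_prompt, user_message) -> raw model text.
LLM = Callable[[str, str], str]


def route_with_llm(question: str, call_llm: LLM) -> str:
    """Ask the router model for a category; fall back to 'general' on odd output."""
    raw = call_llm(ROUTER_PROMPT, question)
    label = raw.strip().strip(".!\"'").lower()
    return label if label in CATEGORIES else "general"


def evaluate(cases: list[tuple[str, str]], call_llm: LLM) -> float:
    """String-comparison eval: the fraction of cases routed to the expected category."""
    hits = 0
    for question, expected in cases:
        predicted = route_with_llm(question, call_llm)
        if predicted == expected:
            hits += 1
        else:
            print(f"MISS: {question!r} -> {predicted} (expected {expected})")
    return hits / len(cases)


TEST_CASES = [
    ("What is your name?", "self"),
    ("What is your purpose?", "self"),
    ("What is going on with the upcoming election?", "news"),
    ("How do I fix this bug?", "general"),
]
```

Every miss is printed, so a failing run shows exactly which questions the router mislabeled. That is what makes the small, single-purpose router prompt easy to iterate on.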

Learn how to build prompt evaluations in PromptLayer.

Adding Memory

Switching away from a monolithic prompt means we need to manually inject chat context into the prompt. There are a few ways to do this, but we will combine the following three methods:

- Include the previous 3 messages in the context.

- Include a summary of the chat in the context.

- Make the AI respond with an updated summary after each message.

You are a versatile AI that can answer a wide range of questions. Given a user's question, your task is to provide an accurate and informative response regardless of the topic.

Chat summary: {summary}

The most recent three messages in the chat: {recent_messages}

Please respond with two things:

1. An answer to the user question

2. A new summary of the chat

As you can see in the system prompt for the “general” category above, we inject two context variables: “summary” and “recent_messages”.

We have also asked the AI to generate a new summary with every response. Together with the three most recent messages, this is how we maintain short-term memory in our chatbot.
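Here is a minimal sketch of that memory loop. The template mirrors the general-category prompt above. That prompt does not say how the model should separate its two outputs, so this sketch adds a hypothetical JSON reply format with `answer` and `summary` keys. `call_llm` is again a placeholder you supply. A `deque` with `maxlen=3` keeps only the three most recent messages and drops older ones automatically.

```python
import json
from collections import deque
from typing import Callable

# Mirrors the "general" prompt; the final JSON instruction is an added assumption.
# Double braces escape literal braces for str.format.
GENERAL_TEMPLATE = """You are a versatile AI that can answer a wide range of questions. Given a user's question, your task is to provide an accurate and informative response regardless of the topic.

Chat summary: {summary}

The most recent three messages in the chat: {recent_messages}

Please respond with two things:
1. An answer to the user question
2. A new summary of the chat

Reply as JSON: {{"answer": "...", "summary": "..."}}"""


class ChatMemory:
    """Short-term memory: a rolling AI-written summary plus the last three messages."""

    def __init__(self) -> None:
        self.summary = "(empty)"
        self.recent: deque[str] = deque(maxlen=3)  # oldest message falls off first

    def ask(self, question: str, call_llm: Callable[[str, str], str]) -> str:
        # Build the system prompt from memory *before* recording the new question.
        system = GENERAL_TEMPLATE.format(
            summary=self.summary,
            recent_messages="\n".join(self.recent) or "(none)",
        )
        reply = json.loads(call_llm(system, question))
        self.summary = reply["summary"]  # the new summary replaces the old one
        self.recent.append(f"User: {question}")
        self.recent.append(f"AI: {reply['answer']}")
        return reply["answer"]
```

Because the summary is rewritten on every turn, the prompt size stays roughly constant no matter how long the conversation runs. The trade-off is that details the model leaves out of the summary are forgotten once they scroll out of the three-message window.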

Learn more about LLM memory in a tutorial video here.

Conclusion

By implementing a prompt routing architecture in your LLM application, you can keep your prompt templates small and focused, making them easier to maintain and evaluate. This approach helps you build a more modular and scalable AI application that handles a wide range of user queries effectively.

Next, learn why prompt management is important and why you should use a prompt CMS.

PromptLayer is the most popular platform for prompt engineering, management, and evaluation. Teams use PromptLayer to build AI applications with domain knowledge.

Made in NYC 🗽 Sign up for free at www.promptlayer.com 🍰