LLM Architecture Diagrams: A Practical Guide to Building Powerful AI Applications

What is an LLM (Large Language Model)?

A Large Language Model (LLM) is an AI system trained on large text datasets to understand and generate human-like text.

Understanding LLM architecture is crucial for creating efficient, scalable, and task-specific AI applications that meet user needs effectively.

LLM Architecture Diagrams for Visualizing and Planning

LLM architecture diagrams serve as a visual tool to map out the key components, workflows, and interactions within the system, making it easier to plan, build, and optimize AI solutions.

PromptLayer lets you manage and monitor prompts with your whole team, including non-technical stakeholders. Get started here.

Why LLM Architecture Diagrams Matter

LLM architecture diagrams can help you understand how AI systems work. They simplify complex workflows by breaking down intricate processes into clear, manageable sections.

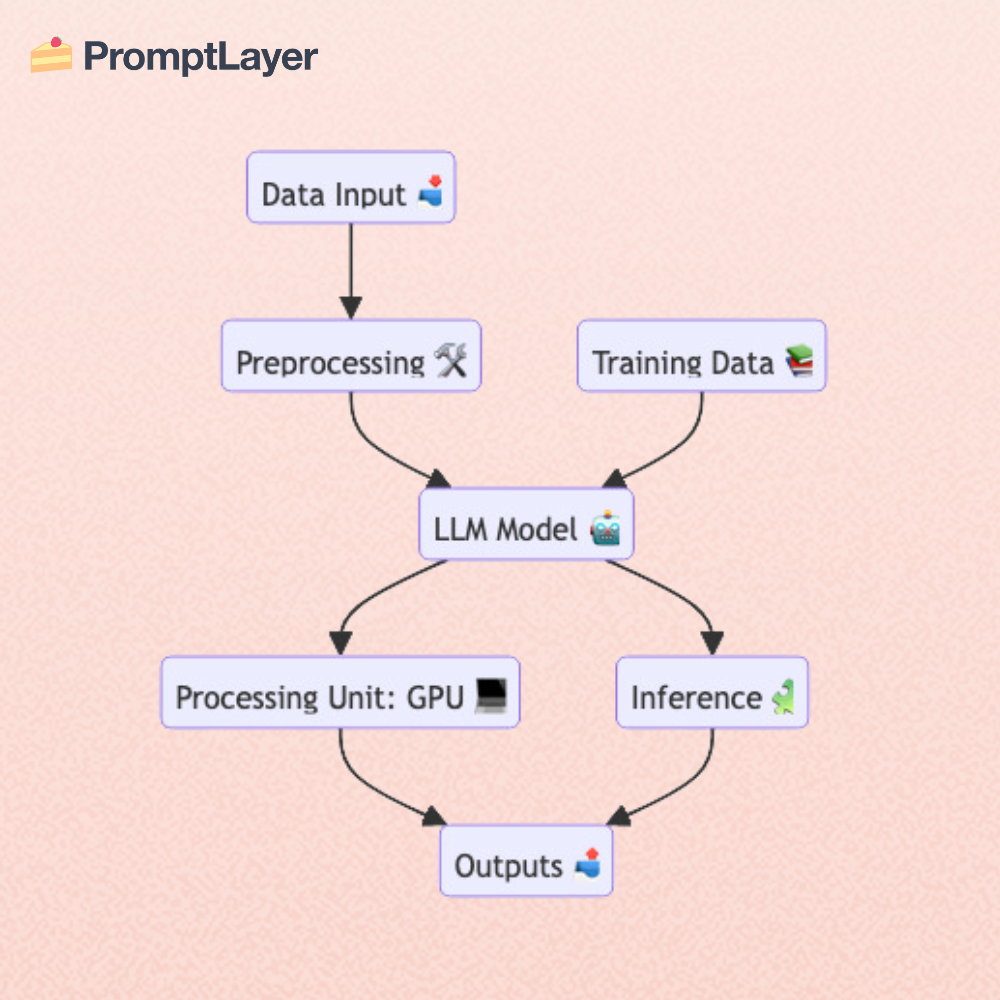

These diagrams help identify key components, such as data inputs, processing layers, and outputs, making it easier to pinpoint how different parts of the system interact. Here's a simple example showing a basic LLM System Architecture:

For teams, they act as a shared visual language, improving collaboration and reducing misunderstandings during development. By clarifying workflows and dependencies, architecture diagrams also make it easier to identify potential bottlenecks or areas for improvement.

Core Principles of Effective LLM Architecture

Building an effective Large Language Model (LLM) system requires thoughtful planning and design. By focusing on specific objectives, choosing the right tools, and tailoring solutions to tasks, you can create powerful AI applications that are efficient and easy to maintain.

Focus on Specific Problems

LLMs are powerful, but trying to address too many tasks at once can lead to inefficiency and confusion. Begin with a clear understanding of the problem you want to solve. For instance, a customer service chatbot might focus exclusively on handling billing inquiries or troubleshooting technical issues. This targeted approach allows you to streamline your resources and deliver precise solutions.

Customize for Tasks

Tailoring the LLM to specific tasks improves performance and reduces costs. Techniques like prompt engineering and fine-tuning allow you to align the model’s behavior with your unique requirements. For example, a specialized prompt for technical support might guide the AI to request error codes or log details before offering a solution.

Breaking Down LLM Architecture Diagrams

LLM architecture diagrams help visualize the structure of your system, making it easier to plan, build, and optimize. Below are the key components to include in your diagram.

Modular Design

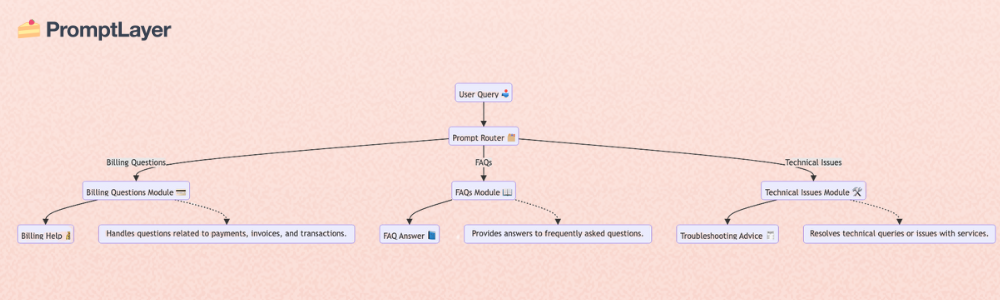

Breaking the system into smaller, task-specific modules improves efficiency and maintainability.

- Benefits: Modular prompts allow faster responses, easier debugging, and reduced costs.

- Example: A modular chatbot could have separate prompts for handling billing questions, answering FAQs, and resolving technical issues.

- Testing and Evaluation: Modular prompts simplify evaluation. You can create test cases for each module and compare predicted outputs with expected results.

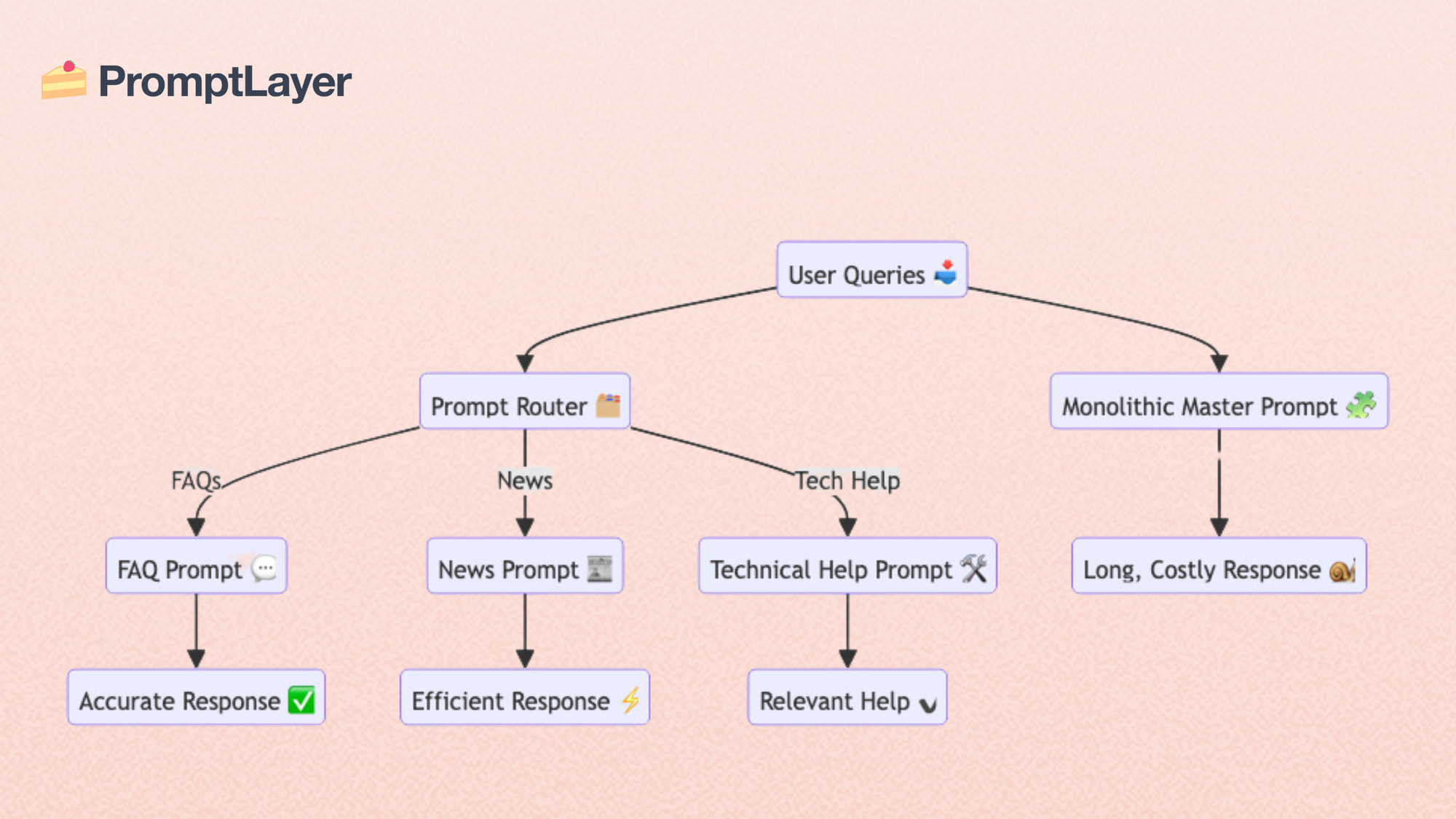

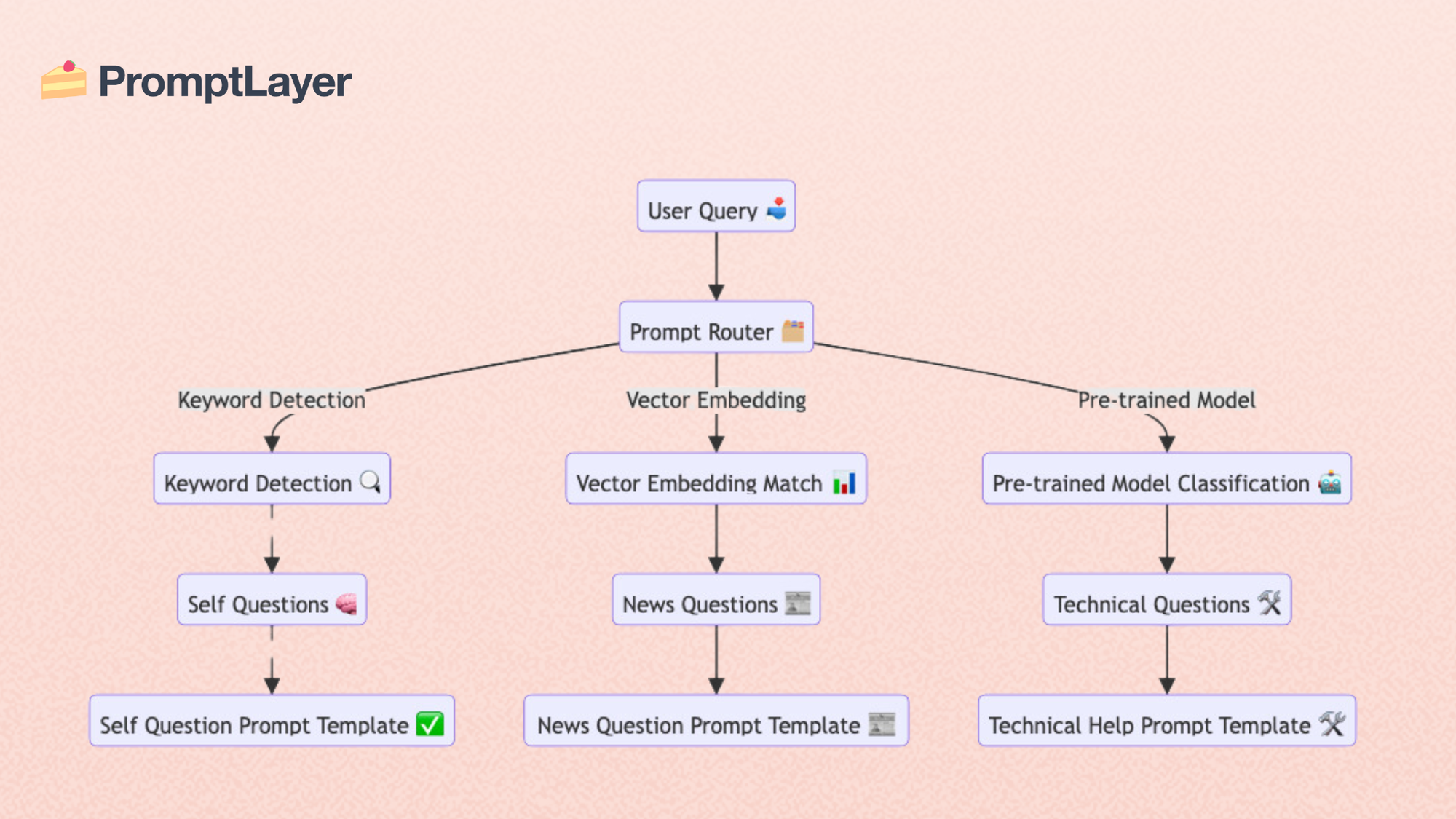
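
The per-module testing idea above can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed harness: `billing_module`, `TEST_CASES`, and `evaluate` are hypothetical names, and the module is a rule-based stand-in for what would normally be an LLM call with a task-specific prompt.

```python
from typing import Callable, Dict, List, Tuple


# Hypothetical stand-in for a prompt module; a real one would call an LLM.
def billing_module(query: str) -> str:
    if "refund" in query.lower():
        return "refund_policy"
    return "billing_general"


# Each test case pairs an input query with the expected output label.
TEST_CASES: Dict[str, List[Tuple[str, str]]] = {
    "billing": [
        ("How do I get a refund?", "refund_policy"),
        ("Why was I charged twice?", "billing_general"),
    ],
}

MODULES: Dict[str, Callable[[str], str]] = {"billing": billing_module}


def evaluate(
    modules: Dict[str, Callable[[str], str]],
    test_cases: Dict[str, List[Tuple[str, str]]],
) -> Dict[str, float]:
    """Return the fraction of passing test cases for each module."""
    scores = {}
    for name, cases in test_cases.items():
        passed = sum(
            1 for query, expected in cases if modules[name](query) == expected
        )
        scores[name] = passed / len(cases)
    return scores
```

Because each module has its own test set, a regression in one prompt shows up as a lower score for that module only, which narrows down where to look.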

Prompt Routers

Prompt routers determine which specialized prompt should handle a user’s query.

- How They Work: Instead of a single, monolithic prompt, routers classify queries and send them to task-specific templates.

- Routing Methods:

  - General-purpose LLMs: Use a large model like GPT-4 for initial classification.

  - Fine-tuned models: Train smaller models for routing tasks to reduce costs.

  - Vector embeddings: Quickly classify queries by comparing their semantic similarities.

  - Deterministic methods: Use keyword detection to assign queries to categories.

Example: A news chatbot might route queries about politics to one module and sports-related queries to another.
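
As a rough sketch of the deterministic routing method, the snippet below assigns a query to the category whose keywords it mentions most often. The keyword sets, the `route` function, and the `general` fallback are assumptions made for illustration; a production router would need a richer vocabulary or one of the other methods listed above.

```python
import re
from typing import Dict, Set

# Hypothetical keyword sets per category, echoing the news chatbot example.
ROUTES: Dict[str, Set[str]] = {
    "politics": {"election", "senate", "policy", "vote"},
    "sports": {"score", "match", "league", "playoffs"},
}
DEFAULT_ROUTE = "general"  # used when no keyword matches


def route(query: str) -> str:
    """Pick the category whose keywords overlap most with the query."""
    words = set(re.findall(r"[a-z]+", query.lower()))
    best, best_hits = DEFAULT_ROUTE, 0
    for category, keywords in ROUTES.items():
        hits = len(words & keywords)
        if hits > best_hits:
            best, best_hits = category, hits
    return best
```

The returned category name would then select which task-specific prompt template handles the query.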

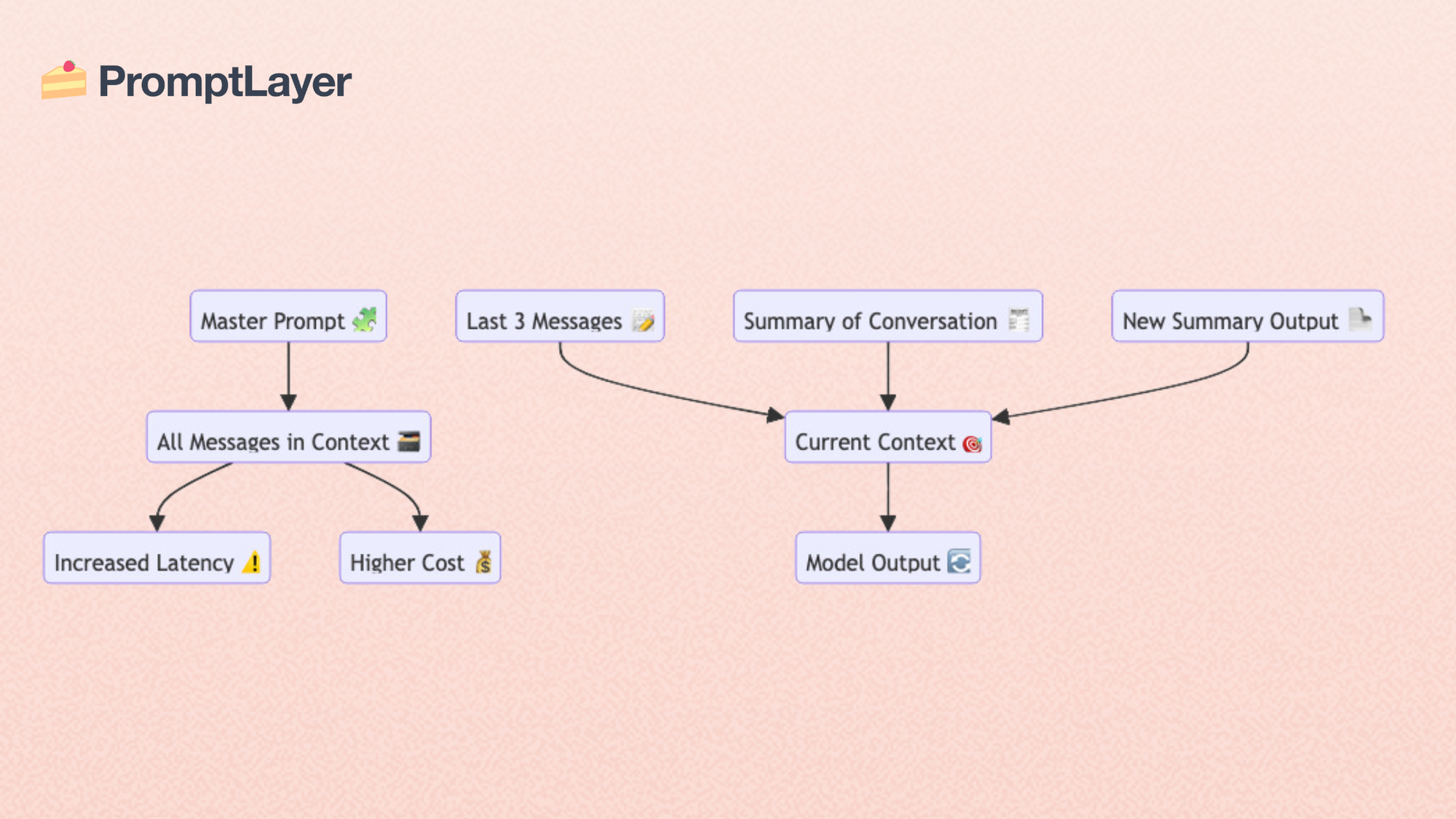

Memory Management

Maintaining context is crucial in LLM applications but should be done efficiently to avoid performance issues.

- Techniques:

  - Include the last three user messages in the context.

  - Summarize previous conversations to retain key details.

  - Update the summary after each interaction.

- Example: A technical support chatbot might summarize the user's issue and proposed solutions, so the AI can provide relevant follow-ups.
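
The techniques above can be combined into a small memory object, sketched here under some assumptions. `ConversationMemory` and `naive_summarize` are hypothetical names; the summarizer is a placeholder that concatenates and truncates text, where a real system would more likely ask the LLM to rewrite the summary.

```python
from dataclasses import dataclass, field
from typing import Callable, List


def naive_summarize(old_summary: str, user_msg: str, reply: str) -> str:
    # Placeholder summarizer: appends the latest turn and keeps the tail.
    combined = f"{old_summary} | {user_msg} -> {reply}".strip(" |")
    return combined[-200:]


@dataclass
class ConversationMemory:
    # (old_summary, user_msg, reply) -> new summary
    summarize: Callable[[str, str, str], str]
    max_recent: int = 3
    summary: str = ""
    recent_user_messages: List[str] = field(default_factory=list)

    def record_turn(self, user_msg: str, reply: str) -> None:
        """Keep only the most recent user messages and refresh the summary."""
        self.recent_user_messages.append(user_msg)
        self.recent_user_messages = self.recent_user_messages[-self.max_recent:]
        self.summary = self.summarize(self.summary, user_msg, reply)

    def build_context(self) -> str:
        """Assemble the text that would be prepended to the next prompt."""
        lines = [f"Summary so far: {self.summary or '(none)'}"]
        lines += [f"User: {msg}" for msg in self.recent_user_messages]
        return "\n".join(lines)
```

Calling `record_turn` after every exchange keeps the context bounded: the prompt carries one summary plus at most `max_recent` messages, regardless of how long the conversation runs.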

Building Scalable and Secure LLM Systems

Creating an LLM system that scales effectively and protects user data is essential for long-term success.

Scalability

Design systems to handle growing demand without degrading performance.

- Use load balancers to distribute traffic evenly.

- Optimize prompt routing and resource allocation.

- Add new categories or prompts as needed without overhauling the entire system.
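
One way to add categories without touching existing code is a handler registry, sketched below. The `register` decorator, `dispatch` function, and the handlers themselves are illustrative assumptions; each handler simply tags the query where a real one would fill a prompt template and call the model.

```python
from typing import Callable, Dict

PromptHandler = Callable[[str], str]
_REGISTRY: Dict[str, PromptHandler] = {}


def register(category: str) -> Callable[[PromptHandler], PromptHandler]:
    """Decorator that adds a handler to the registry under a category name."""
    def decorator(fn: PromptHandler) -> PromptHandler:
        _REGISTRY[category] = fn
        return fn
    return decorator


@register("faq")
def faq_prompt(query: str) -> str:
    return f"[FAQ prompt] {query}"


@register("billing")
def billing_prompt(query: str) -> str:
    return f"[Billing prompt] {query}"


def dispatch(category: str, query: str) -> str:
    """Send the query to the handler registered for the given category."""
    handler = _REGISTRY.get(category)
    if handler is None:
        raise KeyError(f"No prompt registered for category {category!r}")
    return handler(query)
```

Adding a new category then means writing one decorated function; the router and the other handlers stay unchanged.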

Data Privacy

Protect user data with robust security measures.

- Encrypt sensitive information and minimize data storage.

- Use anonymized tokens to process queries without retaining identifiable details.

- Ensure compliance with regulations like GDPR and HIPAA.

Example: A healthcare chatbot could anonymize patient data to provide medical advice while ensuring privacy.
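
The anonymized-token idea can be illustrated with a small redaction step that runs before a query reaches the model. This is only a sketch: the two regular expressions are simplistic assumptions that catch common email and phone formats, and on their own they do not amount to GDPR or HIPAA compliance.

```python
import re
from typing import Dict, Tuple

# Simplistic patterns chosen for illustration; real PII detection needs more.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def anonymize(text: str) -> Tuple[str, Dict[str, str]]:
    """Replace emails and phone numbers with placeholder tokens.

    Returns the redacted text and a token-to-original mapping, which the
    caller can keep in memory only for the duration of the request.
    """
    mapping: Dict[str, str] = {}

    def replacer(kind: str):
        def _sub(match: re.Match) -> str:
            token = f"<{kind}_{len(mapping) + 1}>"
            mapping[token] = match.group(0)
            return token
        return _sub

    text = EMAIL_RE.sub(replacer("EMAIL"), text)
    text = PHONE_RE.sub(replacer("PHONE"), text)
    return text, mapping
```

The model only ever sees the placeholder tokens; if the reply needs the original values, the caller can substitute them back from the mapping and then discard it.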

Optimizing the User Experience

A well-designed LLM system prioritizes the user experience, ensuring responses are accurate, fast, and relevant.

Seamless Integration

LLM applications should fit naturally into existing workflows.

- Example: Integrate a customer service bot into a company’s CRM system for smoother ticket handling.

Speed and Accuracy

Modular prompts and efficient routing help keep responses quick and precise.

- Example: A chatbot using vector embeddings can classify queries in milliseconds, speeding up the response process.
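
To show the shape of similarity-based classification, the sketch below compares a query vector against one precomputed vector per category using cosine similarity. The `embed` function is a toy bag-of-words stand-in, not a real embedding model, and the category descriptions and `threshold` value are assumptions; in practice `embed` would call an embedding model and the rest of the logic would stay much the same.

```python
import math
import re
from collections import Counter
from typing import Dict


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


# Hypothetical category descriptions, embedded once at startup.
CATEGORY_EXAMPLES: Dict[str, str] = {
    "billing": "invoice charge refund payment billing card",
    "technical": "error crash bug install login failure",
}
CATEGORY_VECTORS = {name: embed(text) for name, text in CATEGORY_EXAMPLES.items()}


def classify(query: str, threshold: float = 0.1) -> str:
    """Return the most similar category, or 'fallback' if nothing is close."""
    query_vec = embed(query)
    scores = {name: cosine(query_vec, vec) for name, vec in CATEGORY_VECTORS.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] >= threshold else "fallback"
```

Since the category vectors are computed once, each incoming query costs one embedding call plus a handful of vector comparisons.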

Continuous Improvement

Regularly monitor performance metrics and refine the system.

- Collect user feedback to identify areas for improvement.

- Update prompts and routing logic to address emerging issues.
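
A simple way to act on user feedback is to track an approval rate per module and flag the ones that fall below a target. The sketch below assumes feedback arrives as `(module, helpful)` pairs; the function names and the 0.7 threshold are illustrative choices, not recommended values.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def approval_rates(feedback: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Compute the share of helpful ratings for each module."""
    totals = defaultdict(lambda: [0, 0])  # module -> [helpful count, total]
    for module, helpful in feedback:
        totals[module][0] += int(helpful)
        totals[module][1] += 1
    return {module: pos / total for module, (pos, total) in totals.items()}


def needs_review(rates: Dict[str, float], threshold: float = 0.7) -> List[str]:
    """List modules whose approval rate is below the threshold."""
    return sorted(module for module, rate in rates.items() if rate < threshold)
```

Modules returned by `needs_review` are natural candidates for prompt or routing updates.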

Practical Example of LLM Architecture Diagrams

- Customer Support Bot:

  - Separate prompts for FAQs, troubleshooting, and account-related queries.

  - A router ensures user questions are directed to the appropriate module.

Step-by-Step Diagram Creation

- Identify the main components: inputs, processing units, outputs, and memory management.

- Draw connections to represent the flow of data and decision-making processes.

- Label each module for clarity and functionality.
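
These steps can also be expressed as a diagram-as-code workflow. The sketch below is one possible approach, assuming Mermaid flowchart syntax as the output format: it lists the components, connects them, and emits labeled text that a Mermaid renderer can draw. The `to_mermaid` helper and the node names are hypothetical and mirror the customer support bot example.

```python
from typing import Dict, List, Tuple


def to_mermaid(nodes: Dict[str, str], edges: List[Tuple[str, str, str]]) -> str:
    """Render labeled nodes and edges as Mermaid flowchart text."""
    lines = ["flowchart TD"]
    for node_id, label in nodes.items():
        lines.append(f'    {node_id}["{label}"]')
    for src, dst, label in edges:
        arrow = f"-->|{label}|" if label else "-->"
        lines.append(f"    {src} {arrow} {dst}")
    return "\n".join(lines)


# Step 1: identify the main components.
NODES = {
    "user": "User query",
    "memory": "Memory (summary + recent messages)",
    "router": "Prompt router",
    "faq": "FAQ prompt",
    "trouble": "Troubleshooting prompt",
    "account": "Account prompt",
    "output": "Response",
}

# Step 2: draw connections for data flow and routing decisions.
EDGES = [
    ("user", "router", ""),
    ("memory", "router", "context"),
    ("router", "faq", "faq"),
    ("router", "trouble", "troubleshooting"),
    ("router", "account", "account"),
    ("faq", "output", ""),
    ("trouble", "output", ""),
    ("account", "output", ""),
    ("output", "memory", "update summary"),
]

if __name__ == "__main__":
    # Step 3: the labels above become the text shown on each box and arrow.
    print(to_mermaid(NODES, EDGES))
```

Keeping the diagram in code means it can be versioned alongside the prompts and regenerated whenever a module is added.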

FAQ

What are prompt routers, and why are they better than master prompts?

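Prompt routers replace a single monolithic prompt with smaller, focused prompts and send each query to the one that fits. Because each prompt covers only one task, responses tend to be faster and cheaper, individual prompts are easier to debug and test, and response quality generally improves.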

How can I implement memory management in my chatbot?

Use recent messages and summaries to maintain context efficiently. Ask the AI to update the summary after each interaction for improved relevance.

Why is my routing slow, and how can I fix it?

Inefficient routing logic or reliance on large models might cause delays. Optimize your router by using smaller fine-tuned models or keyword-based classification.

How do I improve the accuracy of my responses?

Refine your prompts based on user feedback, and regularly test your router's performance against predefined test cases with expected outputs.

About PromptLayer

PromptLayer is a prompt management system that helps you iterate on prompts faster, further speeding up the development cycle. Use its prompt CMS to update a prompt, run evaluations, and deploy it to production in minutes. Check it out here. 🍰