How OpenAI's o1 model works behind-the-scenes & what we can learn from it

The o1 model family, developed by OpenAI, represents a significant advancement in AI reasoning capabilities. These models are specifically designed to excel at complex problem-solving tasks, from mathematical reasoning to coding challenges. What makes o1 particularly interesting is its ability to break down problems systematically and explore multiple solution paths—skills that were previously challenging for language models.

A recent research paper by Chaoyi Wu and colleagues, "Toward Reverse Engineering LLM Reasoning: A Study of Chain-of-Thought Using AI-Generated Queries and Prompts", provides fascinating insights into how o1 actually works under the hood. Through careful analysis and experimentation, the researchers have reverse engineered o1's approach to problem-solving, revealing the sophisticated mechanisms that drive its reasoning capabilities.

The findings are particularly valuable for prompt engineers because o1's architecture essentially institutionalizes best practices in prompt engineering. The way o1 approaches problems—through systematic decomposition, chain-of-thought reasoning, and iterative refinement—mirrors many of the techniques that prompt engineers have discovered to be effective. By understanding how o1 works, we can learn to design better prompts for any language model.

The Power of Chain-of-Thought Prompting

The paper's analysis points to chain-of-thought reasoning as the cornerstone of how o1 operates, not just one feature among many. According to the researchers, o1 combines sophisticated initialization (including fine-tuning on reasoning examples) with reinforcement learning that rewards effective problem-solving behavior. What makes it particularly interesting is how it searches through multiple possible solution paths, using an internal reward system to pick the most promising one.
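To make the "search plus reward" idea concrete, here is a minimal best-of-N sketch. It is an illustration only, not o1's actual mechanism: `best_of_n` and `toy_reward` are hypothetical names, and the reward is a hand-written toy heuristic standing in for whatever learned reward signal the model uses internally.

```python
from typing import Callable, List, Tuple


def best_of_n(candidates: List[str], score: Callable[[str], float]) -> Tuple[str, float]:
    """Return the highest-scoring candidate path together with its score."""
    if not candidates:
        raise ValueError("need at least one candidate")
    scored = [(score(candidate), candidate) for candidate in candidates]
    best_score, best = max(scored, key=lambda pair: pair[0])
    return best, best_score


def toy_reward(path: str) -> float:
    # Hypothetical reward: favor paths that verify their answer,
    # with a small penalty for length. A real system would use a learned model.
    reward = 1.0 if "check" in path.lower() else 0.0
    return reward - 0.001 * len(path)


paths = [
    "Guess 42.",
    "Compute 6 * 7 = 42, then check: 42 / 7 = 6.",
    "Add 6 seven times to get 42.",
]
best_path, best_score = best_of_n(paths, toy_reward)
print(best_path)
```

In practice the candidates would be sampled from a model rather than hard-coded, and the scoring function is where most of the difficulty lives.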

Understanding o1's Reasoning Process

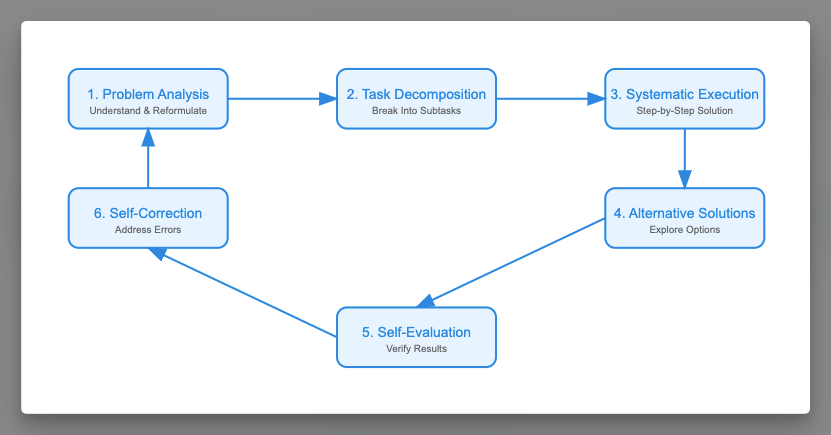

o1's chain-of-thought process has six steps, detailed below.

1. Problem Analysis

The model begins by reformulating the problem and identifying key constraints. This initial step creates a comprehensive mapping of the challenge ahead.

2. Task Decomposition

Complex problems are broken down into manageable chunks. This systematic approach prevents the model from becoming overwhelmed by complexity.

3. Systematic Execution

Solutions are built step-by-step, with each stage building on the previous one. This methodical approach ensures thoroughness and accuracy.

4. Alternative Solutions

The model doesn't stop at the first solution—it generates multiple approaches and evaluates their relative merits.

5. Self-Evaluation

Regular verification checkpoints are built into the process, ensuring the solution remains on track.

6. Self-Correction

When errors are spotted, they're addressed immediately rather than being allowed to compound.
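The six steps above can be turned into a reusable prompt scaffold. The sketch below is one possible way to do that, assuming you want a single prompt that walks a model through every stage; the stage instructions and the `build_cot_prompt` helper are our own wording, not text taken from o1 or the paper.

```python
# Stage names follow the six steps above; the instructions are illustrative wording.
REASONING_STAGES = [
    ("Problem Analysis", "Restate the problem and list the key constraints."),
    ("Task Decomposition", "Break the problem into smaller subtasks."),
    ("Systematic Execution", "Solve the subtasks one at a time, building on earlier results."),
    ("Alternative Solutions", "Propose at least one other approach and compare it to yours."),
    ("Self-Evaluation", "Check the intermediate results against the constraints."),
    ("Self-Correction", "Fix any errors you found before continuing."),
]


def build_cot_prompt(task: str) -> str:
    """Assemble a prompt that asks the model to follow all six stages in order."""
    lines = [f"Task: {task}", "", "Work through the following stages in order:"]
    for number, (name, instruction) in enumerate(REASONING_STAGES, start=1):
        lines.append(f"{number}. {name}: {instruction}")
    lines.append("")
    lines.append("Finish with a line that starts with 'Final answer:'.")
    return "\n".join(lines)


print(build_cot_prompt("Schedule five meetings without overlaps."))
```

Asking for a fixed "Final answer:" line is a small design choice that makes the output easier to parse downstream.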

The Importance of "Thinking Time"

A crucial insight from the reverse engineering of o1 is the importance of giving language models space to "think" methodically. The performance improvement when models are allowed to work through problems systematically is dramatic. Success comes not from racing to an answer but from building a robust foundation of understanding first.

Practical Applications for Prompt Engineering

These insights from o1's architecture can be applied to prompt engineering in several ways:

- Begin with Understanding
  - Request the model's interpretation of the problem
  - Verify this understanding before proceeding with solutions
- Embrace Decomposition
  - Guide the model to break down complex problems
  - Create clear subtasks with defined objectives
- Implement Verification
  - Build checkpoints into prompts
  - Request solution validation at key points
- Explore Multiple Paths
  - Encourage the generation of alternative solutions
  - Compare different approaches systematically
- Match Method to Complexity
  - Adjust the depth of reasoning to the task at hand
  - Scale the complexity of the prompting approach to match the problem
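Here is a rough sketch of how the first three practices (understanding, decomposition, verification) might be chained across several model calls. Everything in it is an assumption for illustration: `Model` is a hypothetical "prompt in, text out" callable, `echo_model` is a stand-in so the example runs without an API key, and the prompt wording is ours.

```python
from typing import Callable, List

# Hypothetical interface: any function that takes a prompt and returns text.
Model = Callable[[str], str]


def solve_with_checkpoints(task: str, model: Model) -> List[str]:
    """Ask for an interpretation, then a plan, then solve and verify each subtask."""
    transcript: List[str] = []

    # 1. Begin with understanding: get the model's reading of the problem first.
    interpretation = model(
        f"Restate this problem and list its constraints. Do not solve it yet.\n\n{task}"
    )
    transcript.append(interpretation)

    # 2. Embrace decomposition: ask for subtasks, one per line.
    plan = model(
        f"Problem: {task}\nInterpretation: {interpretation}\n"
        "Break this into numbered subtasks, one per line, each with a clear objective."
    )
    transcript.append(plan)

    # 3. Implement verification: every subtask prompt asks for a check.
    subtasks = [line.strip() for line in plan.splitlines() if line.strip()]
    for subtask in subtasks:
        transcript.append(model(f"Solve this subtask and then verify your result: {subtask}"))
    return transcript


def echo_model(prompt: str) -> str:
    # Stand-in for a real model call, used only to make the sketch runnable.
    if "numbered subtasks" in prompt:
        return "1. Parse the input\n2. Compute the total"
    return f"[response to: {prompt.splitlines()[0][:40]}]"


for step in solve_with_checkpoints("Sum the numbers in a file", echo_model):
    print(step)
```

In a real workflow you would review the interpretation (by hand or with another prompt) before moving on to the plan, which is the "verify this understanding" part of the first bullet.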

Don't Over-Engineer

Chain-of-thought prompting isn't necessary for every task. The key is knowing when to deploy these techniques strategically. Simple queries often don't require elaborate reasoning chains, while complex problems benefit immensely from structured thinking approaches.

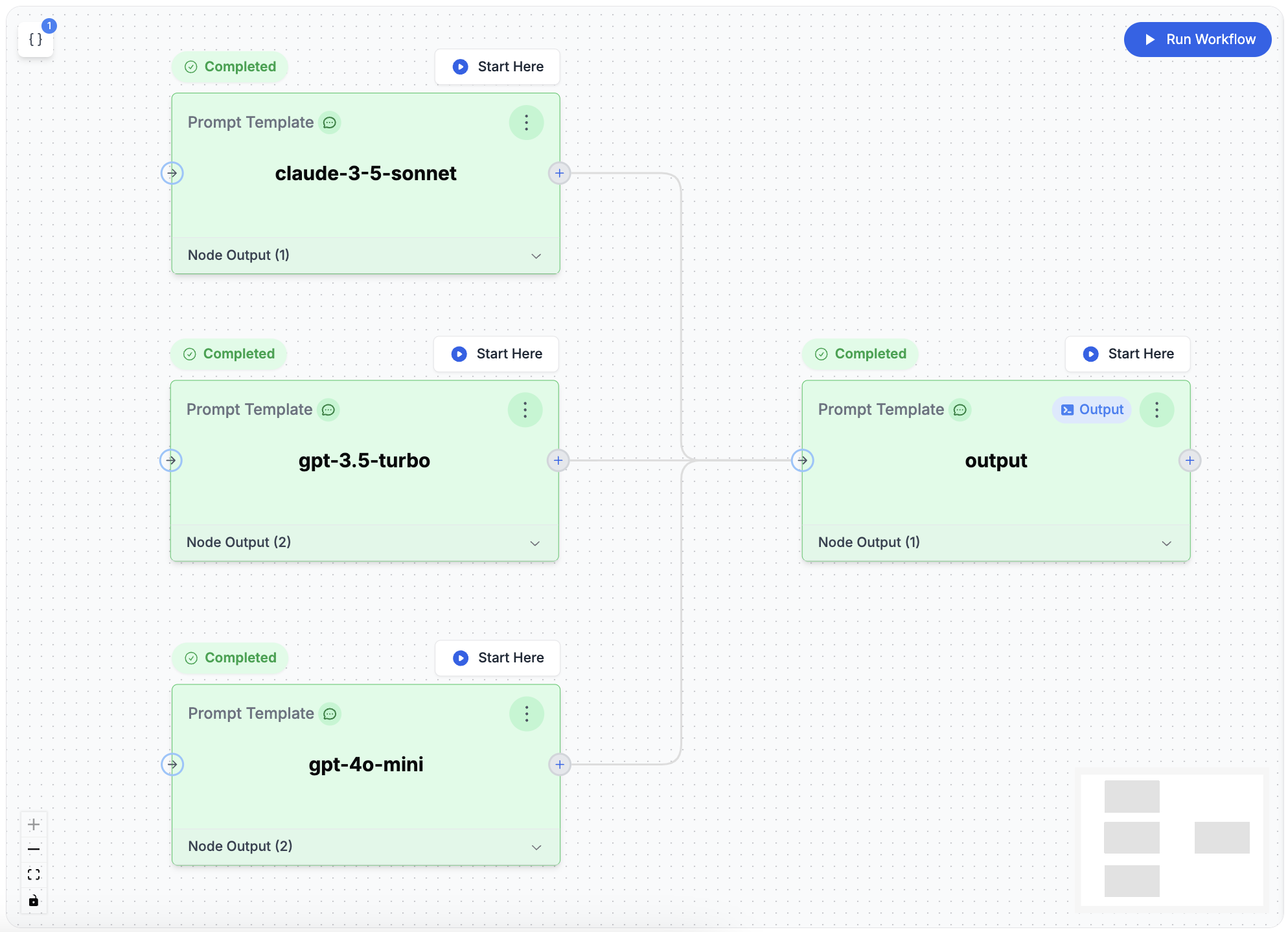
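One way to avoid over-engineering is to route queries: send simple ones as-is and add reasoning instructions only when a query looks complex. The sketch below uses a deliberately crude heuristic (word count plus a few trigger words); the function names, the threshold, and the marker list are all hypothetical and would need tuning on real traffic.

```python
def needs_chain_of_thought(query: str, word_threshold: int = 25) -> bool:
    """Crude complexity check: long queries or ones containing trigger words."""
    markers = ("prove", "debug", "step", "optimize", "compare", "why")
    text = query.lower()
    return len(text.split()) > word_threshold or any(marker in text for marker in markers)


def build_prompt(query: str) -> str:
    """Add a reasoning instruction only when the query looks complex."""
    if needs_chain_of_thought(query):
        return f"{query}\n\nThink through this step by step, then give a final answer."
    return query


print(build_prompt("What is the capital of France?"))
print(build_prompt("Debug this function and compare two fixes."))
```

A substring check like this will misfire on some inputs, so treat it as a starting point rather than a classifier.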

Building Better Agents

The reverse engineering of o1's architecture provides more than just theoretical insights—it offers a practical blueprint for building better AI systems. By implementing these principles through visual tools like PromptLayer's agent builder, practitioners can create sophisticated AI applications that demonstrate the same kind of methodical reasoning that makes o1 so effective.

The future of prompt engineering lies not just in crafting better prompts, but in building systems that can orchestrate multiple prompts in intelligent ways.
