How NoRedInk Used PromptLayer Evals to Deliver 1M+ Trustworthy Student Grades

NoRedInk has been on a mission to unlock every writer's potential since 2012. Today, their adaptive writing platform serves 60% of U.S. school districts and millions of students worldwide. But when they decided to build an AI grading assistant, they faced a challenge that many EdTech companies know all too well: how do you create AI-generated feedback that's not just fast, but pedagogically sound and trustworthy enough for real classrooms?

This case study explores how NoRedInk used PromptLayer to build an AI grading assistant that teachers trust, one that has generated over 1 million pieces of feedback delivered directly to students. Along the way, a small Engineering team worked alongside non-technical Curriculum Designers to move from manual scripts toward a scalable, data-driven eval pipeline.

The key to their success? Moving from ad-hoc scripts and manual testing to a repeatable, scalable evaluation system that puts domain expertise at the center of AI development.

The Journey: Why NoRedInk Built, Then Bought

NoRedInk began by building a custom internal platform for managing prompts and evaluations. The initial MVP relied on rudimentary tooling and was improved after each release retrospective.

"We did things very manually, very rudimentary at first," the team reflects. Each retrospective revealed new patterns and new bottlenecks.

Cracks began to show during their second major release. The team discovered critical bottlenecks that were slowing their ability to ship quality AI features:

- Confusion regarding which prompt changes matched specific evaluations.

- Ineffective version history tracking.

- Engineering becoming a bottleneck for pedagogical changes.

- Curriculum designers, former teachers who deeply understood pedagogy, being largely excluded from direct experimentation.

The build versus buy decision came down to a simple realization: building these tools wasn't core to NoRedInk's mission of improving student writing. After researching the landscape of evaluation and prompt management tools, they chose PromptLayer for its focus on collaborative workflows and robust evaluation capabilities.

Engineering a Robust Evaluation Pipeline

NoRedInk's evaluation pipeline runs like clockwork. On every major prompt tweak, their system runs batch evaluations against approximately 50 diverse essay samples. These samples are expert-crafted, informed by years of classroom experience, and represent the full spectrum of abilities and backgrounds across the country.

The technical implementation rests on three elements:

- Scorecard design: A rubric categorizing feedback into four named categories, with clear version-scoped metrics

- Full traceability: The PromptLayer SDK logs template IDs, versions, variables, and provider parameters for every run

- Performance gains: Cached template fetching and provider swapping cut batch evaluation time by over 40%
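To make the traceability and caching ideas concrete, here is a minimal sketch in plain Python. It does not use the PromptLayer SDK and is not NoRedInk's implementation: the template store, `fetch_template`, `RunRecord`, `run_once`, and `stub_model` are all hypothetical names invented for illustration. It shows a template fetched once per (name, version) pair and a per-run record holding the fields mentioned above.

```python
from dataclasses import dataclass
from functools import lru_cache

# Hypothetical in-memory store standing in for a remote prompt registry.
TEMPLATE_STORE = {
    ("essay-feedback", 3): "You are a writing teacher. Give feedback on: {essay}",
}
FETCH_STATS = {"calls": 0}  # counts simulated remote fetches


@lru_cache(maxsize=128)
def fetch_template(name: str, version: int) -> str:
    """Fetch a template once per (name, version); repeat calls hit the cache."""
    FETCH_STATS["calls"] += 1
    return TEMPLATE_STORE[(name, version)]


@dataclass
class RunRecord:
    """Traceability fields captured for one run (hypothetical shape)."""
    template_name: str
    template_version: int
    variables: dict
    provider: str
    provider_params: dict
    output: str


def stub_model(prompt: str, temperature: float = 0.0) -> str:
    """Stand-in for a provider call so the sketch runs offline."""
    return f"feedback ({len(prompt)} prompt chars, temperature={temperature})"


def run_once(name, version, variables, provider, provider_params, call_model):
    """Render the cached template, call the model, and record the run."""
    template = fetch_template(name, version)
    prompt = template.format(**variables)
    output = call_model(prompt, **provider_params)
    return RunRecord(name, version, variables, provider, provider_params, output)


essays = ["Recess helps students focus.", "Homework should be optional."]
records = [
    run_once("essay-feedback", 3, {"essay": text}, "stub-provider",
             {"temperature": 0.2}, stub_model)
    for text in essays
]
print(FETCH_STATS["calls"])  # the template is fetched once for both runs
```

Passing the model call in as `call_model` is one simple way to swap providers without touching the rest of the loop.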

Golden sample sets provide quantitative benchmarks, and Curriculum Designers add qualitative review on top. Combining the two ensures that AI feedback meets both technical and educational standards.
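A batch evaluation against golden samples could look roughly like the sketch below. Everything in it is an assumption made for illustration: the four category names, the sample essays, the expert labels, and the keyword-based `stub_grader` that stands in for the real LLM call. The only idea taken from the article is scoring each rubric category by how often the grader agrees with the expert-assigned label.

```python
from collections import defaultdict

# Hypothetical rubric categories; the article says there are four but does not name them.
CATEGORIES = ["thesis", "evidence", "organization", "conventions"]

# Hypothetical golden samples: an essay plus the expert verdict per category.
GOLDEN_SAMPLES = [
    {"id": "s1",
     "essay": "I argue that recess matters because play aids focus. First, consider attention.",
     "expected": {"thesis": "meets", "evidence": "meets",
                  "organization": "meets", "conventions": "meets"}},
    {"id": "s2",
     "essay": "Recess is good",
     "expected": {"thesis": "needs_work", "evidence": "needs_work",
                  "organization": "needs_work", "conventions": "needs_work"}},
    {"id": "s3",
     "essay": "I argue dogs are great because they are loyal.",
     "expected": {"thesis": "meets", "evidence": "needs_work",
                  "organization": "needs_work", "conventions": "meets"}},
]


def stub_grader(essay: str) -> dict:
    """Stand-in for the LLM grader: a toy keyword heuristic, not real grading."""
    text = essay.lower()
    return {
        "thesis": "meets" if "i argue" in text else "needs_work",
        "evidence": "meets" if "because" in text else "needs_work",
        "organization": "meets" if "first" in text else "needs_work",
        "conventions": "meets" if essay.endswith(".") else "needs_work",
    }


def run_scorecard(samples, grader):
    """Return, per category, the share of samples where grader and expert agree."""
    agree = defaultdict(int)
    for sample in samples:
        predicted = grader(sample["essay"])
        for category in CATEGORIES:
            if predicted[category] == sample["expected"][category]:
                agree[category] += 1
    return {category: agree[category] / len(samples) for category in CATEGORIES}


scorecard = run_scorecard(GOLDEN_SAMPLES, stub_grader)
for category, rate in scorecard.items():
    print(f"{category}: {rate:.0%} agreement with expert labels")
```

In a real pipeline the grader would be the prompt version under test, and the resulting scorecard would be stored against that version so later runs can be compared with it.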

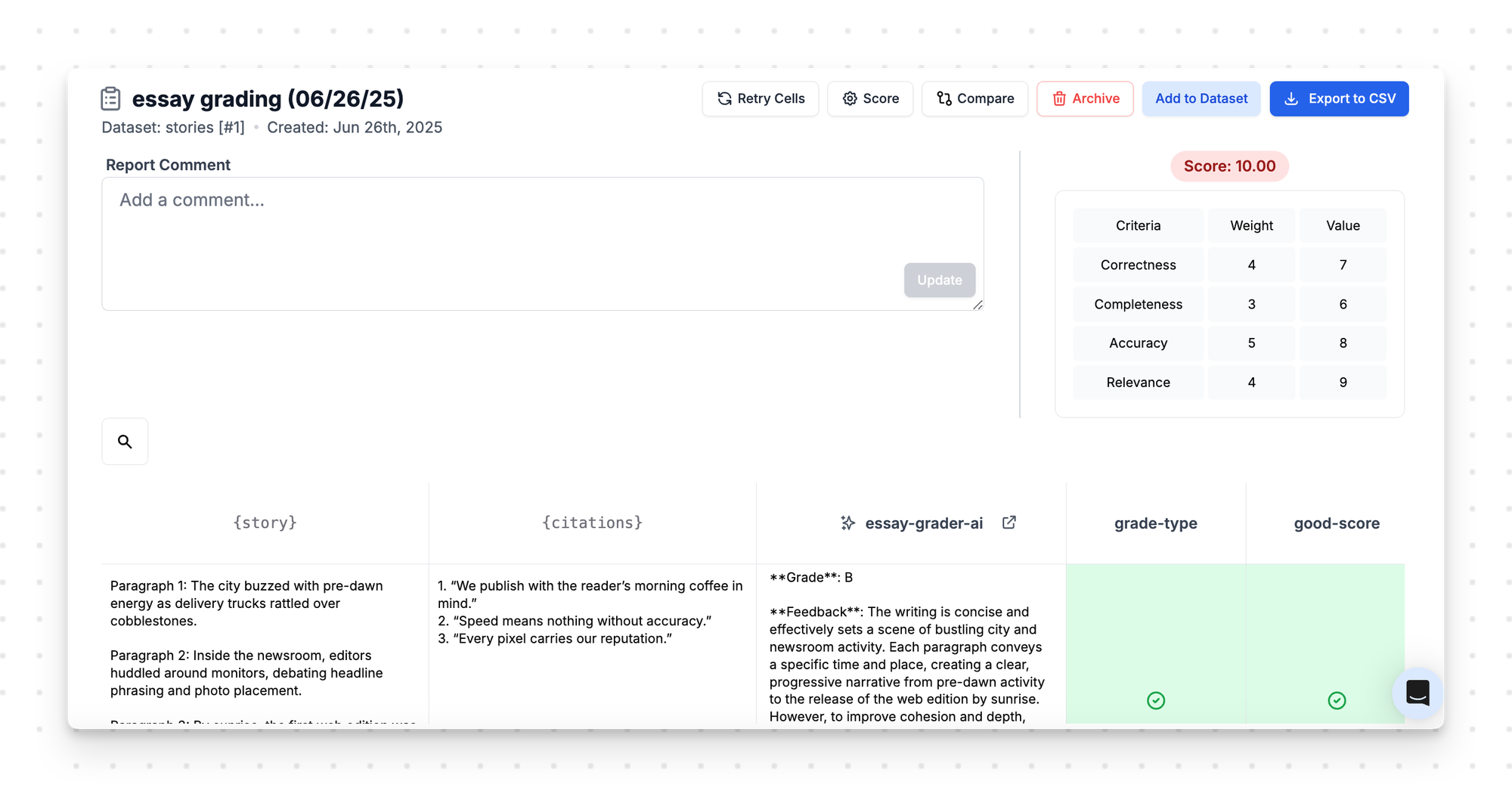

Advanced Scorecard Evals — Quantifying Pedagogy

NoRedInk’s scorecard system goes well beyond simple pass/fail checks. Confusion-matrix drill-downs surface exactly which error types spike after a prompt tweak—critical insight when you’re making nuanced pedagogical decisions.
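As a rough illustration of a confusion-matrix drill-down, the sketch below counts (expected, predicted) label pairs for two prompt versions and reports which error cells changed. The labels and the sample data are hypothetical, and the helper names `confusion_counts` and `error_deltas` are made up for this example.

```python
from collections import Counter


def confusion_counts(pairs):
    """Count (expected, predicted) label pairs."""
    return Counter(pairs)


def error_deltas(before, after):
    """Off-diagonal (error) cells whose count changed between two versions."""
    cells = set(before) | set(after)
    return {
        cell: after[cell] - before[cell]
        for cell in cells
        if cell[0] != cell[1] and after[cell] != before[cell]
    }


# Hypothetical results for the same four samples under two prompt versions.
v1 = confusion_counts([
    ("meets", "meets"), ("meets", "meets"),
    ("needs_work", "needs_work"), ("needs_work", "meets"),
])
v2 = confusion_counts([
    ("meets", "meets"), ("meets", "needs_work"),
    ("needs_work", "needs_work"), ("needs_work", "needs_work"),
])

deltas = error_deltas(v1, v2)
for (expected, predicted), change in sorted(deltas.items()):
    print(f"expected={expected} predicted={predicted}: {change:+d}")
```

Here the overall accuracy is identical across both versions (three of four correct), yet the drill-down shows the error moved from over-crediting weak work to under-crediting strong work, which is the kind of shift a single pass rate would hide.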

Model comparison is streamlined through head-to-head bake-offs where multiple providers can be tested side-by-side. High-level metrics paired with rubric-level deltas make any regression immediately obvious.
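One way to picture rubric-level deltas in a bake-off is the short sketch below. The pass rates, category names, and the 2-point tolerance are invented for illustration and are not NoRedInk's figures; the point is only that a per-category difference makes a regression visible even when other categories improve.

```python
def rubric_deltas(baseline: dict, candidate: dict) -> dict:
    """Per-category change in pass rate, candidate minus baseline."""
    return {cat: round(candidate[cat] - baseline[cat], 4) for cat in baseline}


def regressions(deltas: dict, tolerance: float = 0.02) -> list:
    """Categories that dropped by more than the tolerance."""
    return sorted(cat for cat, change in deltas.items() if change < -tolerance)


# Hypothetical scorecards for two providers run on the same sample set.
provider_a = {"thesis": 0.90, "evidence": 0.80, "organization": 0.85, "conventions": 0.95}
provider_b = {"thesis": 0.92, "evidence": 0.70, "organization": 0.85, "conventions": 0.94}

deltas = rubric_deltas(provider_a, provider_b)
flagged = regressions(deltas)

for category, change in deltas.items():
    marker = "  <-- regression" if category in flagged else ""
    print(f"{category}: {change:+.2f}{marker}")
```

The tolerance keeps small run-to-run noise from being reported as a regression; the right value depends on the size of the sample set.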

This hybrid of quantitative signals and expert review ensures the AI doesn’t just nail the grammar; it preserves the authentic “teacher voice” that makes feedback genuinely helpful to students.

The Game-Changer: Non-Technical Curriculum Designers in the Loop

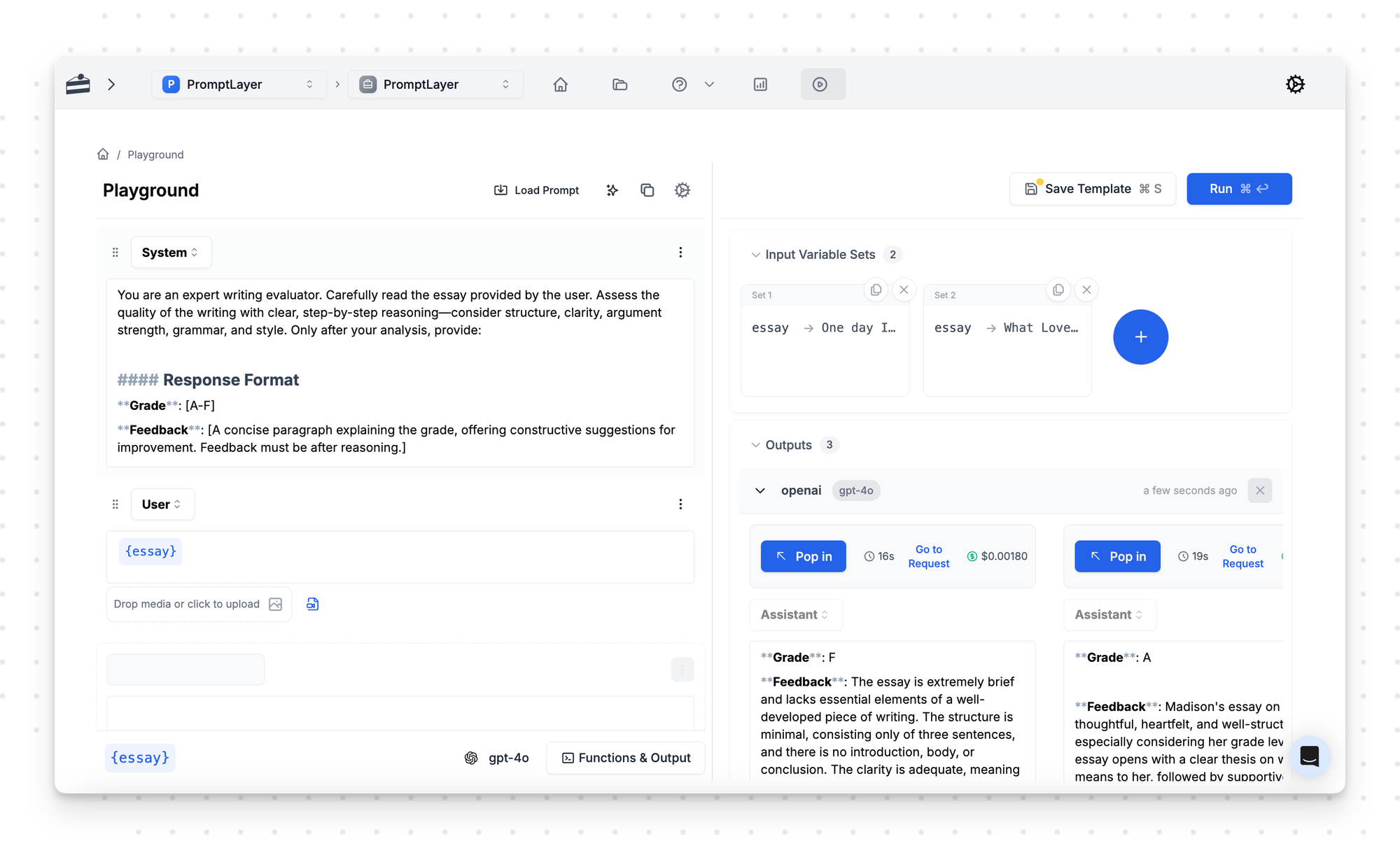

The transformation accelerated when six former educators, NoRedInk's "Curriculum Designers," gained direct access to prompt-level experimentation. These domain experts, deeply familiar with the product but without development or AI backgrounds, could now tweak prompts in the Playground without writing a single line of code.

Today, NoRedInk’s curriculum designers—who've been with the company long enough to deeply understand both the product and its pedagogical mission—have become prompt engineers in their own right. Using PromptLayer's Playground, they experiment with different approaches to "nudge the LLM to think like a teacher."

This shift collapsed feedback loops from days to hours. Curriculum Designers gained new capabilities that fundamentally changed the development process:

- Direct access to prompt versions without touching code

- Real-time experimentation through "Open in Playground" links

- Ability to leave pedagogical feedback on specific outputs

- Scorecard reviews with embedded human comments for deeper analysis

When a curriculum designer sees a student getting unhelpful feedback, they can immediately pull up the exact prompt version, test modifications, and see the results.

“Domain experts can try changes, run them, and see their impact without engineering’s involvement, which has been very helpful for brainstorming thorny problems.”

- Faraaz Ismail, Software Engineering Manager on the AI Team

This empowerment has unlocked innovation. Those "thorny problems" in grading—the edge cases that require deep pedagogical understanding—are now solvable by the people who understand them best.

Impact in Production

PromptLayer’s integration into NoRedInk’s workflow produced tangible results:

- Over 1 million entries of AI-generated feedback delivered since launch

- Teachers reporting "orders of magnitude" time savings and richer student feedback

- Significantly increased internal confidence, enabling rapid multi-model comparisons and faster deployments

- A 12-percentage-point increase in eval rubric pass rate following the first PromptLayer-driven iteration

Students who might have received generic or no feedback are getting detailed, pedagogically sound guidance. Teachers trust the system enough to use it with their students. And the curriculum team has discovered they can shape AI behavior to match their educational vision, no computer science degree required.

Conclusion: A Blueprint for Domain-Expert Driven AI

NoRedInk’s integration of PromptLayer demonstrates how combining robust technical infrastructure with deep domain expertise can produce trustworthy AI solutions. Domain-aligned evals and robust scorecards are critical for measurable pedagogical effectiveness.

For other EdTech companies considering this path, NoRedInk's experience offers clear guidance. Invest in tools that bridge the gap between technical capability and domain expertise. Create evaluation frameworks that measure what matters to your users. Most importantly, recognize that your domain experts—not your engineers—often hold the key to making AI truly useful.

PromptLayer is an end-to-end prompt engineering workbench for versioning, logging, and evals. Engineers and subject-matter experts team up on the platform to build and scale production-ready AI agents.
