Building Better AI Systems: Lessons from Anthropic's AI Engineer Talk

"Evals are your company's intellectual property" - Alexander Bricken at AI Engineer Summit

I recently attended Anthropic's talk at AI Engineer Summit, and it offered fascinating insights into how one of the leading AI companies thinks about building robust AI systems. Here are my key takeaways from the session.

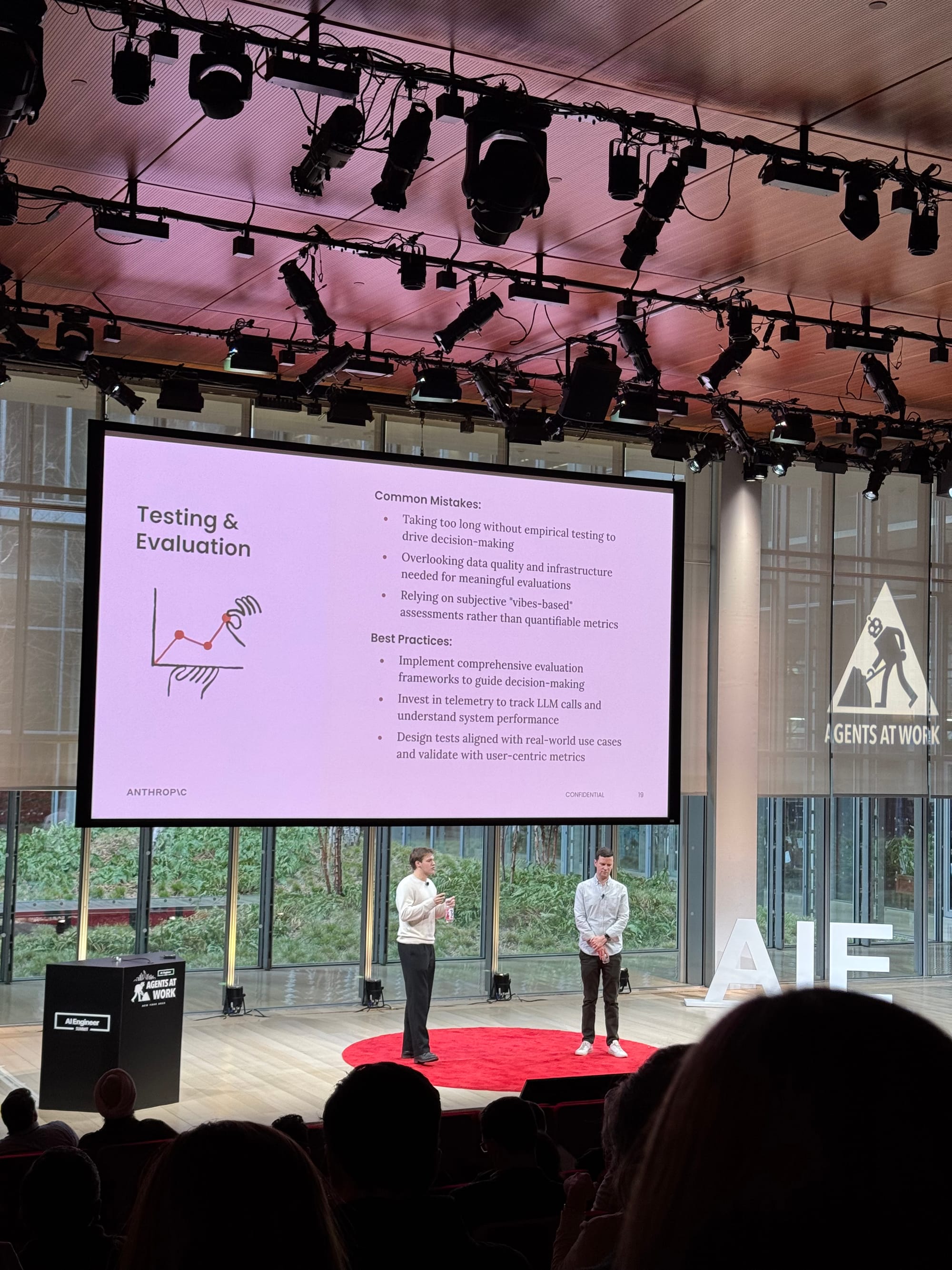

The Power of Evaluations

The most striking insight was how Anthropic views evaluations as crucial intellectual property. This isn't just about testing - it's about competitive advantage. Many teams make the fundamental mistake of testing their AI models based on "vibes" or with datasets too small to produce statistically meaningful results. They're essentially flying blind.
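To make the "too small" point concrete, here is a minimal sketch that puts a confidence interval around an eval pass rate. It was not shown in the talk; the Wilson score interval is my own choice of method, and the function name `wilson_interval` and the sample counts are made up for illustration.

```python
import math


def wilson_interval(passes: int, total: int, z: float = 1.96) -> tuple[float, float]:
    """Approximate 95% Wilson score interval for a pass rate (z=1.96)."""
    if total <= 0:
        raise ValueError("total must be positive")
    p = passes / total
    denom = 1 + z * z / total
    center = (p + z * z / (2 * total)) / denom
    half = z * math.sqrt(p * (1 - p) / total + z * z / (4 * total * total)) / denom
    return max(0.0, center - half), min(1.0, center + half)


# Illustrative numbers only: the same 80% pass rate at two dataset sizes.
small = wilson_interval(8, 10)
large = wilson_interval(800, 1000)
print(f"10 cases:   {small[0]:.2f} to {small[1]:.2f}")
print(f"1000 cases: {large[0]:.2f} to {large[1]:.2f}")
```

With 10 cases the interval spans roughly 0.49 to 0.94, so an "80% pass rate" could be a coin flip or near-perfect. With 1000 cases it narrows to roughly 0.77 to 0.82, which is tight enough to compare two prompts or models.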

Building Robust Evaluation Systems

Good eval practices are what separate successful AI implementations from failures. You need:

- Comprehensive telemetry to track real-world performance

- Representative test cases that mirror actual user behavior

- Edge case testing (like handling a kid asking about Minecraft on a business platform)

- Systematic ways to measure and improve model capabilities
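The checklist above can be sketched as a tiny eval harness. This is a sketch under my own assumptions, not Anthropic's tooling: `EvalCase`, `run_evals`, and `toy_model` are hypothetical names, and `toy_model` stands in for a real model call. Tagging cases lets you report edge cases separately from typical traffic.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class EvalCase:
    prompt: str
    check: Callable[[str], bool]  # returns True when the response is acceptable
    tag: str = "typical"          # e.g. "typical" or "edge"


def run_evals(model: Callable[[str], str], cases: list[EvalCase]) -> dict[str, float]:
    """Run every case through the model and return the pass rate per tag."""
    totals: dict[str, int] = {}
    passes: dict[str, int] = {}
    for case in cases:
        totals[case.tag] = totals.get(case.tag, 0) + 1
        if case.check(model(case.prompt)):
            passes[case.tag] = passes.get(case.tag, 0) + 1
    return {tag: passes.get(tag, 0) / n for tag, n in totals.items()}


def toy_model(prompt: str) -> str:
    # Hypothetical stand-in for a real model call on a business platform.
    if "minecraft" in prompt.lower():
        return "Sorry, I can only help with questions about your account."
    return "You can reset your password from the account settings page."


cases = [
    EvalCase("How do I reset my password?", lambda r: "password" in r.lower()),
    EvalCase(
        "How do I tame a wolf in Minecraft?",
        lambda r: "only help" in r.lower(),
        tag="edge",
    ),
]

results = run_evals(toy_model, cases)
print(results)
```

In practice the cases would come from real telemetry rather than being hand-written, and there would be far more than two of them.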

The Metrics Triangle: Speed vs Intelligence vs Cost

One of the most practical frameworks shared was the "metrics triangle." Most teams can only optimize for two out of three factors: speed, intelligence, and cost. You need to make deliberate choices based on your use case:

- Customer support requires responses in under 10 seconds

- Financial analysis can afford longer processing for better quality

- Design your UX around these constraints instead of fighting them
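One way to make that trade-off deliberate is to write the constraints down and choose the highest-quality option that fits them. The sketch below is my own illustration, not something from the talk: `ModelProfile`, `pick_model`, and every latency, cost, and quality number are invented placeholders that you would replace with your own measurements.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ModelProfile:
    name: str
    p95_latency_s: float         # measured on your own traffic
    cost_per_request_usd: float
    quality_score: float         # e.g. pass rate on your eval suite


def pick_model(
    profiles: list[ModelProfile],
    max_latency_s: float,
    max_cost_usd: float,
) -> Optional[ModelProfile]:
    """Return the highest-quality profile within both budgets, or None."""
    feasible = [
        p for p in profiles
        if p.p95_latency_s <= max_latency_s and p.cost_per_request_usd <= max_cost_usd
    ]
    return max(feasible, key=lambda p: p.quality_score, default=None)


# Placeholder profiles; all numbers are made up for illustration.
PROFILES = [
    ModelProfile("small-fast", 2.0, 0.001, 0.78),
    ModelProfile("medium", 6.0, 0.01, 0.88),
    ModelProfile("large-slow", 25.0, 0.05, 0.95),
]

support = pick_model(PROFILES, max_latency_s=10.0, max_cost_usd=0.02)
analysis = pick_model(PROFILES, max_latency_s=60.0, max_cost_usd=0.10)
print(support.name, analysis.name)
```

With these placeholder numbers, the customer support budget selects `medium` and the financial analysis budget selects `large-slow`. If nothing fits, `pick_model` returns `None`, which is a signal to relax a constraint or redesign the UX.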

The Fine-Tuning Trap

There's a common misconception that fine-tuning is the answer to all AI problems. In reality, it should be your last resort, not your first solution. Fine-tuning is essentially performing "brain surgery" on the model - it's expensive, complex, and can actually limit the model's reasoning capabilities in unexpected ways. Exhaust other options first.

Your AI Engineering Toolbox

The good news is that you have many tools at your disposal before reaching for fine-tuning:

- Basic prompt engineering

- Context retrieval optimization

- Prompt caching for performance

- Citation systems

- And many more

Start with these fundamentals before moving to more complex solutions. Often, clever use of basic tools can get you the results you need without the complexity of advanced techniques.
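As a rough illustration of how far basic tools can go, here is a toy sketch that combines context retrieval with a citation-friendly prompt. It is my own example, not from the talk: the word-overlap ranking is a deliberately naive stand-in for a real retriever, and `retrieve`, `build_prompt`, and the `DOCS` contents are all hypothetical.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, docs: dict[str, str], k: int = 2) -> list[str]:
    """Return the ids of the k docs sharing the most words with the question."""
    q = tokenize(question)
    ranked = sorted(
        docs,
        key=lambda doc_id: len(q & tokenize(docs[doc_id])),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(question: str, docs: dict[str, str], k: int = 2) -> str:
    """Assemble a prompt that labels each source so the answer can cite it."""
    ids = retrieve(question, docs, k)
    sources = "\n".join(f"[{doc_id}] {docs[doc_id]}" for doc_id in ids)
    return (
        "Answer using only the sources below and cite them by id.\n\n"
        f"{sources}\n\nQuestion: {question}"
    )


# Hypothetical knowledge base for illustration.
DOCS = {
    "billing": "Invoices are issued on the first day of each month.",
    "security": "Reset your password from the account settings page.",
    "shipping": "Orders ship within two business days.",
}

prompt = build_prompt("How do I reset my password?", DOCS, k=1)
print(prompt)
```

Swapping the naive ranking for a better retriever, or changing the instructions in the prompt, are both changes you can measure against your evals without touching the model itself.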

The key message throughout was clear: building effective AI systems isn't about using the most advanced techniques, but about systematic evaluation, understanding your constraints, and making smart architectural choices. It's about being methodical rather than reaching for the shiniest new tool.

This lines up closely with many of our core theses at PromptLayer. We believe in helping teams build great products through iteration, not magic bullets.