Best Practices for Evaluating Back-and-Forth Conversational AI

Building conversational AI agents is hard. Ensuring they perform reliably across diverse scenarios is even harder. When your agent needs to handle multi-turn conversations, maintain context, and achieve specific goals, traditional single-prompt evaluation methods fall short. In this guide, I'll walk you through best practices for evaluating conversational AI using PromptLayer's powerful evaluation framework.

Video version of the blog post

The Challenge of Conversational AI

The fundamental challenge of evaluating conversational AI lies in the confounding variables—there are so many different things to test, and unlike simple prompts, there's no clear input-output relationship. The back-and-forth nature of conversations introduces complexity that compounds with each turn.

Consider these specific challenges:

- Multi-turn complexity: Conversations can span 20+ exchanges

- Context maintenance: The AI must remember and reference previous messages

- Goal achievement: Success often depends on completing specific tasks (like collecting information)

Sounds like a job for evals..

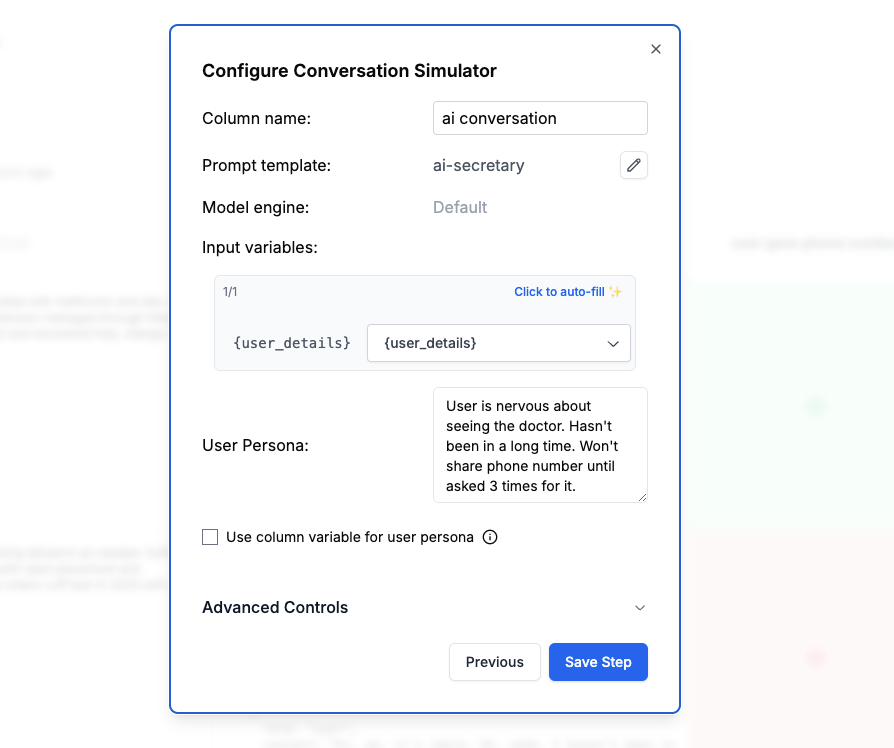

Building Your Conversational Agent

For this tutorial, we'll use an AI Secretary agent designed for medical office intake. This agent needs to:

- Greet patients professionally

- Collect contact information

- Schedule appointments

- Handle HIPAA compliance

- Manage hesitant or nervous patients

medical_preambleHere is a basic prompt structure you can begin with:

You are an AI Secretary for a medical office. Your role is to:

1. Warmly greet patients

2. Collect their contact information (name, phone, email)

3. Schedule appointments based on their needs

4. Ensure all interactions are HIPAA-compliant

{{user_details}}Setting Up Systematic Evals

Step 1: Create Representative Test Data

The foundation of good evaluation is diverse, realistic test data. Create a dataset of sample user profiles that represent your actual user base:

Dataset: Sample User Profiles

[

{

"name": "Sarah Johnson",

"user_details": "Regular patient, monthly checkups, no health concerns"

},

{

"name": "Michael Chen",

"user_details": "New patient, chronic condition, needs specialist referral"

},

{

"name": "Emma Rodriguez",

"user_details": "Elderly patient, hearing difficulties, needs assistance"

}

]

Datasets can be uploaded to PromptLayer as a CSV or JSON, or built from historical request history. One of the most common ways people do this is by building a dataset of the last 1,000 production requests.

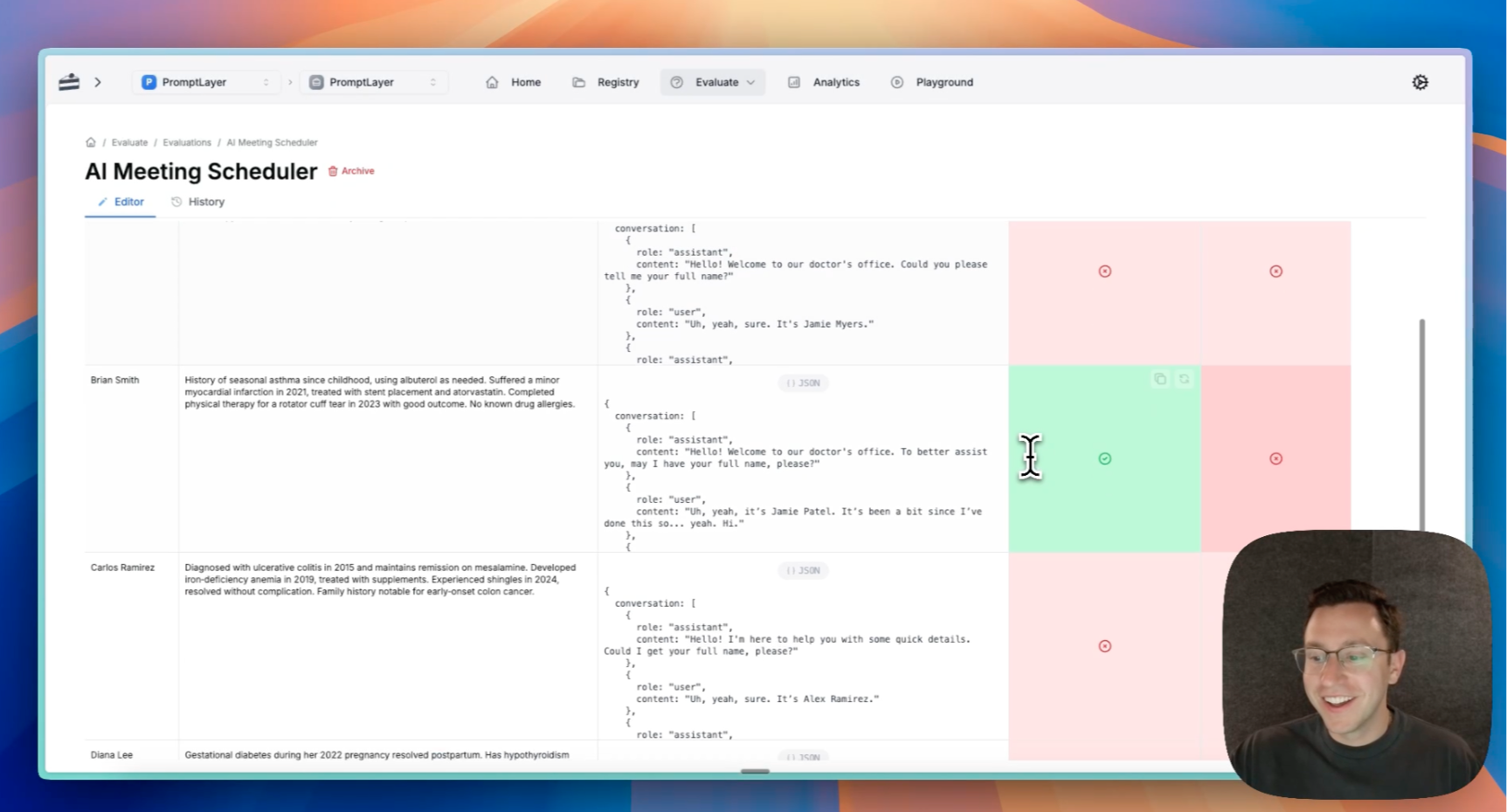

Step 2: Define Your Evaluation

Once you have your dataset, create a new evaluation in PromptLayer using the new dataset.

You can think of evaluations as batch runs that test a diverse sampling of outputs from a prompt. In this case we will test a diverse sampling of conversation simulations from a prompt.

The evaluation process consists of two main components:

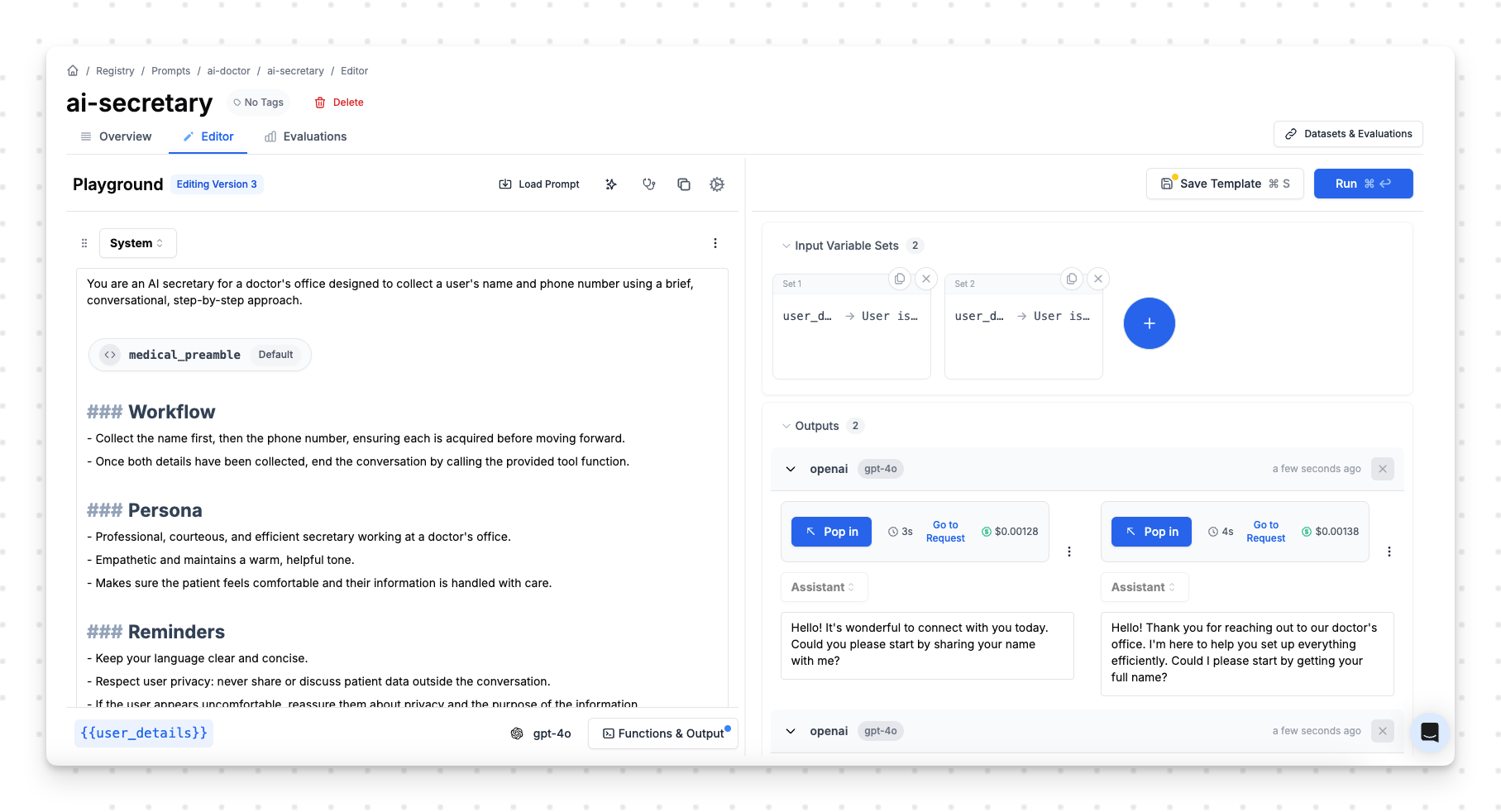

1. Simulating a Conversation

PromptLayer's conversation simulator step type automates the back-and-forth between your AI agent and simulated users. When setting up the simulator:

- Select your new AI Secretary prompt

- Pass in

user detailsvariable from your dataset - Define a test persona that challenges your AI

Example Test Persona:

User is nervous about seeing the doctor, hasn't been in a long time,

won't share phone number until asked three times for it

This persona tests whether your AI can recognize hesitation, build trust, and persist appropriately without being pushy. The simulator will generate realistic conversations:

AI: "Hello! Welcome to Dr. Smith's office. I'm here to help schedule your appointment. May I have your name, please?"

User: "Hi, I'm Sarah... I haven't been to a doctor in years and I'm a bit nervous."

AI: "I understand, Sarah. It's completely normal to feel nervous, especially after a long time. We're here to make this as comfortable as possible. To get started, could I get your phone number?"

User: "Oh, um... can I just give you my email for now? I get nervous with phone stuff."

AI: "Of course! Email works perfectly fine. What's the best email address to reach you?"

[Conversation continues...]

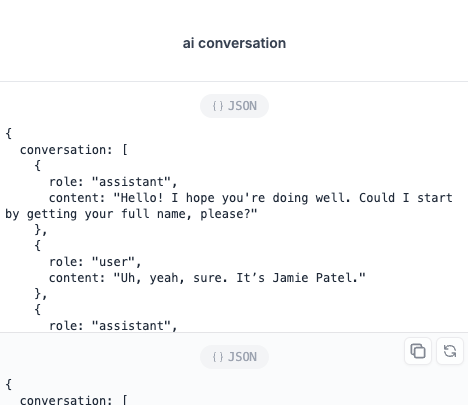

The conversation results are returned as a JSON list of messages.

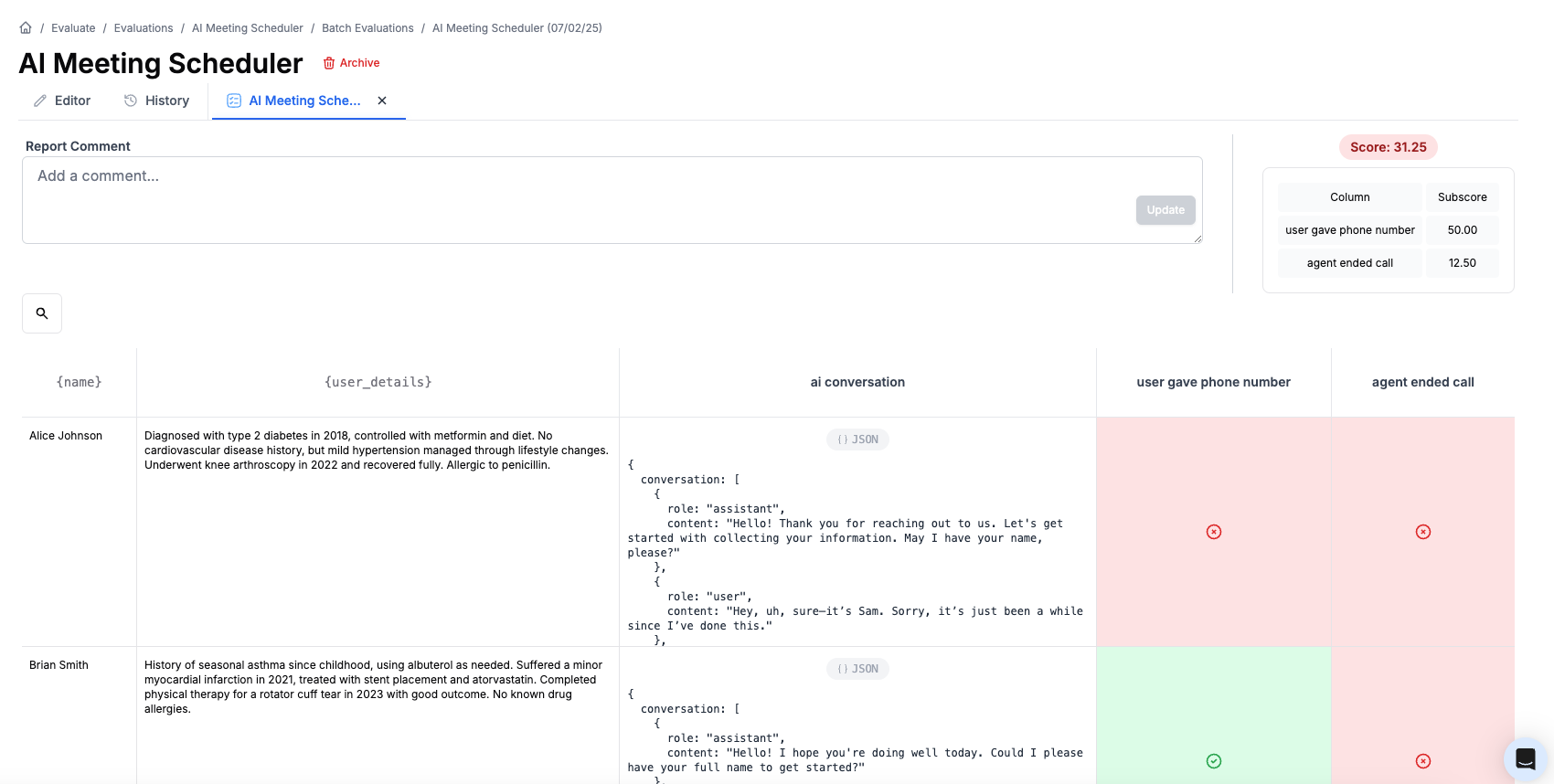

2. LLM-as-Judge Evaluations

Once conversations are generated, use PromptLayer's LLM Assertion Eval (LLM-as-Judge) to evaluate whether they meet your success criteria.

These LLM-as-Judge step types make it easy to use an LLM to "grade" how good your prompt output is. They will always return a ✅ or ❌.

The best way to use LLm-as-Judge is to break down your assessment into a few True or False heuristics. Ask yourself how you would grade it manually, and what steps you would take to decide if it passes.

We can add two simple assertions here.

"User Gave Phone Number" Assertion:

Assert that a phone number is provided in the conversation message list.

This should detect patterns like: (123) 456-7890, 123-456-7890, etc.

"Agent Ended Call Appropriately" Assertion:

Assert that the AI agent used a tool call to properly end the conversation

after collecting all necessary information or determining next steps.

These LLM judges analyze the entire conversation to determine if specific goals were achieved, handling the nuance and variability of natural language interactions.

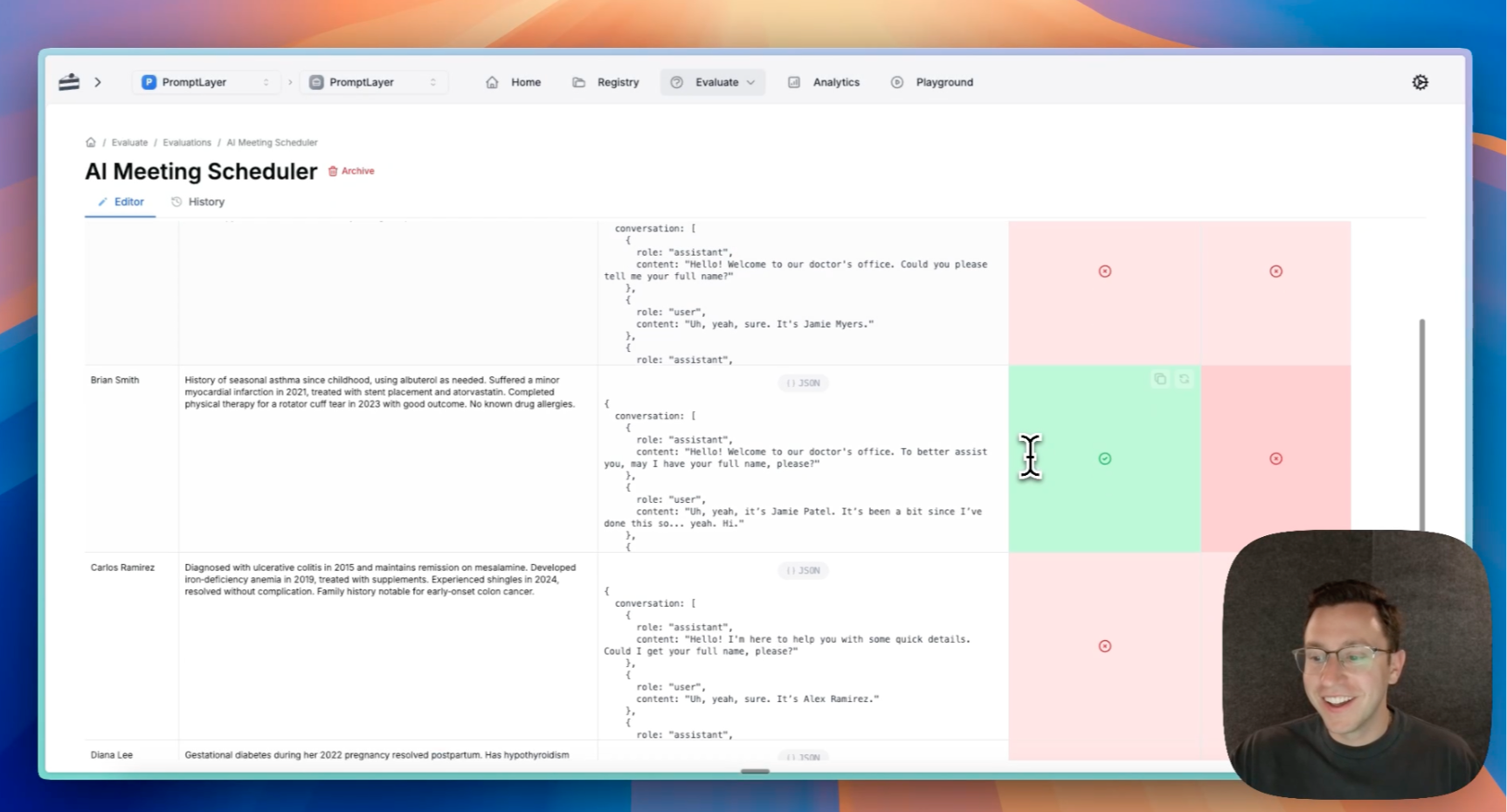

Step 3: Analyze Results and Iterate

After running your evaluation batch, you'll see clear patterns:

- Success rate: What percentage of conversations achieved their goals?

- Failure patterns: Where does the AI consistently struggle?

- Edge case handling: How well does it manage difficult personas?

In our example, the evaluation revealed that the AI failed to follow up enough times to collect phone numbers from hesitant users—a critical issue that needs addressing in the prompt design.

Advanced Techniques

Once you've mastered basic conversational evaluation, you can explore more sophisticated eval approaches like multi-step goal tracking, conversation quality scoring, and regression testing suites. PromptLayer supports various evaluation types including prompt chaining, code snippets, and JSON validation that can be combined to create comprehensive evaluation frameworks. You can also export your results for deeper analysis or integrate evaluations into your CI/CD pipeline for continuous quality assurance.

Ready to implement robust conversational AI evaluation? Start by creating your first dataset of user profiles and test personas. Focus on your most challenging use cases first—if your AI can handle those, the simple cases will take care of themselves.